Guojia Hou

Dual High-Order Total Variation Model for Underwater Image Restoration

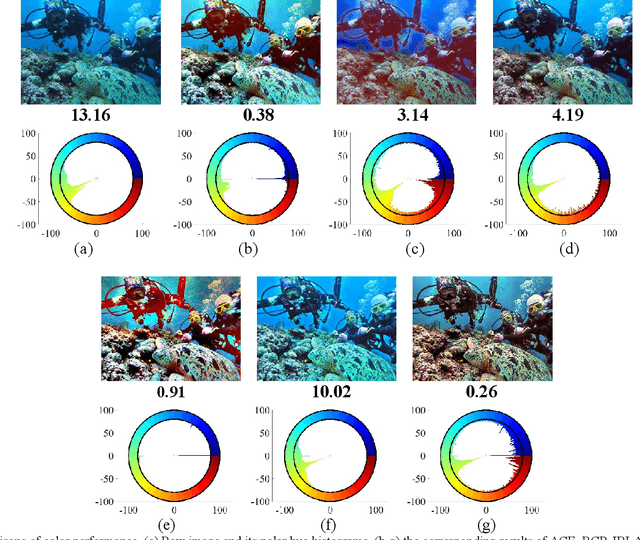

Jul 20, 2024

Abstract:Underwater images are typically characterized by color cast, haze, blurring, and uneven illumination due to the selective absorption and scattering that occur when light propagates through water, which limits their practical applications. Underwater image enhancement and restoration (UIER) is a crucial way to improve the visual quality of underwater images. However, most existing UIER methods concentrate on enhancing contrast and dehazing and rarely pay attention to the local illumination differences within the image caused by illumination variations, thus introducing undesirable artifacts and unnatural color. To address this issue, an effective variational framework is proposed based on an extended underwater image formation model (UIFM). Technically, dual high-order regularizations are integrated into the variational model to acquire smoothed local ambient illuminance and structure-revealed reflectance in a unified manner. In our proposed framework, weight-factor-based color compensation is combined with color balance to compensate for the attenuated color channels and remove the color cast. In particular, a robust estimate of the local ambient illuminance is acquired by performing local patch brightest pixel estimation followed by an improved gamma correction. Additionally, we design an iterative optimization algorithm relying on the alternating direction method of multipliers (ADMM) to accelerate the solution of the proposed variational model. Extensive experiments on three real-world underwater image datasets demonstrate that the proposed method outperforms several state-of-the-art methods with regard to visual quality and quantitative assessments. Moreover, the proposed method can also be extended to outdoor image dehazing, low-light image enhancement, and some high-level vision tasks. The code is available at https://github.com/Hou-Guojia/UDHTV.
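The illuminance step mentioned in the abstract (brightest pixel in a local patch, then gamma correction) can be illustrated with a small sketch. This is not the authors' implementation: the function names, the patch radius, and the use of plain gamma correction (rather than the paper's "improved" variant, which the abstract does not specify) are all assumptions made for illustration.

```python
def local_max_illuminance(gray, radius=1):
    """Brightest pixel in each (2*radius+1)^2 patch, clipped at the borders.

    `gray` is a 2D list of intensities in [0, 1]. Hypothetical helper.
    """
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            rows = range(max(0, y - radius), min(h, y + radius + 1))
            cols = range(max(0, x - radius), min(w, x + radius + 1))
            out[y][x] = max(gray[r][c] for r in rows for c in cols)
    return out


def gamma_correct(img, gamma=0.5):
    """Plain gamma correction v -> v**gamma (assumed stand-in, not the paper's variant)."""
    return [[v ** gamma for v in row] for row in img]


gray = [
    [0.10, 0.20, 0.10, 0.30],
    [0.20, 0.90, 0.20, 0.10],
    [0.10, 0.20, 0.04, 0.25],
]
illum = local_max_illuminance(gray, radius=1)
corrected = gamma_correct(illum, gamma=0.5)
```

With `gamma < 1`, darker illuminance values are lifted more than bright ones, which is the usual motivation for applying gamma correction to an illumination map.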

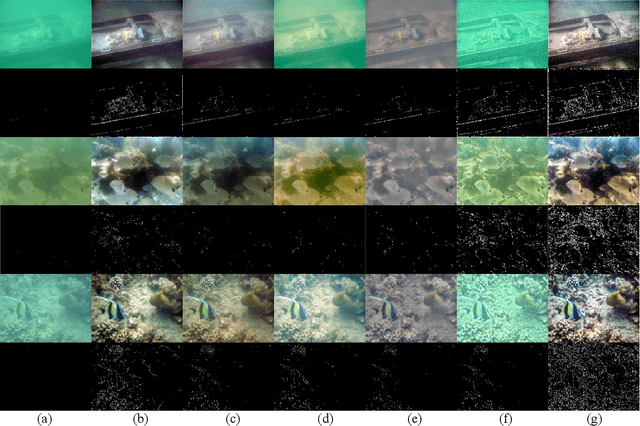

WaterMono: Teacher-Guided Anomaly Masking and Enhancement Boosting for Robust Underwater Self-Supervised Monocular Depth Estimation

Jun 19, 2024

Abstract:Depth information serves as a crucial prerequisite for various visual tasks, whether on land or underwater. Recently, self-supervised methods have achieved remarkable performance on several terrestrial benchmarks despite the absence of depth annotations. However, in more challenging underwater scenarios, they encounter new obstacles such as the presence of marine life, which breaks the static-scene assumption, and the degradation of underwater images, which lowers image quality. Besides, the camera angles of underwater images are more diverse. Fortunately, we have discovered that knowledge distillation presents a promising approach for tackling these challenges. In this paper, we propose WaterMono, a novel framework for depth estimation coupled with image enhancement. It incorporates the following key measures: (1) We present a Teacher-Guided Anomaly Mask to identify dynamic regions within the images; (2) We employ depth information combined with the Underwater Image Formation Model to generate enhanced images, which in turn contribute to the depth estimation task; and (3) We utilize a rotated distillation strategy to enhance the model's rotational robustness. Comprehensive experiments demonstrate the effectiveness of our proposed method for both depth estimation and image enhancement. The source code and pre-trained models are available on the project home page: https://github.com/OUCVisionGroup/WaterMono.
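Measure (2) relies on an underwater image formation model. The abstract does not state which variant WaterMono uses, so the sketch below assumes the widely used simplified form I = J·t + B·(1 − t) with transmission t = exp(−β·d), applied to a single pixel and channel. The names `beta`, `background`, and `t_min` are illustrative assumptions.

```python
import math


def transmission(depth, beta):
    """Transmission t = exp(-beta * depth) for one color channel."""
    return math.exp(-beta * depth)


def degrade(clear, depth, beta, background):
    """Forward model: observed = clear * t + background * (1 - t)."""
    t = transmission(depth, beta)
    return clear * t + background * (1.0 - t)


def restore(observed, depth, beta, background, t_min=0.1):
    """Invert the forward model; t is clamped to avoid dividing by tiny values."""
    t = max(transmission(depth, beta), t_min)
    return (observed - background * (1.0 - t)) / t


# Round trip for one pixel: degrade a known value, then restore it from depth.
observed = degrade(0.8, depth=2.0, beta=0.3, background=0.5)
recovered = restore(observed, depth=2.0, beta=0.3, background=0.5)
```

Under this assumed model, an accurate depth estimate directly determines how much backscatter to remove, which is one way to read the abstract's claim that depth and enhancement support each other.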

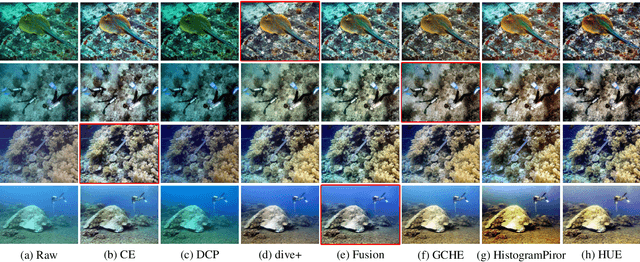

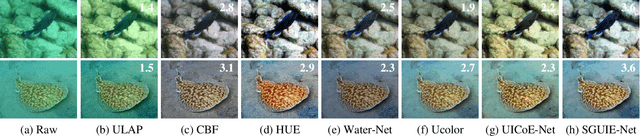

UID2021: An Underwater Image Dataset for Evaluation of No-reference Quality Assessment Metrics

Apr 19, 2022

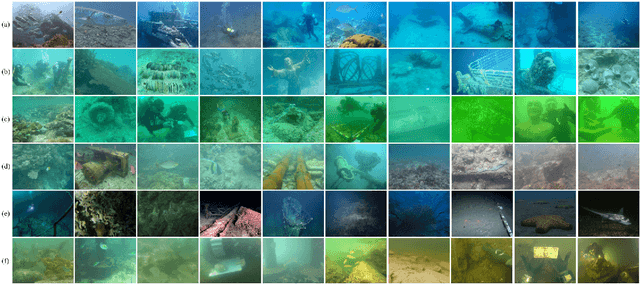

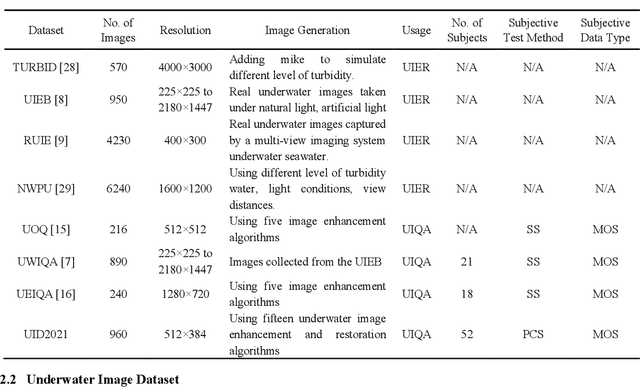

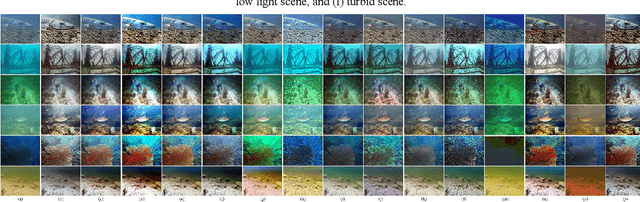

Abstract:Achieving subjective and objective quality assessment of underwater images is of high significance in underwater visual perception and image/video processing. However, the development of underwater image quality assessment (UIQA) is limited by the lack of a comprehensive subjective user study with a publicly available dataset and of reliable objective UIQA metrics. To address this issue, we establish a large-scale underwater image dataset, dubbed UID2021, for evaluating no-reference UIQA metrics. The constructed dataset contains 60 multiply degraded underwater images collected from various sources, covering six common underwater scenes (i.e., bluish scene, bluish-green scene, greenish scene, hazy scene, low-light scene, and turbid scene), and their corresponding 900 quality-improved versions generated by employing fifteen state-of-the-art underwater image enhancement and restoration algorithms. Mean opinion scores (MOS) for UID2021 are also obtained by using the pair comparison sorting method with 52 observers. Both in-air NR-IQA and underwater-specific algorithms are tested on our constructed dataset to fairly compare their performance and analyze their strengths and weaknesses. Our proposed UID2021 dataset enables researchers to evaluate NR UIQA algorithms comprehensively and paves the way for further research on UIQA. UID2021 will be freely downloadable for research purposes at: https://github.com/Hou-Guojia/UID2021.
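The abstract does not say how metric scores are compared against MOS. A common choice in IQA work is a rank correlation such as Spearman's coefficient, so the sketch below assumes that. The scores are made-up toy values, not data from UID2021, and the simple formula used here is only valid when there are no tied values.

```python
def ranks(values):
    """1-based ranks of `values`, smallest first (assumes no ties)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        result[idx] = rank
    return result


def spearman(a, b):
    """Spearman rank correlation via 1 - 6*sum(d^2) / (n*(n^2 - 1)), no ties."""
    n = len(a)
    ra, rb = ranks(a), ranks(b)
    d2 = sum((x - y) ** 2 for x, y in zip(ra, rb))
    return 1.0 - 6.0 * d2 / (n * (n * n - 1))


# Toy values only: subjective MOS vs. a hypothetical no-reference metric.
mos = [2.1, 3.5, 4.0, 1.2, 4.8]
metric = [0.30, 0.55, 0.50, 0.10, 0.90]
rho = spearman(mos, metric)

# Dataset size check from the abstract: 60 raw images x 15 algorithms.
enhanced_count = 60 * 15
```

A coefficient near 1 would mean the metric orders images much as human observers do; a value near 0 would mean it carries little ranking information.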

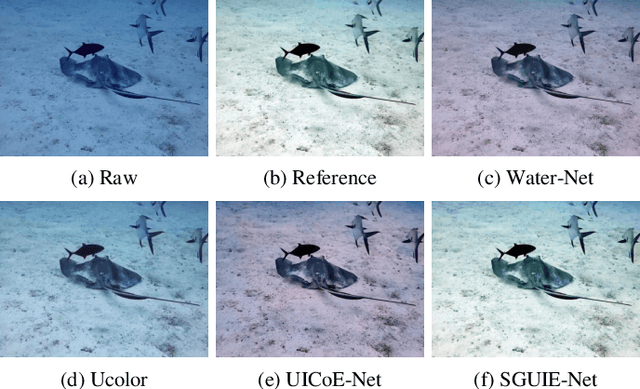

SGUIE-Net: Semantic Attention Guided Underwater Image Enhancement with Multi-Scale Perception

Jan 08, 2022

Abstract:Due to wavelength-dependent light attenuation, refraction, and scattering, underwater images usually suffer from color distortion and blurred details. However, due to the limited number of paired underwater images with undistorted images as reference, training deep enhancement models for diverse degradation types is quite difficult. To boost the performance of data-driven approaches, it is essential to establish more effective learning mechanisms that mine richer supervised information from limited training sample resources. In this paper, we propose a novel underwater image enhancement network, called SGUIE-Net, in which we introduce semantic information as high-level guidance across different images that share common semantic regions. Accordingly, we propose a semantic region-wise enhancement module to perceive the degradation of different semantic regions from multiple scales and feed it back to the global attention features extracted from its original scale. This strategy helps to achieve robust and visually pleasant enhancements to different semantic objects, thanks to the guidance of semantic information for differentiated enhancement. More importantly, for those degradation types that are not common in the training sample distribution, the guidance connects them with the already well-learned types according to their semantic relevance. Extensive experiments on the publicly available datasets and our proposed dataset demonstrate the impressive performance of SGUIE-Net. The code and proposed dataset are available at: https://trentqq.github.io/SGUIE-Net.html

Enhancing Underwater Image via Adaptive Color and Contrast Enhancement, and Denoising

Apr 02, 2021

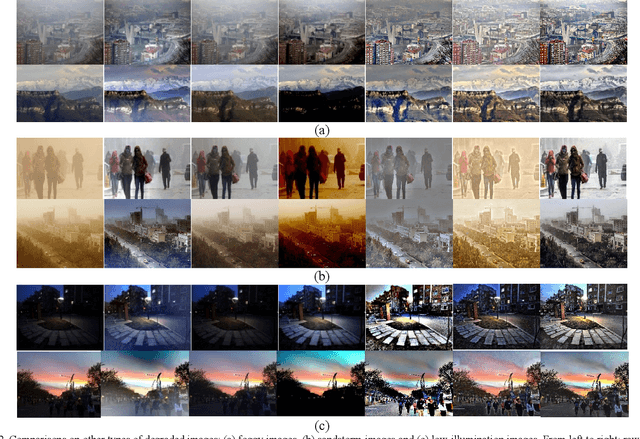

Abstract:Images captured underwater are often characterized by low contrast, color distortion, and noise. To address these visual degradations, we propose a novel scheme by constructing an adaptive color and contrast enhancement, and denoising (ACCE-D) framework for underwater image enhancement. In the proposed framework, a Gaussian filter and a bilateral filter are respectively employed to decompose the high-frequency and low-frequency components. Benefiting from this separation, we utilize a soft-thresholding operation to suppress the noise in the high-frequency component. Accordingly, the low-frequency component is enhanced by using an adaptive color and contrast enhancement (ACCE) strategy. The proposed ACCE is a new adaptive variational framework implemented in the HSI color space, in which we design a Gaussian weight function and a Heaviside function to adaptively adjust the roles of the data term and the regularization term. Moreover, we derive a numerical solution for ACCE, and adopt a pyramid-based strategy to accelerate the solving procedure. Experimental results demonstrate that our strategy is effective in color correction, visibility improvement, and detail revealing. Comparison with state-of-the-art techniques also validates the superiority of the proposed method. Furthermore, we have verified the utility of our proposed ACCE-D for enhancing other types of degraded scenes, including foggy, sandstorm, and low-light scenes.
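The decomposition and soft-thresholding idea can be sketched on a 1D signal. This is a simplified illustration, not the ACCE-D pipeline: a 3-tap binomial kernel stands in for the Gaussian filter, the bilateral filter is omitted, and the threshold `t` is an arbitrary assumed parameter.

```python
def smooth(signal):
    """3-tap binomial low-pass [0.25, 0.5, 0.25] with edge replication.

    Assumed stand-in for the Gaussian filter named in the abstract.
    """
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[max(i - 1, 0)]
        right = signal[min(i + 1, n - 1)]
        out.append(0.25 * left + 0.5 * signal[i] + 0.25 * right)
    return out


def soft_threshold(x, t):
    """Shrink x toward zero by t; values with |x| <= t become 0."""
    if x > t:
        return x - t
    if x < -t:
        return x + t
    return 0.0


def denoise(signal, t):
    """Split into low/high frequency, shrink the high part, recombine."""
    low = smooth(signal)
    high = [s - l for s, l in zip(signal, low)]
    high_shrunk = [soft_threshold(h, t) for h in high]
    return [l + h for l, h in zip(low, high_shrunk)]


noisy = [1.0, 1.1, 0.9, 1.0, 3.0, 3.1, 2.9, 3.0]
cleaned = denoise(noisy, t=0.05)
```

Small high-frequency fluctuations fall below the threshold and are removed, while the large step between the two plateaus survives with only a slight reduction, which is why soft-thresholding is applied to the high-frequency component alone.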
