Peijun Zhu

Harnessing Multimodal Large Language Models for Multimodal Sequential Recommendation

Aug 20, 2024

Abstract: Recent advances in Large Language Models (LLMs) have demonstrated significant potential in the field of Recommendation Systems (RSs). Most existing studies have focused on converting user behavior logs into textual prompts and leveraging techniques such as prompt tuning to enable LLMs for recommendation tasks. Meanwhile, research interest has recently grown in multimodal recommendation systems that integrate data from images, text, and other sources using modality fusion techniques. This introduces new challenges to the existing LLM-based recommendation paradigm, which relies solely on text-modality information. Moreover, although Multimodal Large Language Models (MLLMs) capable of processing multimodal inputs have emerged, how to equip MLLMs with multimodal recommendation capabilities remains largely unexplored. To this end, we propose the Multimodal Large Language Model-enhanced Multimodal Sequential Recommendation (MLLM-MSR) model. To capture dynamic user preferences, we design a two-stage user preference summarization method. Specifically, we first use an MLLM-based item-summarizer to extract image features for a given item and convert the image into text. Then, we employ a recurrent user preference summarization paradigm, built on an LLM-based user-summarizer, to capture the dynamic changes in user preferences. Finally, to enable the MLLM for the multimodal recommendation task, we propose to fine-tune an MLLM-based recommender using Supervised Fine-Tuning (SFT) techniques. Extensive evaluations across various datasets validate the effectiveness of MLLM-MSR, showcasing its superior ability to capture and adapt to the evolving dynamics of user preferences.
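The recurrent summarization step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the prompt wording, and the `block_size` parameter are all hypothetical, and the LLM call is replaced by a deterministic stand-in so the control flow can be run as-is. The sketch assumes the first stage (the item-summarizer) has already turned each item image into a short text description.

```python
from typing import Callable, Sequence


def summarize_user_preference(
    item_summaries: Sequence[str],
    summarize: Callable[[str], str],
    block_size: int = 2,
) -> str:
    """Fold chronologically ordered item summaries into one running preference summary.

    `summarize` stands in for an LLM-based user-summarizer (hypothetical interface:
    prompt text in, summary text out). `block_size` is an assumed knob for how many
    items are shown to the summarizer per step.
    """
    if block_size < 1:
        raise ValueError("block_size must be at least 1")

    preference = ""  # no prior summary exists before the first block
    for start in range(0, len(item_summaries), block_size):
        block = item_summaries[start:start + block_size]
        # Each step sees the previous summary plus the next block of items,
        # so the prompt length stays bounded as the history grows.
        prompt = (
            f"Previous preference summary: {preference or 'none'}\n"
            "Newly interacted items:\n"
            + "\n".join(f"- {s}" for s in block)
            + "\nUpdate the preference summary."
        )
        preference = summarize(prompt)
    return preference


def toy_summarizer(prompt: str) -> str:
    """Deterministic stand-in for an LLM: appends the new items to the old summary."""
    lines = prompt.splitlines()
    previous = lines[0].split(": ", 1)[1]
    items = [line[2:] for line in lines if line.startswith("- ")]
    parts = ([] if previous == "none" else [previous]) + items
    return "; ".join(parts)


if __name__ == "__main__":
    history = ["red sneakers", "trail shoes", "rain jacket"]
    print(summarize_user_preference(history, toy_summarizer, block_size=2))
```

In practice `toy_summarizer` would be replaced by a call to whichever LLM serves as the user-summarizer; the loop itself only shows how each step conditions on the previous summary rather than on the full interaction history.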

On unbiased performance evaluation for protein inference

Nov 29, 2012

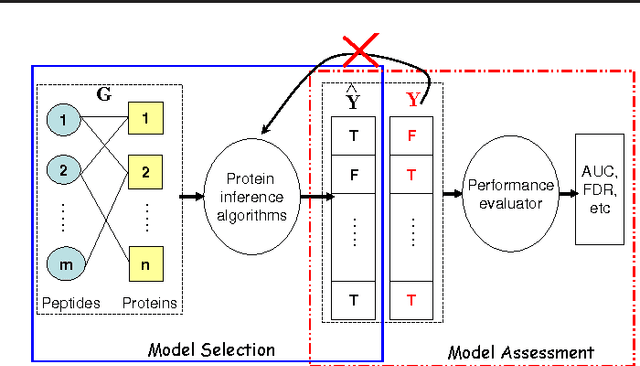

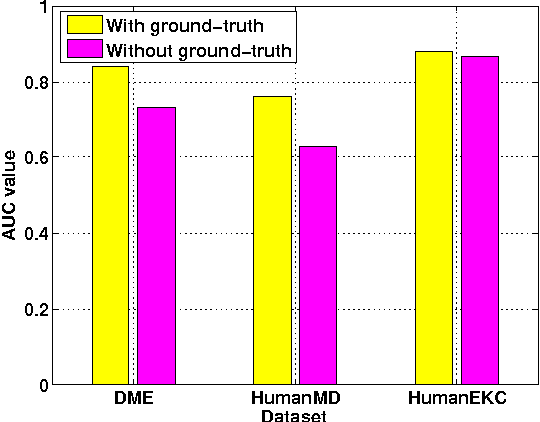

Abstract: This letter is a response to the comments of Serang (2012) on Huang and He (2012) in Bioinformatics. Serang (2012) claimed that the parameters for the Fido algorithm should be specified using the grid search method in Serang et al. (2010), so that Fido achieves the accuracy it deserves in the performance comparison. At first glance this looks like an argument about parameter tuning. The real issue, however, is how to conduct an unbiased performance evaluation when comparing different protein inference algorithms. In this letter, we explain why we did not use grid search for parameter selection in Huang and He (2012) and show that this procedure may result in an over-estimated performance that is unfair to competing algorithms. This issue has also been pointed out by Li and Radivojac (2012).
