Pawel Drozdowski

secunet Security Networks AG

State of the Art of Quality Assessment of Facial Images

Nov 15, 2022

Abstract: The goal of the project "Facial Metrics for EES" is to develop, implement and publish an open source algorithm for the quality assessment of facial images (OFIQ) for face recognition, in particular for border control scenarios. In order to stimulate the harmonization of the requirements and practices applied for quality assessment (QA) of facial images, the insights gained and algorithms developed in the project will be contributed to the current (2022) revision of the ISO/IEC 29794-5 standard. Furthermore, the implemented quality metrics and algorithms will consider the recommendations and requirements from other relevant standards, in particular ISO/IEC 19794-5:2011, ISO/IEC 29794-5:2010, ISO/IEC 39794-5:2019 and Version 5.2 of the BSI Technical Guideline TR-03121 Part 3 Volume 1. To establish an informed basis for selecting quality metrics and developing the corresponding quality assessment algorithms, this document surveys the state of the art of methods and algorithms (defining a metric), implementations and datasets for the quality assessment of facial images. For all relevant quality aspects, it summarizes the requirements of the aforementioned standards, known results on their impact on face recognition performance, publicly available datasets, proposed methods and algorithms, and open source software implementations.

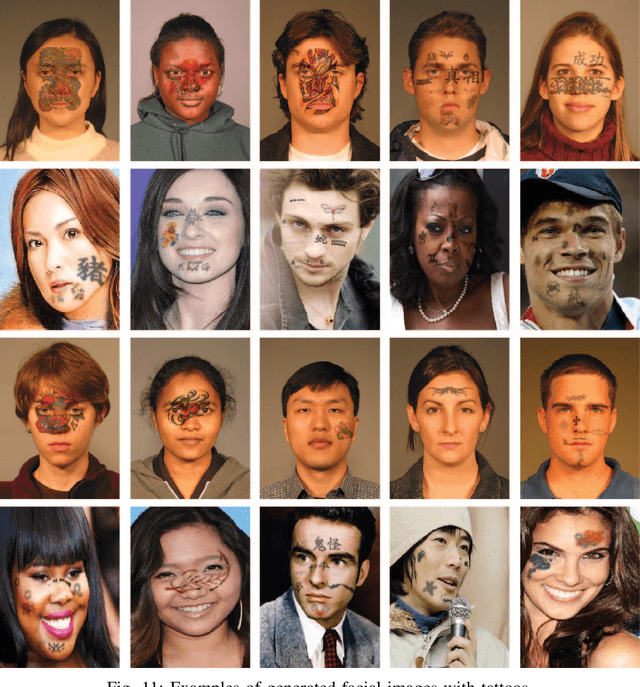

Face Beneath the Ink: Synthetic Data and Tattoo Removal with Application to Face Recognition

Feb 10, 2022

Abstract: Systems that analyse faces have seen significant improvements in recent years and are today used in numerous application scenarios. However, these systems have been found to be negatively affected by facial alterations such as tattoos. To better understand and mitigate the effect of facial tattoos in facial analysis systems, large datasets of images of individuals with and without tattoos are needed. To this end, we propose a generator that automatically adds realistic tattoos to facial images. Moreover, we demonstrate the feasibility of the generation by training a deep learning-based model for removing tattoos from face images. The experimental results show that it is possible to remove facial tattoos from real images without degrading image quality. Additionally, we show that face recognition accuracy can be improved by applying the proposed deep learning-based tattoo removal before extracting and comparing facial features.

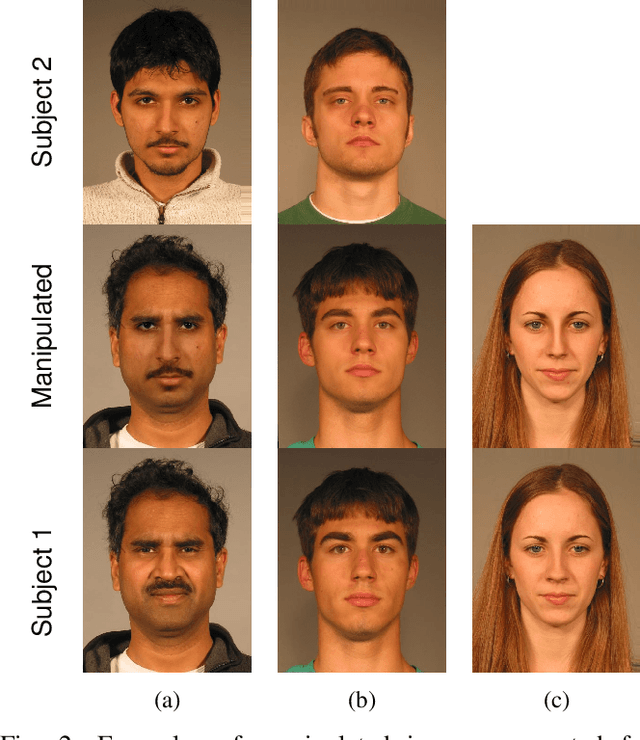

Crowd-powered Face Manipulation Detection: Fusing Human Examiner Decisions

Jan 31, 2022

Abstract: We investigate the potential of fusing human examiner decisions for the task of digital face manipulation detection. To this end, various decision fusion methods are proposed that incorporate the examiners' decision confidence, their experience level and the time they take to reach a decision. The experiments are based on a psychophysical evaluation of human capabilities in detecting digital face image manipulations, in which different manipulation techniques were applied, i.e., face morphing, face swapping and retouching. The decisions of 223 participants were fused to simulate crowds of up to seven human examiners. Experimental results reveal that (1) despite the moderate detection performance achieved by single human examiners, high accuracy can be obtained through decision fusion and (2) a weighted fusion that takes the examiners' decision confidence into account yields the most competitive detection performance.
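
The contrast between plain majority voting and a confidence-weighted fusion can be illustrated with a minimal Python sketch. It assumes, hypothetically, that each examiner reports a binary decision plus a confidence value scaled to [0, 1]; the names `ExaminerDecision`, `majority_vote` and `confidence_weighted_vote` are illustrative, and the exact fusion rules of the work (which also use experience level and decision time) are not reproduced.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ExaminerDecision:
    manipulated: bool   # True if the examiner judged the image to be manipulated
    confidence: float   # hypothetical self-reported confidence, assumed to lie in [0, 1]


def majority_vote(decisions: Sequence[ExaminerDecision]) -> bool:
    """Unweighted baseline: one vote per examiner; ties count as bona fide."""
    votes = sum(1 if d.manipulated else -1 for d in decisions)
    return votes > 0


def confidence_weighted_vote(decisions: Sequence[ExaminerDecision]) -> bool:
    """Each vote (+1 manipulated, -1 bona fide) is scaled by the examiner's confidence."""
    score = sum(d.confidence * (1 if d.manipulated else -1) for d in decisions)
    return score > 0


# A simulated crowd of three examiners (made-up values).
crowd = [
    ExaminerDecision(manipulated=False, confidence=0.2),
    ExaminerDecision(manipulated=False, confidence=0.3),
    ExaminerDecision(manipulated=True, confidence=0.9),
]
print(majority_vote(crowd))             # False
print(confidence_weighted_vote(crowd))  # True
```

With two low-confidence "bona fide" votes and one high-confidence "manipulated" vote, the unweighted majority accepts the image, whereas the confidence-weighted fusion flags it.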

Psychophysical Evaluation of Human Performance in Detecting Digital Face Image Manipulations

Jan 28, 2022

Abstract: In recent years, the increasing deployment of face recognition technology in security-critical settings, such as border control or law enforcement, has led to considerable interest in the vulnerability of face recognition systems to attacks utilising legitimate documents issued on the basis of digitally manipulated face images. As automated manipulation and attack detection remains a challenging task, conventional processes in which human inspectors perform identity verification remain indispensable. These circumstances merit a closer investigation of human capabilities in detecting manipulated face images, as previous work in this field is sparse and often concentrated only on specific scenarios and biometric characteristics. This work introduces a web-based, remote visual discrimination experiment built on principles adopted from the field of psychophysics and subsequently discusses interdisciplinary opportunities, with the aim of examining human proficiency in detecting different types of digitally manipulated face images, specifically face swapping, morphing, and retouching. In addition to analysing appropriate performance measures, a possible metric of detectability is explored. Experimental data from 306 participants indicate that detection performance varies widely across the population and that certain types of face image manipulations are much more challenging to detect than others.
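
Signal detection theory, on which psychophysical experiments commonly build, offers the sensitivity index d' as a standard detectability measure: `d' = z(H) - z(F)`, where H is the hit rate (manipulated images flagged as manipulated), F is the false alarm rate (bona fide images flagged as manipulated) and z is the inverse standard normal CDF. The sketch below computes it from response counts. It is shown only as a well-known example of such a measure, not as the specific metric explored in this work, and the log-linear correction (adding 0.5 to each count) is an assumption that keeps rates of 0 or 1 finite.

```python
from statistics import NormalDist


def d_prime(hits: int, misses: int, false_alarms: int, correct_rejections: int) -> float:
    """Sensitivity index d' = z(H) - z(F) with a log-linear correction.

    hits / misses: responses to manipulated images (flagged / not flagged).
    false_alarms / correct_rejections: responses to bona fide images.
    """
    z = NormalDist().inv_cdf  # inverse CDF of the standard normal distribution
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    false_alarm_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    return z(hit_rate) - z(false_alarm_rate)


# Hypothetical participant: 40 of 50 manipulated and 10 of 50 bona fide images flagged.
print(round(d_prime(40, 10, 10, 40), 2))
```

A value of 0 means the participant cannot distinguish manipulated from bona fide images; larger values indicate better discrimination independently of how liberal the participant's response bias is.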

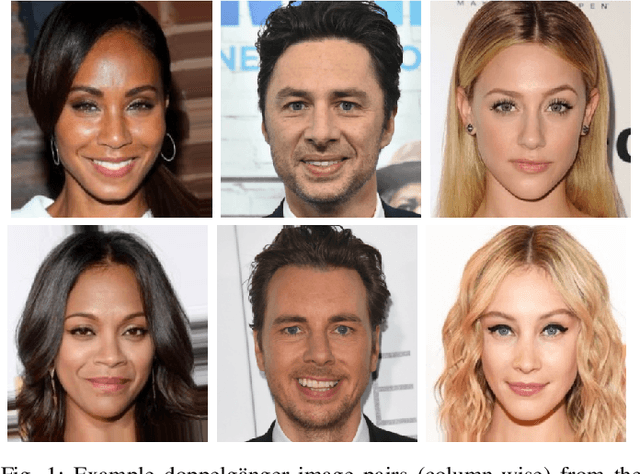

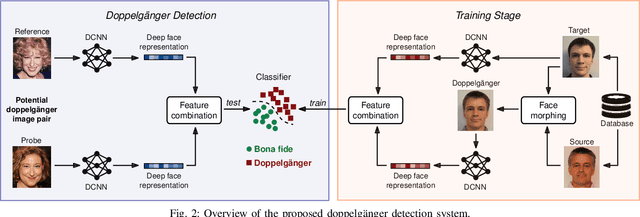

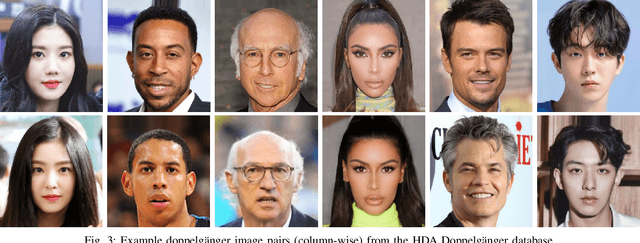

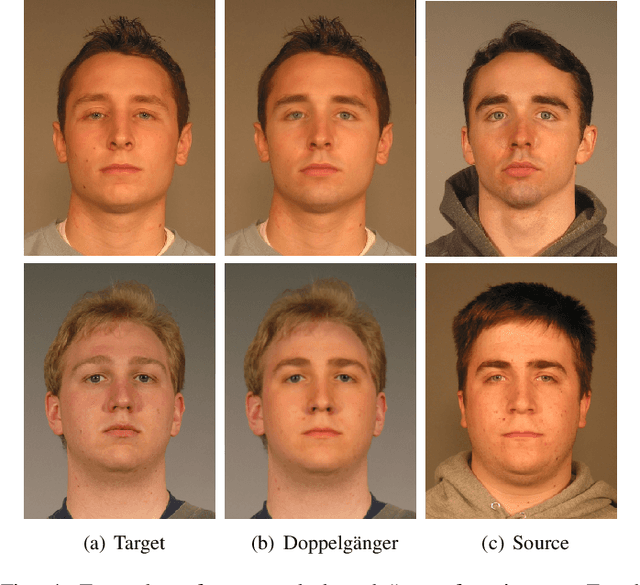

Reliable Detection of Doppelgängers based on Deep Face Representations

Jan 21, 2022

Abstract: Doppelgängers (or lookalikes) usually yield an increased probability of false matches in a facial recognition system, as opposed to random face image pairs selected for non-mated comparison trials. In this work, we assess the impact of doppelgängers on the HDA Doppelgänger and Disguised Faces in The Wild databases using a state-of-the-art face recognition system. It is found that doppelgänger image pairs yield very high similarity scores, resulting in a significant increase of false match rates. Further, we propose a doppelgänger detection method which distinguishes doppelgängers from mated comparison trials by analysing differences in deep representations obtained from face image pairs. The proposed detection system employs a machine learning-based classifier, which is trained with generated doppelgänger image pairs utilising face morphing techniques. Experimental evaluations conducted on the HDA Doppelgänger and Look-Alike Face databases reveal a detection equal error rate of approximately 2.7% for the task of separating mated authentication attempts from doppelgängers.
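
To make "analysing differences in deep representations" concrete, the following minimal sketch turns a pair of face embeddings into the element-wise absolute difference of the L2-normalised vectors and classifies it with a nearest-centroid rule. The embeddings are toy vectors, `NearestCentroid` is a hypothetical stand-in for the machine learning-based classifier used in the work, and the morphing-based generation of doppelgänger training pairs is not shown.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def l2_normalise(v: Sequence[float]) -> Vector:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def difference_features(emb_a: Sequence[float], emb_b: Sequence[float]) -> Vector:
    """Element-wise absolute difference of two L2-normalised embeddings."""
    a, b = l2_normalise(emb_a), l2_normalise(emb_b)
    return [abs(x - y) for x, y in zip(a, b)]


class NearestCentroid:
    """Stand-in classifier: assigns the label of the closest class mean."""

    def fit(self, features: Sequence[Vector], labels: Sequence[str]) -> "NearestCentroid":
        sums: Dict[str, Vector] = {}
        counts: Dict[str, int] = {}
        for f, label in zip(features, labels):
            acc = sums.setdefault(label, [0.0] * len(f))
            for i, value in enumerate(f):
                acc[i] += value
            counts[label] = counts.get(label, 0) + 1
        self.centroids = {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}
        return self

    def predict(self, f: Vector) -> str:
        return min(self.centroids, key=lambda lab: math.dist(f, self.centroids[lab]))


# Toy training pairs (made-up 3-D embeddings).
train_pairs = [
    (([1, 0, 0], [0.99, 0.1, 0]), "mated"),
    (([0, 1, 0], [0, 0.99, 0.1]), "mated"),
    (([1, 0, 0], [0.6, 0.8, 0]), "doppelganger"),
    (([0, 1, 0], [0, 0.6, 0.8]), "doppelganger"),
]
X = [difference_features(a, b) for (a, b), _ in train_pairs]
y = [label for _, label in train_pairs]
clf = NearestCentroid().fit(X, y)
print(clf.predict(difference_features([0, 0, 1], [0.1, 0, 0.99])))  # mated
print(clf.predict(difference_features([1, 0, 0], [0.6, 0, 0.8])))   # doppelganger
```

Using the difference vector rather than a single similarity score lets the classifier exploit which embedding dimensions differ, which is the kind of information a score threshold alone discards.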

An Attack on Feature Level-based Facial Soft-biometric Privacy Enhancement

Nov 24, 2021

Abstract: Several researchers have recently proposed novel privacy-enhancing face recognition systems designed to conceal soft-biometric information at feature level. These works have reported impressive results but usually do not consider specific attacks in their analysis of privacy protection. In most cases, the privacy protection capabilities of these schemes are tested through simple machine learning-based classifiers and visualisations produced by dimensionality reduction tools. In this work, we introduce an attack on feature level-based facial soft-biometric privacy-enhancement techniques. The attack is based on two observations: (1) to achieve high recognition accuracy, certain similarities between facial representations have to be retained in their privacy-enhanced versions; (2) highly similar facial representations usually originate from face images with similar soft-biometric attributes. Based on these observations, the proposed attack compares a privacy-enhanced face representation against a set of privacy-enhanced face representations with known soft-biometric attributes. Subsequently, the best obtained similarity scores are analysed to infer the unknown soft-biometric attributes of the attacked privacy-enhanced face representation. Consequently, the attack requires only a relatively small database of arbitrary face images and black-box access to the privacy-enhancing face recognition algorithm. In the experiments, the attack is applied to two representative approaches that have previously been reported to reliably conceal gender in privacy-enhanced face representations. It is shown that the presented attack circumvents the privacy enhancement to a considerable degree and correctly classifies gender with an accuracy of up to approximately 90% for both of the analysed privacy-enhancing face recognition systems.
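
The core of the attack, comparing the attacked privacy-enhanced template against a labelled reference set and inferring the attribute from the best similarity scores, can be sketched as a top-k majority vote. This is a minimal illustration under stated assumptions: cosine similarity stands in for the black-box comparator of the privacy-enhancing system, k = 3 is arbitrary, the function name `infer_attribute` is hypothetical and the 2-D templates are made up; the analysis of the best scores in the work may differ.

```python
import math
from collections import Counter
from typing import Callable, List, Sequence, Tuple

Template = Sequence[float]


def cosine_similarity(a: Template, b: Template) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def infer_attribute(
    attacked: Template,
    labelled: List[Tuple[Template, str]],
    k: int = 3,
    compare: Callable[[Template, Template], float] = cosine_similarity,
) -> str:
    """Rank the labelled templates by similarity and take a majority vote over the top k."""
    ranked = sorted(labelled, key=lambda item: compare(attacked, item[0]), reverse=True)
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy privacy-enhanced templates with known attributes (made-up values).
reference_set = [
    ([1.0, 0.1], "female"),
    ([0.9, 0.2], "female"),
    ([0.95, 0.05], "female"),
    ([0.1, 1.0], "male"),
    ([0.2, 0.9], "male"),
]
print(infer_attribute([1.0, 0.15], reference_set))  # female
print(infer_attribute([0.15, 1.0], reference_set))  # male
```

The `compare` parameter reflects the black-box setting: the attacker only needs to call the system's own comparator, not to know how the privacy enhancement works internally.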

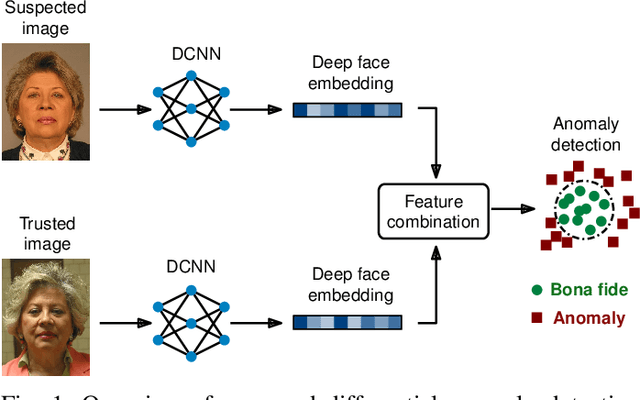

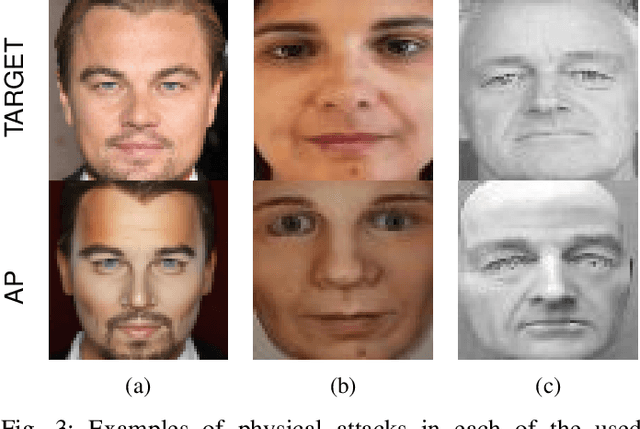

Differential Anomaly Detection for Facial Images

Oct 07, 2021

Abstract: Due to their convenience and high accuracy, face recognition systems are widely employed in governmental and personal security applications to automatically recognise individuals. Despite recent advances, face recognition systems have been shown to be particularly vulnerable to identity attacks (i.e., digital manipulations and attack presentations). Identity attacks pose a serious security threat, as they can be used to gain unauthorised access and to spread misinformation. In this context, most algorithms for detecting identity attacks generalise poorly to attack types that are unknown at training time. To tackle this problem, we introduce a differential anomaly detection framework in which deep face embeddings are first extracted from pairs of images (i.e., reference and probe) and then combined for identity attack detection. The experimental evaluation conducted over several databases shows a high generalisation capability of the proposed method for detecting unknown attacks in both the digital and physical domains.
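
A minimal sketch of the differential idea, assuming that the reference and probe embeddings are combined by element-wise subtraction and that the anomaly detector is a diagonal Gaussian model fitted to bona fide pairs only. The `combine` function, the `DiagonalGaussianDetector` class and the threshold of 3.0 are hypothetical choices for illustration, not the combination or detector used in the work. Because no attack samples are used for fitting, a pair is flagged simply for deviating from bona fide behaviour, which is the property that motivates anomaly detection for unknown attack types.

```python
import math
from statistics import mean, pstdev
from typing import List, Sequence, Tuple

Pair = Tuple[Sequence[float], Sequence[float]]  # (reference embedding, probe embedding)


def combine(reference: Sequence[float], probe: Sequence[float]) -> List[float]:
    """Combine the two embeddings of a pair by element-wise subtraction."""
    return [r - p for r, p in zip(reference, probe)]


class DiagonalGaussianDetector:
    """One-class model of bona fide difference vectors (no attack samples needed)."""

    def fit(self, bona_fide_pairs: Sequence[Pair]) -> "DiagonalGaussianDetector":
        diffs = [combine(r, p) for r, p in bona_fide_pairs]
        per_dim = list(zip(*diffs))
        self.mu = [mean(d) for d in per_dim]
        self.sigma = [max(pstdev(d), 1e-6) for d in per_dim]  # avoid division by zero
        return self

    def score(self, reference: Sequence[float], probe: Sequence[float]) -> float:
        """Normalised distance of the pair's difference vector from the bona fide model."""
        d = combine(reference, probe)
        return math.sqrt(sum(((x - m) / s) ** 2 for x, m, s in zip(d, self.mu, self.sigma)))


# Toy bona fide pairs: probe embeddings close to their references (made-up values).
bona_fide = [
    ([1.0, 0.0], [0.9, 0.1]),
    ([1.0, 0.0], [1.1, -0.1]),
    ([0.0, 1.0], [-0.05, 1.0]),
    ([0.0, 1.0], [0.05, 1.0]),
]
detector = DiagonalGaussianDetector().fit(bona_fide)
THRESHOLD = 3.0  # hypothetical operating point
print(detector.score([1.0, 0.0], [0.95, 0.05]) > THRESHOLD)  # False (bona fide-like)
print(detector.score([1.0, 0.0], [0.0, 1.0]) > THRESHOLD)    # True (anomalous pair)
```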

Feature Fusion Methods for Indexing and Retrieval of Biometric Data: Application to Face Recognition with Privacy Protection

Jul 27, 2021

Abstract: Computationally efficient, accurate, and privacy-preserving data storage and retrieval are among the key challenges faced by practical deployments of biometric identification systems worldwide. In this work, a method for protected indexing of biometric data is presented. By utilising feature-level fusion of intelligently paired templates, a multi-stage search structure is created. During retrieval, the list of potential candidate identities is successively pre-filtered, thereby reducing the number of template comparisons necessary for a biometric identification transaction. The biometric probe templates, the stored reference templates and the created index are protected using homomorphic encryption. The proposed method is extensively evaluated in closed-set and open-set identification scenarios on publicly available databases using two state-of-the-art open-source face recognition systems. Compared to a typical baseline that uses exhaustive search-based retrieval, the proposed method reduces the computational workload of a biometric identification transaction by 90% without any degradation of biometric performance. Furthermore, by facilitating a seamless integration of template protection with open-source homomorphic encryption libraries, the proposed method guarantees unlinkability, irreversibility, and renewability of the protected biometric data.
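
The pre-filtering principle can be illustrated with a two-stage toy version in plain Python: templates are paired greedily with their most similar partner, each pair is fused by element-wise averaging, the probe is first compared against the fused templates only, and only the members of the best-scoring pair(s) are compared individually. The greedy pairing, averaging fusion, cosine similarity, the `keep` parameter and all function names are assumptions for illustration; the work builds a deeper multi-stage structure and operates on homomorphically encrypted templates, neither of which is shown.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Template = List[float]
Index = List[Tuple[Template, List[str]]]  # (fused template, identities it covers)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def fuse(a: Sequence[float], b: Sequence[float]) -> Template:
    """Feature-level fusion of two templates by element-wise averaging."""
    return [(x + y) / 2 for x, y in zip(a, b)]


def build_index(gallery: Dict[str, Template]) -> Index:
    """Greedily pair each template with its most similar remaining partner."""
    remaining = list(gallery)
    index: Index = []
    while remaining:
        first = remaining.pop(0)
        if not remaining:  # odd gallery size: keep the last template unpaired
            index.append((gallery[first], [first]))
            break
        partner = max(remaining, key=lambda other: cosine(gallery[first], gallery[other]))
        remaining.remove(partner)
        index.append((fuse(gallery[first], gallery[partner]), [first, partner]))
    return index


def search(probe: Template, gallery: Dict[str, Template], index: Index,
           keep: int = 1) -> Tuple[Optional[str], int]:
    """Return the best-matching identity and the number of comparisons performed."""
    comparisons = 0
    # Stage 1: compare the probe against fused templates only.
    scored = []
    for fused, members in index:
        scored.append((cosine(probe, fused), members))
        comparisons += 1
    scored.sort(key=lambda item: item[0], reverse=True)
    # Stage 2: compare against the individual templates of the best-scoring pairs.
    best_id, best_score = None, -math.inf
    for _, members in scored[:keep]:
        for identity in members:
            score = cosine(probe, gallery[identity])
            comparisons += 1
            if score > best_score:
                best_id, best_score = identity, score
    return best_id, comparisons


gallery = {
    "a": [1.0, 0.1, 0.0], "b": [1.0, 0.0, 0.1],
    "c": [0.1, 1.0, 0.0], "d": [0.0, 1.0, 0.1],
    "e": [0.1, 0.0, 1.0], "f": [0.0, 0.1, 1.0],
}
index = build_index(gallery)
print(search([0.95, 0.05, 0.12], gallery, index))  # ('b', 5)
```

With six enrolled templates the probe needs five comparisons instead of six. A two-stage index of N templates needs roughly N/2 + 2 * keep comparisons, so larger savings require larger galleries and deeper structures.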

Demographic Fairness in Face Identification: The Watchlist Imbalance Effect

Jun 30, 2021

Abstract: Recently, several researchers have found that the gallery composition of a face database can induce performance differentials in facial identification systems, in which a probe image is compared against up to all stored reference images to reach a biometric decision. This negative effect is referred to as the "watchlist imbalance effect". In this work, we present a method to theoretically estimate this effect for a biometric identification system, given its verification performance across demographic groups and the composition of the gallery used. Further, we report results for identification experiments on differently composed demographic subsets, i.e., females and males, of the public academic MORPH database using the open-source ArcFace face recognition system. It is shown that the database composition has a substantial impact on performance differentials in biometric identification systems, even if performance differentials are less pronounced in the verification scenario. This study represents the first detailed analysis of the watchlist imbalance effect, which is expected to be of high interest for future research in the field of facial recognition.
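
Under the simplifying assumption that all comparisons are statistically independent, the probability that a non-mated probe of demographic group g produces at least one false match can be estimated from the verification false match rates (FMR) between groups and the number of gallery references N(h) per group h: `FPIR(g) = 1 - product over h of (1 - FMR(g, h)) ** N(h)`. The sketch below implements this textbook approximation; it illustrates the kind of estimate described above but is not necessarily the exact method of the work, and the FMR values and the function name `estimated_fpir` are made up.

```python
from typing import Dict, Tuple


def estimated_fpir(probe_group: str,
                   gallery_counts: Dict[str, int],
                   fmr: Dict[Tuple[str, str], float]) -> float:
    """Probability of at least one false match for a non-mated probe of `probe_group`.

    gallery_counts[h]: number of enrolled references from demographic group h.
    fmr[(g, h)]: verification FMR at the operating threshold when a probe of
    group g is compared with a reference of group h.
    Assumes all comparisons are statistically independent.
    """
    p_no_false_match = 1.0
    for group, count in gallery_counts.items():
        p_no_false_match *= (1.0 - fmr[(probe_group, group)]) ** count
    return 1.0 - p_no_false_match


# Made-up FMRs: same-group comparisons are assumed to yield more false matches.
fmr = {
    ("female", "female"): 1e-4, ("female", "male"): 1e-6,
    ("male", "male"): 5e-5, ("male", "female"): 1e-6,
}
female_heavy = {"female": 9000, "male": 1000}
male_heavy = {"female": 1000, "male": 9000}
for name, gallery in [("female-heavy", female_heavy), ("male-heavy", male_heavy)]:
    print(name, round(estimated_fpir("female", gallery, fmr), 3))
```

Although the per-comparison FMRs are identical in both runs, the estimated false positive identification rate for female probes is several times higher with the female-heavy gallery, which is the imbalance effect in miniature.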

Demographic Fairness in Biometric Systems: What do the Experts say?

May 31, 2021

Abstract: Algorithmic decision systems have frequently been labelled as "biased", "racist", "sexist", or "unfair" by numerous media outlets, organisations, and researchers. There is an ongoing debate about whether such assessments are justified and whether citizens and policymakers should be concerned. These and related matters have recently become a hot topic in the context of biometric technologies, which are ubiquitous in personal, commercial, and governmental applications. Biometrics represent an essential component of many surveillance, access control, and operational identity management systems, thus directly or indirectly affecting billions of people all around the world. Recently, the European Association for Biometrics organised an event series with "demographic fairness in biometric systems" as an overarching theme. The events featured presentations by international experts from academic, industry, and governmental organisations and facilitated interactions and discussions between the experts and the audience. The experts were further consulted by means of a questionnaire. This work summarises the experts' opinions and the findings of these events on the topic of demographic fairness in biometric systems, covering several important aspects such as the development of evaluation metrics and standards as well as related issues, e.g., the need for transparency and explainability in biometric systems or legal and ethical issues.