Lázaro J. González-Soler

TetraLoss: Improving the Robustness of Face Recognition against Morphing Attacks

Jan 21, 2024

Abstract: Face recognition systems are widely deployed in high-security applications such as biometric verification at border controls. Despite their high accuracy on pristine data, it is well-known that digital manipulations, such as face morphing, pose a security threat to face recognition systems. Malicious actors can exploit the facilities offered by the identity document issuance process to obtain identity documents containing morphed images. Thus, subjects who contributed to the creation of the morphed image can with high probability use the identity document to bypass automated face recognition systems. In recent years, no-reference (i.e., single image) and differential morphing attack detectors have been proposed to tackle this risk. These systems are typically evaluated in isolation from the face recognition system that they have to operate jointly with and do not consider the face recognition process. Contrary to most existing works, we present a novel method for adapting deep learning-based face recognition systems to be more robust against face morphing attacks. To this end, we introduce TetraLoss, a novel loss function that learns to separate morphed face images from their contributing subjects in the embedding space while still preserving high biometric verification performance. In a comprehensive evaluation, we show that the proposed method can significantly enhance the original system while also significantly outperforming other tested baseline methods.
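The abstract does not give the TetraLoss formula, so the following is only an illustrative sketch of the general idea: a triplet-style margin loss with one extra term that pushes a morphed image away from a contributing subject. The function name `tetra_style_loss`, the squared Euclidean distance, the margin value, and the way the two terms are summed are all assumptions made for illustration, not the authors' definition.

```python
import numpy as np


def l2_normalize(x):
    """Scale embeddings to unit length along the last axis."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def tetra_style_loss(anchor, positive, negative, morph, margin=0.2):
    """Hypothetical four-input margin loss (not the paper's exact formula).

    anchor, positive: embeddings of the same subject
    negative:         embedding of a different subject
    morph:            embedding of a morph that contains the anchor's subject
    """
    a, p, n, m = (
        l2_normalize(np.asarray(v, dtype=float))
        for v in (anchor, positive, negative, morph)
    )
    d_ap = np.sum((a - p) ** 2, axis=-1)  # same-subject distance
    d_an = np.sum((a - n) ** 2, axis=-1)  # different-subject distance
    d_am = np.sum((a - m) ** 2, axis=-1)  # subject-to-morph distance

    # Standard triplet term: keep mated pairs closer than non-mated pairs.
    triplet_term = np.maximum(0.0, d_ap - d_an + margin)
    # Assumed extra term: also keep mated pairs closer than the morph.
    morph_term = np.maximum(0.0, d_ap - d_am + margin)
    return float(np.mean(triplet_term + morph_term))
```

In this sketch the loss is zero once both the non-mated sample and the morph lie at least `margin` further from the anchor than the mated sample does; a morph that coincides with the anchor is penalised by exactly `margin`.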

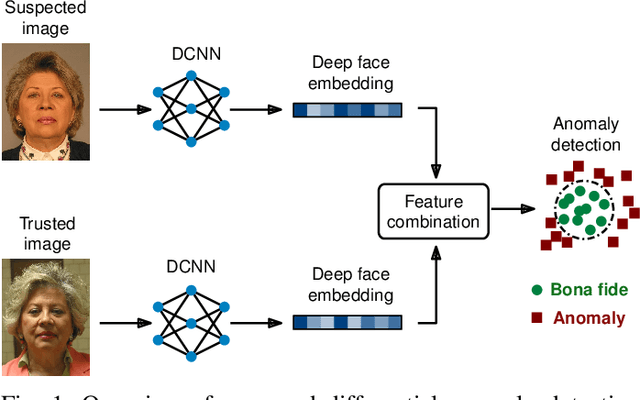

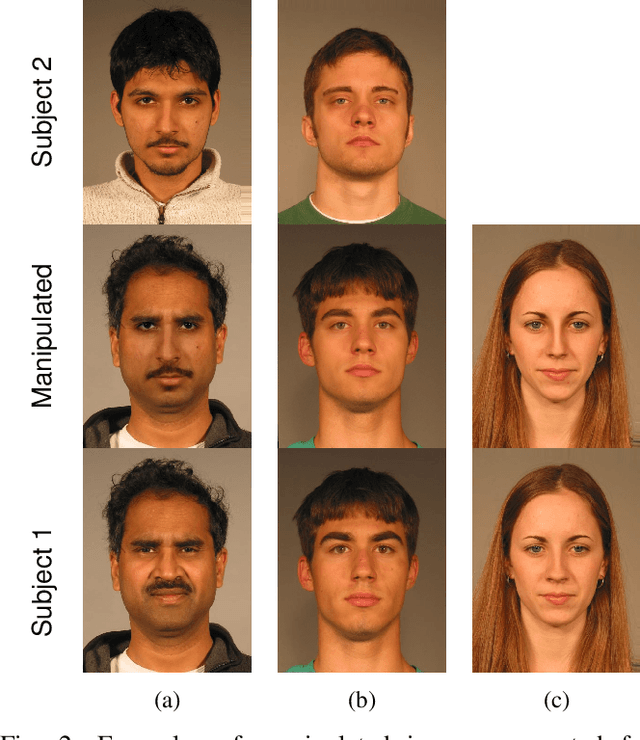

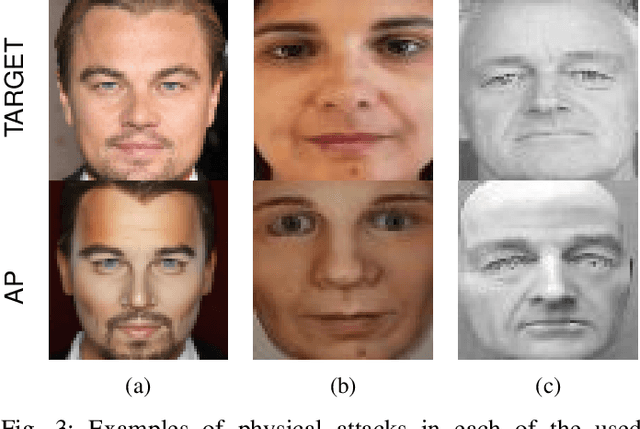

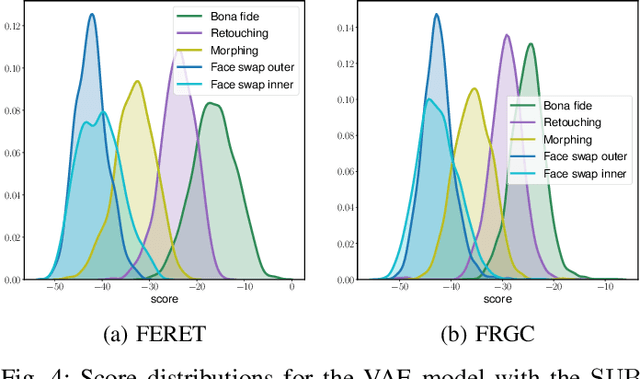

Differential Anomaly Detection for Facial Images

Oct 07, 2021

Abstract: Due to their convenience and high accuracy, face recognition systems are widely employed in governmental and personal security applications to automatically recognise individuals. Despite recent advances, face recognition systems have been shown to be particularly vulnerable to identity attacks (i.e., digital manipulations and attack presentations). Identity attacks pose a big security threat as they can be used to gain unauthorised access and spread misinformation. In this context, most algorithms for detecting identity attacks generalise poorly to attack types that are unknown at training time. To tackle this problem, we introduce a differential anomaly detection framework in which deep face embeddings are first extracted from pairs of images (i.e., reference and probe) and then combined for identity attack detection. The experimental evaluation conducted over several databases shows a high generalisation capability of the proposed method for detecting unknown attacks in both the digital and physical domains.
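As a rough illustration of the pipeline described above (pairwise embeddings, a combination step, then anomaly detection), the sketch below combines reference and probe embeddings by subtraction and scores the result with a Gaussian model fitted on bona fide pairs only. The subtraction operator, the Mahalanobis-distance detector, and the names `combine_embeddings` and `GaussianAnomalyDetector` are assumptions chosen for brevity; the abstract does not state which combination or detector the authors use.

```python
import numpy as np


def combine_embeddings(reference, probe):
    """Assumed combination step: element-wise difference of the two embeddings."""
    return np.asarray(reference, dtype=float) - np.asarray(probe, dtype=float)


class GaussianAnomalyDetector:
    """One-class model fitted on bona fide pairs only (hypothetical stand-in)."""

    def fit(self, features):
        features = np.asarray(features, dtype=float)
        self.mean_ = features.mean(axis=0)
        # A small ridge keeps the covariance matrix invertible.
        cov = np.cov(features, rowvar=False) + 1e-6 * np.eye(features.shape[1])
        self.precision_ = np.linalg.inv(cov)
        return self

    def score(self, features):
        """Squared Mahalanobis distance; larger values are more anomalous."""
        diff = np.asarray(features, dtype=float) - self.mean_
        return np.einsum("ij,jk,ik->i", diff, self.precision_, diff)
```

Because the detector never sees attack samples during fitting, any pair whose combined embedding falls far from the bona fide distribution receives a high score, which is the property that makes anomaly detection attractive for unknown attack types.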

On the Generalisation Capabilities of Fisher Vector based Face Presentation Attack Detection

Mar 02, 2021

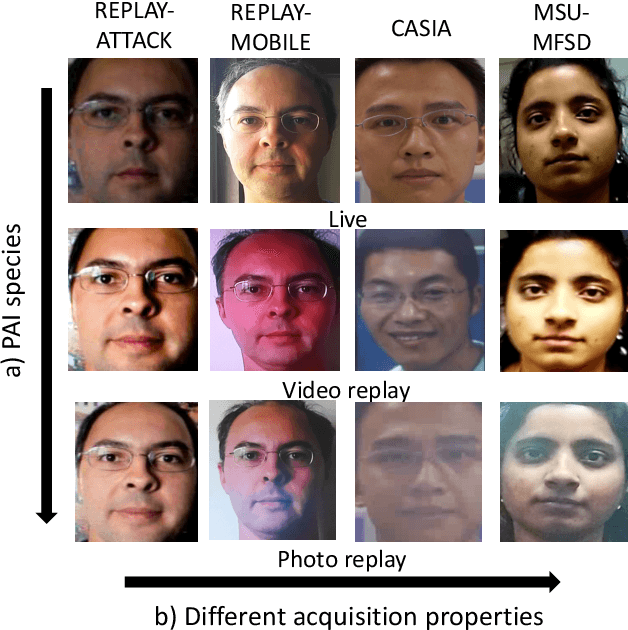

Abstract: In the last decades, the broad development experienced by biometric systems has unveiled several threats which may decrease their trustworthiness. Those are attack presentations which can be easily carried out by a non-authorised subject to gain access to the biometric system. In order to mitigate those security concerns, most face Presentation Attack Detection techniques have reported a good detection performance when they are evaluated on known Presentation Attack Instruments (PAI) and acquisition conditions, in contrast to more challenging scenarios where unknown attacks are included in the test set. For those more realistic scenarios, the existing algorithms have difficulty detecting unknown PAI species in many cases. In this work, we use a new feature space based on Fisher Vectors, computed from compact Binarised Statistical Image Features histograms, which allow discovering semantic feature subsets from known samples in order to enhance the detection of unknown attacks. This new representation, evaluated for challenging unknown attacks taken from freely available facial databases, shows promising results: a BPCER100 under 17% together with an AUC over 98% can be achieved in the presence of unknown attacks. In addition, by training a limited number of parameters, our method is able to achieve results comparable to state-of-the-art deep learning-based approaches for cross-dataset scenarios.
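BPCER100 is conventionally the Bona Fide Presentation Classification Error Rate measured at the operating point where the Attack Presentation Classification Error Rate (APCER) is at most 1%. The sketch below shows one way to compute it from detector scores. It assumes that higher scores indicate an attack and that a sample is rejected when its score is at or above the threshold; the function name `bpcer_at_apcer` is hypothetical and this is not the authors' evaluation code.

```python
import numpy as np


def bpcer_at_apcer(bona_fide_scores, attack_scores, apcer_target=0.01):
    """Lowest BPCER over all thresholds whose APCER does not exceed the target.

    Assumption: higher score = more attack-like; reject if score >= threshold.
    """
    bona_fide = np.asarray(bona_fide_scores, dtype=float)
    attacks = np.asarray(attack_scores, dtype=float)

    best_bpcer = 1.0
    # Every observed score is a candidate threshold.
    for threshold in np.unique(np.concatenate([bona_fide, attacks])):
        apcer = np.mean(attacks < threshold)      # attacks accepted as bona fide
        if apcer <= apcer_target:
            bpcer = np.mean(bona_fide >= threshold)  # bona fides rejected
            best_bpcer = min(best_bpcer, bpcer)
    return float(best_bpcer)
```

With the default `apcer_target=0.01` the function returns BPCER100; passing `0.05` or `0.10` gives the related BPCER20 and BPCER10 operating points.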

Fingerprint Presentation Attack Detection Based on Local Features Encoding for Unknown Attacks

Aug 27, 2019

Abstract: Fingerprint-based biometric systems have experienced a large development in the last years. Despite their many advantages, they are still vulnerable to presentation attacks (PAs). Therefore, the task of determining whether a sample stems from a live subject (i.e., bona fide) or from an artificial replica is a mandatory issue which has received a lot of attention recently. Nowadays, when the materials for the fabrication of the Presentation Attack Instruments (PAIs) have been used to train the PA Detection (PAD) methods, the PAIs can be successfully identified. However, current PAD methods still face difficulties detecting PAIs built from unknown materials or captured using other sensors. Based on that fact, we propose a new PAD technique based on three image representation approaches combining local and global information of the fingerprint. By transforming these representations into a common feature space, we can correctly discriminate bona fide from attack presentations in the aforementioned scenarios. The experimental evaluation of our proposal over the LivDet 2011 to 2015 databases yielded error rates outperforming the top state-of-the-art results by up to 50% in the most challenging scenarios. In addition, the best configuration achieved the best results in the LivDet 2019 competition (overall accuracy of 96.17%).
