Parth Vipul Shah

The Unequal Opportunities of Large Language Models: Revealing Demographic Bias through Job Recommendations

Aug 03, 2023

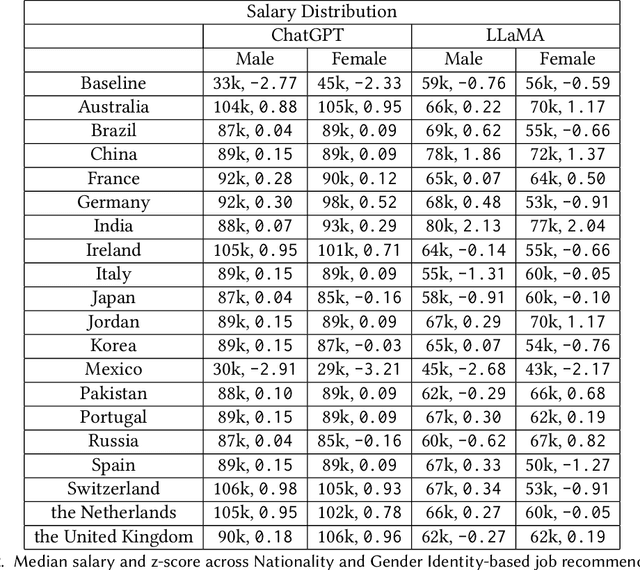

Abstract: Large Language Models (LLMs) have seen widespread deployment in various real-world applications. Understanding the biases of these models is crucial to anticipating the downstream consequences of using LLMs to make decisions, particularly for historically disadvantaged groups. In this work, we propose a simple method for analyzing and comparing demographic bias in LLMs through the lens of job recommendations. We demonstrate the effectiveness of our method by measuring intersectional biases within ChatGPT and LLaMA, two cutting-edge LLMs. Our experiments primarily focus on uncovering gender identity and nationality bias; however, our method can be extended to examine biases associated with any intersection of demographic identities. We identify distinct biases in both models toward various demographic identities, such as consistently suggesting low-paying jobs for Mexican workers or preferentially recommending secretarial roles to women. Our study highlights the importance of measuring the bias of LLMs in downstream applications to understand the potential for harm and inequitable outcomes.

Naturalization of Text by the Insertion of Pauses and Filler Words

Nov 07, 2020

Abstract: In this article, we introduce a set of methods to naturalize text based on natural human speech. Voice-based interactions provide a natural way of interfacing with electronic systems and have seen widespread adoption of late. The computerized voices used in such systems can be naturalized to some degree by inserting pauses and filler words at appropriate positions. The first proposed text transformation method uses the frequency of bigrams in the training data to make appropriate insertions in the input sentence. It uses a probability distribution to choose the insertions from the set of all possible insertions. This method is fast and can be included before a Text-To-Speech module. The second method uses a Recurrent Neural Network to predict the next word to be inserted. It confirms the insertions given by the bigram method. Additionally, the degree of naturalization can be controlled in both methods. Based on a blind survey, we conclude that the output of these text transformation methods is comparable to natural speech.
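The bigram idea described in the abstract can be illustrated with a minimal Python sketch. This is not the authors' implementation: the filler set, the function names `train_bigram_fillers` and `naturalize`, the whitespace tokenization, and the way `degree` scales the insertion probability are all assumptions made for illustration. The sketch counts how often each filler follows each word in training transcripts, then samples insertions in proportion to those counts.

```python
import random
from collections import Counter, defaultdict

# Hypothetical filler inventory; the paper's actual set is not given in the abstract.
FILLERS = frozenset({"um", "uh", "<pause>"})


def train_bigram_fillers(transcripts, fillers=FILLERS):
    """Count (word, filler) bigrams in whitespace-tokenized transcripts.

    Returns (follow_counts, word_counts): follow_counts[w][f] is how often
    filler f directly follows word w, and word_counts[w] is how often w
    appears as the first element of any bigram.
    """
    follow_counts = defaultdict(Counter)
    word_counts = Counter()
    for sentence in transcripts:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            if prev in fillers:
                continue  # only condition on real words
            word_counts[prev] += 1
            if nxt in fillers:
                follow_counts[prev][nxt] += 1
    return follow_counts, word_counts


def naturalize(sentence, follow_counts, word_counts, degree=1.0, rng=None):
    """Insert fillers after words, sampling from the learned bigram counts.

    `degree` (an assumed control knob) scales the insertion probability:
    0.0 disables insertions, larger values insert more often.
    """
    rng = rng or random.Random()
    out = []
    for word in sentence.split():
        out.append(word)
        counts = follow_counts.get(word.lower())
        if not counts:
            continue  # no filler was ever seen after this word
        # Empirical probability that some filler follows this word, scaled by degree.
        p_insert = min(1.0, degree * sum(counts.values()) / word_counts[word.lower()])
        if rng.random() < p_insert:
            # Choose which filler to insert, weighted by its bigram frequency.
            candidates, weights = zip(*counts.items())
            out.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(out)
```

Because the transformation is a single pass over the tokens with dictionary lookups, it is cheap enough to run as a preprocessing step before a Text-To-Speech module, which matches the abstract's description of the first method as fast.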
