Pamela C. Cosman

Detecting Generated Images by Real Images Only

Nov 02, 2023

Abstract: As deep learning technology continues to evolve, the images produced by generative models are becoming increasingly realistic, leading people to question the authenticity of images. Existing generated-image detection methods either detect visual artifacts in generated images or learn discriminative features from both real and generated images through large-scale training. This learning paradigm leads to efficiency and generalization issues, so detection methods always lag behind generation methods. This paper approaches the generated-image detection problem from a new perspective: start from real images. By finding the commonality of real images and mapping them to a dense subspace in feature space, the goal is that generated images, regardless of their generative model, are projected outside the subspace. As a result, images from different generative models can be detected, solving some long-standing problems in the field. Experimental results show that although our method is trained only on real images and uses 99.9% less training data than other deep learning-based methods, it can compete with state-of-the-art methods and shows excellent performance in detecting emerging generative models with high inference efficiency. Moreover, the proposed method is robust to various post-processing operations. These advantages allow the method to be used in real-world scenarios.
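The "real images only" idea in the abstract can be illustrated with a generic one-class sketch. This is not the paper's method: the abstract does not describe the feature mapping or the decision rule, so the centroid-plus-radius rule, the function names `fit_real_only` and `is_generated`, and the `quantile` parameter are all assumptions made for illustration. The sketch takes feature vectors that are assumed to have been extracted already, fits a region around the real ones, and flags anything that falls outside it.

```python
import math

def fit_real_only(real_features, quantile=0.95):
    """Fit a centroid and radius from real-image features only.

    Assumption: a simple ball around the centroid stands in for the
    'dense subspace' mentioned in the abstract.
    """
    n = len(real_features)
    dim = len(real_features[0])
    # Centroid of the real features.
    center = [sum(f[i] for f in real_features) / n for i in range(dim)]
    # Radius: a chosen quantile of the distances of real features to the centroid.
    dists = sorted(math.dist(f, center) for f in real_features)
    idx = min(n - 1, int(quantile * n))
    return center, dists[idx]

def is_generated(feature, center, radius):
    """Flag a feature as generated if it lies outside the fitted ball."""
    return math.dist(feature, center) > radius

# Toy 2D features (hypothetical values, not data from the paper).
real = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [-0.1, 0.0], [0.0, -0.1]]
center, radius = fit_real_only(real)
```

No generated samples are needed to fit the detector in this sketch, which is the property the abstract emphasizes. A practical system would depend on a feature extractor that actually clusters real images tightly.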

Analyzing Head Orientation of Neurotypical and Autistic Individuals in Triadic Conversations

Nov 01, 2023

Abstract: We propose a system that estimates people's body and head orientations using low-resolution point cloud data from two LiDAR sensors. Our models make accurate estimations in real-world conversation settings where the subject moves naturally with varying head and body poses. The body orientation estimation model uses ellipse fitting while the head orientation estimation model is a pipeline of geometric feature extraction and an ensemble of neural network regressors. Compared with other body and head orientation estimation systems using RGB cameras, our proposed system uses LiDAR sensors to preserve user privacy, while achieving comparable accuracy. Unlike other body/head orientation estimation systems, our sensors do not require a specified placement in front of the subject. Our models achieve a mean absolute estimation error of 5.2 degrees for body orientation and 13.7 degrees for head orientation. We use our models to quantify behavioral differences between neurotypical and autistic individuals in triadic conversations. Tests of significance show that people with autism spectrum disorder display significantly different behavior compared to neurotypical individuals in terms of distributing attention between participants in a conversation, suggesting that the approach could be a component of a behavioral analysis or coaching system.
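As a rough illustration of the body-orientation step and the reported error metric, the sketch below estimates the major-axis direction of a 2D slice of points and computes a mean absolute angular error with wraparound. It is a sketch under stated assumptions: the abstract does not give the fitting procedure, so a second-moment (principal-axis) estimate is swapped in for a full ellipse fit, and the helper names `ellipse_points`, `principal_axis_angle`, and `mean_absolute_error` are hypothetical. The major axis has a 180-degree ambiguity, which the authors' system would need to resolve by other means.

```python
import math

def ellipse_points(a, b, angle_deg, n=360):
    """Sample n points on an ellipse with semi-axes a, b rotated by angle_deg."""
    c, s = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    pts = []
    for k in range(n):
        t = 2.0 * math.pi * k / n
        x, y = a * math.cos(t), b * math.sin(t)
        pts.append((c * x - s * y, s * x + c * y))
    return pts

def principal_axis_angle(points):
    """Major-axis angle in degrees, in [0, 180), from second moments.

    Assumption: stands in for ellipse fitting on a horizontal body slice.
    """
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return math.degrees(theta) % 180.0

def angular_abs_error(est_deg, true_deg, period=360.0):
    """Absolute angular difference that respects wraparound."""
    d = abs(est_deg - true_deg) % period
    return min(d, period - d)

def mean_absolute_error(estimates, truths, period=360.0):
    """Mean of wraparound-aware absolute errors, in degrees."""
    errors = [angular_abs_error(e, t, period) for e, t in zip(estimates, truths)]
    return sum(errors) / len(errors)

# Synthetic slice: an ellipse whose major axis points at 30 degrees.
angle = principal_axis_angle(ellipse_points(2.0, 1.0, 30.0))
```

The wraparound handling matters for orientation metrics: an estimate of 350 degrees against a true value of 10 degrees is a 20-degree error, not 340.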

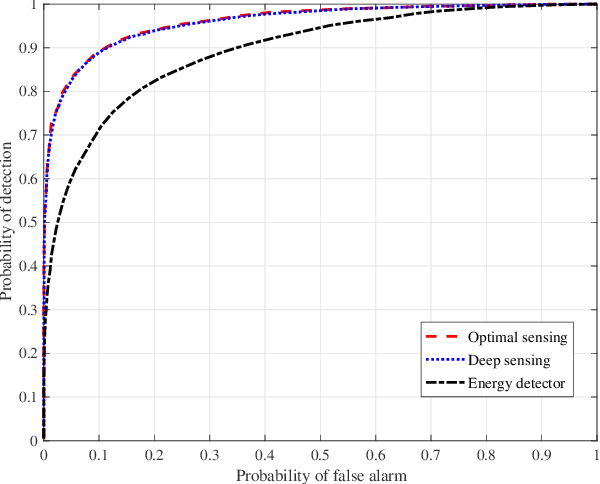

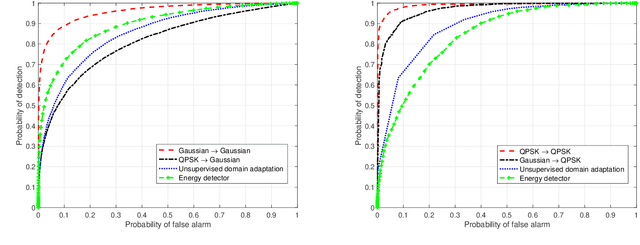

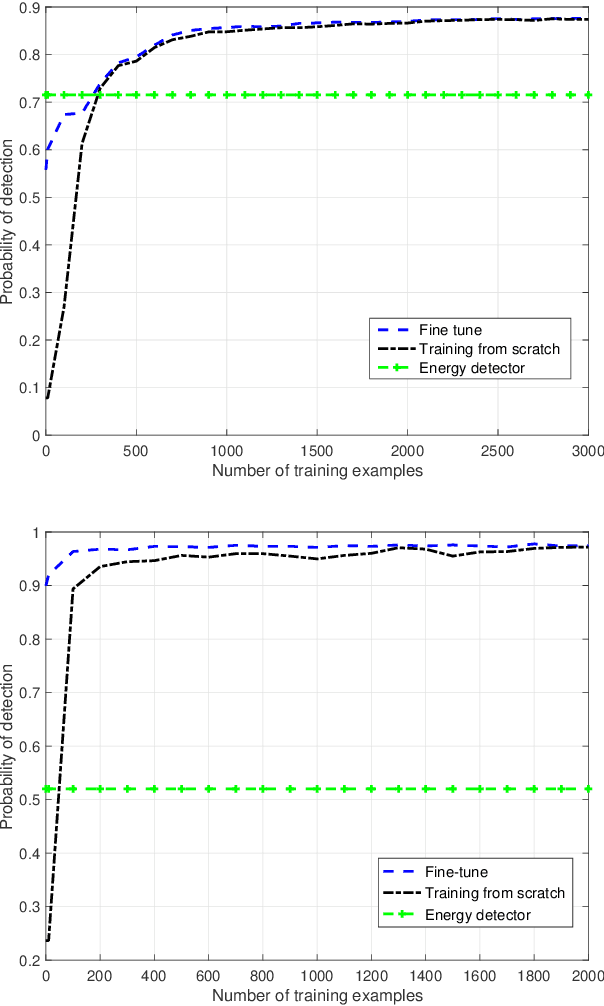

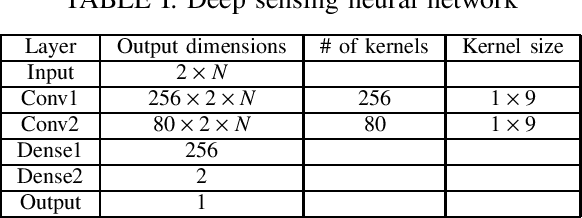

Robust Deep Sensing Through Transfer Learning in Cognitive Radio

Aug 01, 2019

Abstract: We propose a robust spectrum sensing framework based on deep learning. The received signals at the secondary user's receiver are filtered, sampled and then directly fed into a convolutional neural network. Although this deep sensing is effective when operating in the same scenario as the collected training data, the sensing performance is degraded when it is applied in a different scenario with different wireless signals and propagation. We incorporate transfer learning into the framework to improve the robustness. Results validate the effectiveness as well as the robustness of the proposed deep spectrum sensing framework.
