Pablo Ortega

iTrash: Incentivized Token Rewards for Automated Sorting and Handling

Feb 25, 2025

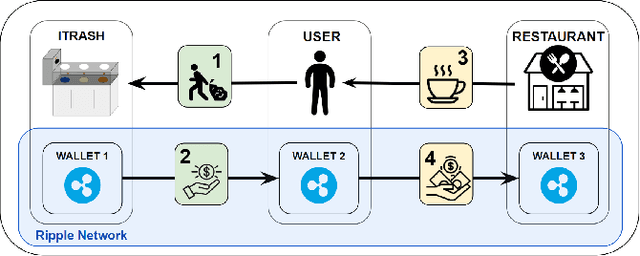

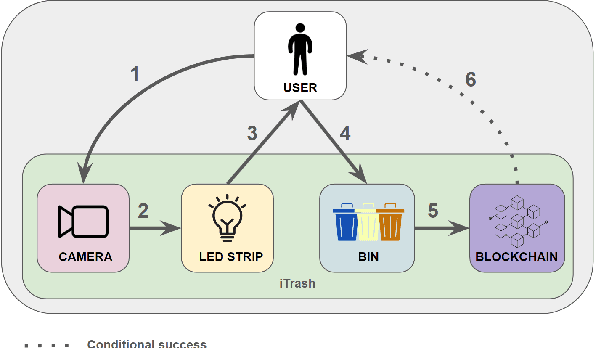

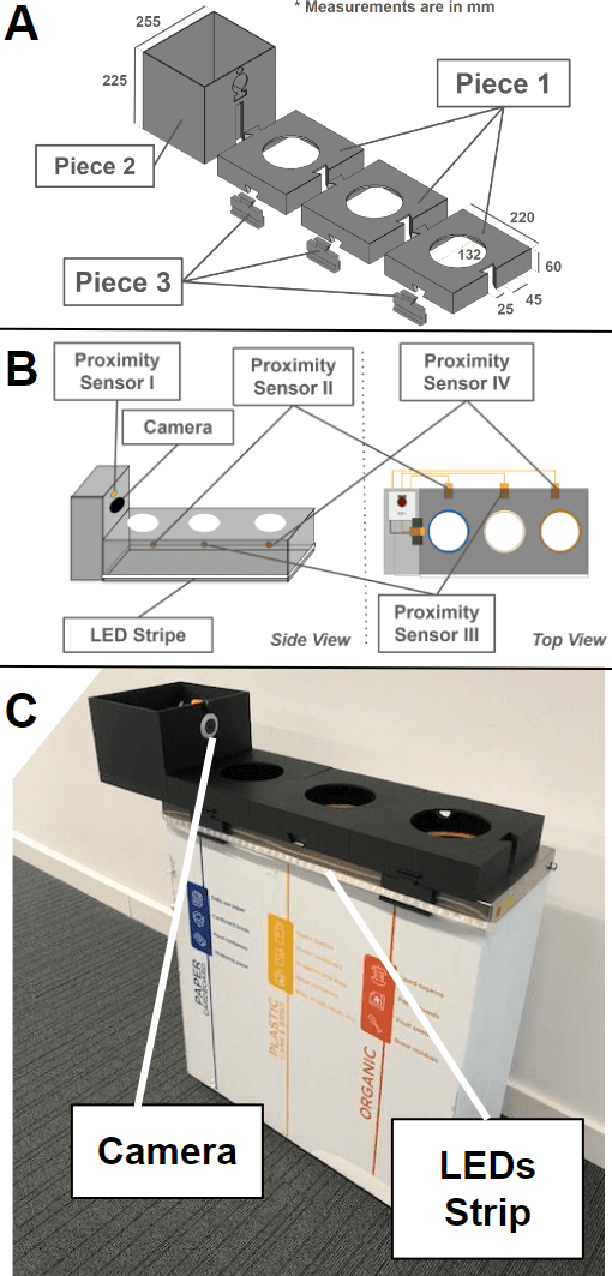

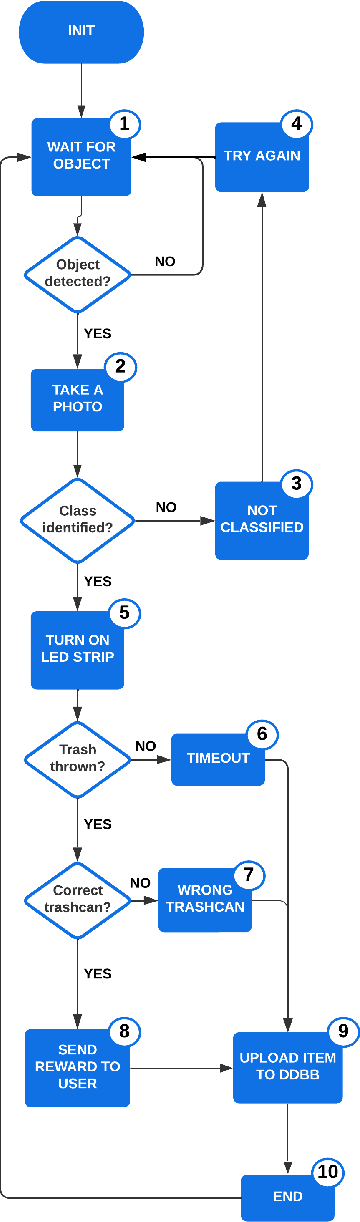

Abstract: As robotic systems (RS) become more autonomous, they are increasingly used in small spaces and offices to automate tasks such as cleaning, infrastructure maintenance, or resource management. In this paper, we propose iTrash, an intelligent trashcan that aims to improve recycling rates in small office spaces. We ran a 5-day experiment and found that iTrash can produce an efficiency increase of more than 30% compared to traditional trashcans. The findings indicate that iTrash not only increases recycling rates but also provides valuable data, such as user behaviour and bin usage patterns, that cannot be obtained from a normal trashcan. This information can be used to predict and optimize some tasks in these spaces. Finally, we explored the potential of using blockchain technology to create economic incentives for recycling, following a Save-as-you-Throw (SAYT) model.

Word Sense Disambiguation in Native Spanish: A Comprehensive Lexical Evaluation Resource

Sep 30, 2024

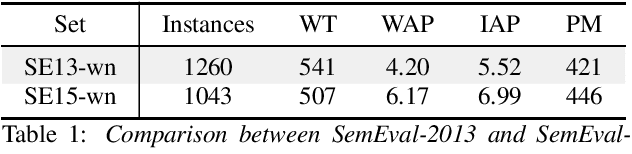

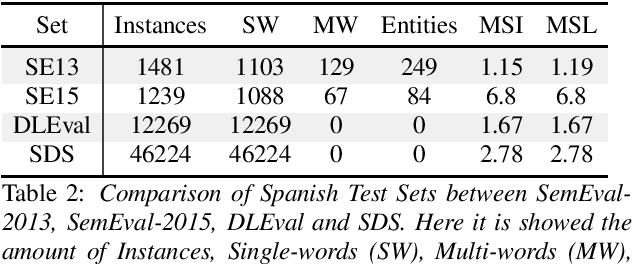

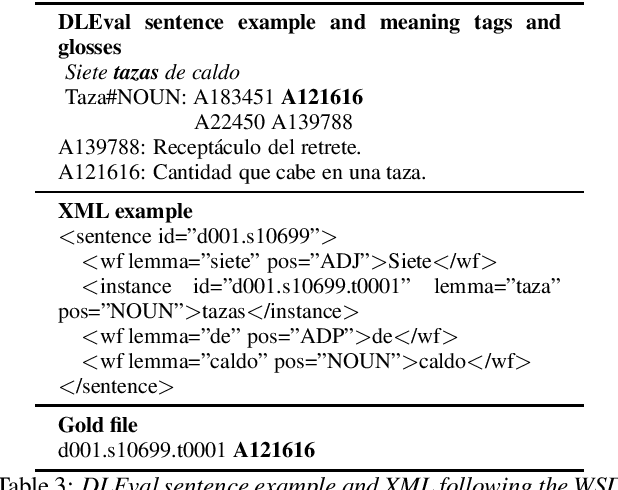

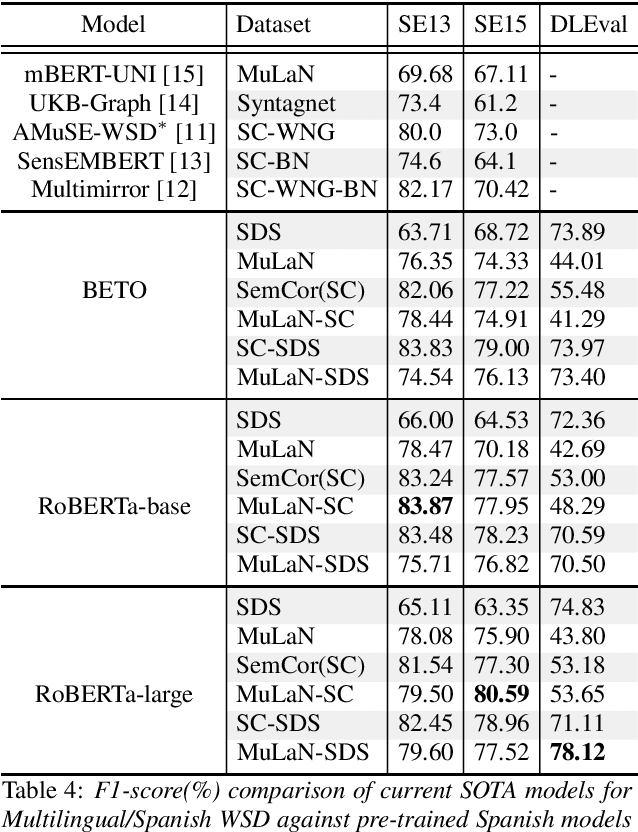

Abstract: Human language, while aimed at conveying meaning, inherently carries ambiguity. This ambiguity poses challenges for speech and language processing, but it also serves crucial communicative functions. Resolving ambiguity efficiently is both a desired and a necessary characteristic. The lexical meaning of a word in context can be determined automatically by Word Sense Disambiguation (WSD) algorithms that rely on external knowledge, which is often limited and biased toward English. When adapting content to other languages, automated translations are frequently inaccurate, and a high degree of expert human validation is necessary to ensure both accuracy and understanding. The current study addresses these limitations by introducing a new resource for Spanish WSD. It includes a sense inventory and a lexical dataset sourced from the Diccionario de la Lengua Española, which is maintained by the Real Academia Española. We also review current resources for Spanish and report the metrics that a state-of-the-art system obtains on them.
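To illustrate how a knowledge-based WSD algorithm can use a sense inventory, here is a minimal sketch of the simplified Lesk heuristic: it picks the sense whose gloss shares the most words with the context. This is not the system evaluated in the paper. The function name `simplified_lesk`, the sense labels, and the two glosses for "banco" are hypothetical and written only for this example; they are not taken from the Diccionario de la Lengua Española.

```python
def simplified_lesk(context, sense_glosses):
    """Return the sense label whose gloss overlaps most with the context.

    `sense_glosses` maps a sense label to its gloss (a plain string).
    Tokenisation is a naive whitespace split, which is an assumption
    made to keep the sketch short.
    """
    context_words = set(context.lower().split())

    def overlap(sense):
        gloss_words = set(sense_glosses[sense].lower().split())
        return len(context_words & gloss_words)

    # max() returns the first best sense when several senses tie.
    return max(sense_glosses, key=overlap)


# Hypothetical toy inventory for the Spanish word "banco".
BANCO_SENSES = {
    "asiento": "asiento largo para varias personas en un parque",
    "entidad": "entidad financiera que guarda dinero y concede préstamos",
}
```

Calling `simplified_lesk("me senté en un banco del parque", BANCO_SENSES)` selects the "asiento" (bench) sense because the context shares more words with that gloss. Real systems would add lemmatisation and stop-word handling.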

CNNATT: Deep EEG & fNIRS Real-Time Decoding of bimanual forces

Mar 23, 2021

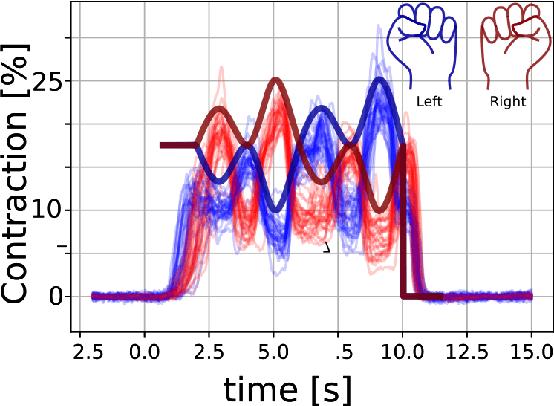

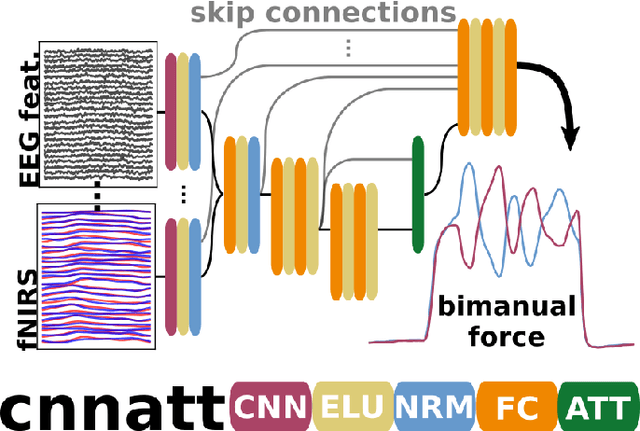

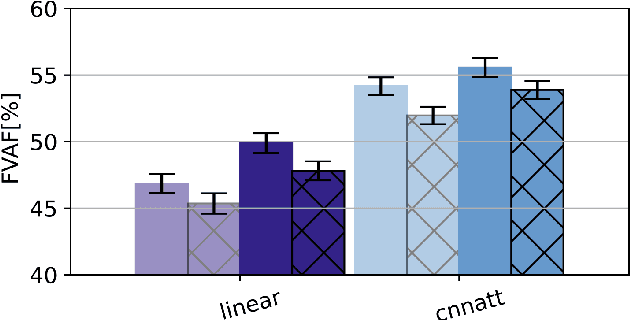

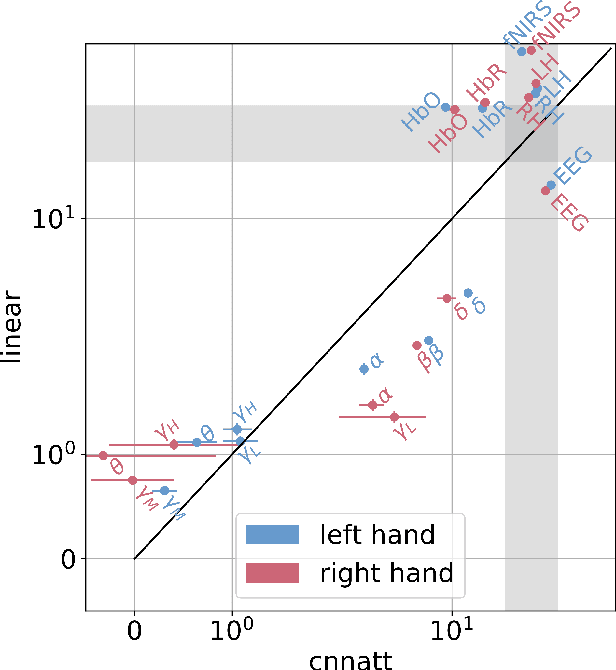

Abstract: Non-invasive cortical neural interfaces have only achieved modest performance in cortical decoding of limb movements and their forces, compared to invasive brain-computer interfaces (BCIs). While non-invasive methodologies are safer, cheaper and vastly more accessible, their signals suffer from poor resolution in either the spatial domain (EEG) or the temporal domain (BOLD signal of functional Near Infrared Spectroscopy, fNIRS). Non-invasive BCI decoding of bimanual force generation and of the continuous force signal has not been realised before, so we introduce an isometric grip force tracking task to evaluate the decoding. We find that combining EEG and fNIRS using deep neural networks works better than linear models to decode continuous grip force modulations produced by the left and the right hand. Our multi-modal deep learning decoder achieves 55.2 FVAF[%] in force reconstruction and improves the decoding performance by at least 15% over each individual modality. Our results show a way to achieve continuous hand force decoding using cortical signals obtained with non-invasive mobile brain imaging, which has immediate impact for rehabilitation, restoration and consumer applications.
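The abstract reports performance as FVAF[%] without defining it. Assuming it denotes the usual fraction of variance accounted for, that is, one minus the ratio of the residual sum of squares to the total sum of squares of the target, a minimal sketch of the metric could look like this. The function name `fvaf_percent` is hypothetical, and the paper may compute the metric differently (for example per hand or per trial).

```python
from statistics import fmean


def fvaf_percent(target, predicted):
    """Fraction of variance accounted for, in percent.

    Assumed definition: 100 * (1 - SS_res / SS_tot), where SS_tot is
    computed around the mean of the target signal.
    """
    if len(target) != len(predicted):
        raise ValueError("target and predicted must have the same length")
    target_mean = fmean(target)
    ss_res = sum((t - p) ** 2 for t, p in zip(target, predicted))
    ss_tot = sum((t - target_mean) ** 2 for t in target)
    if ss_tot == 0:
        raise ValueError("target has zero variance; FVAF is undefined")
    return 100.0 * (1.0 - ss_res / ss_tot)
```

Under this definition a perfect reconstruction scores 100, and a decoder that always outputs the mean force scores 0.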

Model-Agnostic Meta-Learning for EEG Motor Imagery Decoding in Brain-Computer-Interfacing

Mar 10, 2021

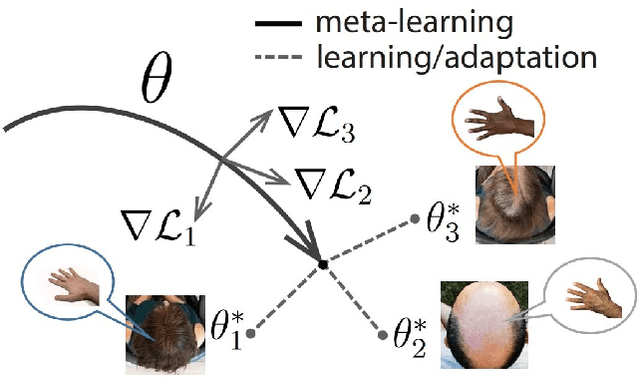

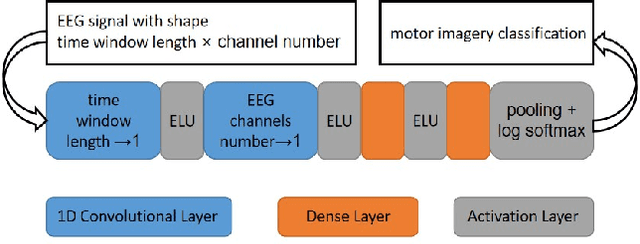

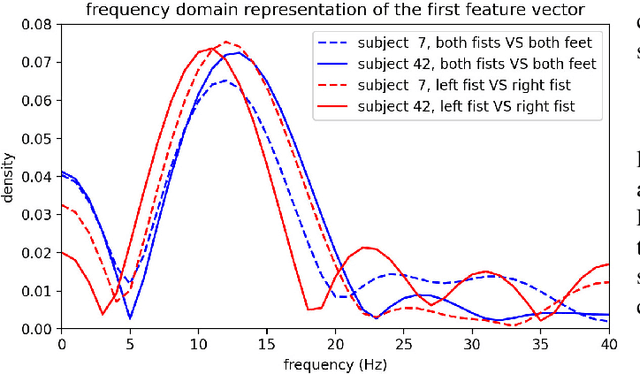

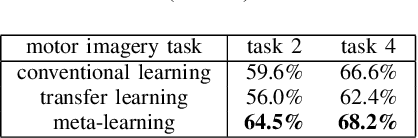

Abstract: We introduce the idea of meta-learning for training EEG BCI decoders. Meta-learning is a way of training machine learning systems so that they learn to learn. We apply meta-learning to a simple deep learning BCI architecture and compare it to transfer learning on the same architecture. Our meta-learning strategy operates by finding optimal parameters for the BCI decoder so that it can quickly generalise between different users and recording sessions, and thereby also to new users or new sessions. We tested our algorithm on the Physionet EEG motor imagery dataset. Our approach increased motor imagery classification accuracy between 60% and 80%, outperforming other algorithms under the little-data condition. We believe that establishing the meta-learning or learning-to-learn approach will help neural engineering and human interfacing with the challenge of quickly setting up decoders of neural signals, making them more suitable for daily life.
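The "learning to learn" idea can be sketched on a toy problem. The example below applies a MAML-style update to a one-parameter model `y = w * x`, where each task is a different slope. It is not the EEG decoder from the paper: the model, the task data, and all function names are assumptions chosen so that the second-order term can be written by hand instead of using an autodiff library.

```python
def loss(w, data):
    """Mean squared error of the model y = w * x on (x, y) pairs."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def grad(w, data):
    """Derivative of the mean squared error with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def curvature(data):
    """Second derivative of the mean squared error (independent of w)."""
    return sum(2 * x * x for x, _ in data) / len(data)


def adapt(w, support, inner_lr):
    """Inner loop: one gradient step on a task's support set."""
    return w - inner_lr * grad(w, support)


def maml_step(w, tasks, inner_lr, outer_lr):
    """Outer loop: update the shared initialisation w.

    Each task is a (support, query) pair. The chain rule gives
    d/dw loss_query(adapt(w)) = grad_query(w_task) * (1 - inner_lr * curvature),
    which is the exact MAML gradient for this quadratic model.
    """
    meta_grad = 0.0
    for support, query in tasks:
        w_task = adapt(w, support, inner_lr)
        meta_grad += grad(w_task, query) * (1 - inner_lr * curvature(support))
    return w - outer_lr * meta_grad / len(tasks)
```

With two tasks whose slopes are 1 and 3, repeated calls to `maml_step` move `w` toward an initialisation between the two slopes, from which a single `adapt` step reduces the loss on either task.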

Inter-subject Deep Transfer Learning for Motor Imagery EEG Decoding

Mar 09, 2021

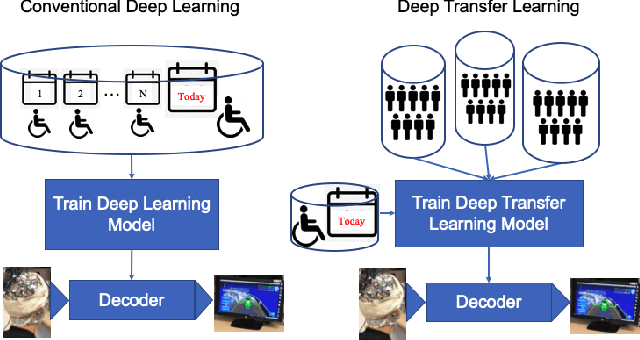

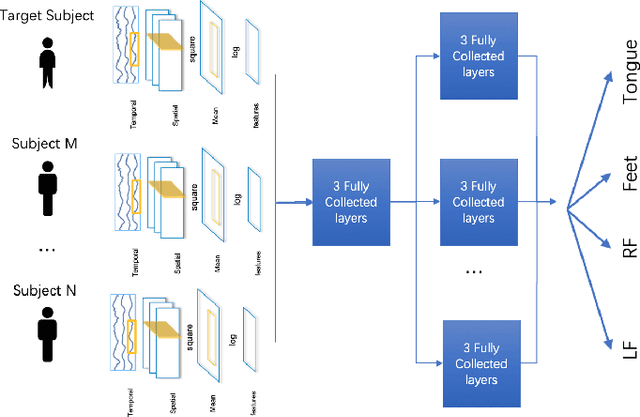

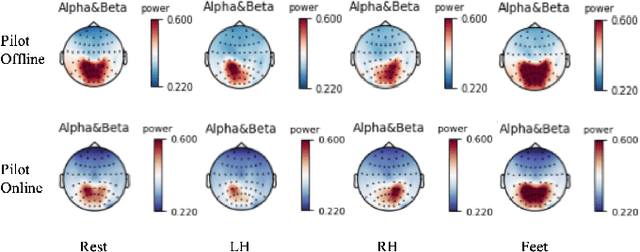

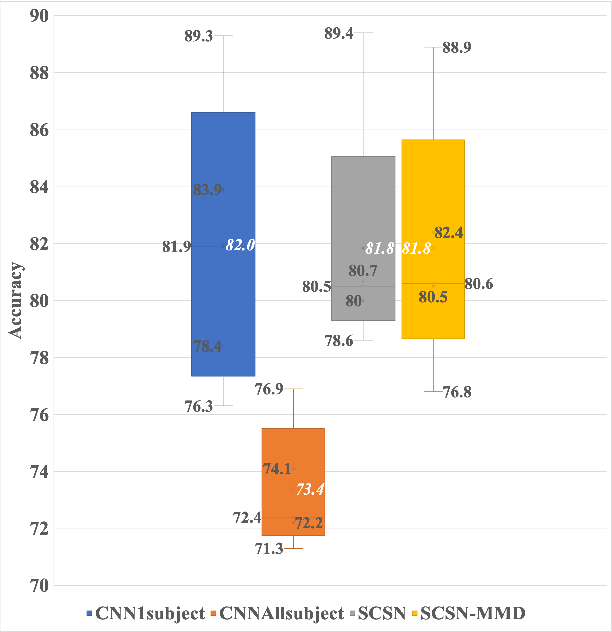

Abstract: Convolutional neural networks (CNNs) have become a powerful technique to decode EEG and are now the benchmark for motor imagery EEG Brain-Computer-Interface (BCI) decoding. However, it is still challenging to train CNNs on multiple subjects' EEG without decreasing individual performance. This is known as the negative transfer problem, i.e. learning from dissimilar distributions causes CNNs to misrepresent each of them instead of learning a richer representation. As a result, CNNs cannot directly use multiple subjects' EEG to enhance model performance. To address this problem, we extend deep transfer learning techniques to the EEG multi-subject training case. We propose a multi-branch deep transfer network, the Separate-Common-Separate Network (SCSN), based on splitting the network's feature extractors for individual subjects. We also explore the possibility of applying maximum mean discrepancy (MMD) to the SCSN (SCSN-MMD) to better align distributions of features from individual feature extractors. The proposed network is evaluated on the BCI Competition IV 2a dataset (BCICIV2a dataset) and our online recorded dataset. Results show that the proposed SCSN (81.8%, 53.2%) and SCSN-MMD (81.8%, 54.8%) outperformed the benchmark CNN (73.4%, 48.8%) on both datasets using multiple subjects. Our proposed networks show the potential to utilise larger multi-subject datasets to train an EEG decoder without being influenced by negative transfer.
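As a rough illustration of the quantity that SCSN-MMD uses to align feature distributions, the sketch below computes the standard biased estimate of squared MMD between two sets of feature vectors. The Gaussian kernel, the `bandwidth` parameter, and the function names are assumptions; the abstract does not state which kernel or estimator the authors use.

```python
import math


def gaussian_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two equal-length feature vectors."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased estimate of squared MMD between samples xs and ys.

    MMD^2 = E[k(x, x')] + E[k(y, y')] - 2 * E[k(x, y)],
    with each expectation replaced by a mean over all sample pairs.
    """
    def mean_kernel(a, b):
        total = sum(gaussian_kernel(u, v, bandwidth) for u in a for v in b)
        return total / (len(a) * len(b))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2 * mean_kernel(xs, ys)
```

The estimate is zero when both samples are identical and grows as the two feature distributions move apart, which is why it can serve as an alignment penalty added to a training loss.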

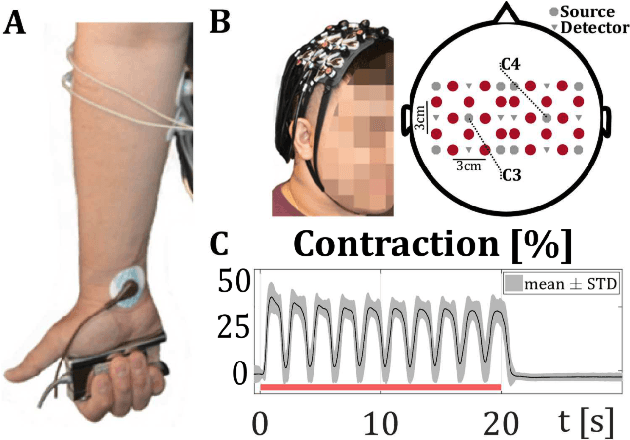

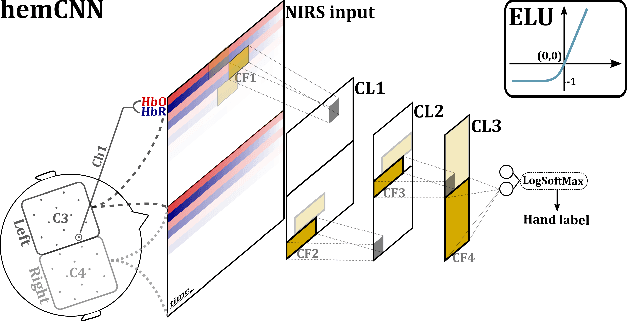

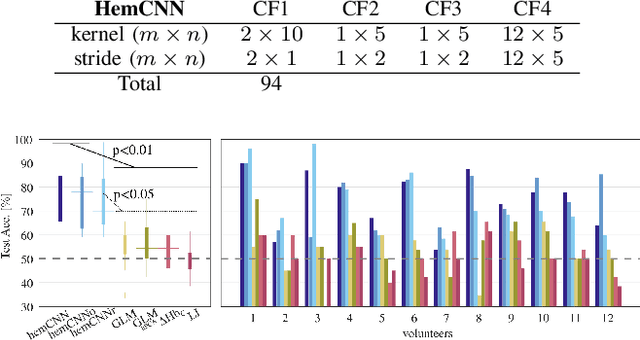

HemCNN: Deep Learning enables decoding of fNIRS cortical signals in hand grip motor tasks

Mar 09, 2021

Abstract: We solve the fNIRS left/right hand force decoding problem with a data-driven approach based on a convolutional neural network architecture, the HemCNN. We test HemCNN's ability to decode, in a streaming fashion, which hand (left or right) is used from fNIRS data. HemCNN learned to detect which hand executed a grasp at a naturalistic hand action speed of $\sim 1\,$Hz, outperforming standard methods. Since HemCNN does not require baseline correction and the convolution operation is invariant to time translations, our method can help to unlock fNIRS for a variety of real-time tasks. Mobile brain imaging and mobile brain machine interfacing can benefit from this to develop real-world neuroscience and practical human neural interfacing based on BOLD-like signals for the evaluation, assistance and rehabilitation of force generation, such as fusion of fNIRS with EEG signals.
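The remark about time translations can be checked on a toy signal. Strictly speaking, a convolution is shift-equivariant: delaying the input delays the output by the same amount. Pooling over time then gives a shift-invariant feature. The sketch below shows both properties with a plain 1-D "valid" convolution; the function name and the example signal are assumptions and have no connection to the HemCNN architecture or to real fNIRS data.

```python
def conv1d_valid(signal, kernel):
    """1-D sliding dot product (cross-correlation, as used in CNN layers).

    Only positions where the kernel fully overlaps the signal are kept.
    """
    width = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(width))
        for i in range(len(signal) - width + 1)
    ]


# A toy pulse and the same pulse delayed by two samples.
pulse = [0, 0, 1, 2, 1, 0, 0, 0]
delayed_pulse = [0, 0, 0, 0, 1, 2, 1, 0]
edge_kernel = [1, -1]

response = conv1d_valid(pulse, edge_kernel)
delayed_response = conv1d_valid(delayed_pulse, edge_kernel)
```

Here `delayed_response[2:]` matches `response[:-2]`, so the response is simply delayed by two samples, and `max(response)` equals `max(delayed_response)`, so a max-pooled feature does not depend on when the pulse occurred.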
