HemCNN: Deep Learning enables decoding of fNIRS cortical signals in hand grip motor tasks


Mar 09, 2021

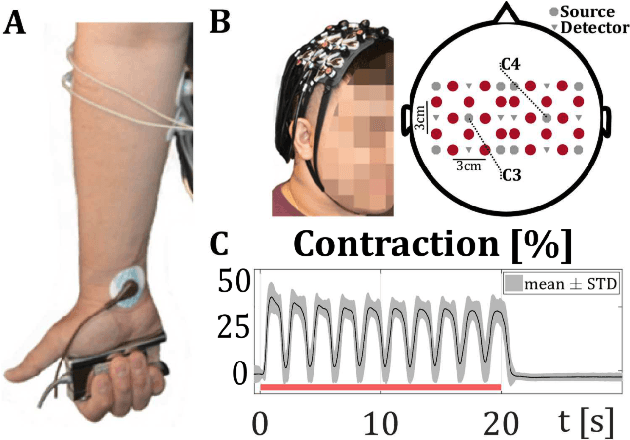

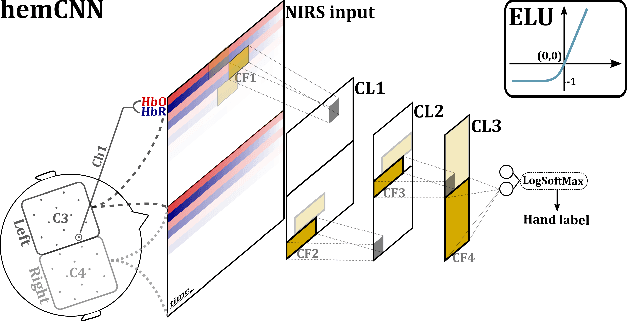

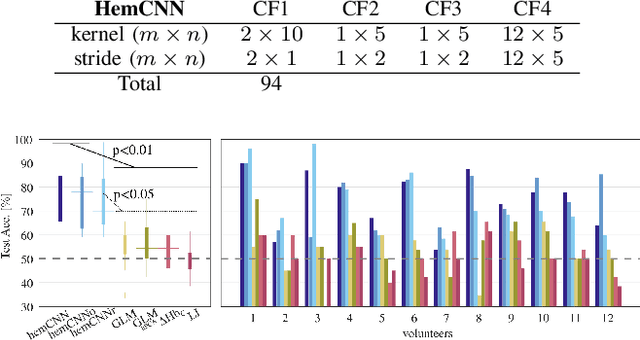

We address the problem of decoding left/right hand force from fNIRS with a data-driven approach based on a convolutional neural network architecture, HemCNN. We test HemCNN's ability to decode, in a streaming fashion, which hand (left or right) was used from fNIRS data. HemCNN learned to detect which hand executed a grasp at a naturalistic hand action speed of $\sim 1\,$Hz, outperforming standard methods. Because HemCNN does not require baseline correction and the convolution operation is invariant to time translations, our method can help unlock fNIRS for a variety of real-time tasks. Mobile brain imaging and mobile brain-machine interfacing can benefit from this to develop real-world neuroscience and practical human neural interfacing based on BOLD-like signals for the evaluation, assistance, and rehabilitation of force generation, for example through fusion of fNIRS with EEG signals.
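The time-translation property mentioned in the abstract can be illustrated with a minimal NumPy sketch. This is not the HemCNN architecture: the signal, the filter, and the helper `sliding_filter` are hypothetical stand-ins chosen for illustration. The sketch only shows that a sliding filter gives the same response, shifted by the same amount, when the stream starts later, which is the reason a convolutional decoder does not need its input aligned to a fixed trial onset.

```python
import numpy as np


def sliding_filter(signal, kernel):
    """Valid-mode 1D cross-correlation, the core operation of a conv layer.

    Hypothetical helper for illustration; not taken from the HemCNN code.
    """
    return np.correlate(signal, kernel, mode="valid")


rng = np.random.default_rng(0)
signal = rng.standard_normal(200)  # assumed: one synthetic fNIRS-like channel
kernel = rng.standard_normal(9)    # assumed: one filter with random weights
shift = 17                         # the stream starts 17 samples later

full_response = sliding_filter(signal, kernel)
late_response = sliding_filter(signal[shift:], kernel)

# Shifting the input in time shifts the filter response by the same amount.
shift_equivariant = np.allclose(late_response, full_response[shift:])
print(shift_equivariant)
```

Strictly speaking, this property is shift equivariance. An output that does not change at all under a time shift usually also needs a pooling step over time, which this sketch leaves out.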
