Oliver Müller

TS-Arena Technical Report -- A Pre-registered Live Forecasting Platform

Dec 23, 2025

Abstract: While Time Series Foundation Models (TSFMs) offer transformative capabilities for forecasting, they simultaneously risk triggering a fundamental evaluation crisis. This crisis is driven by information leakage due to overlapping training and test sets across different models, as well as the illegitimate transfer of global patterns to test data. While the ability to learn shared temporal dynamics represents a primary strength of these models, their evaluation on historical archives often permits the exploitation of observed global shocks, which violates the independence required for valid benchmarking. We introduce TS-Arena, a platform that restores the operational integrity of forecasting by treating the genuinely unknown future as the definitive test environment. By implementing a pre-registration mechanism on live data streams, the platform ensures that evaluation targets remain physically non-existent during inference, thereby enforcing a strict global temporal split. This methodology establishes a moving temporal frontier that prevents historical contamination and provides an authentic assessment of model generalization. Initially applied within the energy sector, TS-Arena provides a sustainable infrastructure for comparing foundation models under real-world constraints. A prototype of the platform is available at https://huggingface.co/spaces/DAG-UPB/TS-Arena.

Getting In Contract with Large Language Models -- An Agency Theory Perspective On Large Language Model Alignment

Sep 09, 2025

Abstract: Adopting large language models (LLMs) in organizations potentially revolutionizes our lives and work. However, they can generate off-topic, discriminatory, or harmful content. This AI alignment problem often stems from misspecifications during LLM adoption that go unnoticed by the principal due to the LLM's black-box nature. While various research disciplines have investigated AI alignment, they neither address the information asymmetries between organizational adopters and black-box LLM agents nor consider organizational AI adoption processes. Therefore, we propose LLM ATLAS (LLM Agency Theory-Led Alignment Strategy), a conceptual framework grounded in agency (contract) theory, to mitigate alignment problems during organizational LLM adoption. We conduct a conceptual literature analysis using the organizational LLM adoption phases and agency theory as concepts. Our approach results in (1) an extended literature analysis process specific to AI alignment methods during organizational LLM adoption and (2) a first LLM alignment problem-solution space.

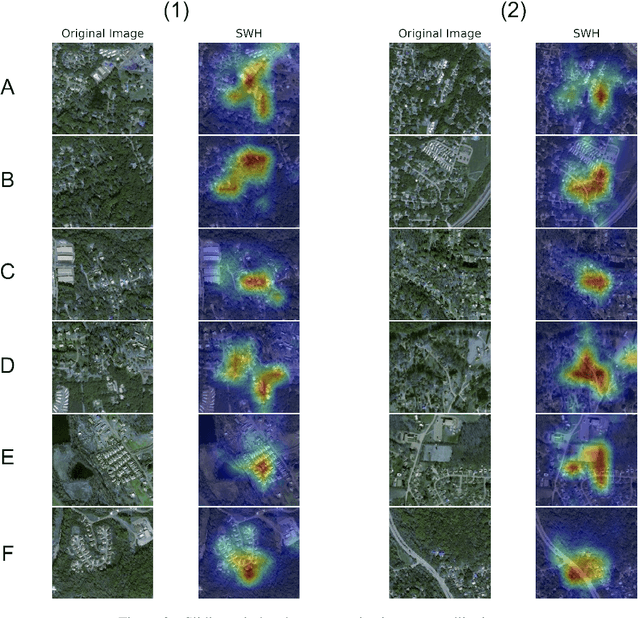

A Comparison of Multi-View Learning Strategies for Satellite Image-Based Real Estate Appraisal

May 11, 2021

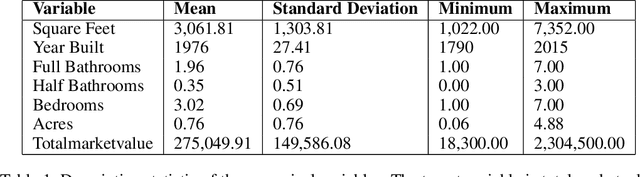

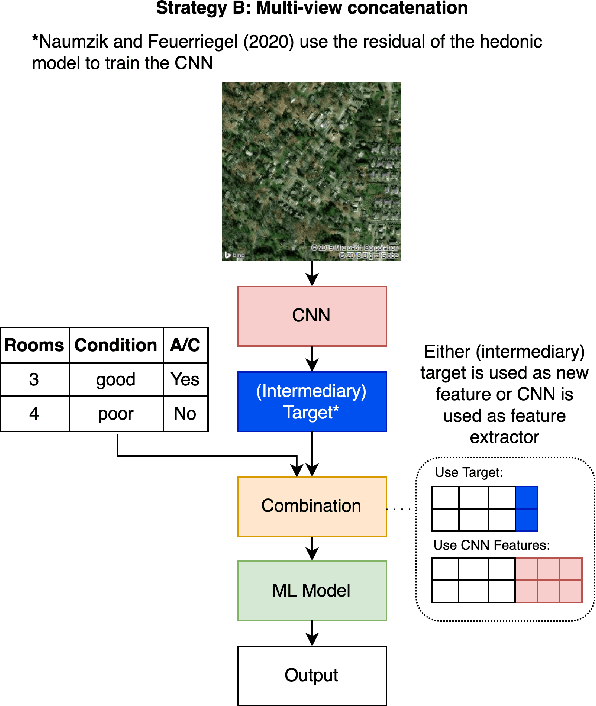

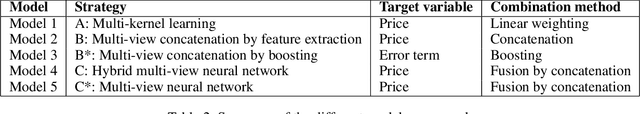

Abstract: In the house credit process, banks and lenders rely on a fast and accurate estimation of a real estate price to determine the maximum loan value. Real estate appraisal is often based on relational data, capturing the hard facts of the property. Yet, models benefit strongly from including image data, capturing additional soft factors. Combining the different data types requires a multi-view learning method. Therefore, the question arises which strengths and weaknesses different multi-view learning strategies have. In our study, we test multi-kernel learning, multi-view concatenation and multi-view neural networks on real estate data and satellite images from Asheville, NC. Our results suggest that multi-view learning improves predictive performance by up to 13% in MAE. Multi-view neural networks perform best, but result in opaque black-box models. For users seeking interpretability, hybrid multi-view neural networks or a boosting strategy are suitable alternatives.

Location, location, location: Satellite image-based real-estate appraisal

Jun 04, 2020

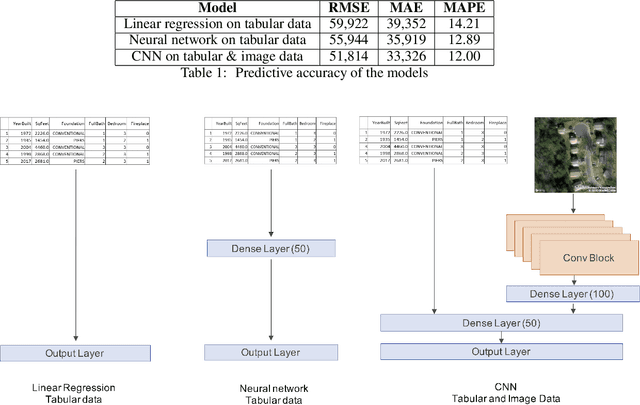

Abstract: Buying a home is one of the most important buying decisions people have to make in their lives. The latest research on real-estate appraisal focuses on incorporating image data in addition to structured data into the modeling process. This research measures the prediction performance of satellite images and structured data by using convolutional neural networks. The resulting trained CNN model performs 7% better in MAE than the advanced baseline of a neural network trained on structured data. Moreover, a sliding-window heatmap provides visual interpretability of satellite images, revealing that neighborhood structures are essential in the price estimation.

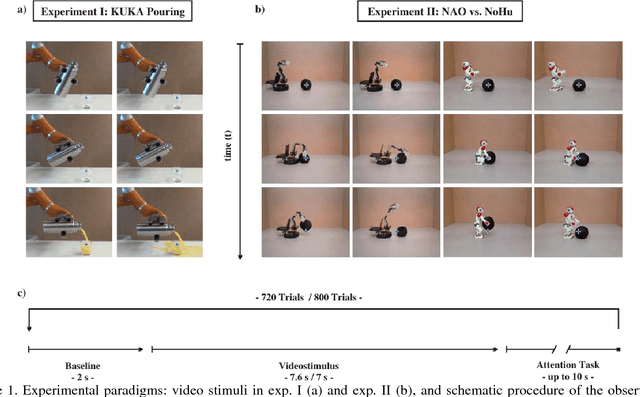

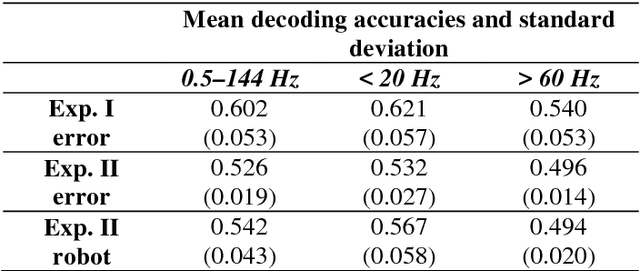

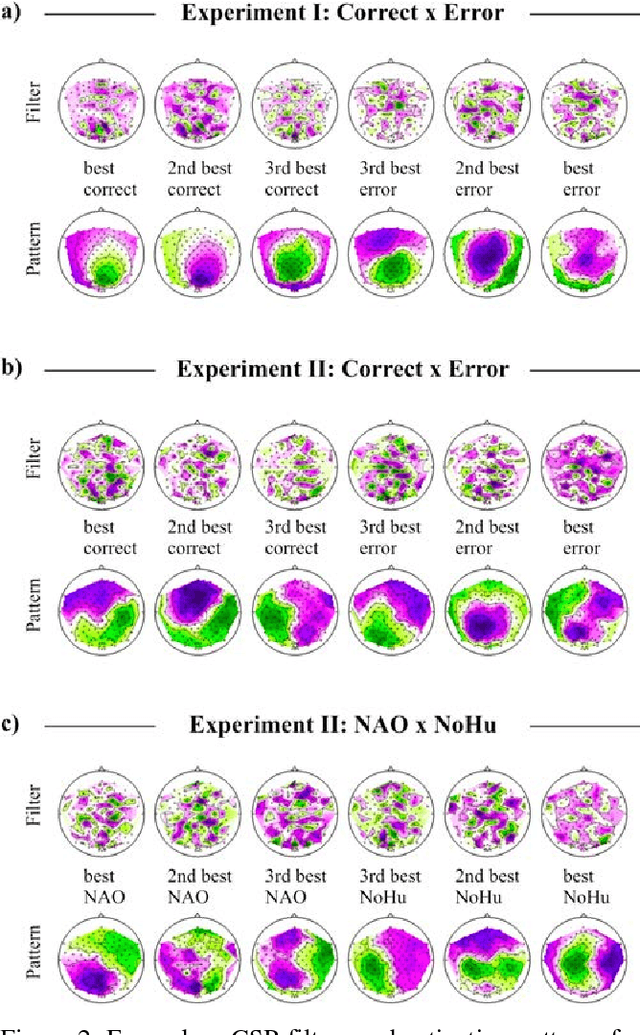

Brain Responses During Robot-Error Observation

Aug 16, 2017

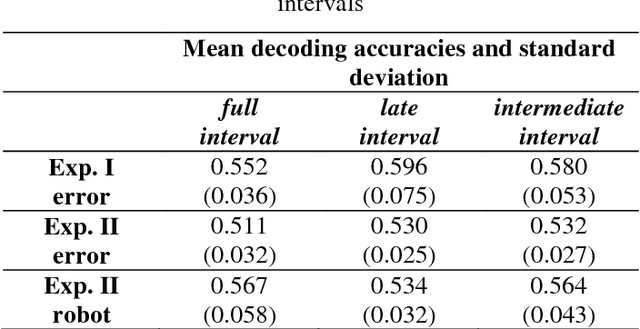

Abstract: Brain-controlled robots are a promising new type of assistive device for severely impaired persons. However, little is known about how to optimize the interaction of humans and brain-controlled robots. Information about the human's perceived correctness of robot performance might provide a useful teaching signal for adaptive control algorithms and thus help enhance robot control. Here, we studied whether watching robots perform erroneous vs. correct actions elicits differential brain responses that can be decoded from single trials of electroencephalographic (EEG) recordings, and whether brain activity during human-robot interaction is modulated by the robot's visual similarity to a human. To address these topics, we designed two experiments. In experiment I, participants watched a robot arm pour liquid into a cup. The robot performed the action either erroneously or correctly, i.e. it either spilled some liquid or not. In experiment II, participants observed two different types of robots, humanoid and non-humanoid, grabbing a ball. The robots either managed to grab the ball or not. We recorded high-resolution EEG during the observation tasks in both experiments to train a Filter Bank Common Spatial Pattern (FBCSP) pipeline on the multivariate EEG signal and decode the correctness of the observed action and the type of the observed robot. Our findings show that it was possible to decode both correctness and robot type for the majority of participants significantly, although often just slightly, above chance level. Our findings suggest that non-invasive recordings of brain responses elicited when observing robots indeed contain decodable information about the correctness of the robot's action and the type of observed robot.
