Oliver Bimber

Through-Foliage Surface-Temperature Reconstruction for early Wildfire Detection

Nov 16, 2025

Abstract: We introduce a novel method for reconstructing surface temperatures through occluding forest vegetation by combining signal processing and machine learning. Our goal is to enable fully automated aerial wildfire monitoring using autonomous drones, allowing for the early detection of ground fires before smoke or flames are visible. While synthetic aperture (SA) sensing mitigates occlusion from the canopy and sunlight, it introduces thermal blur that obscures the actual surface temperatures. To address this, we train a visual state space model to recover the subtle thermal signals of partially occluded soil and fire hotspots from this blurred data. A key challenge was the scarcity of real-world training data. We overcome this by integrating a latent diffusion model into a vector-quantized model to generate a large volume of realistic surface temperature simulations from real wildfire recordings, which we further expanded through temperature augmentation and procedural thermal forest simulation. On simulated data across varied ambient and surface temperatures, forest densities, and sunlight conditions, our method reduced the RMSE by a factor of 2 to 2.5 compared to conventional thermal and uncorrected SA imaging. In field experiments focused on high-temperature hotspots, the improvement was even larger, with a 12.8-fold RMSE gain over conventional thermal images and a 2.6-fold gain over uncorrected SA images. We also demonstrate our model's generalization to other thermal signals, such as human signatures for search and rescue. Because simple thresholding is often inadequate for detecting subtle thermal signals, morphological characteristics are equally essential for accurate classification. Our experiments demonstrated another clear advantage: we reconstructed the complete morphology of fire and human signatures, whereas conventional imaging fails under partial occlusion.
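The reported factors are easiest to read as ratios of root-mean-square errors against a reference temperature map. The sketch below assumes that interpretation of "RMSE gain"; the function names and the toy values are illustrative only, not data from the paper.

```python
import numpy as np

def rmse(estimate, reference):
    """Root-mean-square error between an estimated and a reference temperature map."""
    diff = np.asarray(estimate, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))

def rmse_gain(baseline, method, reference):
    """How many times smaller the method's RMSE is than the baseline's (assumed definition)."""
    return rmse(baseline, reference) / rmse(method, reference)

# Toy example with made-up values:
reference = np.zeros((4, 4))   # ground-truth surface temperatures (offset removed)
baseline = reference + 5.0     # e.g. a blurred thermal image, 5 K off everywhere
method = reference + 2.0       # a reconstruction, 2 K off everywhere
print(rmse_gain(baseline, method, reference))  # 2.5
```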

DeepForest: Sensing Into Self-Occluding Volumes of Vegetation With Aerial Imaging

Feb 04, 2025

Abstract: Access to below-canopy volumetric vegetation data is crucial for understanding ecosystem dynamics. We address the long-standing inability of remote sensing to penetrate deep into dense canopy layers. LiDAR and radar are currently considered the primary options for measuring 3D vegetation structures, while cameras can only extract the reflectance and depth of the top layers. Using conventional, high-resolution aerial images, our approach allows sensing deep into self-occluding vegetation volumes, such as forests. It is similar in spirit to the imaging process of wide-field microscopy but can handle much larger scales and strong occlusion. We scan focal stacks by synthetic-aperture imaging with drones and reduce out-of-focus signal contributions using pre-trained 3D convolutional neural networks. The resulting volumetric reflectance stacks contain low-frequency representations of the vegetation volume. Combining multiple reflectance stacks from various spectral channels provides insights into plant health, growth, and environmental conditions throughout the entire vegetation volume.
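Synthetic-aperture focal stacks are commonly computed by shift-and-add: each image is shifted by the disparity that a chosen focal plane would produce, and all images are averaged, so the focal plane aligns while content at other depths blurs out. A minimal sketch, assuming a straight horizontal flight line, rectified nadir images, a known focal length in pixels, and integer-pixel shifts; the function names are hypothetical, and the 3D-CNN step that suppresses out-of-focus contributions is not shown.

```python
import numpy as np

def integral_image(images, cam_x_m, focal_px, depth_m, ref_x_m=0.0):
    """Average images after undoing the disparity of a plane at depth_m.

    images:  (N, H, W) array, one image per camera position along x
    cam_x_m: (N,) camera positions along the flight line in meters
    """
    out = np.zeros(images.shape[1:], dtype=float)
    for img, x in zip(images, cam_x_m):
        # A point on the focal plane appears shifted by -focal_px * baseline / depth pixels;
        # rolling by +shift brings it back to its reference-view column.
        shift = int(round(focal_px * (x - ref_x_m) / depth_m))
        # np.roll wraps around the border; real code would pad or crop instead.
        out += np.roll(img, shift, axis=1)
    return out / len(images)

def focal_stack(images, cam_x_m, focal_px, depths_m):
    """One integral image per focal depth, stacked into a (D, H, W) volume."""
    return np.stack([integral_image(images, cam_x_m, focal_px, z) for z in depths_m])

# Synthetic check: a bright point at 25 m depth, seen from five positions 0.5 m apart.
xs = np.array([-1.0, -0.5, 0.0, 0.5, 1.0])
imgs = np.zeros((5, 8, 64))
for i, x in enumerate(xs):
    imgs[i, 4, 32 - int(round(100 * x / 25))] = 1.0
stack = focal_stack(imgs, xs, focal_px=100, depths_m=[25, 50])
print(stack[0].max(), stack[1].max())  # 1.0 (in focus) vs 0.2 (smeared over 5 pixels)
```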

An Autonomous Drone Swarm for Detecting and Tracking Anomalies among Dense Vegetation

Jul 15, 2024

Abstract: Swarms of drones offer an increased sensing aperture, and having them mimic the behavior of natural swarms enhances sampling by adapting the aperture to local conditions. We demonstrate that such an approach makes detecting and tracking heavily occluded targets practically feasible. While object classification applied to conventional aerial images generalizes poorly to the randomness of occlusion and is therefore inefficient even under lightly occluded conditions, anomaly detection applied to synthetic aperture integral images is robust for dense vegetation, such as forests, and is independent of pre-trained classes. Our autonomous swarm searches the environment for occurrences of the unknown or unexpected, tracking them while continuously adapting its sampling pattern to optimize for local viewing conditions. In our real-life field experiments with a swarm of six drones, we achieved an average positional accuracy of 0.39 m with an average precision of 93.2% and an average recall of 95.9%. Here, adapted particle swarm optimization considers detection confidences and predicted target appearance. We show that sensor noise can effectively be included in the synthetic aperture image integration process, removing the need for a computationally costly optimization of high-dimensional parameter spaces. Finally, we present a complete hardware and software framework that supports low-latency transmission (approx. 80 ms round-trip time) and fast processing (approx. 600 ms per formation step) of extensive (70-120 Mbit/s) video and telemetry data, as well as swarm control for swarms of up to ten drones.

Reciprocal Visibility

Feb 10, 2024

Abstract: We propose a guidance strategy that uses pre-scanned point-cloud data to optimize real-time synthetic aperture sampling for occlusion removal with drones. Depth information can be used to compute the visibility of points on the ground for individual drone positions in the air. Inspired by Helmholtz reciprocity, we introduce reciprocal visibility to determine the dual situation: the visibility of potential sampling positions in the air from given points of interest on the ground. The resulting visibility map encodes which point on the ground is visible, and to what degree, from any position in the air. Based on such a map, we demonstrate a first greedy sampling optimization.
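A visibility map and a greedy sampling step of this kind can be sketched in 2D (x, altitude). The modeling choices here are our own assumptions, not the paper's: the point cloud is voxelized into a density grid whose cells attenuate rays multiplicatively, visibility is the transmittance along the straight segment between a ground point and an air position, and the greedy objective is the expected number of ground points seen at least once. All function names are hypothetical.

```python
import numpy as np

def transmittance(density, ground_pt, air_pt, steps=512):
    """Fraction of light passing from ground_pt to air_pt through a 2D density grid.

    density[ix, iz] in [0, 1] is the occlusion of the unit cell [ix, ix+1) x [iz, iz+1).
    """
    g, a = np.asarray(ground_pt, float), np.asarray(air_pt, float)
    nx, nz = density.shape
    seen, t = set(), 1.0
    for s in np.linspace(0.0, 1.0, steps)[1:]:   # skip the ground point itself
        x, z = g + s * (a - g)
        if 0 <= x < nx and 0 <= z < nz:
            cell = (int(x), int(z))
            if cell not in seen:                 # attenuate once per traversed cell
                seen.add(cell)
                t *= 1.0 - density[cell]
    return t

def visibility_map(density, ground_pts, air_pts):
    """V[i, j]: visibility of ground point i from air position j (the reciprocal view)."""
    return np.array([[transmittance(density, g, a) for a in air_pts] for g in ground_pts])

def greedy_sampling(V, k):
    """Pick k air positions, each maximizing the expected number of newly seen ground points."""
    unseen = np.ones(V.shape[0])   # probability that each ground point is still unseen
    chosen = []
    for _ in range(k):
        gains = unseen @ V
        if chosen:
            gains[chosen] = -np.inf   # never pick the same position twice
        j = int(np.argmax(gains))
        chosen.append(j)
        unseen *= 1.0 - V[:, j]
    return chosen
```

Sampling the segment at fixed steps is the simplest traversal; an exact grid walk (e.g. Amanatides-Woo) would avoid missing cells that a ray only clips at a corner.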

Fusion of Single and Integral Multispectral Aerial Images

Nov 29, 2023

Abstract: We present a novel hybrid (model- and learning-based) architecture for fusing the most significant features from conventional aerial images and integral aerial images, which result from synthetic aperture sensing for removing occlusion caused by dense vegetation. It combines the environment's spatial references with features of unoccluded targets. Our method outperforms the state of the art, does not require manually tuned parameters, can be extended to arbitrary numbers and combinations of spectral channels, and is reconfigurable to address different use cases.

Stereoscopic Depth Perception Through Foliage

Oct 24, 2023

Abstract: Both humans and computational methods struggle to discriminate the depths of objects hidden beneath foliage. However, such discrimination becomes feasible when we combine computational optical synthetic aperture sensing with the human ability to fuse stereoscopic images. For object identification tasks, as required in search and rescue, wildlife observation, surveillance, and early wildfire detection, depth helps differentiate true from false findings, such as people, animals, or vehicles vs. sun-heated patches at ground level or in the tree crowns, or ground fires vs. tree trunks. We used video captured by a drone above dense woodland to test users' ability to discriminate depth. We found that this is impossible when viewing monoscopic video and relying on motion parallax. The same was true with stereoscopic video because of the occlusions caused by foliage. However, when synthetic aperture sensing was used to reduce occlusions and disparity-scaled stereoscopic video was presented, human observers successfully discriminated depth, whereas computational (stereoscopic matching) methods were unsuccessful. This shows the potential of systems that exploit the synergy between computational methods and human vision to perform tasks that neither can perform alone.
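Why a wider stereo baseline makes depth differences perceivable can be seen from the standard rectified pinhole-stereo relation d = f·b/Z (disparity d and focal length f in pixels, baseline b, depth Z). We assume here, for illustration only, that disparity scaling corresponds to a larger virtual baseline between the two views; the numbers below are hypothetical.

```python
def disparity_px(focal_px, baseline_m, depth_m):
    """Rectified pinhole stereo: disparity in pixels of a point at depth_m."""
    return focal_px * baseline_m / depth_m

def depth_cue_px(focal_px, baseline_m, z_a, z_b):
    """Disparity difference between two depths, i.e. the cue a viewer has to perceive."""
    return abs(disparity_px(focal_px, baseline_m, z_a) - disparity_px(focal_px, baseline_m, z_b))

# Hypothetical: tree crown 20 m and forest floor 40 m below a camera with f = 1000 px.
for b in (0.1, 1.0):
    print(b, depth_cue_px(1000.0, b, 20.0, 40.0))
```

The cue grows linearly with the baseline (2.5 px at 0.1 m, 25 px at 1 m), which is why scaling disparity can turn an imperceptible depth difference into a visible one.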

Synthetic Aperture Anomaly Imaging

Apr 26, 2023

Abstract: Previous research has shown that, in the presence of foliage occlusion, anomaly detection performs significantly better when applied to integral images resulting from synthetic aperture imaging than when applied to conventional aerial images. In this article, we hypothesize and demonstrate that integrating detected anomalies is even more effective than detecting anomalies in integrals. This results in enhanced occlusion removal, outlier suppression, and higher chances of visually as well as computationally detecting targets that are otherwise occluded. Our hypothesis was validated through both simulations and field experiments. We also present a real-time application that makes our findings practically available to blue-light organizations and others using commercial drone platforms. It is designed to address use cases that suffer from strong occlusion caused by vegetation, such as search and rescue, wildlife observation, early wildfire detection, and surveillance.
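The two processing orders compared here can be sketched side by side. We assume the classic global Reed-Xiaoli (RX) detector (the squared Mahalanobis distance of each pixel from the image's mean spectrum) as the anomaly detector, and we assume the single images are already registered to the focal plane; both are illustrative choices, not necessarily the article's exact pipeline.

```python
import numpy as np

def rx_scores(img, eps=1e-6):
    """Global RX score: squared Mahalanobis distance of each pixel to the image mean."""
    h, w, c = img.shape
    x = img.reshape(-1, c).astype(float)
    d = x - x.mean(axis=0)
    # Regularized background covariance (atleast_2d handles single-channel images).
    cov = np.atleast_2d(np.cov(x, rowvar=False)) + eps * np.eye(c)
    inv = np.linalg.inv(cov)
    return np.einsum("ij,jk,ik->i", d, inv, d).reshape(h, w)

def anomalies_of_integral(aligned):
    """Order A: integrate first, then detect. aligned: (N, H, W, C) registered images."""
    return rx_scores(aligned.mean(axis=0))

def integral_of_anomalies(aligned):
    """Order B: detect in every single image, then integrate the anomaly maps."""
    return np.mean([rx_scores(frame) for frame in aligned], axis=0)

# Hypothetical registered frames: low noise plus a target at focal-plane pixel (5, 7).
rng = np.random.default_rng(0)
frames = 0.01 * rng.standard_normal((4, 32, 32, 3))
frames[:, 5, 7] += 1.0
score_a = anomalies_of_integral(frames)
score_b = integral_of_anomalies(frames)
```

In order B, a target that is occluded in some frames still contributes strong per-frame evidence wherever it is visible, which is the intuition behind integrating anomalies rather than detecting them in integrals.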

Synthetic Aperture Sensing for Occlusion Removal with Drone Swarms

Dec 30, 2022

Abstract: We demonstrate how efficient autonomous drone swarms can be in detecting and tracking occluded targets in densely forested areas, such as lost people during search and rescue missions. Exploration and optimization of local viewing conditions, such as occlusion density and target view obliqueness, provide much faster and much more reliable results than previous blind sampling strategies based on predefined waypoints. An adapted real-time particle swarm optimization and a new objective function are presented that can deal with dynamic and highly random through-foliage conditions. Synthetic aperture sensing is our fundamental sampling principle, and drone swarms are employed to approximate the optical signals of extremely wide and adaptable airborne lenses.
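For readers unfamiliar with particle swarm optimization, the textbook global-best PSO update is sketched below. It is not the article's adapted real-time variant or its objective function; the parameter values are common defaults, and the quadratic cost in the usage line is a hypothetical stand-in for a viewing-condition score.

```python
import numpy as np

def pso(cost, bounds, n_particles=30, iters=100, w=0.729, c1=1.494, c2=1.494, seed=0):
    """Textbook global-best particle swarm optimization (minimization).

    bounds: sequence of (low, high) pairs, one per dimension, e.g. drone x/y limits.
    """
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(bounds, dtype=float).T
    x = rng.uniform(lo, hi, size=(n_particles, lo.size))   # particle positions
    v = np.zeros_like(x)                                    # particle velocities
    pbest, pbest_cost = x.copy(), np.array([cost(p) for p in x])
    gbest = pbest[np.argmin(pbest_cost)].copy()
    for _ in range(iters):
        r1, r2 = rng.random(x.shape), rng.random(x.shape)
        # Inertia + pull toward each particle's own best + pull toward the swarm's best.
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        x = np.clip(x + v, lo, hi)                          # stay inside the search area
        c = np.array([cost(p) for p in x])
        better = c < pbest_cost
        pbest[better], pbest_cost[better] = x[better], c[better]
        gbest = pbest[np.argmin(pbest_cost)].copy()
    return gbest, float(pbest_cost.min())

# Hypothetical cost with its minimum at (3, -2), e.g. the position with the least occlusion.
best, best_cost = pso(lambda p: float(np.sum((p - np.array([3.0, -2.0])) ** 2)),
                      [(-10, 10), (-10, 10)])
```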

Evaluation of Color Anomaly Detection in Multispectral Images For Synthetic Aperture Sensing

Nov 08, 2022

Abstract: In this article, we evaluate unsupervised anomaly detection methods in multispectral images obtained with a wavelength-independent synthetic aperture sensing technique called Airborne Optical Sectioning (AOS). Focusing on search and rescue missions that use drones to locate missing or injured persons in dense forest and that require real-time operation, we evaluate the runtime versus quality of these methods. Furthermore, we show that color anomaly detection methods that normally operate in the visual range always benefit from an additional far-infrared (thermal) channel. We also show that, even without additional thermal bands, the choice of color space in the visual range already affects the detection results. Color spaces such as HSV and HLS have the potential to outperform the widely used RGB color space, especially when color anomaly detection is used in forest-like environments.
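Converting the visual channels to another color space and appending a thermal channel are both per-pixel operations; the resulting feature stack can then be passed to any unsupervised anomaly detector. A minimal sketch using Python's standard `colorsys` module; the function names are hypothetical, and the evaluated detectors themselves are not reproduced.

```python
import colorsys
import numpy as np

# Per-pixel converters from RGB in [0, 1]; "hsv" and "hls" use the stdlib colorsys module.
_CONVERTERS = {
    "rgb": lambda r, g, b: (r, g, b),
    "hsv": colorsys.rgb_to_hsv,
    "hls": colorsys.rgb_to_hls,
}

def to_color_space(rgb, space="hsv"):
    """Convert an (H, W, 3) RGB image with values in [0, 1] to the given color space."""
    conv = _CONVERTERS[space]
    flat = np.asarray(rgb, dtype=float).reshape(-1, 3)
    out = np.array([conv(*px) for px in flat], dtype=float)
    return out.reshape(np.shape(rgb))

def stack_features(rgb, thermal=None, space="hsv"):
    """(H, W, 3) color features, plus an optional (H, W) thermal channel as a 4th feature."""
    feats = to_color_space(rgb, space)
    if thermal is not None:
        feats = np.concatenate([feats, np.asarray(thermal, dtype=float)[..., None]], axis=-1)
    return feats
```

Note that hue is circular (values near 0 and near 1 are both red), so a detector that measures plain Euclidean or Mahalanobis distances treats reddish pixels inconsistently unless hue is handled specially.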

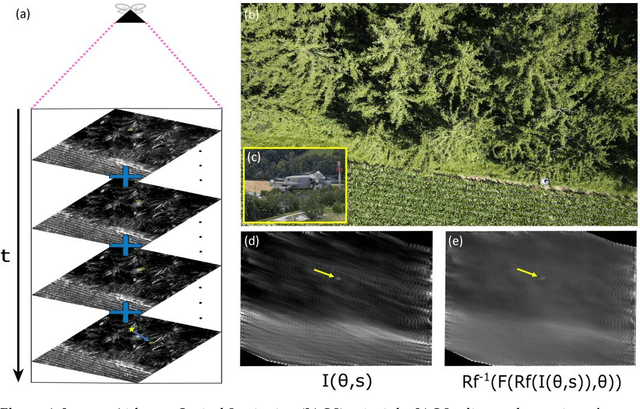

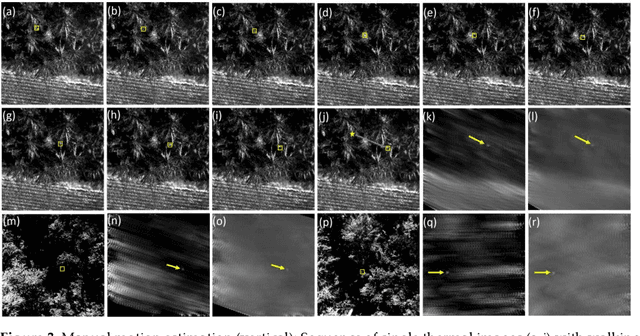

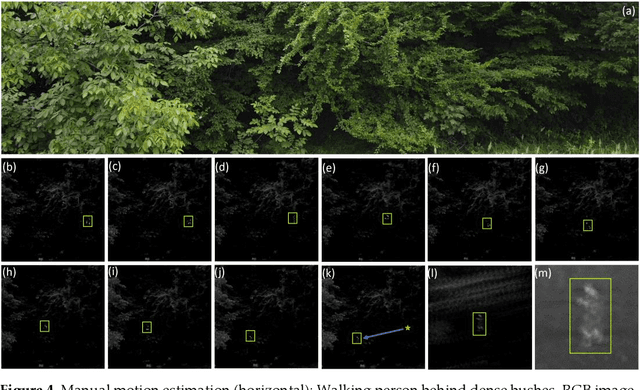

Inverse Airborne Optical Sectioning

Jul 27, 2022

Abstract: We present Inverse Airborne Optical Sectioning (IAOS), an optical analogy to Inverse Synthetic Aperture Radar (ISAR). Moving targets, such as walking people, that are heavily occluded by vegetation can be made visible and tracked with a stationary optical sensor (e.g., a camera drone hovering above a forest). We introduce the principles of IAOS (i.e., inverse synthetic aperture imaging), explain how the signal of occluders can be further suppressed by filtering the Radon transform of the image integral, and show how target motion parameters can be estimated manually and automatically. Finally, we show that while tracking occluded targets in conventional aerial images is infeasible, it can be done efficiently in integral images that result from IAOS.
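The core of inverse synthetic aperture imaging with a stationary camera can be sketched in 1D: frames are shifted back along the target's assumed motion and averaged, so the moving target integrates coherently while static occluders smear out. The brute-force velocity search, its "brightest integral pixel" criterion, and the synthetic scene values are our own illustrative assumptions; the Radon-transform filtering described in the abstract is not shown.

```python
import numpy as np

def inverse_sa_integral(frames, v):
    """Undo an assumed target motion of v pixels/frame along x, then average the frames."""
    # np.roll(f, -v * t) moves content at x0 + v*t back to x0 (wrapping at the border).
    return np.mean([np.roll(f, -v * t, axis=-1) for t, f in enumerate(frames)], axis=0)

def estimate_velocity(frames, candidates):
    """Brute-force search: keep the velocity whose integral has the brightest pixel."""
    scores = [inverse_sa_integral(frames, v).max() for v in candidates]
    return candidates[int(np.argmax(scores))]

def simulate(n_frames=16, width=64, x0=10, v=2):
    """Hypothetical 1D thermal scene: static foliage (0.3), ground (0.1), warm walker (1.0)."""
    foliage = np.isin(np.arange(width) % 5, [0, 3])   # fixed occluder pattern, 40% coverage
    frames = []
    for t in range(n_frames):
        frame = np.where(foliage, 0.3, 0.1)
        x = x0 + v * t
        if not foliage[x]:          # the walker is only seen through gaps in the foliage
            frame[x] = 1.0
        frames.append(frame)
    return np.array(frames)

frames = simulate()
v_hat = estimate_velocity(frames, list(range(-4, 5)))
print(v_hat)                                   # 2
print(inverse_sa_integral(frames, v_hat)[10])  # about 0.694: the walker recovered at x0
```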
