Indrajit Kurmi

Synthetic Aperture Sensing for Occlusion Removal with Drone Swarms

Dec 30, 2022

Abstract:We demonstrate how efficient autonomous drone swarms can be in detecting and tracking occluded targets in densely forested areas, such as lost people during search and rescue missions. Exploration and optimization of local viewing conditions, such as occlusion density and target view obliqueness, provide much faster and much more reliable results than previous, blind sampling strategies that are based on pre-defined waypoints. An adapted real-time particle swarm optimization and a new objective function are presented that are able to deal with dynamic and highly random through-foliage conditions. Synthetic aperture sensing is our fundamental sampling principle, and drone swarms are employed to approximate the optical signals of extremely wide and adaptable airborne lenses.
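For readers unfamiliar with particle swarm optimization, the following is a minimal sketch of the textbook global-best variant. It is not the adapted real-time version described in the abstract, and it does not use the paper's objective function: the `toy_objective`, the parameter values, and all function names are assumptions chosen for illustration only.

```python
import random

def pso_minimize(objective, bounds, n_particles=20, n_iters=200,
                 inertia=0.7, c_cognitive=1.5, c_social=1.5, seed=0):
    """Textbook global-best PSO; returns (best_position, best_value)."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]              # per-particle best positions
    best_val = [objective(p) for p in pos]      # per-particle best values
    g = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[g][:], best_val[g]  # swarm-wide best
    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (inertia * vel[i][d]
                             + c_cognitive * r1 * (best_pos[i][d] - pos[i][d])
                             + c_social * r2 * (g_pos[d] - pos[i][d]))
                lo, hi = bounds[d]
                # Move the particle and clamp it to the search area.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val

def toy_objective(p):
    """Hypothetical stand-in objective with its minimum at (3, -1)."""
    return (p[0] - 3.0) ** 2 + (p[1] + 1.0) ** 2

position, value = pso_minimize(toy_objective, [(-10.0, 10.0), (-10.0, 10.0)])
```

In a swarm setting each particle would correspond to a drone position and the objective would be evaluated from what the drones currently observe; the sketch leaves those parts out.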

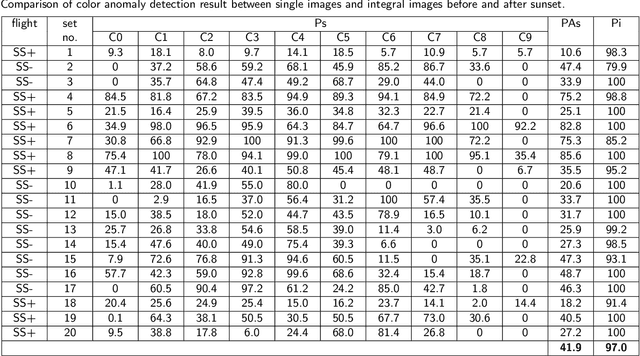

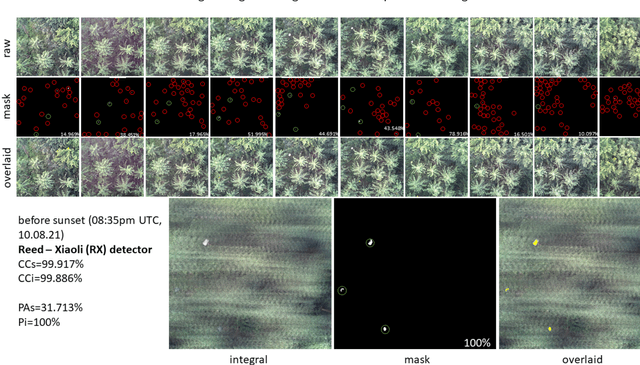

Evaluation of Color Anomaly Detection in Multispectral Images For Synthetic Aperture Sensing

Nov 08, 2022

Abstract:In this article, we evaluate unsupervised anomaly detection methods in multispectral images obtained with a wavelength-independent synthetic aperture sensing technique, called Airborne Optical Sectioning (AOS). With a focus on search and rescue missions that apply drones to locate missing or injured persons in dense forest and require real-time operation, we evaluate runtime vs. quality of these methods. Furthermore, we show that color anomaly detection methods that normally operate in the visual range always benefit from an additional far infrared (thermal) channel. We also show that, even without additional thermal bands, the choice of color space in the visual range already has an impact on the detection results. Color spaces like HSV and HLS have the potential to outperform the widely used RGB color space, especially when color anomaly detection is used for forest-like environments.
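To make the idea of scoring color anomalies in different color spaces concrete, here is a small sketch. It uses a per-channel z-score, which is a simplified stand-in and not one of the methods evaluated in the article. The sample pixels, the function names, and the decision to treat hue as a linear (non-circular) value are all assumptions.

```python
import colorsys
from statistics import mean, pstdev

def zscore_anomaly_scores(pixels):
    """Sum of squared per-channel z-scores for each pixel (higher = more anomalous)."""
    channels = list(zip(*pixels))
    means = [mean(c) for c in channels]
    stds = [pstdev(c) for c in channels]
    scores = []
    for px in pixels:
        score = 0.0
        for value, m, s in zip(px, means, stds):
            if s > 0:  # skip channels without any variation
                score += ((value - m) / s) ** 2
        scores.append(score)
    return scores

def to_hsv(pixels):
    """Convert RGB triples in [0, 1] to HSV triples in [0, 1]."""
    return [colorsys.rgb_to_hsv(r, g, b) for r, g, b in pixels]

# Hypothetical data: five greenish "forest" pixels and one reddish pixel (index 5).
SAMPLE_PIXELS = [
    (0.10, 0.40, 0.10), (0.12, 0.45, 0.11), (0.08, 0.35, 0.09),
    (0.15, 0.50, 0.14), (0.11, 0.42, 0.10), (0.80, 0.20, 0.20),
]

rgb_scores = zscore_anomaly_scores(SAMPLE_PIXELS)
hsv_scores = zscore_anomaly_scores(to_hsv(SAMPLE_PIXELS))
```

The same scoring function runs on either representation, so swapping the color space only changes the input. A thermal channel could be appended to each tuple in the same way.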

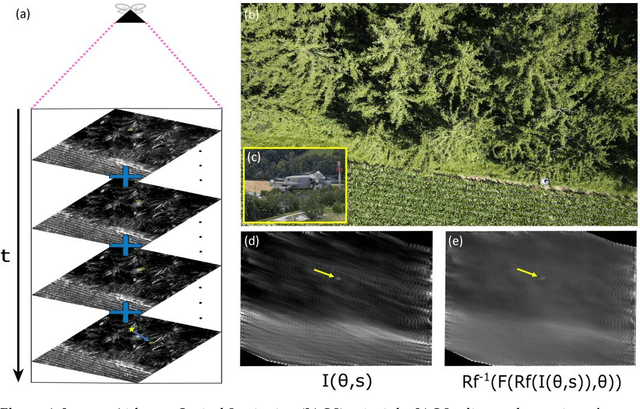

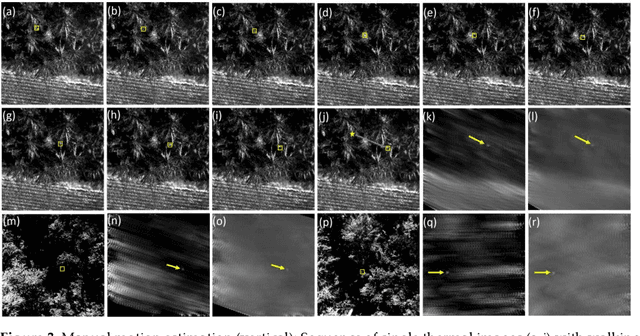

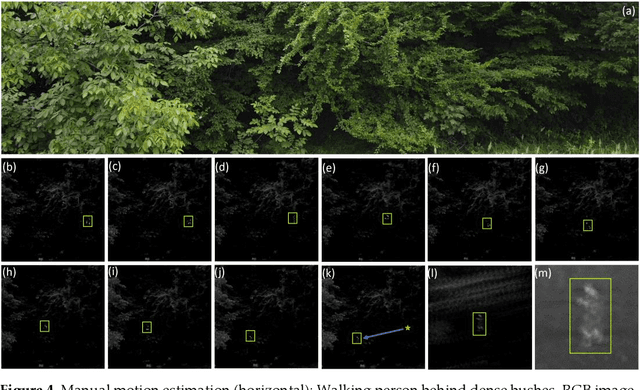

Inverse Airborne Optical Sectioning

Jul 27, 2022

Abstract:We present Inverse Airborne Optical Sectioning (IAOS), an optical analogy to Inverse Synthetic Aperture Radar (ISAR). Moving targets, such as walking people, that are heavily occluded by vegetation can be made visible and tracked with a stationary optical sensor (e.g., a hovering camera drone above forest). We introduce the principles of IAOS (i.e., inverse synthetic aperture imaging), explain how the signal of occluders can be further suppressed by filtering the Radon transform of the image integral, and present how targets' motion parameters can be estimated manually and automatically. Finally, we show that while tracking occluded targets in conventional aerial images is infeasible, it becomes efficiently possible in integral images that result from IAOS.
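The integration principle can be illustrated with a toy 1D simulation: frames from a stationary sensor are shifted according to an assumed target velocity and then averaged, so that the target accumulates at one position while randomly placed occluders average out. This is only a sketch under strong assumptions (1D frames, constant integer velocity, independent occluders per frame); the function names and signal values are hypothetical, and the Radon-transform filtering mentioned in the abstract is not covered.

```python
import random

def simulate_frames(width, n_frames, start, velocity, occlusion, rng):
    """Toy frames: a target (1.0) moves `velocity` px/frame behind random occluders (0.3)."""
    frames = []
    for t in range(n_frames):
        row = [0.0] * width
        target_x = start + velocity * t
        if 0 <= target_x < width:
            row[target_x] = 1.0
        for x in range(width):
            if rng.random() < occlusion:
                row[x] = 0.3  # an occluder hides whatever is behind it
        frames.append(row)
    return frames

def integrate(frames, velocity):
    """Average the frames after compensating for the assumed target motion."""
    width = len(frames[0])
    integral = []
    for x in range(width):
        # Pixel x of the reference frame corresponds to x + velocity * t in frame t.
        samples = [row[x + velocity * t] for t, row in enumerate(frames)
                   if 0 <= x + velocity * t < width]
        integral.append(sum(samples) / len(samples) if samples else 0.0)
    return integral

rng = random.Random(1)
frames = simulate_frames(width=64, n_frames=30, start=5, velocity=1,
                         occlusion=0.5, rng=rng)
integral = integrate(frames, velocity=1)
peak = max(range(len(integral)), key=integral.__getitem__)
```

With the correct velocity, `peak` is expected to coincide with the target's start position, even though the target is hidden in roughly half of the individual frames.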

On the Role of Field of View for Occlusion Removal with Airborne Optical Sectioning

Apr 28, 2022

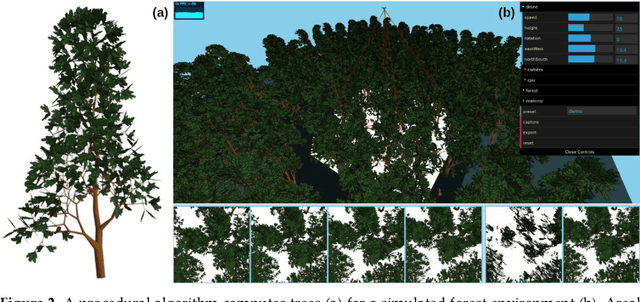

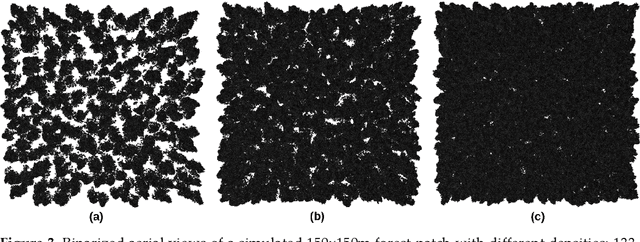

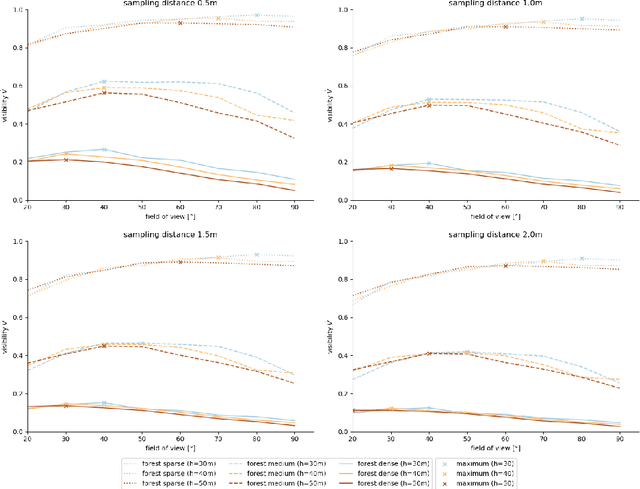

Abstract:Occlusion caused by vegetation is an essential problem for remote sensing applications in areas such as search and rescue, wildfire detection, wildlife observation, surveillance, border control, and others. Airborne Optical Sectioning (AOS) is an optical, wavelength-independent synthetic aperture imaging technique that supports computational occlusion removal in real-time. It can be applied with manned or unmanned aircraft, such as drones. In this article, we demonstrate a relationship between forest density and field of view (FOV) of applied imaging systems. This finding was made with the help of a simulated procedural forest model, which allows more realistic occlusion properties to be considered than our previous statistical model. While AOS has been explored with automatic and autonomous research prototypes in the past, we present a free AOS integration for DJI systems. It enables bluelight organizations and others to use and explore AOS with compatible, manually operated, off-the-shelf drones. The (digitally cropped) default FOV for this implementation was chosen based on our new finding.

Through-Foliage Tracking with Airborne Optical Sectioning

Nov 30, 2021

Abstract:Detecting and tracking moving targets through foliage is difficult, and in many cases even impossible, in regular aerial images and videos. We present an initial light-weight and drone-operated 1D camera array that supports parallel synthetic aperture aerial imaging. Our main finding is that color anomaly detection benefits significantly from image integration when compared to conventional raw images or video frames (on average 97% vs. 42% in precision in our field experiments). We demonstrate that these two contributions can lead to the detection and tracking of moving people through densely occluding forest.

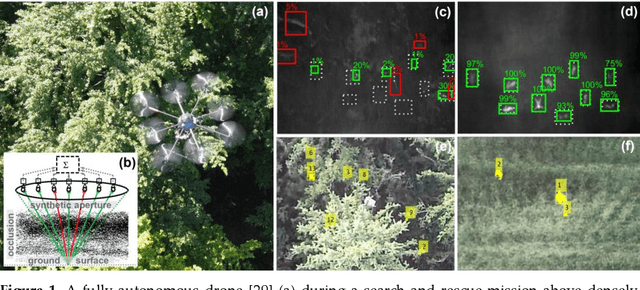

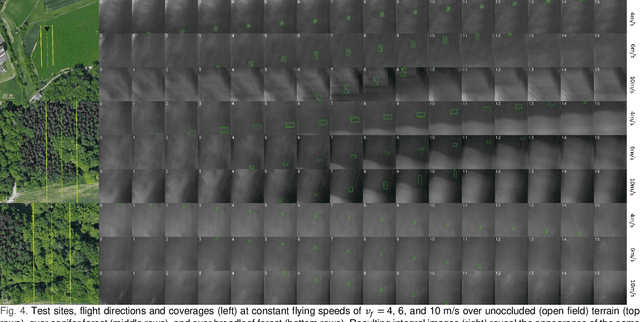

Combined Person Classification with Airborne Optical Sectioning

Jun 18, 2021

Abstract:Fully autonomous drones have been demonstrated to find lost or injured persons under strongly occluding forest canopy. Airborne Optical Sectioning (AOS), a novel synthetic aperture imaging technique, together with deep-learning-based classification enables high detection rates under realistic search-and-rescue conditions. We demonstrate that false detections can be significantly suppressed and true detections boosted by combining classifications from multiple AOS integral images rather than from a single one. This improves classification rates especially in the presence of occlusion. To make this possible, we modified the AOS imaging process to support large overlaps between subsequent integrals, enabling real-time and on-board scanning and processing at groundspeeds of up to 10 m/s.

Pose Error Reduction for Focus Enhancement in Thermal Synthetic Aperture Visualization

Dec 15, 2020

Abstract:Airborne optical sectioning, an effective aerial synthetic aperture imaging technique for revealing artifacts occluded by forests, requires precise measurements of drone poses. In this article we present a new approach for reducing pose estimation errors beyond the possibilities of conventional Perspective-n-Point solutions by considering the underlying optimization as a focusing problem. We present an efficient image integration technique, which also reduces the parameter search space to achieve realistic processing times, and improves the quality of resulting synthetic integral images.

Search and Rescue with Airborne Optical Sectioning

Sep 18, 2020

Abstract:We show that automated person detection under occlusion conditions can be significantly improved by combining multi-perspective images before classification. Here, we employed image integration by Airborne Optical Sectioning (AOS)---a synthetic aperture imaging technique that uses camera drones to capture unstructured thermal light fields---to achieve this with a precision/recall of 96/93%. Finding lost or injured people in dense forests is not generally feasible with thermal recordings, but becomes practical with use of AOS integral images. Our findings lay the foundation for effective future search and rescue technologies that can be applied in combination with autonomous or manned aircraft. They can also be beneficial for other fields that currently suffer from inaccurate classification of partially occluded people, animals, or objects.

Fast Automatic Visibility Optimization for Thermal Synthetic Aperture Visualization

May 08, 2020

Abstract:In this article, we describe and validate the first fully automatic parameter optimization for thermal synthetic aperture visualization. It replaces previous manual exploration of the parameter space, which is time-consuming and error-prone. We prove that the visibility of targets in thermal integral images is proportional to the variance of the targets' image. Since this is invariant to occlusion, it represents a suitable objective function for optimization. Our findings have the potential to enable fully autonomous search and rescue operations with camera drones.
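A toy example may help to show why image variance can serve as an objective function. In the sketch below, several 1D views of a point target are integrated under different candidate disparities, and the candidate with the highest pixel variance is selected. The single `disparity` parameter, the noise-free views, and all function names are assumptions for illustration; the article's actual parameter space and optimizer are not reproduced here.

```python
from statistics import pvariance

def make_views(width, n_views, target_x, disparity):
    """Toy views: the target shifts by `disparity` pixels from one view to the next."""
    views = []
    for i in range(n_views):
        row = [0.0] * width
        row[target_x + disparity * i] = 1.0
        views.append(row)
    return views

def integrate(views, disparity):
    """Average the views after shifting view i back by disparity * i pixels."""
    width = len(views[0])
    integral = []
    for x in range(width):
        samples = [row[x + disparity * i] for i, row in enumerate(views)
                   if 0 <= x + disparity * i < width]
        integral.append(sum(samples) / len(samples) if samples else 0.0)
    return integral

def best_disparity(views, candidates):
    """Pick the candidate whose integral image has the highest pixel variance."""
    return max(candidates, key=lambda d: pvariance(integrate(views, d)))

views = make_views(width=64, n_views=8, target_x=20, disparity=2)
estimate = best_disparity(views, range(0, 5))
```

A wrong disparity smears the target over several pixels, which lowers the variance of the integral; the correct one concentrates it in a single pixel. An exhaustive search over candidates is used here only for brevity.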

A Statistical View on Synthetic Aperture Imaging for Occlusion Removal

Jun 15, 2019

Abstract:Synthetic apertures find applications in many fields, such as radar, radio telescopes, microscopy, sonar, ultrasound, LiDAR, and optical imaging. They approximate the signal of a single hypothetical wide aperture sensor with either an array of static small aperture sensors or a single moving small aperture sensor. Common sense in synthetic aperture sampling is that a dense sampling pattern within a wide aperture is required to reconstruct a clear signal. In this article we show that there exist practical limits to both synthetic aperture size and number of samples for the application of occlusion removal. This leads to an understanding of how to design synthetic aperture sampling patterns and sensors in an optimal and practically efficient way. We apply our findings to airborne optical sectioning, which uses camera drones and synthetic aperture imaging to computationally remove occluding vegetation or trees for inspecting ground surfaces.
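The intuition that additional samples eventually stop paying off can be sketched with a deliberately simple model, which is an assumption of this example and not the statistical model derived in the article: if every sample sees a given ground point occluded with probability `occlusion_density`, independently of all other samples, then the point is visible in at least one of `n_samples` samples with probability 1 - D^N.

```python
def visible_fraction(occlusion_density, n_samples):
    """Probability that a point is unoccluded in at least one of n independent samples."""
    if not 0.0 <= occlusion_density <= 1.0:
        raise ValueError("occlusion_density must lie in [0, 1]")
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    return 1.0 - occlusion_density ** n_samples

def marginal_gain(occlusion_density, n_samples):
    """Extra visibility gained by adding one more sample to n_samples."""
    return (visible_fraction(occlusion_density, n_samples + 1)
            - visible_fraction(occlusion_density, n_samples))

# Visibility for a hypothetical 50% occlusion density and 1 to 10 samples.
curve = [visible_fraction(0.5, n) for n in range(1, 11)]
```

Under this independence assumption the gain per additional sample shrinks geometrically. Real samples taken close together are correlated, so the independence assumption is optimistic for narrow apertures.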
