Nisansa de Silva

Department of Computer Science & Engineering, University of Moratuwa, Sri Lanka

Sinhala Physical Common Sense Reasoning Dataset for Global PIQA

Feb 02, 2026

Abstract: This paper presents the first-ever Sinhala physical common sense reasoning dataset created as part of Global PIQA. It contains 110 human-created and verified data samples, where each sample consists of a prompt, the corresponding correct answer, and a wrong answer. Most of the questions refer to the Sri Lankan context, where Sinhala is an official language.

How Good is BLI as an Alignment Measure: A Study in Word Embedding Paradigm

Nov 17, 2025

Abstract: Sans a dwindling number of monolingual embedding studies originating predominantly from the low-resource domains, it is evident that multilingual embedding has become the de facto choice due to its adaptability to the usage of code-mixed languages, granting the ability to process multilingual documents in a language-agnostic manner, as well as removing the difficult task of aligning monolingual embeddings. But is this victory complete? Are the multilingual models better than aligned monolingual models in every aspect? Can the higher computational cost of multilingual models always be justified? Or is there a compromise between the two extremes? Bilingual Lexicon Induction (BLI) is one of the most widely used metrics for evaluating the degree of alignment between two embedding spaces. In this study, we explore the strengths and limitations of BLI as a measure of the degree of alignment of two embedding spaces. Further, we evaluate how well traditional embedding alignment techniques, novel multilingual models, and combined alignment techniques perform BLI tasks in the contexts of both high-resource and low-resource languages. In addition, we investigate the impact of the language families to which the pairs of languages belong. We identify that BLI does not measure the true degree of alignment in some cases and we propose solutions for them. We propose a novel stem-based BLI approach for evaluating two aligned embedding spaces that takes into account the inflected nature of languages, as opposed to the prevalent word-based BLI techniques. Further, we introduce a vocabulary pruning technique that is more informative in showing the degree of alignment, especially when performing BLI on multilingual embedding models. Often, combined embedding alignment techniques perform better, while in certain cases multilingual embeddings perform better (mainly low-resource language cases).
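To make the word-based versus stem-based distinction in the abstract above concrete, here is a minimal sketch of BLI scored as precision@1 with cosine nearest-neighbour retrieval. It is an illustration under stated assumptions, not the paper's implementation: the function names, the `normalize` hook, the toy vectors, and the lookup-table "stemmer" are all hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def bli_precision_at_1(src_emb, tgt_emb, gold, normalize=lambda w: w):
    """Fraction of source words whose nearest target word is a gold translation.

    src_emb, tgt_emb: dict mapping word -> vector, assumed to share one space.
    gold: dict mapping source word -> set of acceptable target translations.
    normalize: hypothetical hook applied to words before comparison
               (identity gives word-based BLI; a stemmer gives a stem-based variant).
    """
    hits = 0
    evaluated = 0
    for src_word, translations in gold.items():
        if src_word not in src_emb:
            continue  # skip out-of-vocabulary source words
        evaluated += 1
        query = src_emb[src_word]
        best = max(tgt_emb, key=lambda t: cosine(query, tgt_emb[t]))
        if normalize(best) in {normalize(t) for t in translations}:
            hits += 1
    return hits / evaluated if evaluated else 0.0


# Toy, hand-made vectors (assumption: not real embeddings).
src_emb = {"dog": [1.0, 0.1], "cat": [0.1, 1.0]}
tgt_emb = {"hund": [0.9, 0.2], "katze": [0.2, 0.9], "katzen": [0.0, 1.0]}
gold = {"dog": {"hund"}, "cat": {"katze"}}


def toy_stem(word):
    """Stand-in stemmer: a lookup table, purely for illustration."""
    return {"katzen": "katze"}.get(word, word)


word_score = bli_precision_at_1(src_emb, tgt_emb, gold)
stem_score = bli_precision_at_1(src_emb, tgt_emb, gold, normalize=toy_stem)
print(word_score, stem_score)
```

In this toy setup the nearest neighbour of "cat" is the inflected form "katzen", so the word-based score is 0.5 while the stem-normalized score is 1.0. That is the kind of gap an inflection-aware evaluation is meant to expose.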

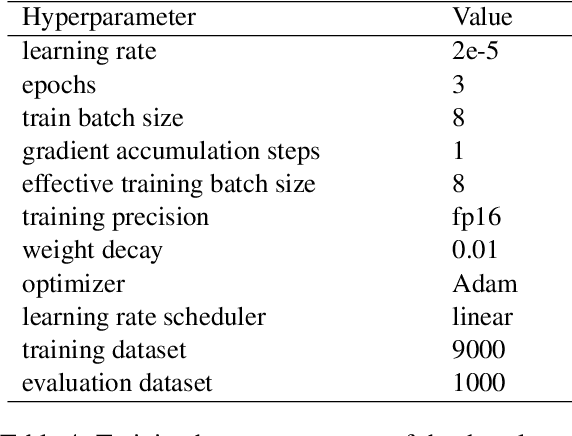

DESS: DeBERTa Enhanced Syntactic-Semantic Aspect Sentiment Triplet Extraction

Nov 13, 2025

Abstract: Fine-grained sentiment analysis faces ongoing challenges in Aspect Sentiment Triplet Extraction (ASTE), particularly in accurately capturing the relationships between aspects, opinions, and sentiment polarities. While researchers have made progress using BERT and Graph Neural Networks, the full potential of advanced language models in understanding complex language patterns remains unexplored. We introduce DESS, a new approach that builds upon previous work by integrating DeBERTa's enhanced attention mechanism to better understand context and relationships in text. Our framework maintains a dual-channel structure, where DeBERTa works alongside an LSTM channel to process both meaning and grammatical patterns in text. We have carefully refined how these components work together, paying special attention to how different types of language information interact. When we tested DESS on standard datasets, it showed meaningful improvements over current methods, with F1-score increases of 4.85, 8.36, and 2.42 in identifying aspect opinion pairs and determining sentiment accurately. Looking deeper into the results, we found that DeBERTa's sophisticated attention system helps DESS handle complicated sentence structures better, especially when important words are far apart. Our findings suggest that upgrading to more advanced language models, when thoughtfully integrated, can lead to real improvements in how well we can analyze sentiments in text. The implementation of our approach is publicly available at: https://github.com/VishalRepos/DESS.

Utilizing Multilingual Encoders to Improve Large Language Models for Low-Resource Languages

Aug 12, 2025

Abstract: Large Language Models (LLMs) excel in English, but their performance degrades significantly on low-resource languages (LRLs) due to English-centric training. While methods like LangBridge align LLMs with multilingual encoders such as the Massively Multilingual Text-to-Text Transfer Transformer (mT5), they typically use only the final encoder layer. We propose a novel architecture that fuses all intermediate layers, enriching the linguistic information passed to the LLM. Our approach features two strategies: (1) a Global Softmax weighting for overall layer importance, and (2) a Transformer Softmax model that learns token-specific weights. The fused representations are mapped into the LLM's embedding space, enabling it to process multilingual inputs. The model is trained only on English data, without using any parallel or multilingual data. Evaluated on XNLI, IndicXNLI, Sinhala News Classification, and Amazon Reviews, our Transformer Softmax model significantly outperforms the LangBridge baseline. We observe strong performance gains in LRLs, improving Sinhala classification accuracy from 71.66% to 75.86% and achieving clear improvements across Indic languages such as Tamil, Bengali, and Malayalam. These specific gains contribute to an overall boost in average XNLI accuracy from 70.36% to 71.50%. This approach offers a scalable, data-efficient path toward more capable and equitable multilingual LLMs.
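The "Global Softmax" idea in the abstract above (one importance weight per encoder layer, shared across all tokens) can be sketched as a softmax-weighted sum of per-layer hidden states. The sketch below is an assumption-laden illustration in plain Python, not the authors' architecture: `fuse_layers`, `layer_logits`, and the toy tensors are hypothetical, and in a real model the logits would be learned parameters rather than fixed numbers.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_layers(layer_states, layer_logits):
    """Combine per-layer token representations with one global weight per layer.

    layer_states: list over layers; each layer is a list of token vectors
                  (all layers share the same token count and dimension).
    layer_logits: one scalar per layer (assumed learnable in practice).
    Returns one fused vector per token.
    """
    weights = softmax(layer_logits)
    num_tokens = len(layer_states[0])
    dim = len(layer_states[0][0])
    fused = [[0.0] * dim for _ in range(num_tokens)]
    for weight, layer in zip(weights, layer_states):
        for t, vector in enumerate(layer):
            for d, value in enumerate(vector):
                fused[t][d] += weight * value
    return fused


# Toy input: 2 layers, 2 tokens, dimension 2 (made-up numbers).
layer_states = [
    [[1.0, 0.0], [2.0, 2.0]],  # layer 0
    [[3.0, 4.0], [0.0, 2.0]],  # layer 1
]
# Equal logits give equal weights, so the fusion reduces to a plain average.
fused = fuse_layers(layer_states, [0.0, 0.0])
print(fused)
```

A token-specific variant, as described for the second strategy, would compute a separate weight vector for each token position instead of sharing a single set of weights across the sequence.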

SinLlama -- A Large Language Model for Sinhala

Aug 12, 2025

Abstract: Low-resource languages such as Sinhala are often overlooked by open-source Large Language Models (LLMs). In this research, we extend an existing multilingual LLM (Llama-3-8B) to better serve Sinhala. We enhance the LLM tokenizer with Sinhala-specific vocabulary and perform continual pre-training on a cleaned 10 million Sinhala corpus, resulting in the SinLlama model. This is the very first decoder-based open-source LLM with explicit Sinhala support. When SinLlama was instruction fine-tuned for three text classification tasks, it outperformed base and instruct variants of Llama-3-8B by a significant margin.

Improving the quality of Web-mined Parallel Corpora of Low-Resource Languages using Debiasing Heuristics

Feb 26, 2025

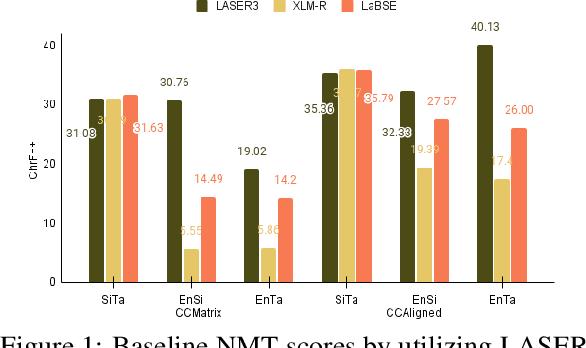

Abstract: Parallel Data Curation (PDC) techniques aim to filter out noisy parallel sentences from web-mined corpora. Prior research has demonstrated that ranking sentence pairs using similarity scores on sentence embeddings derived from Pre-trained Multilingual Language Models (multiPLMs), and training the NMT systems with only the top-ranked samples, produces better NMT performance than training on the full dataset. However, previous research has also shown that the choice of multiPLM significantly impacts the ranking quality. This paper investigates the reasons behind this disparity across multiPLMs. Using the web-mined corpora CCMatrix and CCAligned for En$\rightarrow$Si, En$\rightarrow$Ta and Si$\rightarrow$Ta, we show that different multiPLMs (LASER3, XLM-R, and LaBSE) are biased towards certain types of sentences, which allows noisy sentences to creep into the top-ranked samples. We show that by employing a series of heuristics, this noise can be removed to a certain extent. This improves the results of NMT systems trained with web-mined corpora and reduces the disparity across multiPLMs.
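The ranking step described in the abstract above can be sketched as scoring each sentence pair by the cosine similarity of its two sentence embeddings and keeping the top-ranked pairs. The abstract does not list the heuristics used, so the `not_copied` filter below is a hypothetical example of the general idea (removing a kind of pair that an embedding model may score highly despite being noise), not one of the paper's heuristics. The function names and toy vectors are likewise assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rank_pairs(pairs, top_k, keep=lambda src, tgt: True):
    """Return the top_k (source, target) pairs by embedding similarity.

    pairs: list of (src_text, tgt_text, src_vec, tgt_vec) tuples.
    keep:  optional predicate applied before ranking (hypothetical heuristic hook).
    """
    kept = [p for p in pairs if keep(p[0], p[1])]
    ranked = sorted(kept, key=lambda p: cosine(p[2], p[3]), reverse=True)
    return [(p[0], p[1]) for p in ranked[:top_k]]


def not_copied(src, tgt):
    """Hypothetical heuristic: drop pairs whose target is a verbatim copy of the source."""
    return src.strip().lower() != tgt.strip().lower()


# Toy data with made-up 2-d "embeddings".
pairs = [
    ("Hello", "Hello", [1.0, 0.0], [1.0, 0.0]),  # untranslated copy, similarity 1.0
    ("Good morning", "සුබ උදෑසනක්", [1.0, 0.2], [0.9, 0.3]),
    ("Thank you", "unrelated text", [1.0, 0.0], [0.0, 1.0]),
]

top_raw = rank_pairs(pairs, top_k=1)
top_filtered = rank_pairs(pairs, top_k=1, keep=not_copied)
print(top_raw, top_filtered)
```

In this toy example the untranslated copy ranks first by raw similarity, and the filter lets the genuine translation take its place.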

Linguistic Analysis of Sinhala YouTube Comments on Sinhala Music Videos: A Dataset Study

Jan 28, 2025

Abstract: This research investigates the area of Music Information Retrieval (MIR) and Music Emotion Recognition (MER) in relation to Sinhala songs, an underexplored field in music studies. The purpose of this study is to analyze the behavior of Sinhala comments on YouTube Sinhala song videos, using social media comments as primary data sources. The data comprise comments from 27 YouTube videos containing 20 different Sinhala songs, which were carefully selected to maintain strict linguistic reliability and ensure relevancy. This process gathered a total of 93,116 comments, which were then refined through advanced filtering methods and transliteration mechanisms, resulting in 63,471 Sinhala comments. Additionally, 964 stop-words specific to the Sinhala language were algorithmically derived, of which 182 matched exactly with English stop-words from the NLTK corpus once translated. Comparisons were also made between general-domain Sinhala corpora and the Sinhala YouTube Comment Corpus, confirming the latter as a good representation of the general domain. The meticulously curated dataset and the derived stop-words form important resources for future research in the fields of MIR and MER, since they demonstrate the potential of computational techniques for studying complex musical experiences across varied cultural traditions.

Sinhala Transliteration: A Comparative Analysis Between Rule-based and Seq2Seq Approaches

Dec 31, 2024

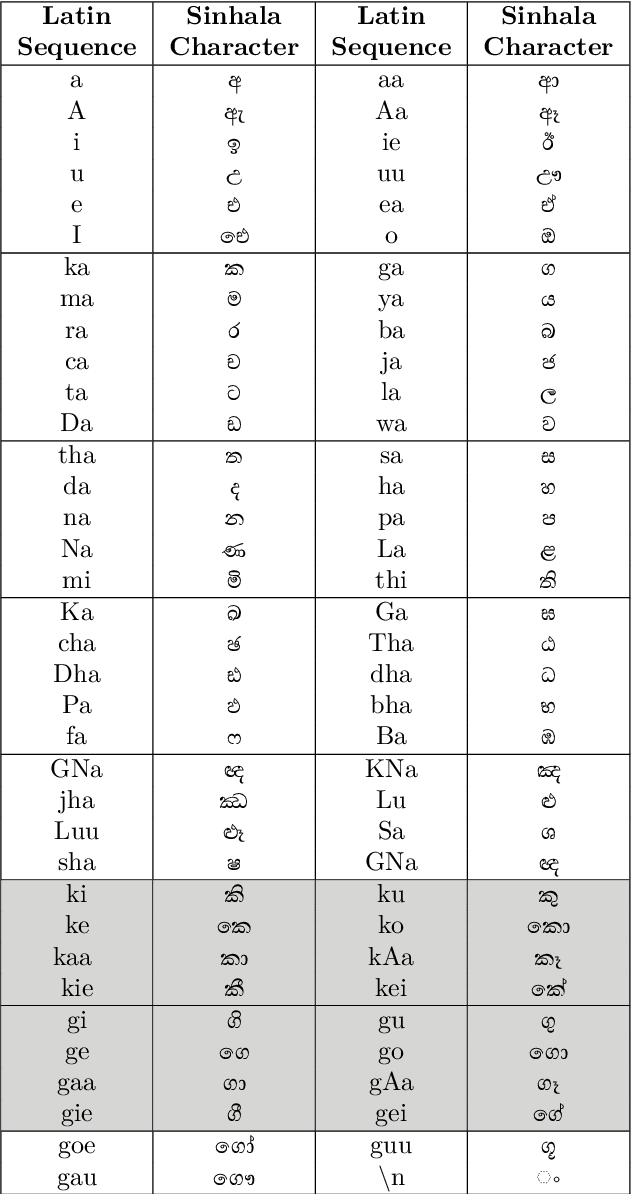

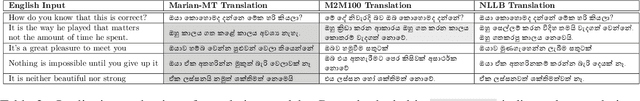

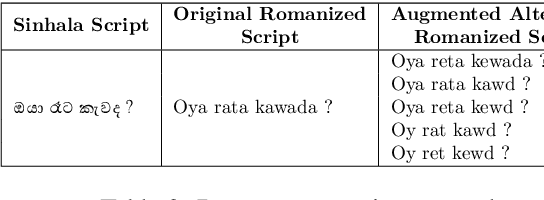

Abstract: For reasons of convenience and a lack of tech literacy, transliteration (i.e., Romanizing native scripts instead of using localization tools) is highly prevalent in the context of low-resource languages such as Sinhala, which have their own writing script. In this study, our focus is on Romanized Sinhala transliteration. We propose two methods to address this problem: our baseline is a rule-based method, which is then compared against our second method, where we approach the transliteration problem as a sequence-to-sequence task akin to the established Neural Machine Translation (NMT) task. For the latter, we propose a Transformer-based Encoder-Decoder solution. We observed that the Transformer-based method could capture many ad-hoc patterns within the Romanized scripts compared to the rule-based method. The code base associated with this paper is available on GitHub: https://github.com/kasunw22/Sinhala-Transliterator/

Instruct-DeBERTa: A Hybrid Approach for Aspect-based Sentiment Analysis on Textual Reviews

Aug 23, 2024

Abstract: Aspect-based Sentiment Analysis (ABSA) is a critical task in Natural Language Processing (NLP) that focuses on extracting sentiments related to specific aspects within a text, offering deep insights into customer opinions. Traditional sentiment analysis methods, while useful for determining overall sentiment, often miss the implicit opinions about particular product or service features. This paper presents a comprehensive review of the evolution of ABSA methodologies, from lexicon-based approaches to machine learning and deep learning techniques. We emphasize the recent advancements in Transformer-based models, particularly Bidirectional Encoder Representations from Transformers (BERT) and its variants, which have set new benchmarks in ABSA tasks. We focused on fine-tuning Llama and Mistral models, building hybrid models using the SetFit framework, and developing our own model by exploiting the strengths of state-of-the-art (SOTA) Transformer-based models for aspect term extraction (ATE) and aspect sentiment classification (ASC). Our hybrid model Instruct-DeBERTa uses SOTA InstructABSA for aspect extraction and DeBERTa-V3-baseabsa-V1 for aspect sentiment classification. We utilize datasets from different domains to evaluate our model's performance. Our experiments indicate that the proposed hybrid model significantly improves the accuracy and reliability of sentiment analysis across all experimented domains. As per our findings, our hybrid model Instruct-DeBERTa is the best-performing model for the joint task of ATE and ASC for both SemEval restaurant 2014 and SemEval laptop 2014 datasets separately. By addressing the limitations of existing methodologies, our approach provides a robust solution for understanding detailed consumer feedback, thus offering valuable insights for businesses aiming to enhance customer satisfaction and product development.

M2DS: Multilingual Dataset for Multi-document Summarisation

Jul 17, 2024

Abstract: In the rapidly evolving digital era, there is an increasing demand for concise information as individuals seek to distil key insights from various sources. Recent attention from researchers on Multi-document Summarisation (MDS) has resulted in diverse datasets covering customer reviews, academic papers, medical and legal documents, and news articles. However, the English-centric nature of these datasets has created a conspicuous void for multilingual datasets in today's globalised digital landscape, where linguistic diversity is celebrated. Media platforms such as British Broadcasting Corporation (BBC) have disseminated news in 20+ languages for decades. With only 380 million people speaking English natively as their first language, accounting for less than 5% of the global population, the vast majority primarily relies on other languages. These facts underscore the need for inclusivity in MDS research, utilising resources from diverse languages. Recognising this gap, we present the Multilingual Dataset for Multi-document Summarisation (M2DS), which, to the best of our knowledge, is the first dataset of its kind. It includes document-summary pairs in five languages from BBC articles published during the 2010-2023 period. This paper introduces M2DS, emphasising its unique multilingual aspect, and includes baseline scores from state-of-the-art MDS models evaluated on our dataset.
