Nimrod Dorfman

Schema Networks: Zero-shot Transfer with a Generative Causal Model of Intuitive Physics

Aug 17, 2017

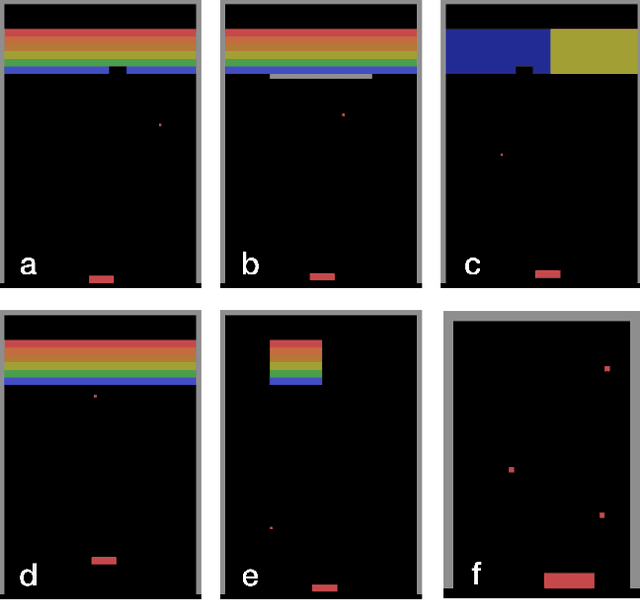

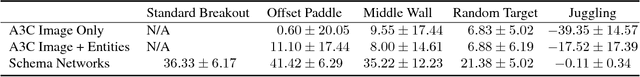

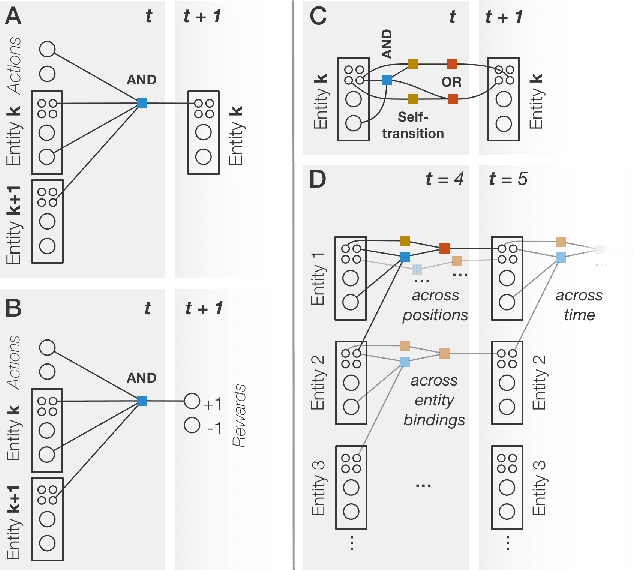

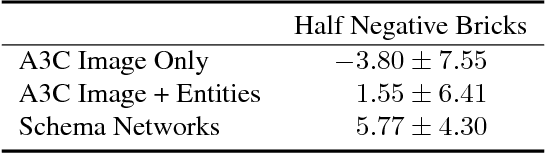

Abstract: The recent adaptation of deep neural network-based methods to reinforcement learning and planning domains has yielded remarkable progress on individual tasks. Nonetheless, progress on task-to-task transfer remains limited. In pursuit of efficient and robust generalization, we introduce the Schema Network, an object-oriented generative physics simulator capable of disentangling multiple causes of events and reasoning backward through causes to achieve goals. The richly structured architecture of the Schema Network can learn the dynamics of an environment directly from data. We compare Schema Networks with Asynchronous Advantage Actor-Critic and Progressive Networks on a suite of Breakout variations, reporting results on training efficiency and zero-shot generalization, consistently demonstrating faster, more robust learning and better transfer. We argue that generalizing from limited data and learning causal relationships are essential abilities on the path toward generally intelligent systems.

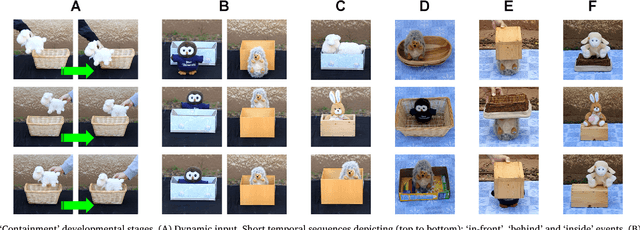

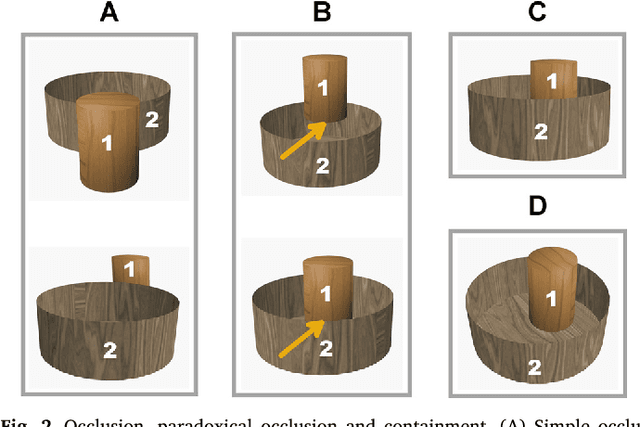

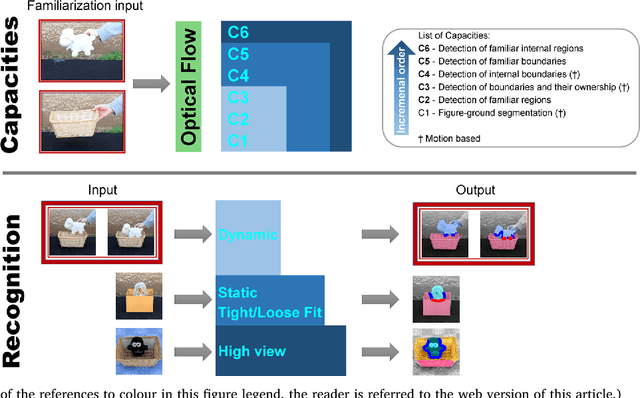

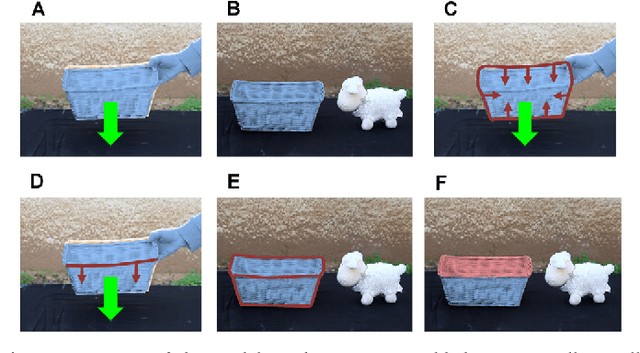

Discovering containment: from infants to machines

Oct 30, 2016

Abstract: Current artificial learning systems can recognize thousands of visual categories, or play Go at a champion's level, but cannot explain infant learning, in particular the ability to learn complex concepts without guidance, in a specific order. A notable example is the category of 'containers' and the notion of containment, one of the earliest spatial relations to be learned, starting already at 2.5 months, and preceding other common relations (e.g., support). Such spontaneous unsupervised learning stands in contrast with current highly successful computational models, which learn in a supervised manner, that is, by using large data sets of labeled examples. How can meaningful concepts be learned without guidance, and what determines the trajectory of infant learning, making some notions appear consistently earlier than others?
