Niels da Vitoria Lobo

MMFT-BERT: Multimodal Fusion Transformer with BERT Encodings for Visual Question Answering

Oct 27, 2020

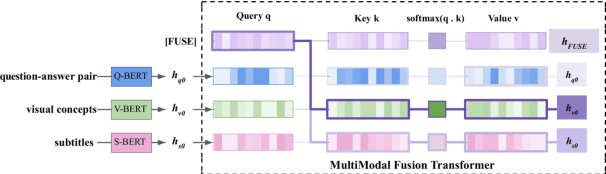

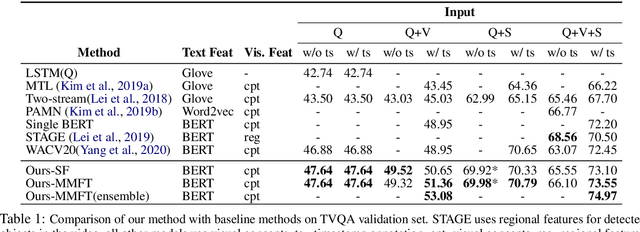

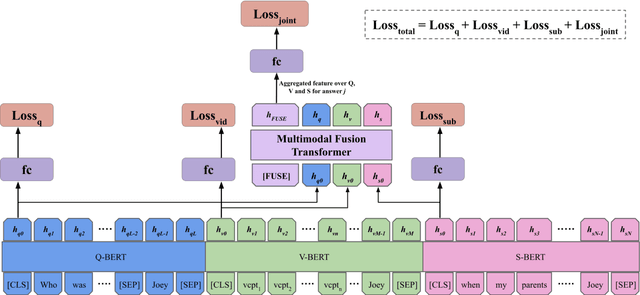

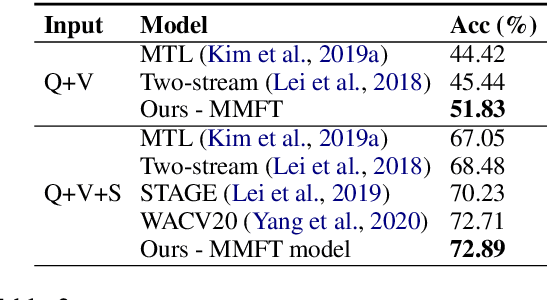

Abstract: We present MMFT-BERT (MultiModal Fusion Transformer with BERT encodings) to solve Visual Question Answering (VQA) while ensuring individual and combined processing of multiple input modalities. Our approach processes multimodal data (video and text) by adopting BERT encodings for each modality individually and fusing them with a novel transformer-based fusion method. Our method decomposes the different modality sources into separate BERT instances with similar architectures but variable weights. This achieves SOTA results on the TVQA dataset. Additionally, we provide TVQA-Visual, an isolated diagnostic subset of TVQA that strictly requires knowledge of the visual (V) modality, based on a human annotator's judgment. This set of questions helps us study the model's behavior and the challenges TVQA poses that prevent the achievement of superhuman performance. Extensive experiments show the effectiveness and superiority of our method.

Text Synopsis Generation for Egocentric Videos

May 08, 2020

Abstract: Mass utilization of body-worn cameras has led to a huge corpus of available egocentric video. Existing video summarization algorithms can accelerate browsing such videos by selecting (visually) interesting shots from them. Nonetheless, since the system user still has to watch the summary videos, browsing large video databases remains a challenge. Hence, in this work, we propose to generate a textual synopsis consisting of a few sentences that describe the most important events in a long egocentric video. Users can read the short text to gain insight into the video and, more importantly, efficiently search through the content of a large video database using text queries. Since egocentric videos are long and contain many activities and events, using video-to-text algorithms results in thousands of descriptions, many of which are incorrect. Therefore, we propose a multi-task learning scheme to simultaneously generate descriptions for video segments and summarize the resulting descriptions in an end-to-end fashion. We input a set of video shots, and the network generates a text description for each shot. Next, a visual-language content matching unit, trained with a weakly supervised objective, identifies the correct descriptions. Finally, the last component of our network, called the purport network, evaluates the descriptions all together to select the ones containing crucial information. Out of thousands of descriptions generated for the video, a few informative sentences are returned to the user. We validate our framework on the challenging UT Egocentric video dataset, where each video is between 3 and 5 hours long and is associated with over 3000 textual descriptions on average. The generated textual summaries, which include only 5 percent (or less) of the generated descriptions, are compared to ground-truth summaries in the text domain using well-established metrics in natural language processing.

Understanding Trajectory Behavior: A Motion Pattern Approach

Jan 04, 2015

Abstract: Mining the underlying patterns in gigantic and complex data is of great importance to data analysts. In this paper, we propose a motion pattern approach to mine frequent behaviors in trajectory data. Motion patterns, defined by a set of highly similar flow vector groups in a spatial locality, have been shown to be very effective in extracting dominant motion behaviors in video sequences. Inspired by the applications and properties of motion patterns, we have designed a framework that successfully solves the general task of trajectory clustering. Our proposed algorithm consists of four phases: flow vector computation, motion component extraction, creation of the motion components' reachability sets, and motion pattern formation. In the first phase, we break down trajectories into flow vectors that indicate instantaneous movements. In the second phase, we create motion components by clustering the flow vectors with respect to their location and velocity using K-means. In the third phase, we create the motion components' reachability sets in terms of spatial proximity and motion similarity. Finally, in the fourth phase, we cluster motion components using agglomerative clustering with the weighted Jaccard distance between the motion components' signatures, a set created using path reachability. We have evaluated the effectiveness of our proposed method in an extensive set of experiments on diverse datasets. Further, we have shown how our proposed method handles difficulties in the general task of trajectory clustering that challenge the existing state-of-the-art methods.
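
As a rough illustration of two building blocks named in the abstract above, the sketch below computes flow vectors from a 2D trajectory and a weighted Jaccard distance between two signatures. It is not the authors' implementation. The function names, the unit time step between samples, and the representation of a signature as a mapping from a component id to a non-negative weight are all assumptions made for this example.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
# (x, y, vx, vy): position plus displacement to the next sample.
FlowVector = Tuple[float, float, float, float]


def flow_vectors(trajectory: List[Point]) -> List[FlowVector]:
    """Break a trajectory into flow vectors (assumes unit time steps)."""
    return [
        (x0, y0, x1 - x0, y1 - y0)
        for (x0, y0), (x1, y1) in zip(trajectory, trajectory[1:])
    ]


def weighted_jaccard_distance(a: Dict[int, float], b: Dict[int, float]) -> float:
    """Return 1 - sum(min) / sum(max) over the union of keys.

    Signatures are hypothetical here: dicts mapping a component id to a
    non-negative weight. Missing ids count as weight 0.
    """
    keys = set(a) | set(b)
    num = sum(min(a.get(k, 0.0), b.get(k, 0.0)) for k in keys)
    den = sum(max(a.get(k, 0.0), b.get(k, 0.0)) for k in keys)
    # Two empty signatures are treated as identical.
    return 1.0 - num / den if den > 0 else 0.0
```

In a fuller pipeline, the flow vectors would be clustered into motion components, and the pairwise distances between component signatures would feed an agglomerative clustering step; both are omitted here because the abstract does not specify their parameters.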
