Nese Alyuz

Unobtrusive and Multimodal Approach for Behavioral Engagement Detection of Students

Jan 16, 2019

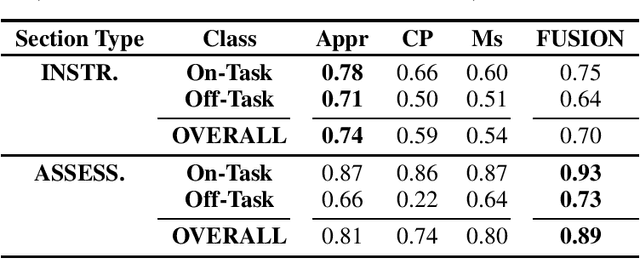

Abstract: We propose a multimodal approach for detecting students' behavioral engagement states (i.e., On-Task vs. Off-Task), based on three unobtrusive modalities: Appearance, Context-Performance, and Mouse. Final behavioral engagement states are obtained by fusing modality-specific classifiers at the decision level. Various experiments were conducted on a student dataset collected in an authentic classroom.
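
As a rough illustration of decision-level fusion, the sketch below combines per-modality On-Task probabilities with a weighted average and then thresholds the result. The abstract does not state the fusion rule, so the averaging scheme, the `fuse_decisions` name, the weights, and the 0.5 threshold are all assumptions made for illustration, not the authors' method.

```python
from typing import Dict, Optional


def fuse_decisions(
    probs: Dict[str, Optional[float]],
    weights: Optional[Dict[str, float]] = None,
    threshold: float = 0.5,
) -> str:
    """Fuse per-modality On-Task probabilities into one label.

    Hypothetical fusion rule: weighted average over the modalities
    that produced a prediction (None marks a missing modality).
    """
    # Keep only modalities that actually produced a probability.
    available = {m: p for m, p in probs.items() if p is not None}
    if not available:
        raise ValueError("no modality produced a prediction")

    # Default to equal weights when none are supplied (assumption).
    if weights is None:
        weights = {m: 1.0 for m in available}

    total = sum(weights[m] for m in available)
    fused = sum(weights[m] * p for m, p in available.items()) / total
    return "On-Task" if fused >= threshold else "Off-Task"


if __name__ == "__main__":
    print(fuse_decisions(
        {"appearance": 0.9, "context_performance": 0.6, "mouse": 0.3}
    ))
```

Dropping missing modalities before averaging lets the fused decision degrade gracefully when, for example, the camera loses track of the student's face.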

Detecting Behavioral Engagement of Students in the Wild Based on Contextual and Visual Data

Jan 15, 2019

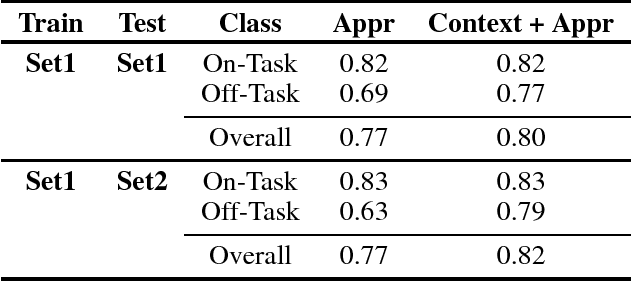

Abstract: To investigate the detection of students' behavioral engagement (On-Task vs. Off-Task), we propose a two-phase approach in this study. In Phase 1, contextual logs (URLs) are used to assess active usage of the content platform. If there is active use, appearance information is used in Phase 2 to infer behavioral engagement. Incorporating the contextual information improved the overall F1-scores from 0.77 to 0.82. Our cross-classroom and cross-platform experiments showed that the proposed generic, multimodal behavioral engagement models are applicable to a different set of students or different subject areas.
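
The two-phase flow described above can be sketched as a simple gate. This is a minimal sketch under stated assumptions: the abstract does not say how active usage is derived from URLs or what label is assigned when the platform is not in use, so the hostname match, the `Off-Task` fallback, and all function and parameter names here are hypothetical.

```python
from typing import Callable, Iterable, Sequence
from urllib.parse import urlparse


def is_active_on_platform(urls: Iterable[str], platform_host: str) -> bool:
    """Phase 1 (assumed rule): any logged URL points at the platform."""
    return any(urlparse(u).hostname == platform_host for u in urls)


def two_phase_engagement(
    urls: Iterable[str],
    platform_host: str,
    appearance_model: Callable[[Sequence[float]], str],
    appearance_features: Sequence[float],
) -> str:
    """Gate the appearance classifier on contextual (URL) evidence."""
    # Phase 1: without active platform use, skip the visual model.
    # Returning "Off-Task" here is an assumption, not from the abstract.
    if not is_active_on_platform(urls, platform_host):
        return "Off-Task"
    # Phase 2: defer to the appearance-based classifier.
    return appearance_model(appearance_features)


if __name__ == "__main__":
    # Stand-in classifier for demonstration only.
    def dummy_model(features: Sequence[float]) -> str:
        return "On-Task" if sum(features) > 0 else "Off-Task"

    print(two_phase_engagement(
        ["https://learn.example.org/lesson/3"],
        "learn.example.org",
        dummy_model,
        [0.4, 0.1],
    ))
```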

The Importance of Socio-Cultural Differences for Annotating and Detecting the Affective States of Students

Jan 12, 2019

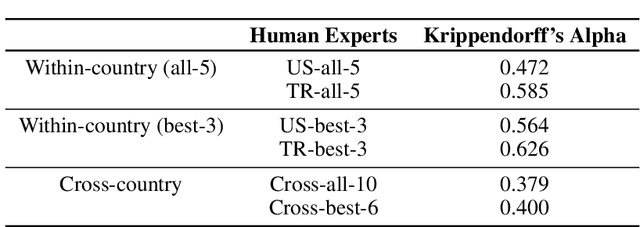

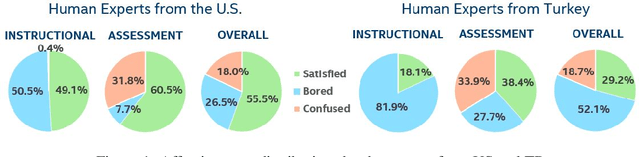

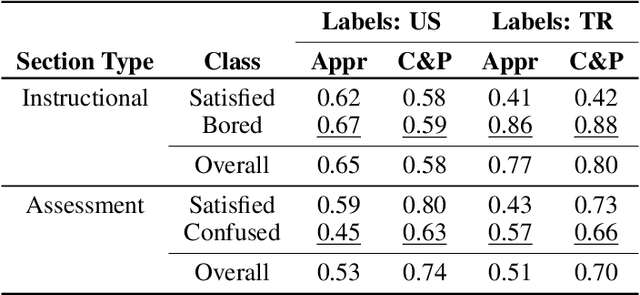

Abstract: The development of real-time affect detection models often depends on obtaining annotated data for supervised learning by employing human experts to label the student data. One open question in annotating affective data for affect detection is whether the labelers (i.e., human experts) need to be socio-culturally similar to the students being labeled, as this affects the cost and feasibility of obtaining the labels. In this study, we investigate two research questions. First, for affective state annotation, how does the socio-cultural background of human expert labelers, compared to the subjects, impact the degree of consensus and the distribution of affective states obtained? Second, how do differences in labeler background impact the performance of affect detection models that are trained using these labels?
