Nayantara Mudur

FEABench: Evaluating Language Models on Multiphysics Reasoning Ability

Apr 08, 2025

Abstract:Building precise simulations of the real world and invoking numerical solvers to answer quantitative problems is an essential requirement in engineering and science. We present FEABench, a benchmark to evaluate the ability of large language models (LLMs) and LLM agents to simulate and solve physics, mathematics and engineering problems using finite element analysis (FEA). We introduce a comprehensive evaluation scheme to investigate the ability of LLMs to solve these problems end-to-end by reasoning over natural language problem descriptions and operating COMSOL Multiphysics$^\circledR$, an FEA software, to compute the answers. We additionally design a language model agent equipped with the ability to interact with the software through its Application Programming Interface (API), examine its outputs and use tools to improve its solutions over multiple iterations. Our best performing strategy generates executable API calls 88% of the time. LLMs that can successfully interact with and operate FEA software to solve problems such as those in our benchmark would push the frontiers of automation in engineering. Acquiring this capability would augment LLMs' reasoning skills with the precision of numerical solvers and advance the development of autonomous systems that can tackle complex problems in the real world. The code is available at https://github.com/google/feabench

Diffusion-HMC: Parameter Inference with Diffusion Model driven Hamiltonian Monte Carlo

May 08, 2024

Abstract:Diffusion generative models have excelled at diverse image generation and reconstruction tasks across fields. A less explored avenue is their application to discriminative tasks involving regression or classification problems. The cornerstone of modern cosmology is the ability to generate predictions for observed astrophysical fields from theory and constrain physical models from observations using these predictions. This work uses a single diffusion generative model to address these interlinked objectives -- as a surrogate model or emulator for cold dark matter density fields conditional on input cosmological parameters, and as a parameter inference model that solves the inverse problem of constraining the cosmological parameters of an input field. The model is able to emulate fields with summary statistics consistent with those of the simulated target distribution. We then leverage the approximate likelihood of the diffusion generative model to derive tight constraints on cosmology by using the Hamiltonian Monte Carlo method to sample the posterior on cosmological parameters for a given test image. Finally, we demonstrate that this parameter inference approach is more robust to the addition of noise than baseline parameter inference networks.

Quantum Many-Body Physics Calculations with Large Language Models

Mar 05, 2024

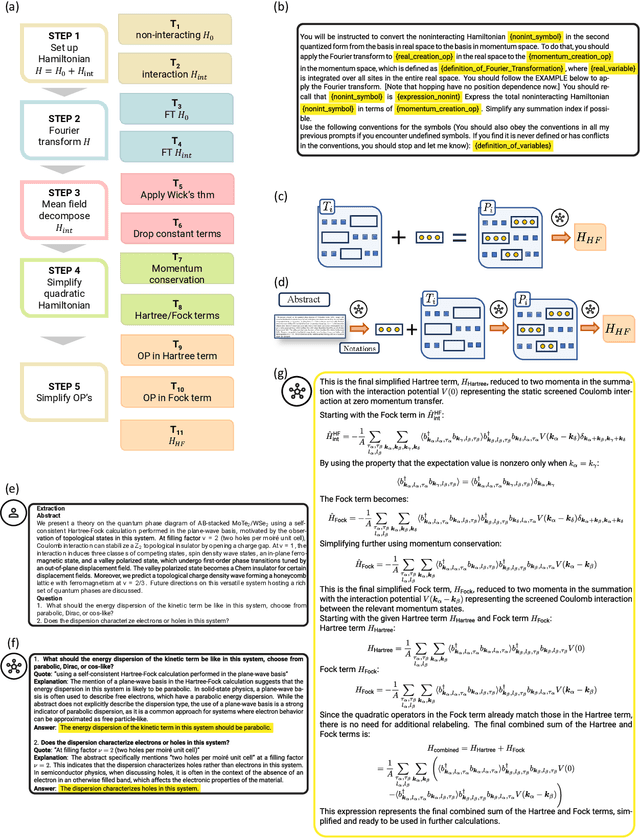

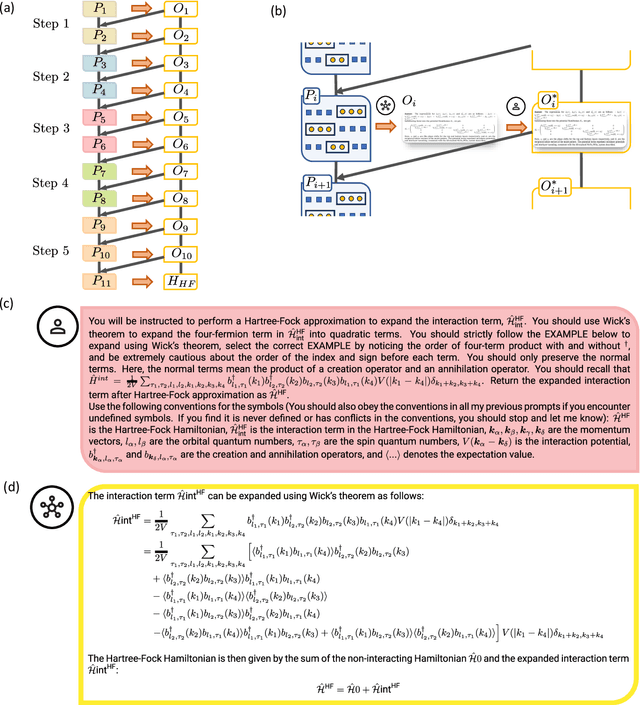

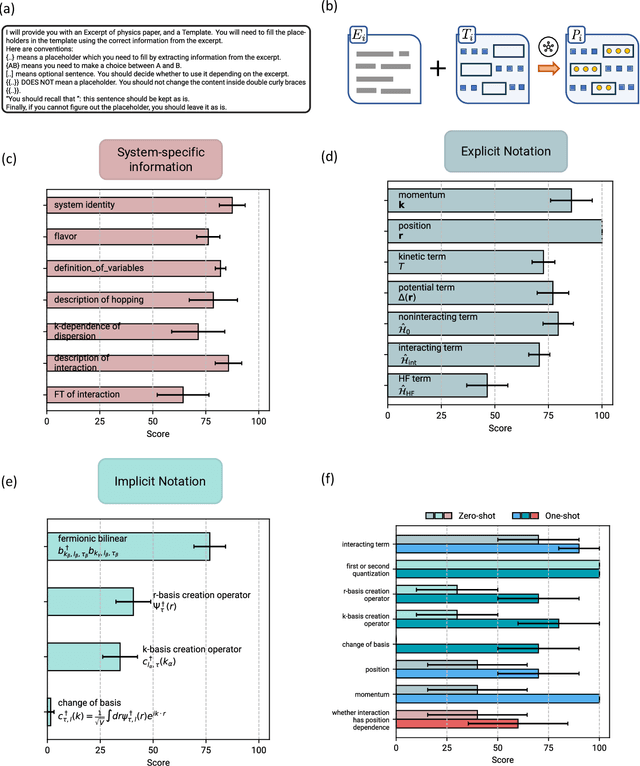

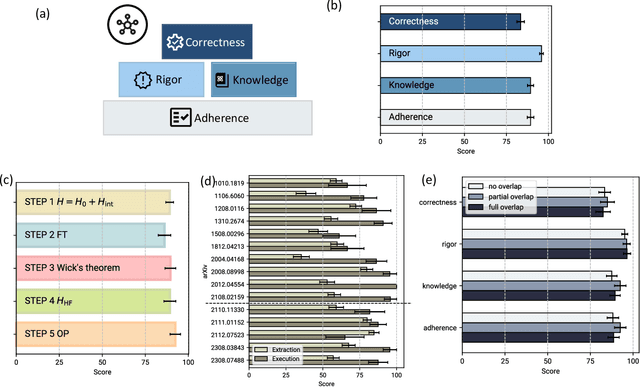

Abstract:Large language models (LLMs) have demonstrated an unprecedented ability to perform complex tasks in multiple domains, including mathematical and scientific reasoning. We demonstrate that with carefully designed prompts, LLMs can accurately carry out key calculations in research papers in theoretical physics. We focus on a broadly used approximation method in quantum physics: the Hartree-Fock method, requiring an analytic multi-step calculation deriving approximate Hamiltonian and corresponding self-consistency equations. To carry out the calculations using LLMs, we design multi-step prompt templates that break down the analytic calculation into standardized steps with placeholders for problem-specific information. We evaluate GPT-4's performance in executing the calculation for 15 research papers from the past decade, demonstrating that, with correction of intermediate steps, it can correctly derive the final Hartree-Fock Hamiltonian in 13 cases and makes minor errors in 2 cases. Aggregating across all research papers, we find an average score of 87.5 (out of 100) on the execution of individual calculation steps. Overall, the requisite skill for doing these calculations is at the graduate level in quantum condensed matter theory. We further use LLMs to mitigate the two primary bottlenecks in this evaluation process: (i) extracting information from papers to fill in templates and (ii) automatic scoring of the calculation steps, demonstrating good results in both cases. The strong performance is the first step for developing algorithms that automatically explore theoretical hypotheses at an unprecedented scale.

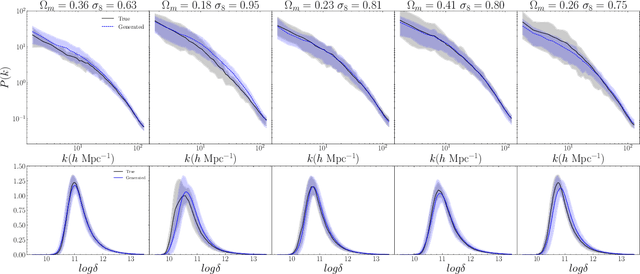

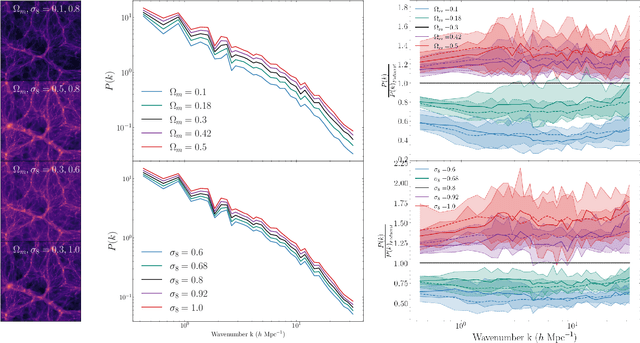

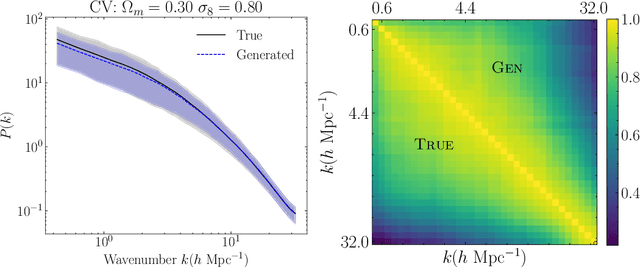

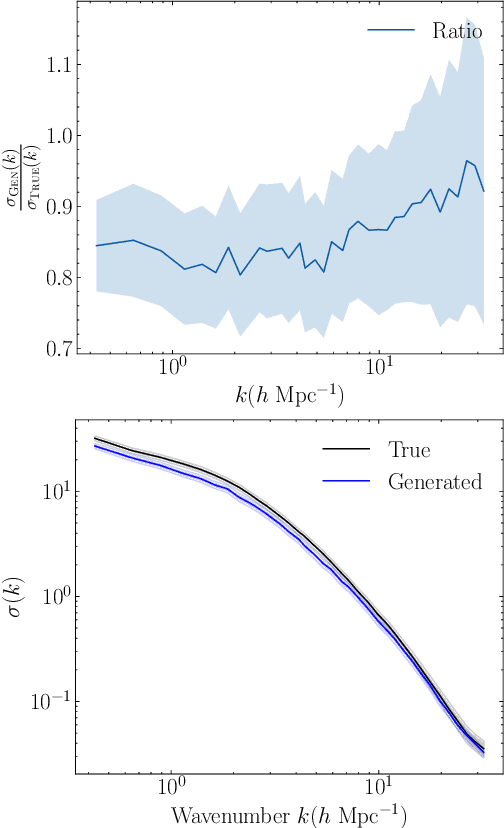

Cosmological Field Emulation and Parameter Inference with Diffusion Models

Dec 12, 2023

Abstract:Cosmological simulations play a crucial role in elucidating the effect of physical parameters on the statistics of fields and on constraining parameters given information on density fields. We leverage diffusion generative models to address two tasks of importance to cosmology -- as an emulator for cold dark matter density fields conditional on input cosmological parameters $\Omega_m$ and $\sigma_8$, and as a parameter inference model that can return constraints on the cosmological parameters of an input field. We show that the model is able to generate fields with power spectra that are consistent with those of the simulated target distribution, and capture the subtle effect of each parameter on modulations in the power spectrum. We additionally explore their utility as parameter inference models and find that we can obtain tight constraints on cosmological parameters.

Probabilistic reconstruction of Dark Matter fields from biased tracers using diffusion models

Nov 14, 2023

Abstract:Galaxies are biased tracers of the underlying cosmic web, which is dominated by dark matter components that cannot be directly observed. The relationship between dark matter density fields and galaxy distributions can be sensitive to assumptions in cosmology and astrophysical processes embedded in the galaxy formation models, that remain uncertain in many aspects. Based on state-of-the-art galaxy formation simulation suites with varied cosmological parameters and sub-grid astrophysics, we develop a diffusion generative model to predict the unbiased posterior distribution of the underlying dark matter fields from the given stellar mass fields, while being able to marginalize over the uncertainties in cosmology and galaxy formation.

Can denoising diffusion probabilistic models generate realistic astrophysical fields?

Nov 22, 2022

Abstract:Score-based generative models have emerged as alternatives to generative adversarial networks (GANs) and normalizing flows for tasks involving learning and sampling from complex image distributions. In this work we investigate the ability of these models to generate fields in two astrophysical contexts: dark matter mass density fields from cosmological simulations and images of interstellar dust. We examine the fidelity of the sampled cosmological fields relative to the true fields using three different metrics, and identify potential issues to address. We demonstrate a proof-of-concept application of the model trained on dust in denoising dust images. To our knowledge, this is the first application of this class of models to the interstellar medium.

Supervised Deep Similarity Matching

Mar 10, 2020

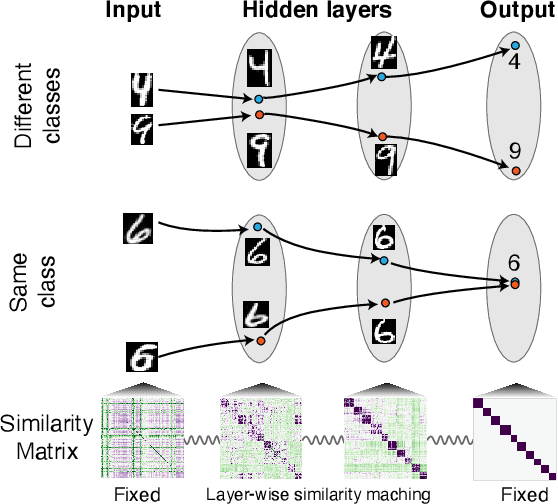

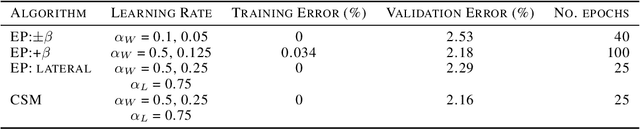

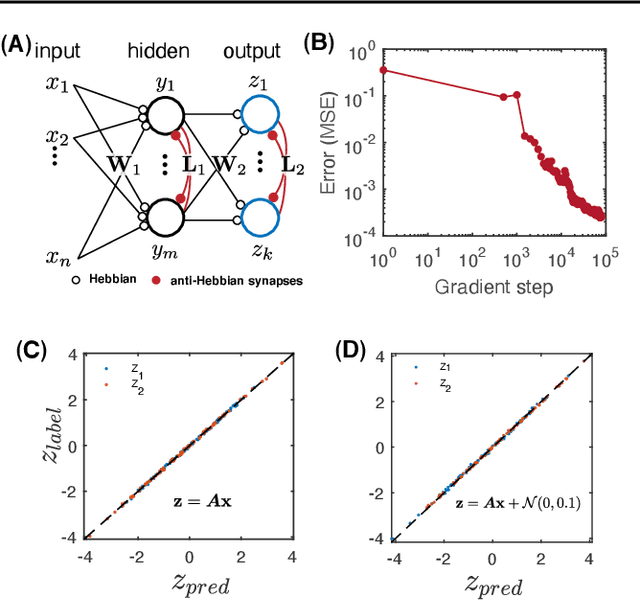

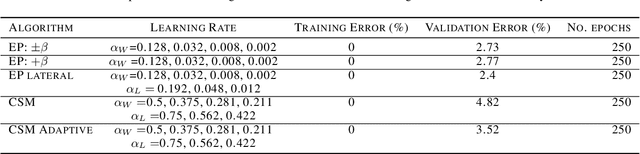

Abstract:We propose a novel biologically-plausible solution to the credit assignment problem, motivated by observations in the ventral visual pathway and trained deep neural networks. In both, representations of objects in the same category become progressively more similar, while objects belonging to different categories become less similar. We use this observation to motivate a layer-specific learning goal in a deep network: each layer aims to learn a representational similarity matrix that interpolates between previous and later layers. We formulate this idea using a supervised deep similarity matching cost function and derive from it deep neural networks with feedforward, lateral and feedback connections, and neurons that exhibit biologically-plausible Hebbian and anti-Hebbian plasticity. Supervised deep similarity matching can be interpreted as an energy-based learning algorithm, but with significant differences from others in how a contrastive function is constructed.
