Naveen Sharma

Community Needs and Assets: A Computational Analysis of Community Conversations

Mar 20, 2024

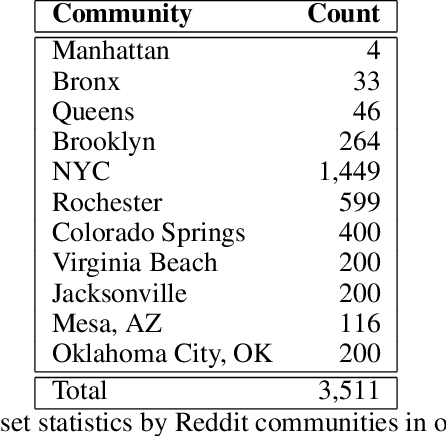

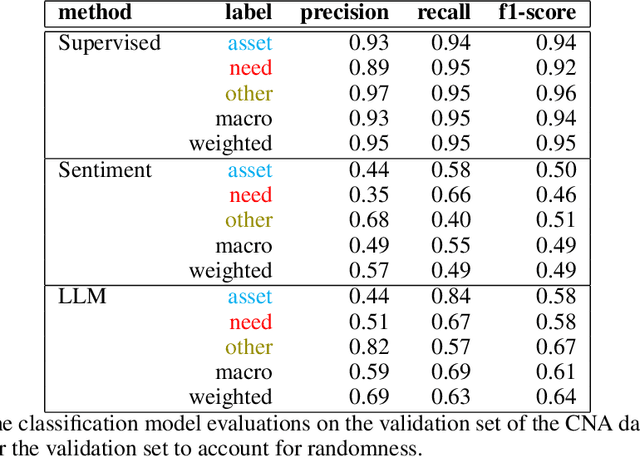

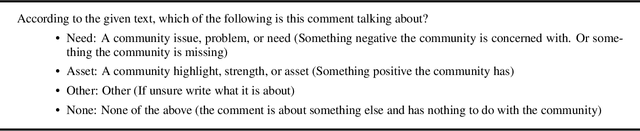

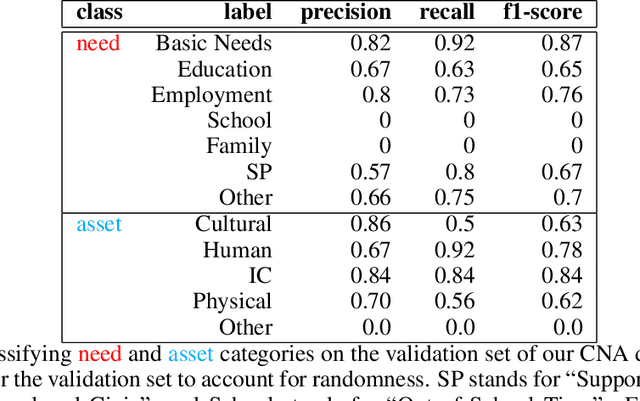

Abstract: A community needs assessment is a tool used by non-profits and government agencies to quantify the strengths and issues of a community, allowing them to allocate their resources better. Such approaches are transitioning towards leveraging social media conversations to analyze the needs of communities and the assets already present within them. However, manual analysis of exponentially increasing social media conversations is challenging. There is a gap in the present literature in computationally analyzing how community members discuss the strengths and needs of the community. To address this gap, we introduce the task of identifying, extracting, and categorizing community needs and assets from conversational data using sophisticated natural language processing methods. To facilitate this task, we introduce the first dataset about community needs and assets consisting of 3,511 conversations from Reddit, annotated using crowdsourced workers. Using this dataset, we evaluate an utterance-level classification model against sentiment classification and a popular large language model (in a zero-shot setting), and find that our model outperforms both baselines with an F1 score of 94%, compared to 49% and 61%, respectively. Furthermore, we observe through our study that conversations about needs have negative sentiments and emotions, while conversations about assets focus on location and entities. The dataset is available at https://github.com/towhidabsar/CommunityNeeds.

Infrastructure Ombudsman: Mining Future Failure Concerns from Structural Disaster Response

Feb 22, 2024

Abstract: Current research concentrates on studying discussions on social media related to structural failures to improve disaster response strategies. However, detecting social web posts discussing concerns about anticipatory failures is under-explored. Channeling such concerns to the appropriate authorities can aid in the prevention and mitigation of potential infrastructural failures. In this paper, we develop an infrastructure ombudsman that automatically detects specific infrastructure concerns. Our work considers several recent structural failures in the US. We present a first-of-its-kind dataset of 2,662 social web instances for this novel task mined from Reddit and YouTube.

Improved Inference of Human Intent by Combining Plan Recognition and Language Feedback

Oct 03, 2023

Abstract: Conversational assistive robots can aid people, especially those with cognitive impairments, to accomplish various tasks such as cooking meals, performing exercises, or operating machines. However, to interact with people effectively, robots must recognize human plans and goals from noisy observations of human actions, even when the user acts sub-optimally. Previous works on Plan and Goal Recognition (PGR) as planning have used hierarchical task networks (HTN) to model the actor/human. However, these techniques are insufficient as they do not support user engagement through natural modes of interaction such as language. Moreover, they have no mechanisms to let users, especially those with cognitive impairments, know of a deviation from their original plan or about any sub-optimal actions taken towards their goal. We propose Dialogue for Goal Recognition (D4GR), a novel framework for plan and goal recognition in partially observable domains that enables a robot to rectify its belief in human progress by asking clarification questions about noisy sensor data and sub-optimal human actions. We evaluate the performance of D4GR over two simulated domains: a kitchen domain and a blocks domain. With language feedback and the world state information in a hierarchical task model, we show that at the highest sensor noise level, the D4GR framework performs 1% better than HTN in goal accuracy in both domains. For plan accuracy, D4GR outperforms HTN by 4% in the kitchen domain and 2% in the blocks domain. The ALWAYS-ASK oracle outperforms our policy by 3% in goal recognition and 7% in plan recognition. D4GR achieves its results while asking 68% fewer questions than an oracle baseline. We also demonstrate a real-world robot scenario in the kitchen domain, validating the improved plan and goal recognition of D4GR in a realistic setting.

Community Learning: Understanding A Community Through NLP for Positive Impact

Oct 11, 2022

Abstract: A post-pandemic world resulted in economic upheaval, particularly for cities' communities. While significant work in NLP4PI focuses on national and international events, there is a gap in bringing such state-of-the-art methods into the community development field. In order to help with community development, we must learn about the communities we develop. To that end, we propose community learning as a computational task of extracting natural language data about the community, transforming it, and loading it into a suitable knowledge graph structure for further downstream applications. We study two particular cases, homelessness and education, to show the visualization capabilities of a knowledge graph, and also discuss other uses such a model can provide.

LotRec: A Recommender for Urban Vacant Lot Conversion

Feb 05, 2022

Abstract: Vacant lots are neglected properties in a city that lead to environmental hazards and a poor standard of living for the community. Thus, reclaiming vacant lots and putting them to productive use is an important consideration for many cities. Given a large number of vacant lots and resource constraints for conversion, two key questions for a city are (1) whether to convert a vacant lot or not; and (2) what to convert a vacant lot to. We seek to provide computational support to answer these questions. To this end, we identify the determinants of a vacant lot conversion and build a recommender based on those determinants. We evaluate our models on real-world vacant lot datasets from the US cities of Philadelphia, PA and Baltimore, MD. Our results indicate that our recommender yields mean F-measures of (1) 90% in predicting whether a vacant lot should be converted or not within a single city, (2) 91% in predicting what a vacant lot should be converted to within a single city, and (3) 85% in predicting whether a vacant lot should be converted or not across two cities.
