Nariman Habili

Automatic Illumination Spectrum Recovery

May 31, 2023

Abstract: We develop a deep learning network to estimate the illumination spectrum of hyperspectral images under various lighting conditions. To this end, a dataset, IllumNet, was created. Images were captured using a Specim IQ camera under various illumination conditions, both indoor and outdoor. Outdoor images were captured in sunny, overcast, and shady conditions and at different times of the day. For indoor images, halogen and LED light sources were used, as well as mixed light sources, mainly halogen or LED combined with fluorescent. The ResNet18 network was employed in this study, but with the 2D kernel changed to a 3D kernel to suit the spectral nature of the data. As well as fitting the actual illumination spectrum well, the predicted illumination spectrum should also be smooth, and this is achieved with a cubic smoothing spline error cost function. Experimental results indicate that the trained model can infer an accurate estimate of the illumination spectrum.
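The trade-off the abstract describes, fitting the measured spectrum while keeping the prediction smooth, can be illustrated with a small sketch. This is not the paper's cost function: it assumes a discrete stand-in for the cubic smoothing spline objective, in which a mean squared fit term is combined with a penalty on squared second differences along the band axis. The function name `smooth_spectrum_loss` and the weight `lam` are hypothetical.

```python
import numpy as np

def smooth_spectrum_loss(pred, target, lam=0.1):
    """Fit term plus a discrete roughness penalty (illustrative assumption only)."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    # Data-fit term: mean squared error between predicted and measured spectra.
    fit = np.mean((pred - target) ** 2)
    # Second differences approximate the second derivative across spectral bands.
    curvature = np.diff(pred, n=2)
    roughness = np.mean(curvature ** 2)
    # lam (hypothetical) balances fidelity against smoothness.
    return fit + lam * roughness

# A smooth prediction and a jagged one, scored against the same target.
target = np.linspace(0.0, 1.0, 10)
jagged = target + 0.1 * (-1.0) ** np.arange(10)
smooth_loss = smooth_spectrum_loss(target, target)
jagged_loss = smooth_spectrum_loss(jagged, target)
```

With this form, a larger `lam` favours smoother predicted spectra at the expense of a looser fit to the measured illumination.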

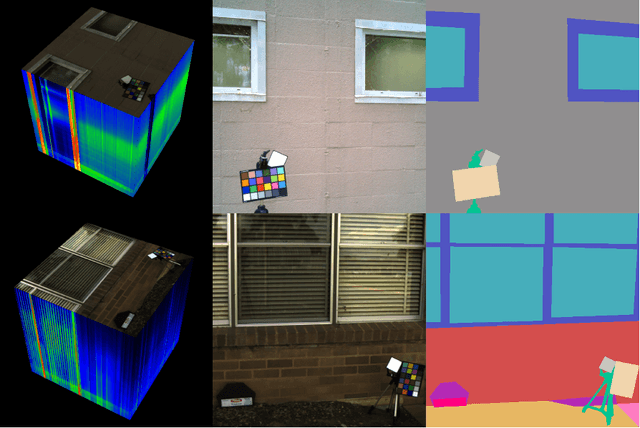

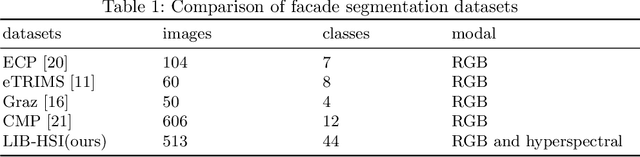

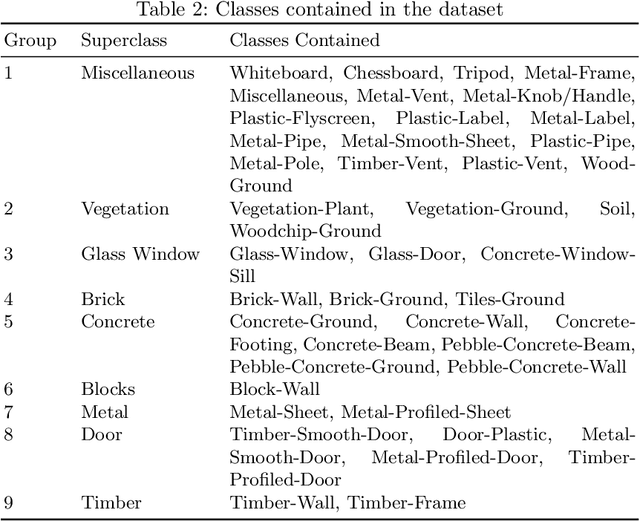

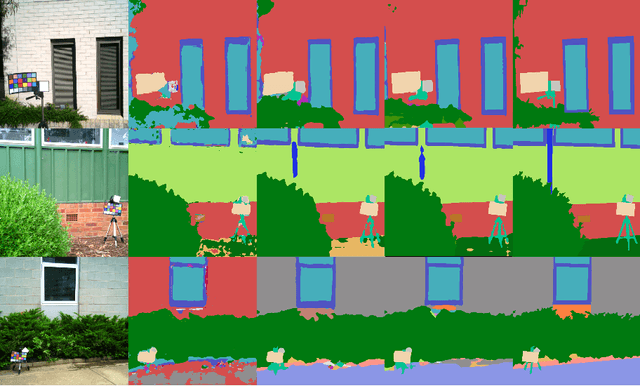

A Hyperspectral and RGB Dataset for Building Facade Segmentation

Dec 06, 2022

Abstract: Hyperspectral Imaging (HSI) provides detailed spectral information and has been utilised in many real-world applications. This work introduces an HSI dataset of building facades in a light industry environment with the aim of classifying different building materials in a scene. The dataset is called the Light Industrial Building HSI (LIB-HSI) dataset. This dataset consists of nine categories and 44 classes. In this study, we investigated deep-learning-based semantic segmentation algorithms on RGB and hyperspectral images to classify various building materials, such as timber, brick and concrete.

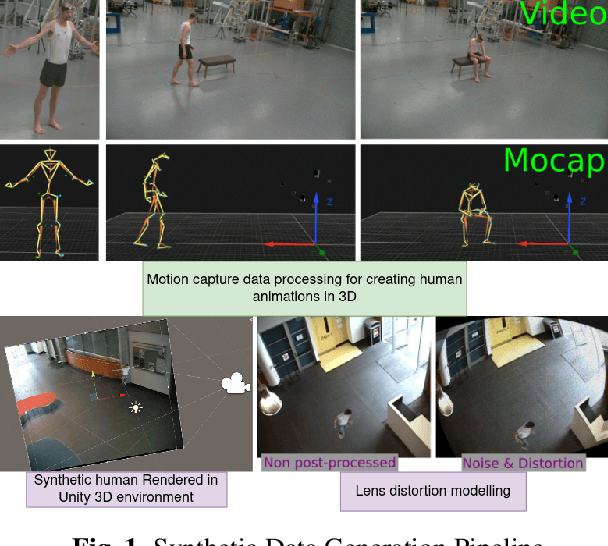

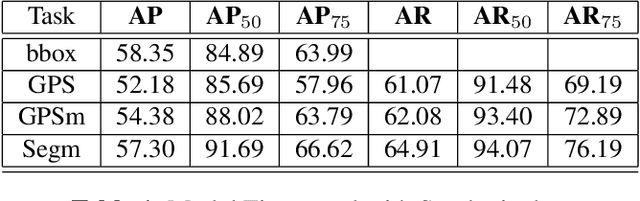

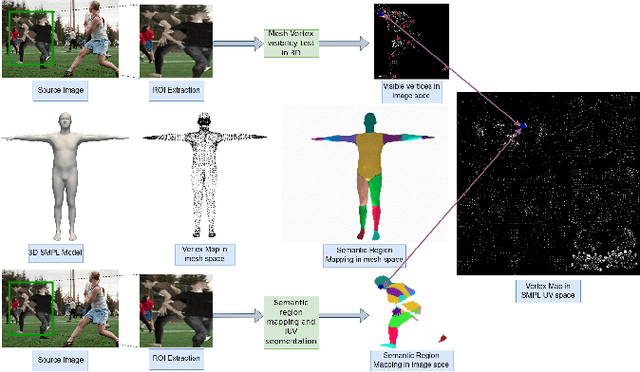

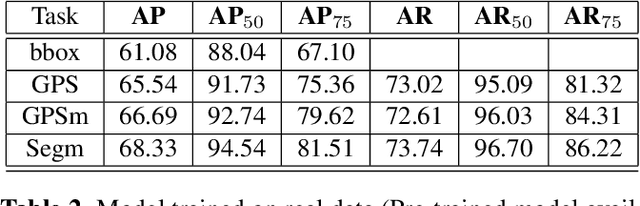

Learning Dense Correspondence from Synthetic Environments

Mar 24, 2022

Abstract: Estimation of human shape and pose from a single image is a challenging task. It is an even more difficult problem to map the identified human shape onto a 3D human model. Existing methods map manually labelled human pixels in real 2D images onto the 3D surface, which is prone to human error, and the sparsity of available annotated data often leads to sub-optimal results. We propose to solve the problem of data scarcity by training 2D-3D human mapping algorithms using automatically generated synthetic data for which exact and dense 2D-3D correspondence is known. Such a learning strategy using synthetic environments has a high generalisation potential towards real-world data. Using different camera parameter variations, background and lighting settings, we created precise ground truth data that constitutes a wider distribution. We evaluate the performance of models trained on synthetic data using the COCO dataset and validation framework. Results show that training 2D-3D mapping network models on synthetic data is a viable alternative to using real data.
