Muhayyuddin Ahmed

Evaluating Deep Learning Assisted Automated Aquaculture Net Pens Inspection Using ROV

Aug 26, 2023

Abstract: In marine aquaculture, inspecting sea cages is an essential activity for managing both the facilities' environmental impact and the quality of the fish development process. Fish escape from fish farms into the open sea due to net damage, which can result in significant financial losses and compromise the nearby marine ecosystem. The traditional inspection system in use relies on visual inspection by expert divers or ROVs, which is not only laborious, time-consuming, and inaccurate but also depends heavily on the operator's expertise and is difficult to verify. This article presents a robotic-based automatic net defect detection system for aquaculture net pens oriented to on-ROV processing and real-time detection. The proposed system takes a video stream from an onboard camera of the ROV, employs a deep learning detector, and segments the defective part of the image from the background under different underwater conditions. The system was first tested using a set of collected images for comparison with state-of-the-art approaches and then using ROV inspection sequences to evaluate its effectiveness in real-world scenarios. Results show that our approach achieves high accuracy even in adverse scenarios and is adequate for real-time processing on embedded platforms.

Autonomous Underwater Robotic System for Aquaculture Applications

Aug 26, 2023

Abstract: Aquaculture is a thriving food-producing sector that supplies over half of global fish consumption. However, aquafarms face significant challenges such as biofouling, vegetation, and holes in their net pens, which have a profound effect on the efficiency and sustainability of fish production. Currently, divers and/or remotely operated vehicles are deployed for inspecting and maintaining aquafarms; this approach is expensive and requires highly skilled human operators. This work aims to develop a robotic-based automatic net defect detection system for aquaculture net pens oriented to on-ROV processing and real-time detection of different aqua-net defects such as biofouling, vegetation, net holes, and plastic. The proposed system integrates both deep learning-based methods for aqua-net defect detection and a feedback control law for the vehicle movement around the aqua-net, to obtain a clear sequence of net images and inspect the status of the net by performing the inspection tasks. This work contributes to the areas of aquaculture inspection, marine robotics, and deep learning, aiming to reduce cost, improve quality, and ease operation.

Vision-Based Autonomous Navigation for Unmanned Surface Vessel in Extreme Marine Conditions

Aug 08, 2023

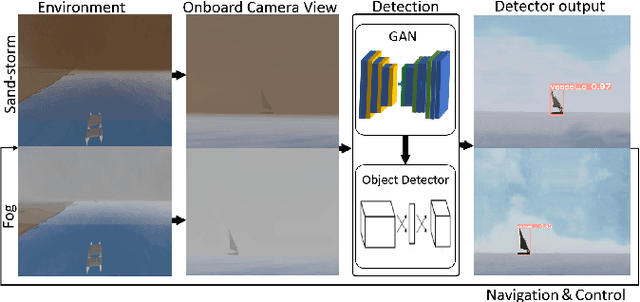

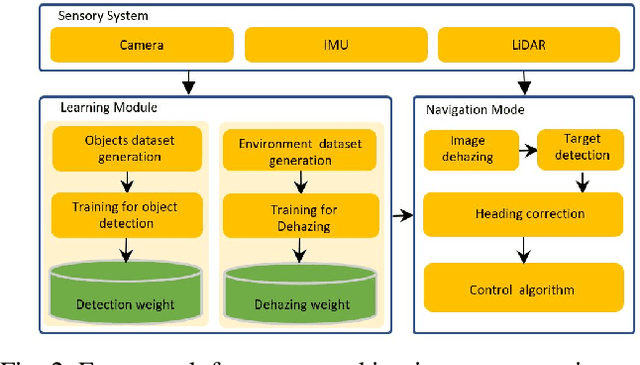

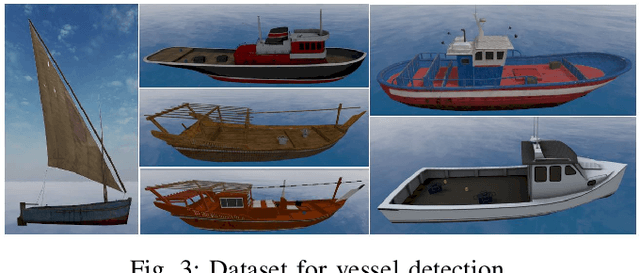

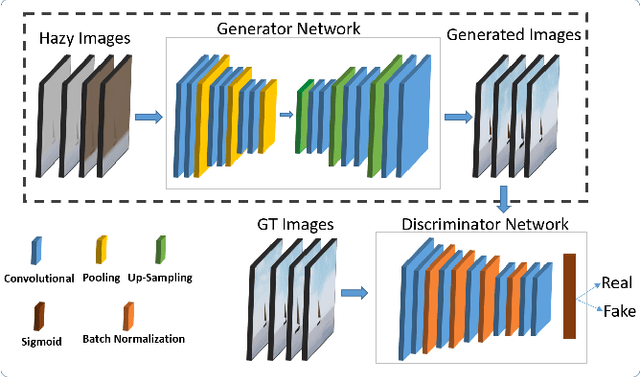

Abstract: Visual perception is an important component for autonomous navigation of unmanned surface vessels (USV), particularly for the tasks related to autonomous inspection and tracking. These tasks involve vision-based navigation techniques to identify the target for navigation. Reduced visibility under extreme weather conditions in marine environments makes it difficult for vision-based approaches to work properly. To overcome these issues, this paper presents an autonomous vision-based navigation framework for tracking target objects in extreme marine conditions. The proposed framework consists of an integrated perception pipeline that uses a generative adversarial network (GAN) to remove noise and highlight the object features before passing them to the object detector (i.e., YOLOv5). The detected visual features are then used by the USV to track the target. The proposed framework has been thoroughly tested in simulation under extremely reduced visibility due to sandstorms and fog. The results are compared with state-of-the-art de-hazing methods across the benchmarked MBZIRC simulation dataset, on which the proposed scheme has outperformed the existing methods across various metrics.
