Muhammad Majid

Upper Limb Movement Execution Classification using Electroencephalography for Brain Computer Interface

Apr 01, 2023

Abstract:Accurate classification of upper limb movements using electroencephalography (EEG) signals has gained significant importance in recent years due to the prevalence of brain-computer interfaces. The upper limbs in the human body are crucial since different skeletal segments combine to make a range of motion that helps us in our trivial daily tasks. Decoding EEG-based upper limb movements can be of great help to people with spinal cord injury (SCI) or other neuro-muscular diseases such as amyotrophic lateral sclerosis (ALS), primary lateral sclerosis, and periodic paralysis. These conditions can manifest in a loss of sensory and motor function, which could make a person reliant on others to provide care in day-to-day activities. We can detect and classify upper limb movement activities, whether executed or imagined, using an EEG-based brain-computer interface (BCI). Toward this goal, we focus our attention on decoding movement execution (ME) of the upper limb in this study. For this purpose, we utilize a publicly available EEG dataset that contains EEG signal recordings from fifteen subjects acquired using a 61-channel EEG device. We propose a method to classify four ME classes for different subjects using spectrograms of the EEG data through pre-trained deep learning (DL) models. Our proposed method of using EEG spectrograms for the classification of ME has shown significant results, where the highest average classification accuracy (for four ME classes) obtained is 87.36%, with one subject achieving the best classification accuracy of 97.03%.

Motor imagery classification using EEG spectrograms

Nov 15, 2022

Abstract:The loss of limb motion arising from damage to the spinal cord is a disability that could affect people while performing their day-to-day activities. The restoration of limb movement would enable people with spinal cord injury to interact with their environment more naturally, and this is where a brain-computer interface (BCI) system could be beneficial. The detection of limb movement imagination (MI) could be significant for such a BCI, where the detected MI can guide the computer system. Using MI detection through electroencephalography (EEG), we can recognize the imagination of movement in a user and translate this into a physical movement. In this paper, we utilize pre-trained deep learning (DL) algorithms for the classification of imagined upper limb movements. We use a publicly available EEG dataset with data representing seven classes of limb movements. We compute the spectrograms of the time series EEG signal and use them as an input to the DL model for MI classification. Our novel approach for the classification of upper limb movements using pre-trained DL algorithms and spectrograms has achieved significantly improved results for seven movement classes. When compared with the recently proposed state-of-the-art methods, our algorithm achieved a significant average accuracy of 84.9% for classifying seven movements.
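
As an illustration of the spectrogram step mentioned in the abstract above, the following is a minimal sketch of a Hann-windowed short-time Fourier transform applied to a single-channel time series. The window length, hop size, sampling rate, and the synthetic 10 Hz test signal are assumptions chosen for the example and are not taken from the paper; the paper's actual spectrogram settings and its pre-trained models are not reproduced here.

```python
import numpy as np

def spectrogram(signal, fs, win_len=128, hop=64):
    """Power spectrogram of a 1-D signal via a Hann-windowed STFT.

    win_len and hop are illustrative defaults, not values from the paper.
    Returns (freqs, times, power) with power shaped (n_freqs, n_frames).
    """
    signal = np.asarray(signal, dtype=float)
    window = np.hanning(win_len)
    n_frames = 1 + (len(signal) - win_len) // hop
    # Slice the signal into overlapping frames and apply the window.
    frames = np.stack([
        signal[i * hop : i * hop + win_len] * window
        for i in range(n_frames)
    ])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + win_len / 2) / fs
    return freqs, times, power.T

# Synthetic stand-in for one EEG channel: 4 s of a 10 Hz sinusoid at 256 Hz.
fs = 256
t = np.arange(0, 4, 1 / fs)
x = np.sin(2 * np.pi * 10 * t)
freqs, times, S = spectrogram(x, fs)
peak_freq = freqs[np.argmax(S.mean(axis=1))]
```

The resulting 2-D array can be rendered as an image (one per channel or per trial) before being passed to an image classifier; how the images are laid out and resized is a design choice not specified in the abstract.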

Mental Stress Detection using Data from Wearable and Non-wearable Sensors: A Review

Feb 07, 2022

Abstract:This paper presents a comprehensive review of methods covering significant subjective and objective human stress detection techniques available in the literature. The methods for measuring human stress responses could include subjective questionnaires (developed by psychologists) and objective markers observed using data from wearable and non-wearable sensors. In particular, wearable sensor-based methods commonly use data from electroencephalography, electrocardiogram, galvanic skin response, electromyography, electrodermal activity, heart rate, heart rate variability, and photoplethysmography both individually and in multimodal fusion strategies. Whereas, methods based on non-wearable sensors include strategies such as analyzing pupil dilation and speech, smartphone data, eye movement, body posture, and thermal imaging. Whenever a stressful situation is encountered by an individual, physiological, physical, or behavioral changes are induced which help in coping with the challenge at hand. A wide range of studies has attempted to establish a relationship between these stressful situations and the response of human beings by using different kinds of psychological, physiological, physical, and behavioral measures. Inspired by the lack of availability of a definitive verdict about the relationship of human stress with these different kinds of markers, a detailed survey about human stress detection methods is conducted in this paper. In particular, we explore how stress detection methods can benefit from artificial intelligence utilizing relevant data from various sources. This review will prove to be a reference document that would provide guidelines for future research enabling effective detection of human stress conditions.

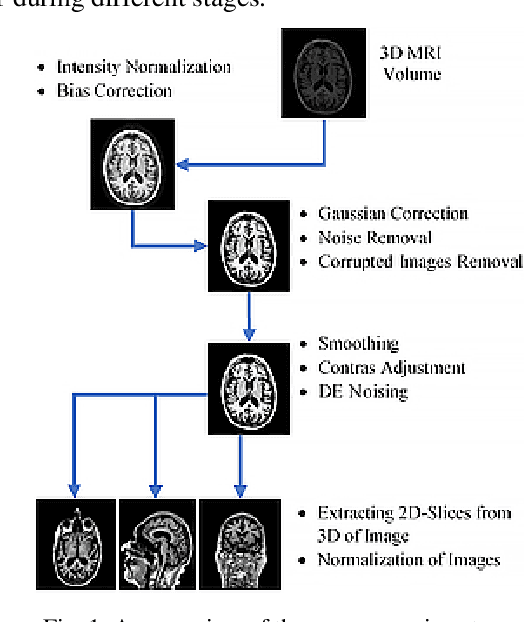

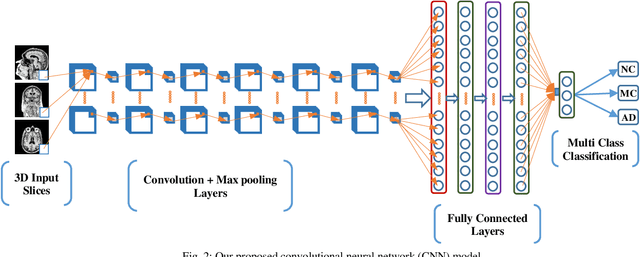

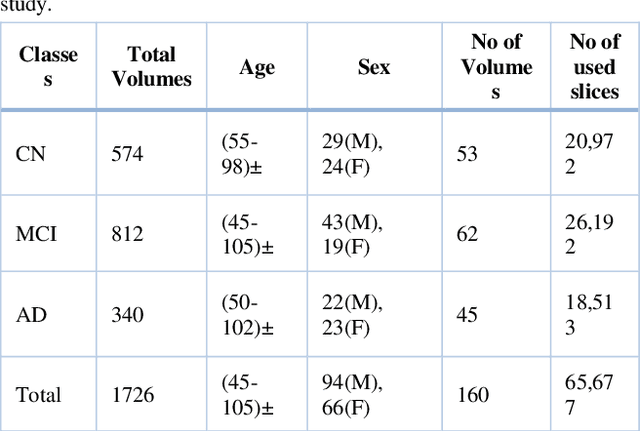

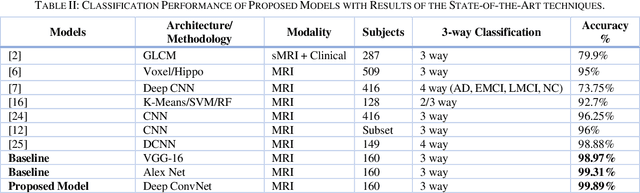

Deep Convolutional Neural Network based Classification of Alzheimer's Disease using MRI data

Jan 08, 2021

Abstract:Alzheimer's disease (AD) is a progressive and incurable neurodegenerative disease which destroys brain cells and causes loss of the patient's memory. Early detection can prevent further damage to the brain cells and hence avoid permanent memory loss. In the past few years, various automatic tools and techniques have been proposed for the diagnosis of AD. Several methods focus on fast, accurate, and early detection of the disease to minimize the loss to the patient's mental health. Machine learning and deep learning techniques have significantly improved medical imaging systems for AD by providing diagnostic performance close to human level, but the main problem faced during multi-class classification is the presence of highly correlated features in the brain structure. In this paper, we propose a smart and accurate way of diagnosing AD based on a two-dimensional deep convolutional neural network (2D-DCNN) using an imbalanced three-dimensional MRI dataset. Experimental results on the Alzheimer's Disease Neuroimaging Initiative magnetic resonance imaging (MRI) dataset confirm that the proposed 2D-DCNN model is superior in terms of accuracy, efficiency, and robustness. The model classifies MRI into three categories (AD, mild cognitive impairment, and normal control) and has achieved 99.89% classification accuracy with imbalanced classes. The proposed model exhibits noticeable improvement in accuracy as compared to the state-of-the-art methods.

Electroencephalography based Classification of Long-term Stress using Psychological Labeling

Jul 17, 2019

Abstract:Stress research is a rapidly emerging area in the field of electroencephalography (EEG) based signal processing. The use of EEG as an objective measure for cost effective and personalized stress management becomes important in particular situations such as the non-availability of mental health facilities. In this study, long-term stress is classified using baseline EEG signal recordings. The labelling for the stress and control groups is performed using two methods: (i) the perceived stress scale score and (ii) expert evaluation. The frequency domain features are extracted from five-channel EEG recordings in addition to the frontal and temporal alpha and beta asymmetries. The alpha asymmetry is computed from four channels and used as a feature. Feature selection is also performed using a t-test to identify statistically significant features for both stress and control groups. We found that support vector machine is best suited to classify long-term human stress when used with alpha asymmetry as a feature. It is observed that expert evaluation based labelling method has improved the classification accuracy up to 85.20%. Based on these results, it is concluded that alpha asymmetry may be used as a potential bio-marker for stress classification, when labels are assigned using expert evaluation.
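
To make the alpha asymmetry feature described above more concrete, here is a minimal sketch of one common formulation: the difference of log alpha-band power between a right-hemisphere and a left-hemisphere channel. The abstract does not state the exact formula, band limits, or channel pairing used by the authors, so the 8 to 13 Hz band, the `ln(right) - ln(left)` definition, and the synthetic signals below are all assumptions.

```python
import numpy as np

def band_power(x, fs, lo, hi):
    """Sum of periodogram power of x within the band [lo, hi) in Hz."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()  # remove the DC offset before the FFT
    spectrum = np.abs(np.fft.rfft(x)) ** 2 / len(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    mask = (freqs >= lo) & (freqs < hi)
    return spectrum[mask].sum()

def alpha_asymmetry(left, right, fs, band=(8.0, 13.0)):
    """ln(alpha power, right) - ln(alpha power, left); an assumed definition."""
    return np.log(band_power(right, fs, *band)) - np.log(band_power(left, fs, *band))

# Synthetic example: the right channel has twice the alpha amplitude of the left,
# so the power ratio is 4 and the asymmetry is approximately ln(4).
fs = 256
t = np.arange(0, 2, 1 / fs)
left = 1.0 * np.sin(2 * np.pi * 10 * t)
right = 2.0 * np.sin(2 * np.pi * 10 * t)
asym = alpha_asymmetry(left, right, fs)
```

A value like this, computed per subject, would be one column of the feature matrix passed to a classifier such as a support vector machine.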

Classification of Perceived Human Stress using Physiological Signals

May 13, 2019

Abstract:In this paper, we present an experimental study for the classification of perceived human stress using non-invasive physiological signals. These include electroencephalography (EEG), galvanic skin response (GSR), and photoplethysmography (PPG). We conducted experiments consisting of steps including data acquisition, feature extraction, and perceived human stress classification. The physiological data of 28 participants are acquired in an eyes-open condition for a duration of three minutes. Four different features are extracted in the time domain from EEG, GSR, and PPG signals, and classification is performed using multiple classifiers including support vector machine, naive Bayes, and multi-layer perceptron (MLP). The best classification accuracy of 75% is achieved by using the MLP classifier. Our experimental results have shown that our proposed scheme outperforms existing perceived stress classification methods, where no stress inducers are used.

Emotion Classification in Response to Tactile Enhanced Multimedia using Frequency Domain Features of Brain Signals

May 13, 2019

Abstract:Tactile enhanced multimedia is generated by synchronizing traditional multimedia clips with an electric heater and a fan to generate hot and cold air effects. The objective is to give viewers a more realistic and immersive feel of the multimedia content. The response to this enhanced multimedia content (mulsemedia) is evaluated in terms of the appreciation/emotion by using human brain signals. We observe and record electroencephalography (EEG) data using a commercially available four channel MUSE headband. A total of 21 participants voluntarily participated in this study for EEG recordings. We extract frequency domain features from five different bands of each EEG channel. Four emotions, namely happy, relaxed, sad, and angry, are classified using a support vector machine in response to the tactile enhanced multimedia. An increased accuracy of 76.19% is achieved when compared to 63.41% by using the time domain features. Our results show that the selected frequency domain features could be better suited for emotion classification in mulsemedia studies.

Medical Image Analysis using Convolutional Neural Networks: A Review

Sep 04, 2017

Abstract:Medical image analysis is the science of analyzing or solving medical problems using different image analysis techniques for effective and efficient extraction of information. It has emerged as one of the top research areas in the field of engineering and medicine. Recent years have witnessed rapid use of machine learning algorithms in medical image analysis. These machine learning techniques are used to extract compact information for improved performance of medical image analysis systems, when compared to the traditional methods that use extraction of handcrafted features. Deep learning is a breakthrough in machine learning techniques that has overwhelmed the field of pattern recognition and computer vision research by providing state-of-the-art results. Deep learning provides different machine learning algorithms that model high level data abstractions and do not rely on handcrafted features. Recently, deep learning methods utilizing deep convolutional neural networks have been applied to medical image analysis, providing promising results. The application area covers the whole spectrum of medical image analysis including detection, segmentation, classification, and computer aided diagnosis. This paper presents a review of the state-of-the-art convolutional neural network based techniques used for medical image analysis.

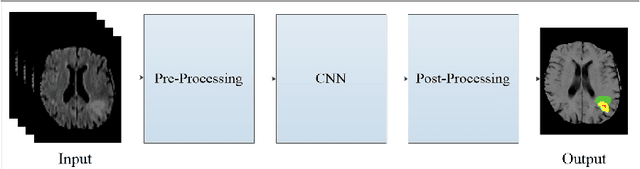

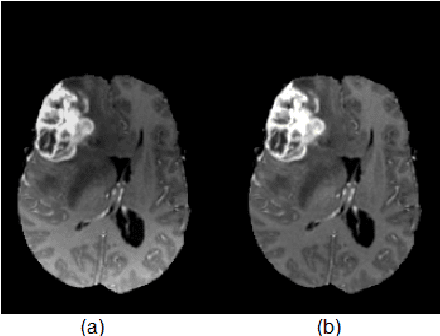

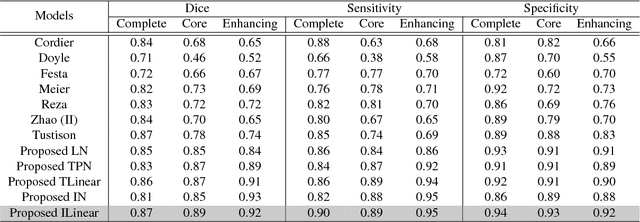

Segmentation of Glioma Tumors in Brain Using Deep Convolutional Neural Network

Aug 01, 2017

Abstract:Detection of brain tumors using a segmentation based approach is critical in cases where the survival of a subject depends on an accurate and timely clinical diagnosis. Gliomas are the most commonly found tumors, having irregular shape and ambiguous boundaries, making them one of the hardest tumors to detect. The automation of brain tumor segmentation remains a challenging problem mainly due to significant variations in its structure. An automated brain tumor segmentation algorithm using deep convolutional neural network (DCNN) is presented in this paper. A patch based approach along with an inception module is used for training the deep network by extracting two co-centric patches of different sizes from the input images. Recent developments in deep neural networks such as drop-out, batch normalization, non-linear activation and inception module are used to build a new ILinear nexus architecture. The module overcomes the over-fitting problem arising due to scarcity of data using a drop-out regularizer. Images are normalized and bias field corrected in the pre-processing step, and then extracted patches are passed through a DCNN, which assigns an output label to the central pixel of each patch. Morphological operators are used for post-processing to remove small false positives around the edges. A two-phase weighted training method is introduced and evaluated using BRATS 2013 and BRATS 2015 datasets, where it improves the performance parameters of state-of-the-art techniques under similar settings.
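
The patch extraction described above (two co-centric patches of different sizes around the pixel to be labelled) could be sketched as follows. The patch sizes, the zero padding at image borders, and the function name `cocentric_patches` are hypothetical choices for this example; the abstract does not give the sizes or border handling used in the paper.

```python
import numpy as np

def cocentric_patches(image, row, col, small=13, large=37):
    """Return (small_patch, large_patch), both centred on image[row, col].

    Sizes must be odd so that each patch has a unique central pixel.
    The image is zero-padded so that border pixels also get full patches.
    """
    if small % 2 == 0 or large % 2 == 0 or small > large:
        raise ValueError("patch sizes must be odd, with small <= large")
    half = large // 2
    padded = np.pad(image, half, mode="constant")
    r, c = row + half, col + half  # centre coordinates in the padded image

    def crop(size):
        h = size // 2
        return padded[r - h : r + h + 1, c - h : c + h + 1]

    return crop(small), crop(large)

# Toy 10x10 "image" whose pixel values encode their position.
image = np.arange(100).reshape(10, 10)
p_small, p_large = cocentric_patches(image, 4, 7, small=3, large=5)
```

In a pipeline of the kind the abstract outlines, each pair of patches would be fed to the network, which predicts a label for the shared central pixel.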

* Submitted to Neurocomputing

Medical Image Retrieval using Deep Convolutional Neural Network

Mar 24, 2017

Abstract:With the widespread use of digital imaging data in hospitals, the size of medical image repositories is increasing rapidly. This causes difficulty in managing and querying these large databases, leading to the need for content based medical image retrieval (CBMIR) systems. A major challenge in CBMIR systems is the semantic gap that exists between the low level visual information captured by imaging devices and the high level semantic information perceived by humans. The efficacy of such systems is more crucial in terms of feature representations that can characterize the high-level information completely. In this paper, we propose a framework of deep learning for CBMIR systems by using a deep Convolutional Neural Network (CNN) that is trained for classification of medical images. An intermodal dataset that contains twenty four classes and five modalities is used to train the network. The learned features and the classification results are used to retrieve medical images. For retrieval, best results are achieved when class based predictions are used. An average classification accuracy of 99.77% and a mean average precision of 0.69 are achieved for the retrieval task. The proposed method is best suited to retrieve multimodal medical images for different body organs.
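
For readers unfamiliar with the retrieval metric quoted above, the following is a minimal sketch of mean average precision (mAP) over ranked retrieval lists. It uses one common variant in which average precision is normalized by the number of relevant items found in the ranked list; the abstract does not say which variant the authors used, and the relevance flags below are made-up illustrative data, not results from the paper.

```python
def average_precision(relevant_flags):
    """Average precision for one query.

    relevant_flags: booleans in ranked order, True if the retrieved item
    at that rank is relevant to the query.
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_relevant in enumerate(relevant_flags, start=1):
        if is_relevant:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    return precision_sum / hits if hits else 0.0

def mean_average_precision(ranked_lists):
    """Mean of the per-query average precision values."""
    return sum(average_precision(flags) for flags in ranked_lists) / len(ranked_lists)

# Two illustrative queries with hand-written relevance judgements.
queries = [
    [True, False, True],  # AP = (1/1 + 2/3) / 2
    [False, True],        # AP = (1/2) / 1
]
map_score = mean_average_precision(queries)
```

In a class-based retrieval setting, a retrieved image would typically be flagged as relevant when its class matches the class of the query image.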

* Submitted to Neurocomputing
