Mudit Agarwal

Delaytron: Efficient Learning of Multiclass Classifiers with Delayed Bandit Feedbacks

May 17, 2022

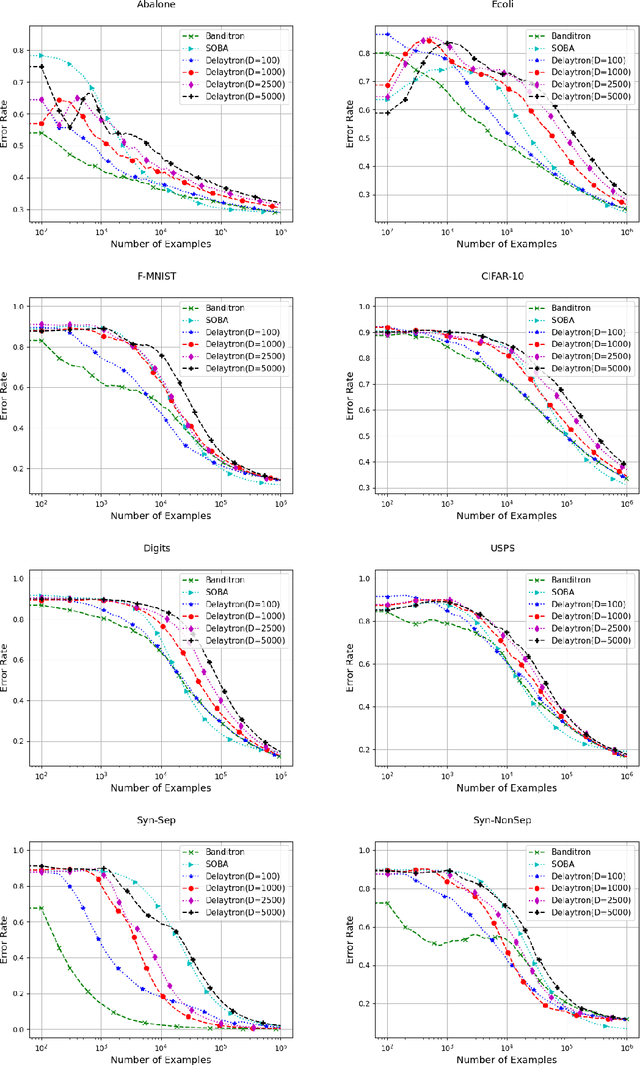

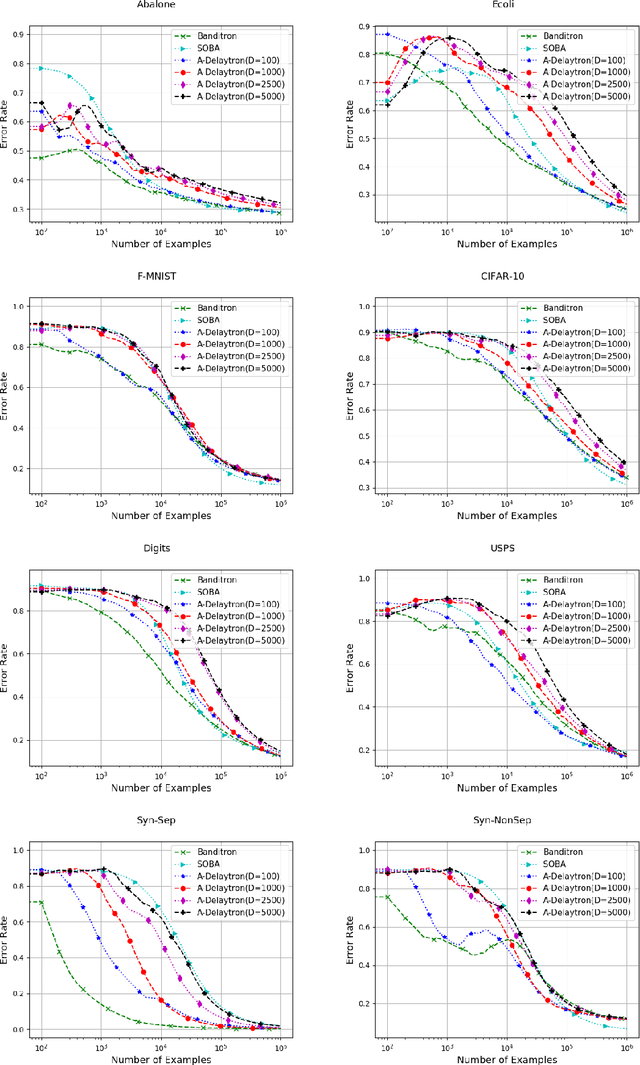

Abstract: In this paper, we present an online algorithm called Delaytron for learning multiclass classifiers using delayed bandit feedback. The sequence of feedback delays $\{d_t\}_{t=1}^T$ is unknown to the algorithm. At the $t$-th round, the algorithm observes an example $\mathbf{x}_t$, predicts a label $\tilde{y}_t$, and receives the bandit feedback $\mathbb{I}[\tilde{y}_t=y_t]$ only $d_t$ rounds later. When $t+d_t>T$, we treat the feedback for the $t$-th round as missing. We show that the proposed algorithm achieves a regret of $\mathcal{O}\left(\sqrt{\frac{2 K}{\gamma}\left[\frac{T}{2}+\left(2+\frac{L^2}{R^2\Vert \mathbf{W}\Vert_F^2}\right)\sum_{t=1}^Td_t\right]}\right)$ when the loss for each missing sample is upper bounded by $L$. When the loss for missing samples is not upper bounded, the regret achieved by Delaytron is $\mathcal{O}\left(\sqrt{\frac{2 K}{\gamma}\left[\frac{T}{2}+2\sum_{t=1}^Td_t+\vert \mathcal{M}\vert T\right]}\right)$, where $\mathcal{M}$ is the set of missing samples in $T$ rounds. These bounds are achieved with a constant step size, which requires knowledge of $T$ and $\sum_{t=1}^Td_t$. For the case when $T$ and $\sum_{t=1}^Td_t$ are unknown, we use a doubling trick for online learning and propose Adaptive Delaytron. We show that Adaptive Delaytron achieves a regret bound of $\mathcal{O}\left(\sqrt{T+\sum_{t=1}^Td_t}\right)$. We show the effectiveness of our approach by experimenting on various datasets and comparing with state-of-the-art approaches.
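The feedback protocol described in this abstract can be illustrated with a small sketch. It only models when feedback arrives and which rounds end up in the missing set $\mathcal{M}$; it is not the Delaytron update itself. The function name `schedule_feedback` and the list-based encoding of the delays are assumptions made for illustration.

```python
from collections import defaultdict


def schedule_feedback(delays):
    """Map each round to the round in which its feedback arrives.

    delays[t - 1] holds d_t for rounds t = 1..T (hypothetical encoding).
    Returns (arrivals, missing), where arrivals[s] lists the rounds whose
    feedback is revealed at round s, and missing is the set of rounds t
    with t + d_t > T, whose feedback never arrives.
    """
    T = len(delays)
    arrivals = defaultdict(list)
    missing = set()
    for t, d in enumerate(delays, start=1):
        if t + d > T:
            # Feedback would arrive after the horizon: treated as missing.
            missing.add(t)
        else:
            arrivals[t + d].append(t)
    return dict(arrivals), missing


arrivals, missing = schedule_feedback([1, 3, 0, 2])
total_delay = sum([1, 3, 0, 2])  # the sum of d_t that appears in the bounds
```

With `T = 4` and delays `[1, 3, 0, 2]`, rounds 2 and 4 fall outside the horizon, so only rounds 1 and 3 ever receive feedback.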

Learning Multiclass Classifier Under Noisy Bandit Feedback

Jun 05, 2020

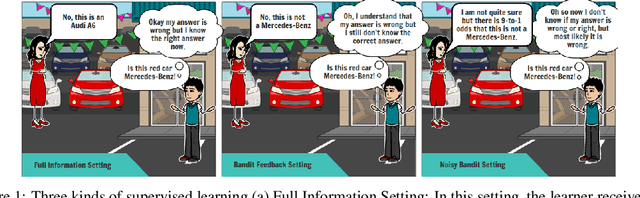

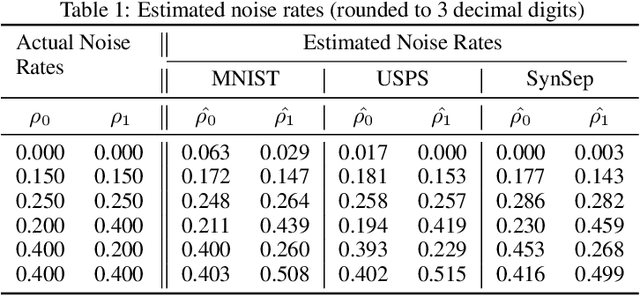

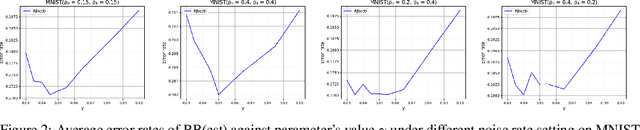

Abstract: This paper addresses the problem of multiclass classification with corrupted or noisy bandit feedback. In this setting, the learner may not receive the true feedback. Instead, it receives feedback that has been flipped with some non-zero probability. We propose a novel approach to deal with noisy bandit feedback, based on the unbiased estimator technique. We further propose an approach that efficiently estimates the noise rates, thus providing an end-to-end framework. We prove that the proposed algorithm enjoys a mistake bound of order $O(\sqrt{T})$. We also carry out extensive experiments on several benchmark datasets to demonstrate that our proposed approach successfully learns the underlying classifier even when using noisy bandit feedback.
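As a rough illustration of the unbiased estimator idea for flipped binary feedback, the sketch below uses the standard correction for class-conditional noise. It is a generic construction, not necessarily the exact estimator used in the paper. The names `rho0` (probability that a true 0 is reported as 1) and `rho1` (probability that a true 1 is reported as 0) are assumptions, and both rates are taken as known here, whereas the paper also estimates them.

```python
def debias_feedback(observed, rho0, rho1):
    """Return an estimate whose expectation equals the true 0/1 feedback.

    Since E[observed | true] = rho0 + true * (1 - rho0 - rho1), subtracting
    rho0 and dividing by (1 - rho0 - rho1) removes the bias.
    """
    if rho0 < 0 or rho1 < 0 or rho0 + rho1 >= 1:
        raise ValueError("need rho0, rho1 >= 0 and rho0 + rho1 < 1")
    return (observed - rho0) / (1.0 - rho0 - rho1)


def expected_estimate(true_feedback, rho0, rho1):
    """Exact expectation of the debiased estimate under the flip model."""
    # Probability that the observed (noisy) feedback equals 1.
    p_one = (1.0 - rho1) if true_feedback == 1 else rho0
    return (p_one * debias_feedback(1, rho0, rho1)
            + (1.0 - p_one) * debias_feedback(0, rho0, rho1))
```

Individual estimates can fall outside $[0, 1]$; only their expectation matches the true feedback, which is the property that lets a learner average out the noise over many rounds.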

A Machine Learning Application for Raising WASH Awareness in the Times of COVID-19 Pandemic

Apr 18, 2020

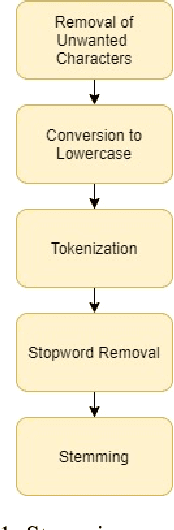

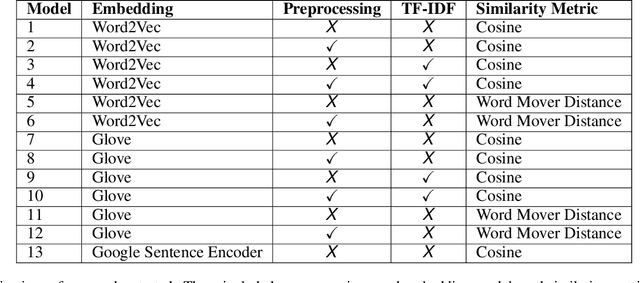

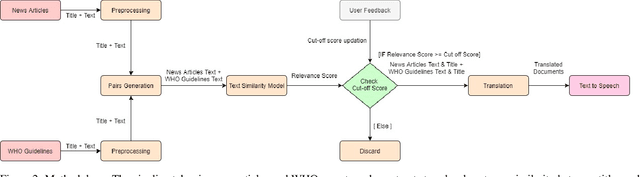

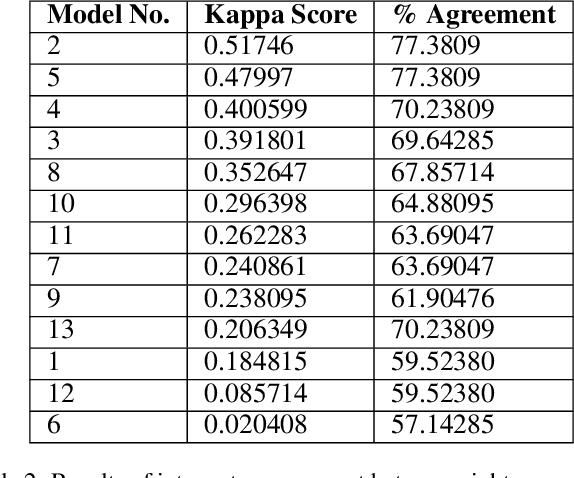

Abstract: Proactive management of an infodemic that grows faster than the underlying epidemic is a modern-day challenge. This requires raising awareness and sensitization with the correct information in order to prevent and contain outbreaks such as the ongoing COVID-19 pandemic. Therefore, there is a fine balance between continuous awareness-raising by providing new information and the risk of misinformation. In this work, we address this gap by creating a life-long learning application that delivers authentic information to users in Hindi and English, the most widely used languages in India. It does this by matching sources of verified and authentic information, such as the WHO reports, against daily news using machine learning and natural language processing. It delivers the narrated content in Hindi using state-of-the-art text-to-speech engines. Finally, the approach allows user input for continuous daily improvement of news feed relevance. We demonstrate this approach for Water, Sanitation, and Hygiene (WASH) information for containment of the COVID-19 pandemic. Thirteen combinations of pre-processing strategies, word embeddings, and similarity metrics were evaluated by eight human users via calculation of agreement statistics. The best-performing combination achieved a Cohen's Kappa of 0.54 and was deployed as On AIr, WashKaro's AI-powered back-end. We introduce a novel way of contact tracing that uses the Bluetooth sensors of an individual's smartphone to automatically record physical interactions with other users. Additionally, the application features a symptom self-assessment tool based on WHO-approved guidelines, along with human-curated and vetted information that reaches the community as audio-visual content in local languages. WashKaro - http://tiny.cc/WashKaro
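For readers unfamiliar with the agreement statistic quoted above, here is a minimal sketch of Cohen's Kappa for two annotators, $\kappa = (p_o - p_e)/(1 - p_e)$, where $p_o$ is the observed agreement and $p_e$ the agreement expected by chance. How the paper pairs the system output with its eight human users is not stated in the abstract, so the two-rater setup and the function name `cohen_kappa` are assumptions.

```python
from collections import Counter


def cohen_kappa(ratings_a, ratings_b):
    """Cohen's Kappa for two equal-length sequences of categorical labels."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    # Observed agreement: fraction of items labeled identically.
    p_o = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    count_a, count_b = Counter(ratings_a), Counter(ratings_b)
    labels = set(count_a) | set(count_b)
    p_e = sum((count_a[lab] / n) * (count_b[lab] / n) for lab in labels)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1.0 - p_e)


# Two hypothetical raters judging four news items as relevant (1) or not (0).
kappa = cohen_kappa([1, 1, 0, 0], [1, 0, 0, 0])
```

In this toy example the raters agree on three of four items ($p_o = 0.75$) while chance agreement is $p_e = 0.5$, giving $\kappa = 0.5$.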
