Learning Multiclass Classifier Under Noisy Bandit Feedback


Jun 05, 2020

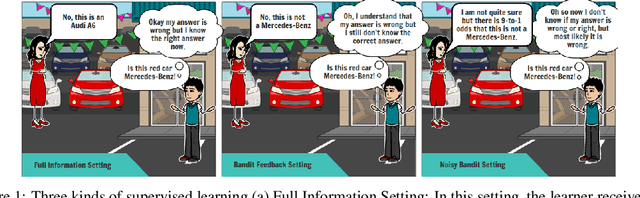

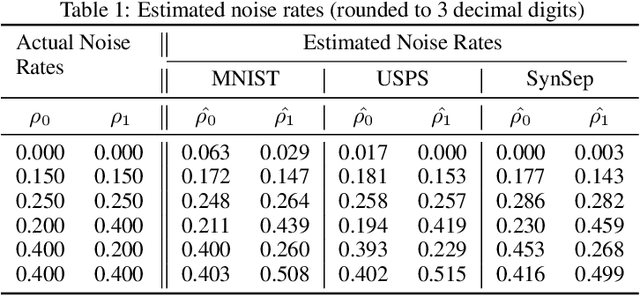

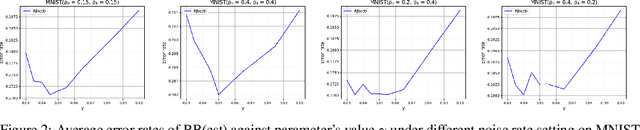

This paper addresses the problem of multiclass classification with corrupted or noisy bandit feedback. In this setting, the learner may not receive the true feedback. Instead, it receives feedback that has been flipped with some non-zero probability. We propose a novel approach to deal with noisy bandit feedback based on the unbiased estimator technique. We further propose an approach that efficiently estimates the noise rates, thus providing an end-to-end framework. We provide a theoretical analysis showing that the proposed algorithm enjoys a mistake bound of order $O(\sqrt{T})$. We also carry out extensive experiments on several benchmark datasets to demonstrate that our approach successfully learns the underlying classifier even from noisy bandit feedback.
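To make the unbiased estimator idea concrete, here is a minimal Python sketch under stated assumptions. It assumes the bandit feedback is a binary signal $z$ (1 if the predicted label is correct, 0 otherwise), that a true 1 is reported as 0 with probability $\rho_+$ and a true 0 is reported as 1 with probability $\rho_-$, that both rates are known, and that $\rho_+ + \rho_- < 1$. Since $E[\tilde{z} \mid z] = \rho_- + z(1 - \rho_+ - \rho_-)$, the quantity $(\tilde{z} - \rho_-)/(1 - \rho_+ - \rho_-)$ is an unbiased estimate of $z$. The learner is a Banditron-style update with the correctness indicator replaced by this estimate. All function names are hypothetical, and this illustrates the general technique rather than the paper's exact algorithm or its noise-rate estimator.

```python
import random


def unbiased_indicator(noisy: int, rho_pos: float, rho_neg: float) -> float:
    """Unbiased estimate of the true 0/1 feedback from a possibly flipped one.

    Assumed noise model (hypothetical naming):
      rho_pos = P(report 0 | true feedback 1)
      rho_neg = P(report 1 | true feedback 0)
    """
    if noisy not in (0, 1):
        raise ValueError("noisy feedback must be 0 or 1")
    if rho_pos + rho_neg >= 1.0:
        raise ValueError("need rho_pos + rho_neg < 1")
    return (noisy - rho_neg) / (1.0 - rho_pos - rho_neg)


def expected_estimate(true_feedback: int, rho_pos: float, rho_neg: float) -> float:
    """Exact expectation of unbiased_indicator over the noise; equals true_feedback."""
    p_one = 1.0 - rho_pos if true_feedback == 1 else rho_neg
    return (p_one * unbiased_indicator(1, rho_pos, rho_neg)
            + (1.0 - p_one) * unbiased_indicator(0, rho_pos, rho_neg))


def flip(true_feedback: int, rho_pos: float, rho_neg: float, rng: random.Random) -> int:
    """Simulate the assumed noisy feedback channel."""
    if true_feedback == 1:
        return 0 if rng.random() < rho_pos else 1
    return 1 if rng.random() < rho_neg else 0


def noisy_banditron_step(W, x, y_true, gamma, rho_pos, rho_neg, rng):
    """One Banditron-style round using the unbiased estimate of the feedback.

    W is a k x d weight matrix (list of lists), updated in place.
    Returns the label that was actually played.
    """
    k = len(W)
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    y_star = max(range(k), key=lambda j: scores[j])  # greedy prediction
    # Exploration distribution: mostly y_star, uniform mass gamma / k elsewhere.
    probs = [gamma / k] * k
    probs[y_star] += 1.0 - gamma
    y_hat = rng.choices(range(k), weights=probs)[0]
    # Environment reveals only (noisy) correctness of y_hat, never y_true.
    noisy_fb = flip(1 if y_hat == y_true else 0, rho_pos, rho_neg, rng)
    z_hat = unbiased_indicator(noisy_fb, rho_pos, rho_neg)
    # Banditron update with 1[y_hat == y_true] replaced by z_hat.
    for i, xi in enumerate(x):
        W[y_hat][i] += (z_hat / probs[y_hat]) * xi
        W[y_star][i] -= xi
    return y_hat
```

Because the estimate is negative when the reported feedback is 0 and exceeds 1 when it is 1, each update is correct only in expectation over the noise. Individual steps are therefore noisier than in the noise-free Banditron, which is the price paid for unbiasedness.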
