Mohan Gurusamy

National University of Singapore

UniNet: A Unified Multi-granular Traffic Modeling Framework for Network Security

Mar 06, 2025

Abstract:As modern networks grow increasingly complex--driven by diverse devices, encrypted protocols, and evolving threats--network traffic analysis has become critically important. Existing machine learning models often rely only on a single representation of packets or flows, limiting their ability to capture the contextual relationships essential for robust analysis. Furthermore, task-specific architectures for supervised, semi-supervised, and unsupervised learning lead to inefficiencies in adapting to varying data formats and security tasks. To address these gaps, we propose UniNet, a unified framework that introduces a novel multi-granular traffic representation (T-Matrix), integrating session, flow, and packet-level features to provide comprehensive contextual information. Combined with T-Attent, a lightweight attention-based model, UniNet efficiently learns latent embeddings for diverse security tasks. Extensive evaluations across four key network security and privacy problems--anomaly detection, attack classification, IoT device identification, and encrypted website fingerprinting--demonstrate UniNet's significant performance gain over state-of-the-art methods, achieving higher accuracy, lower false positive rates, and improved scalability. By addressing the limitations of single-level models and unifying traffic analysis paradigms, UniNet sets a new benchmark for modern network security.

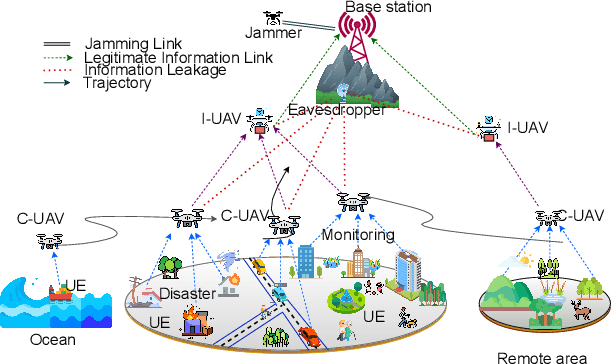

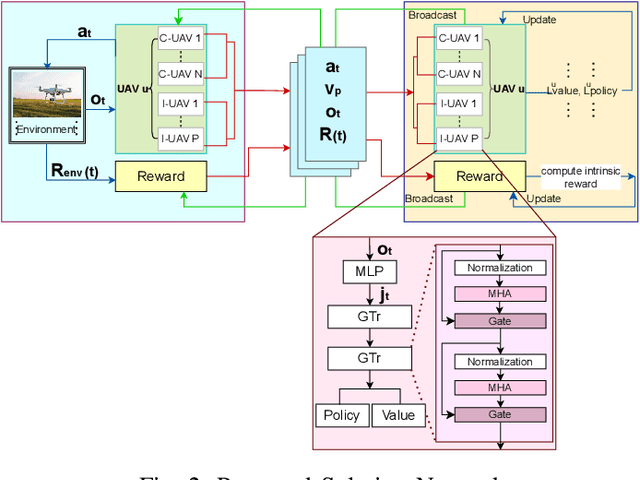

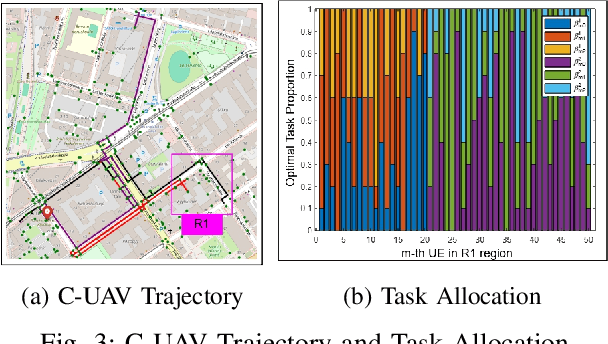

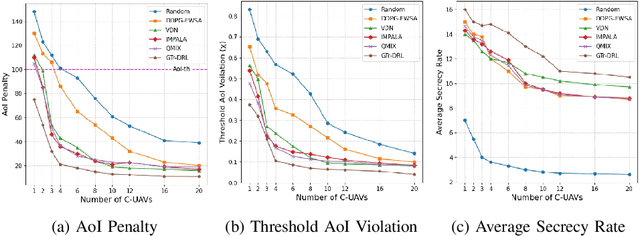

Securing the Skies: An IRS-Assisted AoI-Aware Secure Multi-UAV System with Efficient Task Offloading

Apr 06, 2024

Abstract:Unmanned Aerial Vehicles (UAVs) are integral to sectors such as agriculture, surveillance, and logistics, driven by advancements in 5G. However, existing research lacks a comprehensive approach that addresses both data freshness and security. In this paper, we address the challenges of data freshness and security in modern UAV networks, especially in the context of eavesdropping and jamming. Our framework incorporates exponential Age of Information (AoI) metrics and emphasizes secrecy rate to tackle eavesdropping and jamming threats. We introduce a transformer-enhanced Deep Reinforcement Learning (DRL) approach to optimize task offloading. Comparative analysis with existing algorithms shows that our scheme performs better, indicating its promise for UAV network management.

Reliable and Efficient Data Collection in UAV-based IoT Networks

Nov 09, 2023

Abstract:The Internet of Things (IoT) involves sensors for monitoring and wireless networks for efficient communication. However, resource-constrained IoT devices and limitations in existing wireless technologies hinder its full potential. Integrating Unmanned Aerial Vehicles (UAVs) into IoT networks can address some of these challenges by expanding their coverage, providing security, and bringing computing closer to IoT devices. Nevertheless, effective data collection in UAV-assisted IoT networks is hampered by factors including dynamic UAV behavior, environmental variables, connectivity instability, and security considerations. In this survey, we first explore UAV-based IoT networks, focusing on communication and networking aspects. Next, we cover various UAV-based data collection methods and their advantages and disadvantages, followed by a discussion of performance metrics for data collection. As this article primarily emphasizes reliable and efficient data collection in UAV-assisted IoT networks, we briefly discuss existing research on data accuracy and consistency, network connectivity, and data security and privacy to provide insights into reliable data collection. Additionally, we discuss efficient data collection strategies in UAV-based IoT networks, covering trajectory and path planning, collision avoidance, sensor network clustering, data aggregation, UAV swarm formations, and artificial intelligence for optimization. We also present two use cases of UAVs as a service for enhancing data collection reliability and efficiency. Finally, we discuss future challenges in data collection for UAV-assisted IoT networks.

ZEST: Attention-based Zero-Shot Learning for Unseen IoT Device Classification

Oct 12, 2023

Abstract:Recent research works have proposed machine learning models for classifying IoT devices connected to a network. However, there is still a practical challenge of not having all devices (and hence their traffic) available during the training of a model. This essentially means that, during the operational phase, we need to classify new devices not seen during the training phase. To address this challenge, we propose ZEST, a zero-shot learning (ZSL) framework based on self-attention for classifying both seen and unseen devices. ZEST consists of i) a self-attention based network feature extractor, termed SANE, for extracting latent space representations of IoT traffic, ii) a generative model that trains a decoder using latent features to generate pseudo data, and iii) a supervised model that is trained on the generated pseudo data for classifying devices. We carry out extensive experiments on real IoT traffic data; our experiments demonstrate that i) ZEST achieves significant improvement (in terms of accuracy) over the baselines; ii) ZEST is able to better extract meaningful representations than LSTM, which has been commonly used for modeling network traffic.

GFCL: A GRU-based Federated Continual Learning Framework against Adversarial Attacks in IoV

Apr 23, 2022

Abstract:The integration of ML in 5G-based Internet of Vehicles (IoV) networks has enabled intelligent transportation and smart traffic management. Nonetheless, securing these networks against adversarial attacks is becoming increasingly challenging. Specifically, Deep Reinforcement Learning (DRL) is one of the widely used ML designs in IoV applications. The standard ML security techniques are not effective in DRL, where the algorithm learns to solve sequential decision-making problems through continuous interaction with the environment, and the environment is time-varying, dynamic, and mobile. In this paper, we propose a Gated Recurrent Unit (GRU)-based federated continual learning (GFCL) anomaly detection framework against adversarial attacks in IoV. The objective is to present a lightweight and scalable framework that learns and detects illegitimate behavior without an a priori training dataset consisting of attack samples. We use GRU to predict a future data sequence to analyze and detect illegitimate behavior from vehicles in a federated learning-based distributed manner. We investigate the performance of our framework using real-world vehicle mobility traces. The results demonstrate the effectiveness of our proposed solution for different performance metrics.

Adversarial Attacks Against Deep Reinforcement Learning Framework in Internet of Vehicles

Aug 02, 2021

Abstract:Machine learning (ML) has had a transformative impact on a wide range of vehicular applications. As the use of ML in Internet of Vehicles (IoV) continues to advance, adversarial threats and their impact have become an important subject of research. In this paper, we focus on Sybil-based adversarial threats against a deep reinforcement learning (DRL)-assisted IoV framework and, more specifically, DRL-based dynamic service placement in IoV. We carry out an experimental study with real vehicle trajectories to analyze the impact on service delay and resource congestion under different attack scenarios for the DRL-based dynamic service placement application. We further investigate the impact of the proportion of Sybil-attacked vehicles in the network. The results demonstrate that performance is significantly affected by Sybil-based data poisoning attacks compared to an adversary-free, healthy network scenario.

DRLD-SP: A Deep Reinforcement Learning-based Dynamic Service Placement in Edge-Enabled Internet of Vehicles

Jun 11, 2021

Abstract:The growth of 5G and edge computing has enabled the emergence of the Internet of Vehicles (IoV). It supports different types of services with different resource and service requirements. However, limited resources at the edge, high mobility of vehicles, increasing demand, and dynamicity in service request types have made service placement a challenging task. A typical static placement solution is not effective as it does not consider the traffic mobility and service dynamics. Handling dynamics in IoV for service placement is an important and challenging problem, which is the primary focus of our work in this paper. We propose a Deep Reinforcement Learning-based Dynamic Service Placement (DRLD-SP) framework with the objective of minimizing the maximum edge resource usage and service delay while considering the vehicle's mobility, varying demand, and dynamics in the requests for different types of services. We use SUMO and MATLAB to carry out simulation experiments. The experimental results show that the proposed DRLD-SP approach is effective and outperforms other static and dynamic placement approaches.

Reinforcement Learning-based Dynamic Service Placement in Vehicular Networks

Jun 01, 2021

Abstract:The emergence of technologies such as 5G and mobile edge computing has enabled provisioning of different types of services with different resource and service requirements to the vehicles in a vehicular network. The growing complexity of traffic mobility patterns and dynamics in the requests for different types of services has made service placement a challenging task. A typical static placement solution is not effective as it does not consider the traffic mobility and service dynamics. In this paper, we propose a reinforcement learning-based dynamic (RL-Dynamic) service placement framework to find the optimal placement of services at the edge servers while considering the vehicle's mobility and dynamics in the requests for different types of services. We use SUMO and MATLAB to carry out simulation experiments. In our learning framework, for the decision module, we consider two alternative objective functions: minimizing delay and minimizing edge server utilization. We developed an ILP-based problem formulation for the two objective functions. The experimental results show that 1) compared to static service placement, RL-based dynamic service placement achieves fair utilization of edge server resources and low service delay, and 2) compared to delay-optimized placement, server-utilization-optimized placement utilizes resources more effectively, achieving higher fairness with lower edge-server utilization.

Machine Learning for Security in Vehicular Networks: A Comprehensive Survey

May 31, 2021

Abstract:Machine Learning (ML) has emerged as an attractive and viable technique to provide effective solutions for a wide range of application domains. An important application domain is vehicular networks, wherein ML-based approaches are found to be very useful for addressing various problems. The use of wireless communication between vehicular nodes and/or infrastructure makes these networks vulnerable to different types of attacks. In this regard, ML and its variants are gaining popularity for detecting attacks and dealing with different kinds of security issues in vehicular communication. In this paper, we present a comprehensive survey of ML-based techniques for different security issues in vehicular networks. We first briefly introduce the basics of vehicular networks and different types of communications. Apart from the traditional vehicular networks, we also consider modern vehicular network architectures. We propose a taxonomy of security attacks in vehicular networks and discuss various security challenges and requirements. We classify the ML techniques developed in the literature according to their use in vehicular network applications. We explain the solution approaches and working principles of these ML techniques in addressing various security challenges and provide insightful discussion. The limitations and challenges in using ML-based methods in vehicular networks are discussed. Finally, we present observations and lessons learned before we conclude our work.

Cost-aware Feature Selection for IoT Device Classification

Sep 02, 2020

Abstract:Classification of IoT devices into different types is of paramount importance from multiple perspectives, including security and privacy. Recent works have explored machine learning techniques for fingerprinting (or classifying) IoT devices, with promising results. However, existing works have assumed that the features used for building the machine learning models are readily available or can be easily extracted from the network traffic; in other words, they do not consider the costs associated with feature extraction. In this work, we take a more realistic approach and argue that feature extraction has a cost, and that the costs are different for different features. We also take a step forward from the current practice of considering the misclassification loss as a binary value, and make a case for different losses based on the misclassification performance. Thereby, and more importantly, we introduce the notion of risk for IoT device classification. We define and formulate the problem of cost-aware IoT device classification. This being a combinatorial optimization problem, we develop a novel algorithm to solve it in a fast and effective way using the Cross-Entropy (CE)-based stochastic optimization technique. Using traffic of real devices, we demonstrate the capability of the CE-based algorithm in selecting features with minimal risk of misclassification while keeping the cost of feature extraction within a specified limit.
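
The abstract above does not give the algorithm's details, so the following is only a minimal sketch of the generic cross-entropy method applied to budget-constrained feature selection, not the paper's implementation. The function name `cross_entropy_select`, the toy `costs` and `gains` values, the additive `toy_risk` function, and all hyperparameters are hypothetical and chosen purely for illustration.

```python
import math
import random


def cross_entropy_select(costs, risk, budget, n_samples=200, elite_frac=0.1,
                         iters=30, smooth=0.7, seed=0):
    """Generic cross-entropy search over binary feature masks.

    costs  : per-feature extraction cost (hypothetical units)
    risk   : callable mapping a mask (list of bool) to a risk value to minimize
    budget : maximum total extraction cost allowed
    """
    rng = random.Random(seed)
    n = len(costs)
    p = [0.5] * n                      # inclusion probability per feature
    n_elite = max(1, int(n_samples * elite_frac))
    best_mask, best_score = None, math.inf

    for _ in range(iters):
        scored = []
        for _ in range(n_samples):
            # Sample a candidate feature subset from the current distribution.
            mask = [rng.random() < p[i] for i in range(n)]
            total_cost = sum(c for c, m in zip(costs, mask) if m)
            # Over-budget subsets are treated as infeasible.
            score = risk(mask) if total_cost <= budget else math.inf
            scored.append((score, mask))

        scored.sort(key=lambda t: t[0])
        elite = [m for s, m in scored[:n_elite] if s < math.inf]
        if not elite:
            continue                   # no feasible sample this round

        if scored[0][0] < best_score:
            best_score, best_mask = scored[0]

        # Move each inclusion probability toward its frequency in the elite set.
        for i in range(n):
            freq = sum(m[i] for m in elite) / len(elite)
            p[i] = smooth * freq + (1 - smooth) * p[i]

    return best_mask, best_score


# Toy problem (all numbers are made up for illustration).
costs = [4, 3, 2, 1, 5]
gains = [0.30, 0.25, 0.10, 0.05, 0.20]
budget = 8


def toy_risk(mask):
    # Assumed additive model: each selected feature lowers the risk by its gain.
    return 1.0 - sum(g for g, m in zip(gains, mask) if m)


mask, score = cross_entropy_select(costs, toy_risk, budget)
print("selected features:", [i for i, m in enumerate(mask) if m])
print("risk:", round(score, 3))
```

In a realistic setting, `risk` would be estimated by training and evaluating a classifier on the selected features with a non-binary misclassification loss; the additive toy model here only keeps the sketch self-contained.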
