Mirko Raković

Learning Motor Resonance in Human-Human and Human-Robot Interaction with Coupled Dynamical System

May 10, 2019

Abstract: Human interaction involves sophisticated non-verbal communication skills, such as understanding the goals and actions of others and coordinating our own actions accordingly. Neuroscience refers to this mechanism as motor resonance: perceiving another person's actions and sensory experiences activates the observer's brain as if (s)he were performing the same actions and having the same experiences. We analyze and model non-verbal cues (arm movements) exchanged between two humans who interact and execute handover actions. The contributions of this paper are the following: (i) computational models, built from recorded motion data, that describe the motor behaviour of each actor in action-in-interaction situations; (ii) a computational model that captures the behaviour of the "giver" and the "receiver" during an object handover by coupling the arm motion of both actors; and (iii) the embedding of these models in the iCub robot for both action execution and action recognition. Our results show that (i) the robot can interpret human arm motion and recognize handover actions, and (ii) it can behave in a "human-like" manner to receive the object of a recognized handover action.
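
As an illustration of what coupling the arm motion of both actors can mean, here is a minimal sketch that assumes first-order linear dynamical systems; the paper's actual models are learned from recorded motion data and are not reproduced here. The giver's hand is attracted to a handover point, and the receiver's hand is attracted to the giver's current hand position, so the receiver's motion is driven by the giver's. The function name `simulate_handover`, the gains `alpha` and `beta`, and all positions are hypothetical.

```python
import numpy as np

def simulate_handover(x_giver0, x_receiver0, x_handover,
                      alpha=2.0, beta=4.0, dt=0.01, steps=1000):
    """Euler-integrate a hypothetical coupled first-order dynamical system.

    Giver:    dx_g/dt = -alpha * (x_g - x_handover)  (attracted to the handover point)
    Receiver: dx_r/dt = -beta  * (x_r - x_g)         (attracted to the giver's hand)
    """
    x_g = np.asarray(x_giver0, dtype=float)
    x_r = np.asarray(x_receiver0, dtype=float)
    target = np.asarray(x_handover, dtype=float)
    traj_g, traj_r = [x_g.copy()], [x_r.copy()]
    for _ in range(steps):
        dx_g = -alpha * (x_g - target)
        dx_r = -beta * (x_r - x_g)  # coupling term: the receiver tracks the giver
        x_g = x_g + dt * dx_g
        x_r = x_r + dt * dx_r
        traj_g.append(x_g.copy())
        traj_r.append(x_r.copy())
    return np.array(traj_g), np.array(traj_r)

# Hypothetical start positions and handover point (metres).
traj_g, traj_r = simulate_handover([0.0, 0.0, 0.0], [0.8, 0.2, 0.0], [0.4, 0.1, 0.3])
print(np.round(traj_g[-1], 3), np.round(traj_r[-1], 3))
```

Because the receiver tracks the giver's hand rather than a fixed goal, both hands converge to the handover point; a larger `beta` makes the receiver follow the giver more tightly.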

Action Anticipation: Reading the Intentions of Humans and Robots

Aug 10, 2018

Abstract: Humans have the fascinating capacity to process non-verbal visual cues in order to understand and anticipate the actions of other humans. This "intention reading" ability is underpinned by shared motor repertoires and action models, which we use to interpret the intentions of others as if they were our own. We investigate how the different cues contribute to the legibility of human actions during interpersonal interactions. Our first contribution is a publicly available dataset with recordings of human body motion and eye gaze, acquired in an experimental scenario in which an actor interacts with three subjects. From these data, we conducted a human study to analyse the importance of the different non-verbal cues for action perception. As our second contribution, we used the motion/gaze recordings to build a computational model describing the interaction between two persons. As a third contribution, we embedded this model in the controller of an iCub humanoid robot and conducted a second human study, in the same scenario with the robot as the actor, to validate the model's "intention reading" capability. Our results show that it is possible to model the (non-verbal) signals exchanged by humans during interaction, and they show how to incorporate such a mechanism in robotic systems with the twin goal of (i) being able to "read" human action intentions and (ii) acting in a way that is legible by humans.

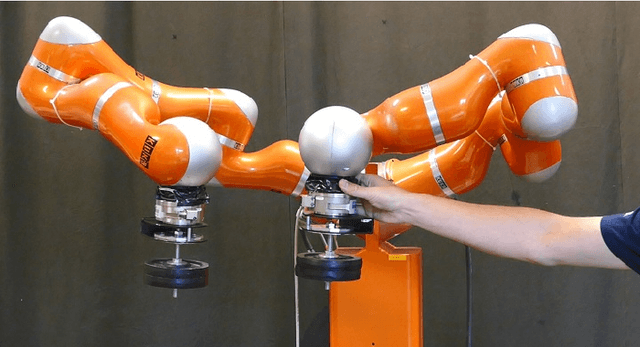

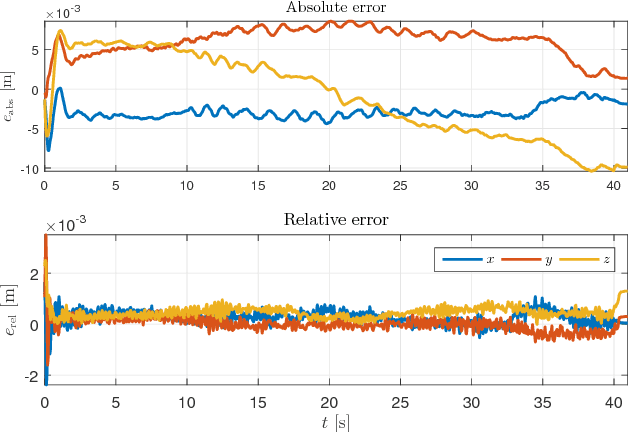

Compliant Movement Primitives in a Bimanual Setting

Jul 14, 2017

Abstract: Compliant Movement Primitives (CMP) effectively address the problem of simultaneously achieving low trajectory errors and compliant control without explicit models of the task. For a single-robot task, this means that the robot accurately follows its trajectory but also behaves compliantly when perturbed. In this paper we extend this kind of model-free behavior to bimanual tasks. When an external perturbation acts on either robot, both robots move in synchrony to maintain their relative posture, and thus do not exert force on the object they are carrying. In other words, they act compliantly in their absolute task but remain stiff in their relative task. To achieve compliant absolute behavior and stiff relative behavior, we combine joint-space CMPs with the well-known symmetric control approach. To reduce the feedback reaction required of symmetric control, we further augment it by copying a virtual force vector at the end-effector, calculated from the measured external joint torques. Real-world results on two Kuka LWR-4 robots in a bimanual setting confirm the applicability of the approach.
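
The virtual-force step can be sketched with the standard static relation tau_ext = J^T F between external joint torques and an end-effector force. This sketch assumes two hypothetical planar 2-link arms whose Jacobians are expressed in a common world frame. It estimates F from arm A's external torques with a pseudo-inverse, then maps the same force to joint torques on arm B so that B is pushed in the same direction as A. It illustrates only the force-copying idea, not the CMP or symmetric-control parts of the paper; the link lengths, joint angles, and helper names are illustrative assumptions.

```python
import numpy as np

def planar_jacobian(q, l1=0.4, l2=0.4):
    """Jacobian (end-effector x, y) of a hypothetical planar 2-link arm."""
    q1, q2 = q
    s1, c1 = np.sin(q1), np.cos(q1)
    s12, c12 = np.sin(q1 + q2), np.cos(q1 + q2)
    return np.array([[-l1 * s1 - l2 * s12, -l2 * s12],
                     [ l1 * c1 + l2 * c12,  l2 * c12]])

def estimate_ee_force(J, tau_ext):
    """Least-squares estimate of F from tau_ext = J^T F."""
    return np.linalg.pinv(J.T) @ tau_ext

def copied_torque(J_other, force):
    """Joint torques that apply the same world-frame force on the other arm."""
    return J_other.T @ force

# Hypothetical joint configurations (rad) of arms A and B.
q_a, q_b = np.array([0.3, 0.9]), np.array([-0.2, 1.1])
J_a, J_b = planar_jacobian(q_a), planar_jacobian(q_b)
f_true = np.array([5.0, -2.0])       # hypothetical push on arm A (N)
tau_ext_a = J_a.T @ f_true            # external torques arm A would measure
f_hat = estimate_ee_force(J_a, tau_ext_a)
tau_b = copied_torque(J_b, f_hat)     # feed-forward torque for arm B
print(f_hat, tau_b)
```

Both example configurations are away from singularities (the determinant of J is l1 * l2 * sin(q2), which is nonzero here), so the pseudo-inverse recovers the applied force exactly; near a singularity the estimate degrades.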

Increased Mobility in Presence of Multiple Contacts - Identifying Contact Configurations that Enable Arbitrary Acceleration of CoM

Aug 09, 2016

Abstract: Planning any motion starts with planning the trajectory of the center of mass (CoM). It is of the highest importance to ensure that the robot will be able to perform the planned trajectory. As the capabilities of humanoid robots increase, cases in which the contacts are spatially distributed must be considered. In this paper, we show that there are contact configurations in which any acceleration of the CoM is feasible. We present a procedure for identifying such configurations and explain its physical meaning. For configurations in which CoM movement is constrained, we show how to find the linear constraint that defines the space of feasible motion. The proposed algorithm has low complexity, and to speed it up further, we show that the whole procedure needs to be run only once each time the contact configuration changes. As the CoM moves, the new constraints can be calculated from the initial one, yielding a significant computational speedup. The methods are illustrated in two simulated scenarios.
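
The abstract does not spell out the identification procedure. As a rough, brute-force illustration, and not the paper's low-complexity algorithm, the sketch below checks CoM-acceleration feasibility with a linear program. It assumes point contacts with friction cones linearized to four edges, unit mass, zero rate of change of angular momentum about the CoM, and gravity g = (0, 0, -9.81). Under these assumptions the contact forces must produce the wrench [u; c x u] with u = a - g. The feasible set of u is a convex cone, so testing the six axis directions is enough to decide whether every acceleration is feasible. The helper names, friction coefficient, and contact layouts are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog

def contact_generators(p, n, mu=0.5):
    """Wrench generators [f; p x f] of a 4-edge linearized friction cone at point p."""
    n = np.asarray(n, float) / np.linalg.norm(n)
    helper = np.array([0.0, 0.0, 1.0]) if abs(n[2]) < 0.9 else np.array([1.0, 0.0, 0.0])
    t1 = np.cross(n, helper)
    t1 /= np.linalg.norm(t1)
    t2 = np.cross(n, t1)
    gens = []
    for t in (t1, -t1, t2, -t2):
        f = n + mu * t  # edge of the linearized friction cone
        gens.append(np.concatenate([f, np.cross(p, f)]))
    return gens

def is_feasible(u, com, contacts, mu=0.5):
    """Can nonnegative contact forces produce the wrench [u; com x u]? (u = a - g)"""
    G = np.column_stack([col for p, n in contacts
                         for col in contact_generators(np.asarray(p, float), n, mu)])
    w = np.concatenate([u, np.cross(com, u)])
    res = linprog(np.zeros(G.shape[1]), A_eq=G, b_eq=w, bounds=(0, None), method="highs")
    return res.status == 0  # status 0: a feasible solution was found

def any_acceleration_feasible(com, contacts, mu=0.5):
    # A convex cone equals R^3 iff it contains +/- e_x, +/- e_y and +/- e_z.
    dirs = np.vstack([np.eye(3), -np.eye(3)])
    return all(is_feasible(d, com, contacts, mu) for d in dirs)

# Hypothetical example: four foot contacts on flat ground, CoM 1 m above them.
feet = [((x, y, 0.0), (0.0, 0.0, 1.0)) for x in (-0.1, 0.1) for y in (-0.1, 0.1)]
com = np.array([0.0, 0.0, 1.0])
print(is_feasible(np.array([0.0, 0.0, 9.81]), com, feet))  # standing still (a = 0): True
print(any_acceleration_feasible(com, feet))                # ground cannot pull down: False
```

With coplanar ground contacts, the unilateral normals rule out some accelerations, so only a constrained set of CoM accelerations remains; contacts that oppose each other (for example, hands braced against walls) are what can make every direction feasible.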