Mirazul Haque

Perturb Your Data: Paraphrase-Guided Training Data Watermarking

Dec 18, 2025

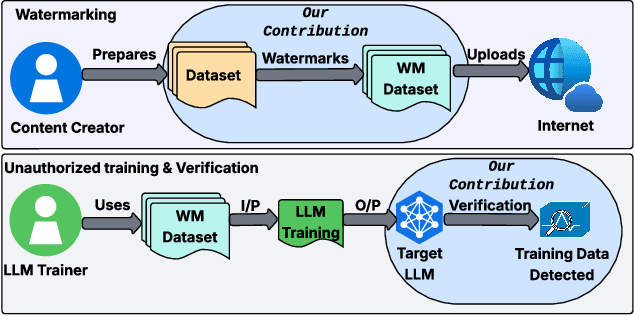

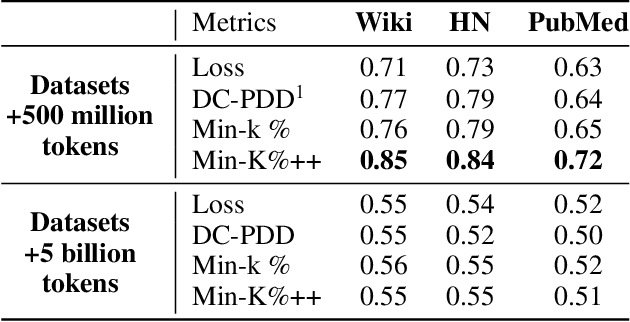

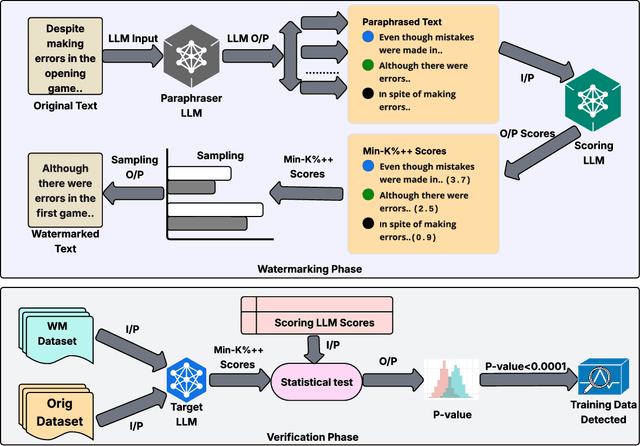

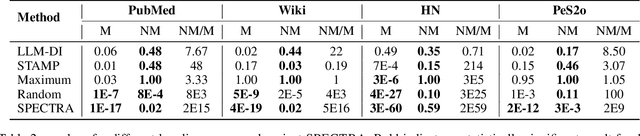

Abstract: Training data detection is critical for enforcing copyright and data licensing, as Large Language Models (LLMs) are trained on massive text corpora scraped from the internet. We present SPECTRA, a watermarking approach that makes training data reliably detectable even when it comprises less than 0.001% of the training corpus. SPECTRA works by paraphrasing text using an LLM and assigning a score based on how likely each paraphrase is, according to a separate scoring model. A paraphrase is chosen so that its score closely matches that of the original text, to avoid introducing any distribution shifts. To test whether a suspect model has been trained on the watermarked data, we compare its token probabilities against those of the scoring model. We demonstrate that SPECTRA achieves a consistent p-value gap of over nine orders of magnitude when detecting data used for training versus data not used for training, which is greater than all baselines tested. SPECTRA equips data owners with a scalable, deploy-before-release watermark that survives even large-scale LLM training.
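The paraphrase selection step described in the abstract can be sketched as follows. This is a minimal illustration, not the authors' implementation: `select_paraphrase` and `toy_score` are hypothetical names, and the hand-written unigram table is an assumed stand-in for the separate scoring model, whose details the abstract does not give.

```python
import math
from typing import Callable, Sequence

# Assumed stand-in for the scoring model: a tiny hand-written unigram table.
UNIGRAM = {"the": 0.2, "a": 0.15, "cat": 0.05, "sat": 0.05,
           "feline": 0.01, "rested": 0.01}
UNKNOWN_PROB = 0.001  # probability assigned to words missing from the table


def toy_score(text: str) -> float:
    """Mean log-probability of the words in `text` under the toy table."""
    words = text.lower().split()
    if not words:
        raise ValueError("text must contain at least one word")
    return sum(math.log(UNIGRAM.get(w, UNKNOWN_PROB)) for w in words) / len(words)


def select_paraphrase(original: str,
                      paraphrases: Sequence[str],
                      score: Callable[[str], float]) -> str:
    """Pick the paraphrase whose score is closest to the original's score."""
    if not paraphrases:
        raise ValueError("at least one paraphrase is required")
    target = score(original)
    return min(paraphrases, key=lambda p: abs(score(p) - target))


candidates = ["the feline rested", "a cat sat", "one kitty reposed"]
chosen = select_paraphrase("the cat sat", candidates, toy_score)
print(chosen)
```

Choosing the candidate with the smallest score gap mirrors the stated goal of avoiding a distribution shift; a real pipeline would replace `toy_score` with likelihoods from an actual language model.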

Efficiency Robustness of Dynamic Deep Learning Systems

Jun 12, 2025

Abstract: Deep Learning Systems (DLSs) are increasingly deployed in real-time applications, including those in resource-constrained environments such as mobile and IoT devices. To address efficiency challenges, Dynamic Deep Learning Systems (DDLSs) adapt inference computation based on input complexity, reducing overhead. While this dynamic behavior improves efficiency, it also introduces new attack surfaces. In particular, efficiency adversarial attacks exploit these dynamic mechanisms to degrade system performance. This paper systematically explores efficiency robustness of DDLSs, presenting the first comprehensive taxonomy of efficiency attacks. We categorize these attacks based on three dynamic behaviors: (i) attacks on dynamic computations per inference, (ii) attacks on dynamic inference iterations, and (iii) attacks on dynamic output production for downstream tasks. Through an in-depth evaluation, we analyze adversarial strategies that target DDLS efficiency and identify key challenges in securing these systems. In addition, we investigate existing defense mechanisms, demonstrating their limitations against increasingly popular efficiency attacks and the necessity for novel mitigation strategies to secure future adaptive DDLSs.
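As one concrete illustration of "dynamic computations per inference", consider an early-exit network that stops as soon as an intermediate classifier is confident enough. The sketch below is an assumption chosen for illustration (the abstract does not name a specific mechanism); `early_exit_depth` and the confidence values are hypothetical.

```python
from typing import Sequence


def early_exit_depth(confidences: Sequence[float], threshold: float = 0.9) -> int:
    """Return how many blocks run before an exit meets the confidence threshold.

    `confidences[i]` is the (hypothetical) confidence of the exit classifier
    attached to block i + 1. If no exit is confident enough, every block runs.
    """
    if not confidences:
        raise ValueError("the network needs at least one block")
    for depth, confidence in enumerate(confidences, start=1):
        if confidence >= threshold:
            return depth
    return len(confidences)


easy_input = [0.95, 0.97, 0.99, 0.99]         # confident at the first exit
hard_input = [0.40, 0.70, 0.93, 0.99]         # confident at the third exit
adversarial_input = [0.40, 0.45, 0.50, 0.55]  # never confident: full depth

for name, conf in [("easy", easy_input), ("hard", hard_input),
                   ("adversarial", adversarial_input)]:
    print(name, early_exit_depth(conf))
```

An efficiency attack in this setting aims to keep every intermediate confidence below the threshold, so the input always pays for the full network.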

Towards Effectively Leveraging Execution Traces for Program Repair with Code LLMs

May 07, 2025

Abstract: Large Language Models (LLMs) show promising performance on various programming tasks, including Automatic Program Repair (APR). However, most approaches to LLM-based APR are limited to the static analysis of the programs, while disregarding their runtime behavior. Inspired by knowledge-augmented NLP, in this work, we aim to remedy this potential blind spot by augmenting standard APR prompts with program execution traces. We evaluate our approach using the GPT family of models on three popular APR datasets. Our findings suggest that simply incorporating execution traces into the prompt provides a limited performance improvement over trace-free baselines, in only 2 out of 6 tested dataset / model configurations. We further find that the effectiveness of execution traces for APR diminishes as their complexity increases. We explore several strategies for leveraging traces in prompts and demonstrate that LLM-optimized prompts help outperform trace-free prompts more consistently. Additionally, we show trace-based prompting to be superior to finetuning a smaller LLM on a small-scale dataset; and conduct probing studies reinforcing the notion that execution traces can complement the reasoning abilities of the LLMs.

Dynamic Neural Network is All You Need: Understanding the Robustness of Dynamic Mechanisms in Neural Networks

Aug 17, 2023

Abstract: Deep Neural Networks (DNNs) have been used to solve different day-to-day problems. Recently, DNNs have been deployed in real-time systems, and lowering the energy consumption and response time has become the need of the hour. To address this scenario, researchers have proposed incorporating dynamic mechanisms into static DNNs (SDNNs) to create Dynamic Neural Networks (DyNNs) that perform dynamic amounts of computation based on the input complexity. Although incorporating dynamic mechanisms into SDNNs would be preferable in real-time systems, it also becomes important to evaluate how the introduction of dynamic mechanisms impacts the robustness of the models. However, few works have focused on the robustness trade-off between SDNNs and DyNNs. To address this issue, we propose to investigate the robustness of dynamic mechanisms in DyNNs and how dynamic mechanism design impacts the robustness of DyNNs. For that purpose, we evaluate three research questions. These evaluations are performed on three models and two datasets. Through the studies, we find that attack transferability from DyNNs to SDNNs is higher than attack transferability from SDNNs to DyNNs. Also, we find that DyNNs can be used to generate adversarial samples more efficiently than SDNNs. Then, through research studies, we provide insight into the design choices that can increase robustness of DyNNs against attacks generated using a static model. Finally, we propose a novel attack to understand the additional attack surface introduced by the dynamic mechanism and provide design choices to improve robustness against the attack.

SlothSpeech: Denial-of-service Attack Against Speech Recognition Models

Jun 01, 2023

Abstract: Deep Learning (DL) models are now widely used for different speech-related tasks, including automatic speech recognition (ASR). As ASR is being used in different real-time scenarios, it is important that the ASR model remains efficient against minor perturbations to the input. Hence, evaluating efficiency robustness of the ASR model is the need of the hour. We show that popular ASR models like the Speech2Text model and the Whisper model have dynamic computation based on different inputs, causing dynamic efficiency. In this work, we propose SlothSpeech, a denial-of-service attack against ASR models, which exploits the dynamic behaviour of the model. SlothSpeech uses the probability distribution of the output text tokens to generate perturbations to the audio such that efficiency of the ASR model is decreased. We find that SlothSpeech-generated inputs can increase the latency to up to 40 times the latency induced by benign input.

TestAug: A Framework for Augmenting Capability-based NLP Tests

Oct 14, 2022

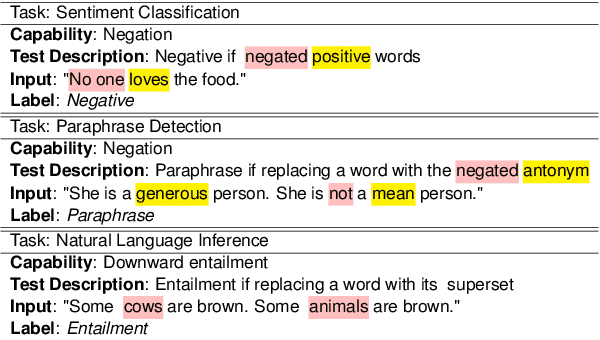

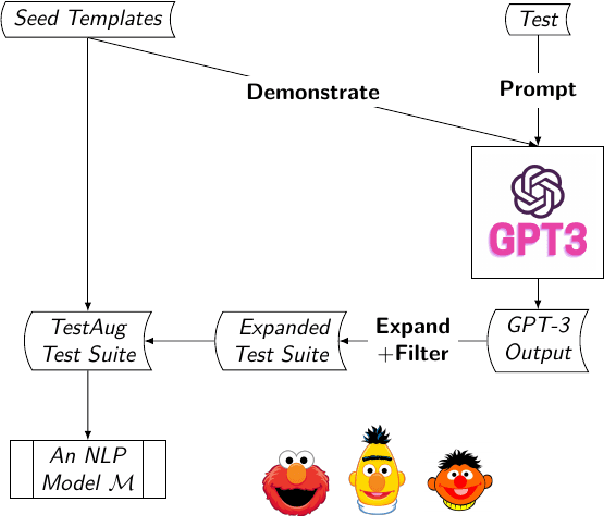

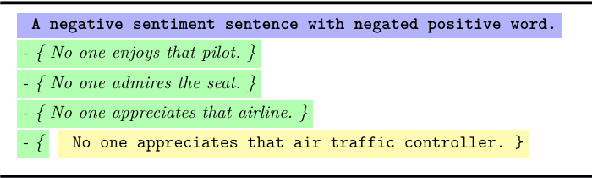

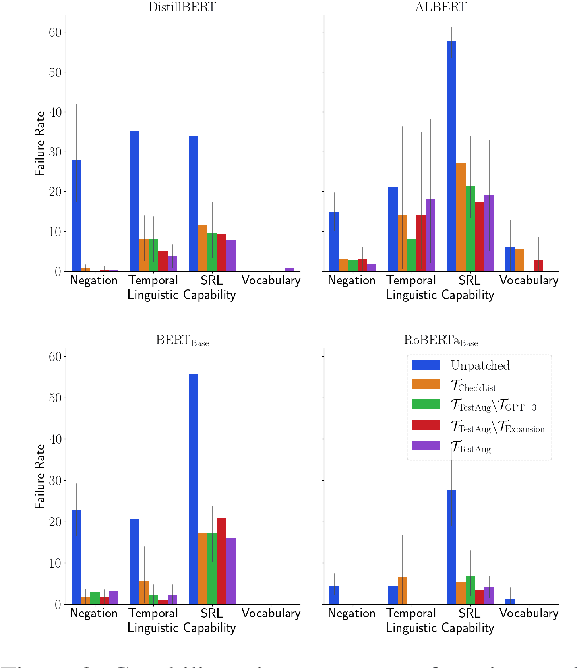

Abstract: The recently proposed capability-based NLP testing allows model developers to test the functional capabilities of NLP models, revealing functional failures that cannot be detected by the traditional held-out mechanism. However, existing work on capability-based testing requires extensive manual effort and domain expertise in creating the test cases. In this paper, we investigate a low-cost approach for test case generation by leveraging the GPT-3 engine. We further propose to use a classifier to remove the invalid outputs from GPT-3 and expand the outputs into templates to generate more test cases. Our experiments show that TestAug has three advantages over the existing work on behavioral testing: (1) TestAug can find more bugs than existing work; (2) the test cases in TestAug are more diverse; and (3) TestAug largely saves the manual effort in creating the test suites. The code and data for TestAug can be found at our project website (https://guanqun-yang.github.io/testaug/) and GitHub (https://github.com/guanqun-yang/testaug).

DeepPerform: An Efficient Approach for Performance Testing of Resource-Constrained Neural Networks

Oct 10, 2022

Abstract: Today, an increasing number of Adaptive Deep Neural Networks (AdNNs) are being used on resource-constrained embedded devices. We observe that, similar to traditional software, redundant computation exists in AdNNs, resulting in considerable performance degradation. The performance degradation is dependent on the input and is referred to as input-dependent performance bottlenecks (IDPBs). To ensure an AdNN satisfies the performance requirements of resource-constrained applications, it is essential to conduct performance testing to detect IDPBs in the AdNN. Existing neural network testing methods are primarily concerned with correctness testing, which does not involve performance testing. To fill this gap, we propose DeepPerform, a scalable approach to generate test samples to detect the IDPBs in AdNNs. We first demonstrate how the problem of generating performance test samples detecting IDPBs can be formulated as an optimization problem. Following that, we demonstrate how DeepPerform efficiently handles the optimization problem by learning and estimating the distribution of AdNNs' computational consumption. We evaluate DeepPerform on three widely used datasets against five popular AdNN models. The results show that DeepPerform generates test samples that cause more severe performance degradation (FLOPs: increase up to 552%). Furthermore, DeepPerform is substantially more efficient than the baseline methods in generating test inputs (runtime overhead: only 6-10 milliseconds).

NMTSloth: Understanding and Testing Efficiency Degradation of Neural Machine Translation Systems

Oct 07, 2022

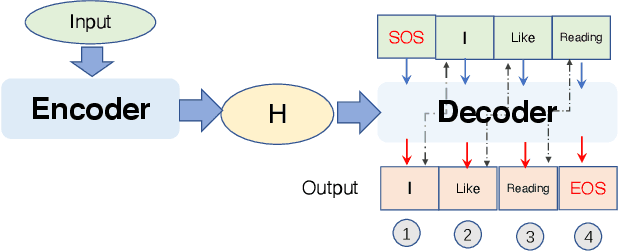

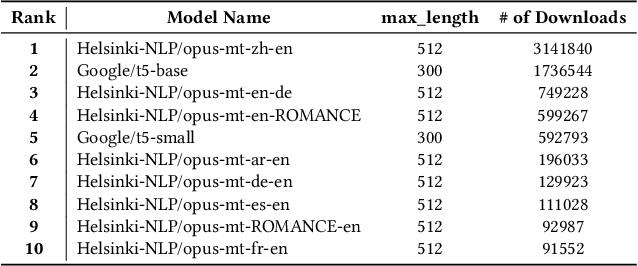

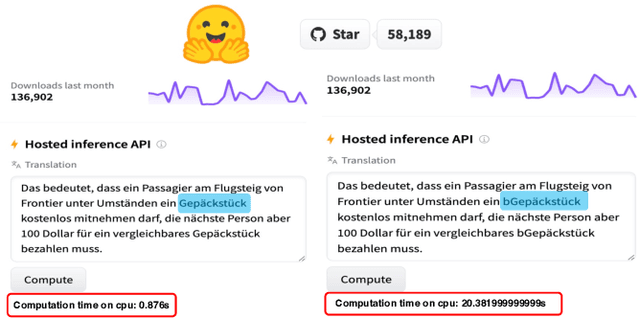

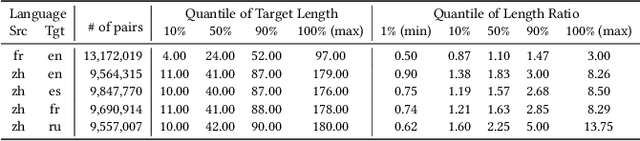

Abstract: Neural Machine Translation (NMT) systems have received much recent attention due to their human-level accuracy. While existing works mostly focus on either improving accuracy or testing accuracy robustness, the computation efficiency of NMT systems, which is of paramount importance due to often vast translation demands and real-time requirements, has surprisingly received little attention. In this paper, we make the first attempt to understand and test potential computation efficiency robustness in state-of-the-art NMT systems. By analyzing the working mechanism and implementation of 1455 publicly accessible NMT systems, we observe a fundamental property in NMT systems that could be manipulated in an adversarial manner to reduce computation efficiency significantly. Our key motivation is to generate test inputs that could sufficiently delay the generation of EOS such that NMT systems would have to go through enough iterations to satisfy the pre-configured threshold. We present NMTSloth, which develops a gradient-guided technique that searches for a minimal and unnoticeable perturbation at character-level, token-level, and structure-level, which sufficiently delays the appearance of EOS and forces these inputs to reach the naturally-unreachable threshold. To demonstrate the effectiveness of NMTSloth, we conduct a systematic evaluation on three publicly available NMT systems: Google T5, AllenAI WMT14, and Helsinki-NLP translators. Experimental results show that NMTSloth can increase NMT systems' response latency and energy consumption by 85% to 3153% and 86% to 3052%, respectively, by perturbing just one character or token in the input sentence. Our case study shows that inputs generated by NMTSloth significantly affect the battery power in real-world mobile devices (i.e., they drain more than 30 times as much battery power as normal inputs).
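The property the abstract refers to, decoding that continues until EOS appears or a pre-configured length threshold is reached, can be illustrated with a toy decoding loop. This is a sketch under assumptions: `greedy_decode`, `benign_model`, and `delayed_eos_model` are hypothetical stand-ins, not any real NMT system or the NMTSloth perturbation search itself.

```python
from typing import Callable, List

EOS = "<eos>"


def greedy_decode(next_token: Callable[[List[str]], str], max_length: int) -> List[str]:
    """Generate tokens until EOS is produced or `max_length` tokens are emitted."""
    if max_length < 1:
        raise ValueError("max_length must be positive")
    output: List[str] = []
    while len(output) < max_length:
        token = next_token(output)
        if token == EOS:
            break
        output.append(token)
    return output


def benign_model(prefix: List[str]) -> str:
    """Toy model that emits EOS after five tokens."""
    return "word" if len(prefix) < 5 else EOS


def delayed_eos_model(prefix: List[str]) -> str:
    """Toy model for a perturbed input: EOS never becomes the chosen token."""
    return "word"


benign_len = len(greedy_decode(benign_model, max_length=200))
delayed_len = len(greedy_decode(delayed_eos_model, max_length=200))
print(benign_len, delayed_len)
```

Because each emitted token costs one decoder iteration, an input that suppresses EOS pushes the loop to the threshold, which is where the latency and energy increase comes from.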

CorrGAN: Input Transformation Technique Against Natural Corruptions

Apr 19, 2022

Abstract: Because of the increasing accuracy of Deep Neural Networks (DNNs) on different tasks, many real-time systems use DNNs. These DNNs are vulnerable to adversarial perturbations and corruptions. Specifically, natural corruptions like fog, blur, contrast, etc. can affect the prediction of a DNN in an autonomous vehicle. In real time, these corruptions need to be detected, and the corrupted inputs need to be denoised so that they can be predicted correctly. In this work, we propose the CorrGAN approach, which can generate benign input when a corrupted input is provided. In this framework, we train a Generative Adversarial Network (GAN) with a novel intermediate output-based loss function. The GAN can denoise the corrupted input and generate benign input. Through experimentation, we show that up to 75.2% of the corrupted misclassified inputs can be classified correctly by the DNN using CorrGAN.

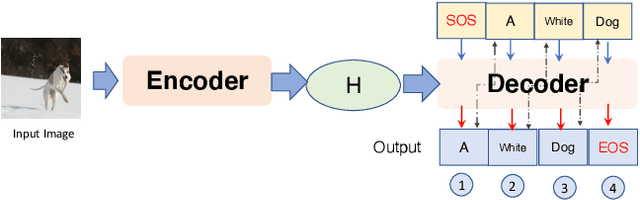

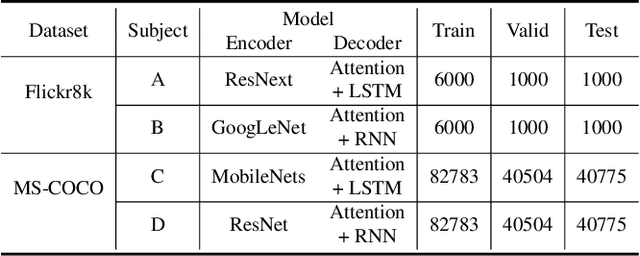

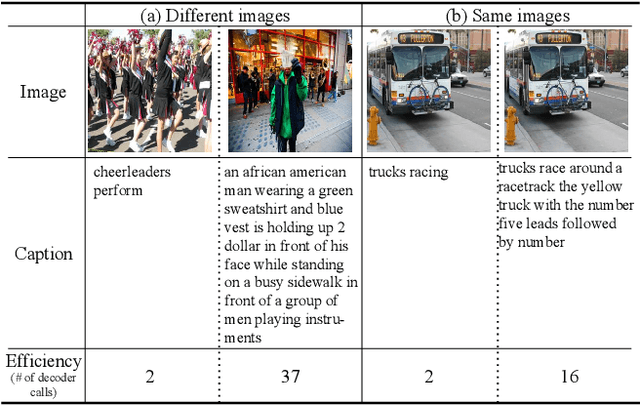

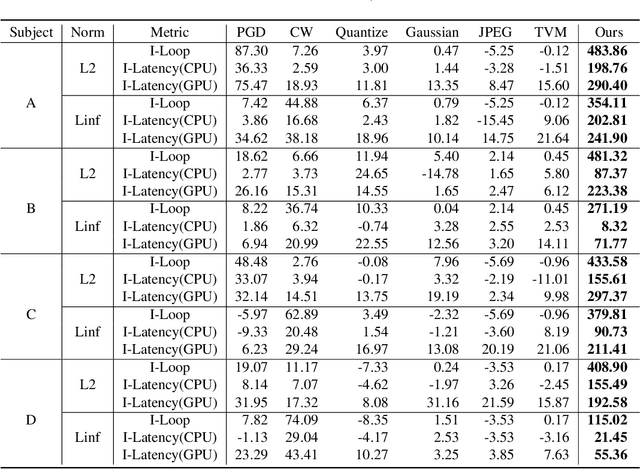

NICGSlowDown: Evaluating the Efficiency Robustness of Neural Image Caption Generation Models

Mar 29, 2022

Abstract: Neural image caption generation (NICG) models have received massive attention from the research community due to their excellent performance in visual understanding. Existing work focuses on improving NICG model accuracy while efficiency is less explored. However, many real-world applications require real-time feedback, which highly relies on the efficiency of NICG models. Recent research observed that the efficiency of NICG models could vary for different inputs. This observation brings in a new attack surface for NICG models, i.e., an adversary might be able to slightly change inputs to cause the NICG models to consume more computational resources. To further understand such efficiency-oriented threats, we propose a new attack approach, NICGSlowDown, to evaluate the efficiency robustness of NICG models. Our experimental results show that NICGSlowDown can generate images with human-unnoticeable perturbations that will increase the NICG model latency up to 483.86%. We hope this research could raise the community's concern about the efficiency robustness of NICG models.
