Minwook Kim

CAST: Cluster-Aware Self-Training for Tabular Data

Oct 10, 2023

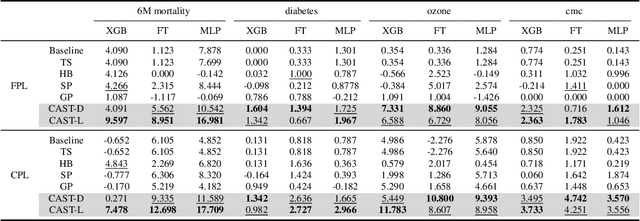

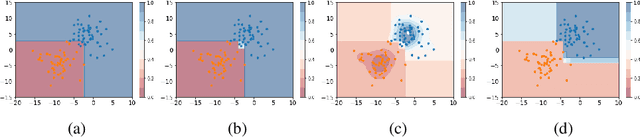

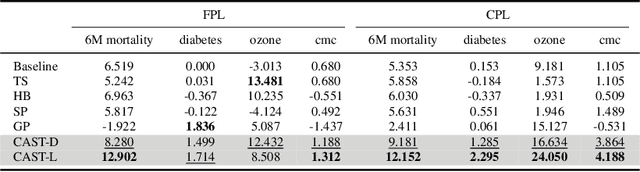

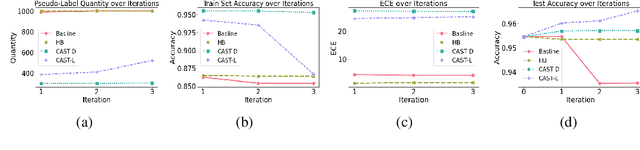

Abstract: Self-training has attracted attention because of its simplicity and versatility, yet it is vulnerable to noisy pseudo-labels. Several studies have proposed successful approaches to tackle this issue, but they diminish the advantages of self-training because they require specific modifications to self-training algorithms or model architectures. Furthermore, most of them are incompatible with gradient boosting decision trees, which dominate the tabular domain. To address this, we revisit the cluster assumption, which states that data samples that are close to each other tend to belong to the same class. Inspired by this assumption, we propose Cluster-Aware Self-Training (CAST) for tabular data. CAST is a simple and universally adaptable approach for enhancing existing self-training algorithms without significant modifications. Concretely, our method regularizes the confidence of the classifier, which represents the value of the pseudo-label, forcing the pseudo-labels in low-density regions to have lower confidence by leveraging prior knowledge for each class within the training data. Extensive empirical evaluations on up to 20 real-world datasets confirm not only the superior performance of CAST but also its robustness in various setups in self-training contexts.
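The idea of lowering pseudo-label confidence in low-density regions can be illustrated with a minimal sketch. This is not the authors' formulation: the abstract does not specify the density model or the scaling rule, so the per-class diagonal Gaussian, the relative-density score, and the function names `fit_class_gaussians`, `density_score`, and `regularize_confidence` are all assumptions made for illustration.

```python
import math

def fit_class_gaussians(X, y):
    """Fit one diagonal Gaussian per class on labeled data (assumed density model)."""
    stats = {}
    for c in sorted(set(y)):
        rows = [x for x, label in zip(X, y) if label == c]
        n, d = len(rows), len(rows[0])
        mean = [sum(r[j] for r in rows) / n for j in range(d)]
        # Small constant keeps the variance strictly positive.
        var = [sum((r[j] - mean[j]) ** 2 for r in rows) / n + 1e-6 for j in range(d)]
        stats[c] = (mean, var)
    return stats

def density_score(x, mean, var):
    """Density of x relative to the class mean: 1.0 at the mean, near 0 far away."""
    return math.exp(-0.5 * sum((xi - m) ** 2 / v for xi, m, v in zip(x, mean, var)))

def regularize_confidence(proba, X_unlabeled, stats, classes):
    """Return (pseudo_label, regularized_confidence) for each unlabeled sample."""
    results = []
    for p, x in zip(proba, X_unlabeled):
        k = max(range(len(p)), key=p.__getitem__)  # index of the predicted class
        label = classes[k]
        mean, var = stats[label]
        # Scale the classifier's confidence by the density score of the predicted class.
        results.append((label, p[k] * density_score(x, mean, var)))
    return results
```

In this sketch, two unlabeled samples that receive the same classifier confidence end up with different regularized confidences if one lies near the labeled samples of its predicted class and the other lies far from them. Any classifier that outputs class probabilities, including a gradient boosting decision tree, could supply `proba`.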

Revisiting Self-Training with Regularized Pseudo-Labeling for Tabular Data

Mar 13, 2023

Abstract: Recent progress in semi- and self-supervised learning has challenged the long-held beliefs that machine learning needs an enormous amount of labeled data and that unlabeled data are irrelevant. Although these methods have been successful on various data types, there is no dominant semi- or self-supervised learning method that generalizes to tabular data (i.e., most of the existing methods require appropriate tabular datasets and architectures). In this paper, we revisit self-training, which can be applied to any kind of algorithm, including the most widely used architecture, the gradient boosting decision tree, and introduce curriculum pseudo-labeling (a state-of-the-art pseudo-labeling technique in the image domain) to the tabular domain. Furthermore, existing pseudo-labeling techniques do not ensure the cluster assumption when computing confidence scores of pseudo-labels generated from unlabeled data. To overcome this issue, we propose a novel pseudo-labeling approach that regularizes the confidence scores based on the likelihoods of the pseudo-labels so that more reliable pseudo-labels, which lie in high-density regions, can be obtained. We exhaustively validate the superiority of our approaches using various models and tabular datasets.
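For readers unfamiliar with self-training, the basic loop can be sketched as follows. This is a generic fixed-threshold version, not the curriculum pseudo-labeling or the regularized approach the abstract describes. The nearest-centroid classifier merely stands in for any model that outputs class probabilities, and the names `fit_centroids`, `predict_proba`, and `self_train`, as well as the `threshold` and `max_rounds` parameters, are hypothetical.

```python
import math

def fit_centroids(X, y):
    """Stand-in classifier: one centroid per class."""
    groups = {}
    for x, label in zip(X, y):
        groups.setdefault(label, []).append(x)
    return {c: [sum(col) / len(rows) for col in zip(*rows)] for c, rows in groups.items()}

def predict_proba(centroids, x):
    """Softmax over negative squared distances to each class centroid."""
    classes = sorted(centroids)
    logits = [-sum((a - b) ** 2 for a, b in zip(x, centroids[c])) for c in classes]
    top = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(l - top) for l in logits]
    total = sum(weights)
    return classes, [w / total for w in weights]

def self_train(X_lab, y_lab, X_unlab, threshold=0.9, max_rounds=5):
    """Repeatedly add confident pseudo-labels to the training set and refit."""
    X_lab, y_lab, pool = list(X_lab), list(y_lab), list(X_unlab)
    for _ in range(max_rounds):
        centroids = fit_centroids(X_lab, y_lab)
        remaining = []
        for x in pool:
            classes, proba = predict_proba(centroids, x)
            best = max(range(len(proba)), key=proba.__getitem__)
            if proba[best] >= threshold:
                # Confident prediction: treat it as a label in later rounds.
                X_lab.append(x)
                y_lab.append(classes[best])
            else:
                remaining.append(x)
        if len(remaining) == len(pool):
            break  # no new pseudo-labels were accepted in this round
        pool = remaining
    return fit_centroids(X_lab, y_lab), pool
```

Because the loop only needs a fit step and a probability output, the stand-in classifier could be swapped for a gradient boosting decision tree without changing the procedure. The confidence check is where a regularized score would replace the raw probability.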

3D Dual-Fusion: Dual-Domain Dual-Query Camera-LiDAR Fusion for 3D Object Detection

Nov 24, 2022

Abstract: Fusing data from cameras and LiDAR sensors is an essential technique to achieve robust 3D object detection. One key challenge in camera-LiDAR fusion involves mitigating the large domain gap between the two sensors in terms of coordinates and data distribution when fusing their features. In this paper, we propose a novel camera-LiDAR fusion architecture called 3D Dual-Fusion, which is designed to mitigate the gap between the feature representations of camera and LiDAR data. The proposed method fuses the features of the camera-view and 3D voxel-view domains and models their interactions through deformable attention. We redesign the transformer fusion encoder to aggregate the information from the two domains. Two major changes include 1) dual query-based deformable attention to fuse the dual-domain features interactively and 2) 3D local self-attention to encode the voxel-domain queries prior to dual-query decoding. The results of an experimental evaluation show that the proposed camera-LiDAR fusion architecture achieved competitive performance on the KITTI and nuScenes datasets, with state-of-the-art performance in some 3D object detection benchmark categories.
