Minhong Zhu

Multiplex Graph Contrastive Learning with Soft Negatives

Sep 12, 2024

Abstract: Graph Contrastive Learning (GCL) seeks to learn nodal or graph representations that contain maximal consistent information from graph-structured data. While node-level contrasting modes dominate, some efforts have begun to explore consistency across different scales. Yet, they tend to lose consistent information and to be contaminated by disturbing features. Here, we introduce MUX-GCL, a novel cross-scale contrastive learning paradigm that utilizes multiplex representations as effective patches. While this learning mode minimizes contaminating noise, a commensurate contrasting strategy using positional affinities further avoids information loss by correcting false negative pairs across scales. Extensive downstream experiments demonstrate that MUX-GCL yields multiple state-of-the-art results on public datasets. Our theoretical analysis further guarantees that the new objective function is a stricter lower bound of the mutual information between raw input features and output embeddings, which rationalizes this paradigm. Code is available at https://github.com/MUX-GCL/Code.
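The idea of correcting false negatives with affinities can be illustrated with a small sketch. It is not the MUX-GCL objective: the abstract does not specify the loss, so the code below assumes a plain InfoNCE-style loss in which each negative pair is down-weighted by a hypothetical `affinity` score in [0, 1]. The function name, the `affinity` input, and the temperature `tau` are all assumptions made for illustration.

```python
import math

def soft_negative_infonce(sim, affinity, tau=0.5):
    """InfoNCE-style loss with down-weighted ("soft") negatives.

    sim[i][j]      : similarity between anchor i and candidate j;
                     the positive for anchor i is candidate i.
    affinity[i][j] : hypothetical score in [0, 1]; higher means j is more
                     likely a false negative for i (assumed input).
    """
    n = len(sim)
    loss = 0.0
    for i in range(n):
        pos = math.exp(sim[i][i] / tau)
        # Each negative contributes in proportion to (1 - affinity).
        neg = sum(
            (1.0 - affinity[i][j]) * math.exp(sim[i][j] / tau)
            for j in range(n)
            if j != i
        )
        loss += -math.log(pos / (pos + neg))
    return loss / n

sim = [[0.9, 0.8, 0.1],
       [0.8, 0.9, 0.2],
       [0.1, 0.2, 0.9]]
hard = [[0.0] * 3 for _ in range(3)]   # every other node is a full negative
soft = [[0.0, 0.7, 0.0],
        [0.7, 0.0, 0.0],
        [0.0, 0.0, 0.0]]               # nodes 0 and 1 are probably related

hard_loss = soft_negative_infonce(sim, hard)
soft_loss = soft_negative_infonce(sim, soft)
```

With all affinities set to zero the function reduces to the usual hard-negative loss, so in this sketch the soft weighting can only lower the penalty on pairs that are likely false negatives.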

Boosting MLPs with a Coarsening Strategy for Long-Term Time Series Forecasting

May 06, 2024

Abstract: Deep learning methods have shown their strengths in long-term time series forecasting. However, they often struggle to strike a balance between expressive power and computational efficiency. Here, we propose the Coarsened Perceptron Network (CP-Net), a novel architecture that efficiently enhances the predictive capability of MLPs while maintaining linear computational complexity. It uses a coarsening strategy as the backbone, built on two-stage convolution-based sampling blocks. Based purely on convolution, these blocks extract short-term semantic and contextual patterns, a capability that is relatively deficient in the global point-wise projection of the MLP layer. With its architectural simplicity and low runtime, CP-Net achieves an improvement of 4.1% over the SOTA method in our experiments on seven time series forecasting benchmarks. The model further shows effective utilization of the exposed information, with consistent improvement as the look-back window expands.
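As a rough illustration of what convolution-based coarsening means, the sketch below downsamples a series with a strided 1D convolution applied in two stages. It is not the CP-Net block: the fixed smoothing kernel, the stride, and the function names are assumptions, and a real model would learn the kernel weights.

```python
def strided_conv1d(series, kernel, stride):
    """Valid 1D convolution with a stride: one output per kernel window."""
    k = len(kernel)
    return [
        sum(w * series[start + t] for t, w in enumerate(kernel))
        for start in range(0, len(series) - k + 1, stride)
    ]

def coarsen(series, kernel=(0.25, 0.5, 0.25), stride=2, stages=2):
    """Apply the strided convolution repeatedly (two stages by default).

    Each stage mixes neighbouring points (local pattern extraction) and
    roughly halves the length, so the total cost stays linear in the
    input length. The kernel here is fixed only for illustration.
    """
    out = list(series)
    for _ in range(stages):
        out = strided_conv1d(out, kernel, stride)
    return out

look_back = [float(t) for t in range(16)]   # toy look-back window
coarse = coarsen(look_back)                 # 16 -> 7 -> 3 points
```

The coarsened sequence could then be fed to an MLP layer, which would operate on far fewer points than the raw look-back window.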

Hybrid Focal and Full-Range Attention Based Graph Transformers

Nov 08, 2023

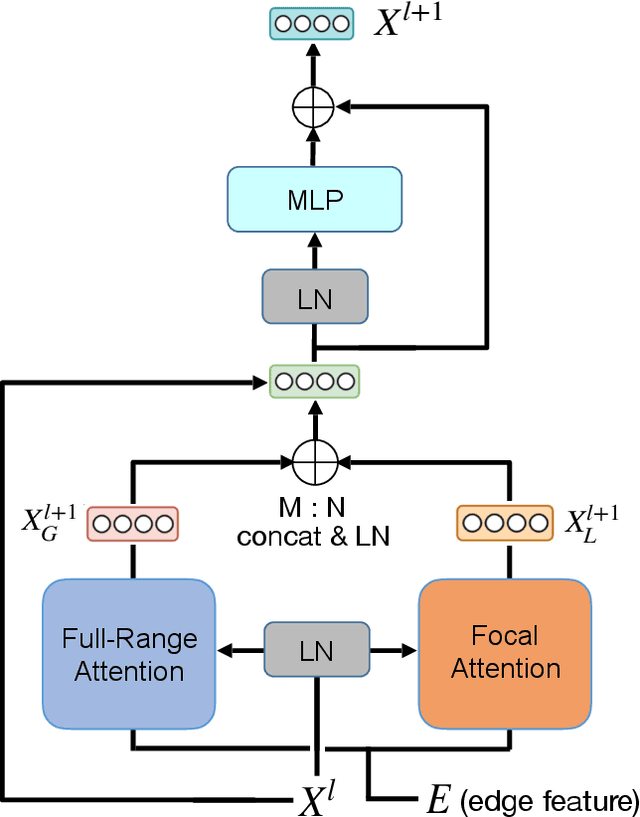

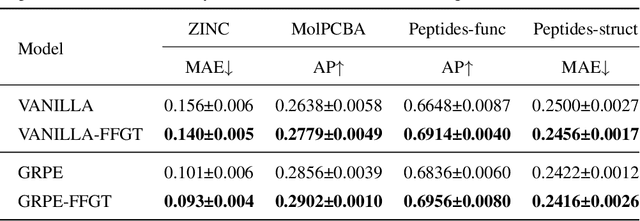

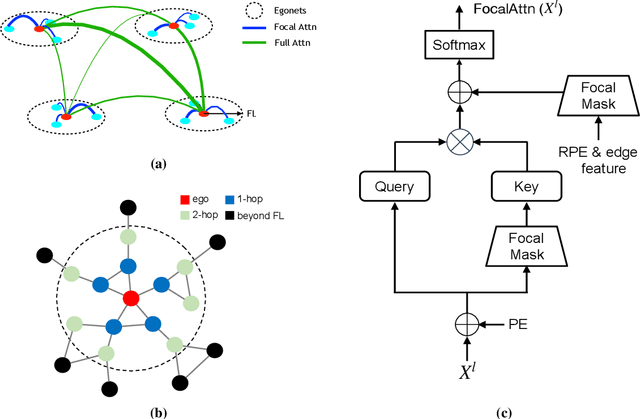

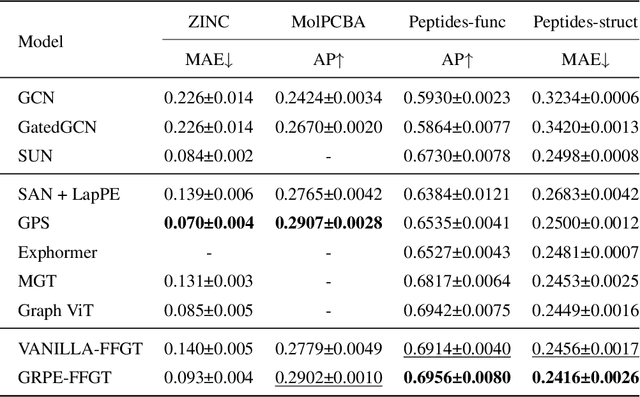

Abstract: The paradigm of Transformers using the self-attention mechanism has demonstrated its advantage in learning graph-structured data. Yet, although Graph Transformers are capable of modeling full-range dependencies, they are often deficient in extracting local information. A common practice is to use Message Passing Neural Networks (MPNNs) as an auxiliary to capture local information, but these are still inadequate for comprehending substructures. In this paper, we present a purely attention-based architecture, namely the Focal and Full-Range Graph Transformer (FFGT), which can mitigate the loss of local information in learning global correlations. The core component of FFGT is a new mechanism of compound attention, which combines the conventional full-range attention with K-hop focal attention on ego-nets to aggregate both global and local information. Beyond the scope of canonical Transformers, FFGT has the merit of being more substructure-aware. Our approach enhances the performance of existing Graph Transformers on various open datasets, while achieving comparable SOTA performance on several Long-Range Graph Benchmark (LRGB) datasets even with a vanilla transformer. We further examine the factors that influence the optimal focal length of attention by introducing a novel synthetic dataset based on SBM-PATTERN.
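The combination of full-range and K-hop focal attention can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the FFGT implementation: it uses unparameterized dot-product scores (no learned query, key, or value projections), a single head, and simply averages the two attention outputs. The function names and the adjacency-list input are hypothetical.

```python
import math
from collections import deque

def k_hop_mask(adj, k):
    """mask[i][j] is True when node j lies within k hops of node i (i included)."""
    n = len(adj)
    mask = [[False] * n for _ in range(n)]
    for src in range(n):
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            if dist[u] == k:          # do not expand beyond k hops
                continue
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        for j in dist:
            mask[src][j] = True
    return mask

def masked_softmax(scores, allowed):
    """Softmax over the positions where allowed[j] is True; zero elsewhere."""
    top = max(s for s, a in zip(scores, allowed) if a)
    exps = [math.exp(s - top) if a else 0.0 for s, a in zip(scores, allowed)]
    total = sum(exps)
    return [e / total for e in exps]

def compound_attention(x, adj, k):
    """Average of full-range attention and focal attention on k-hop ego-nets."""
    n, d = len(x), len(x[0])
    focal = k_hop_mask(adj, k)
    everyone = [True] * n
    out = []
    for i in range(n):
        scores = [
            sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d)
            for j in range(n)
        ]
        w_full = masked_softmax(scores, everyone)    # global context
        w_focal = masked_softmax(scores, focal[i])   # local ego-net only
        out.append([
            sum(0.5 * (w_full[j] + w_focal[j]) * x[j][c] for j in range(n))
            for c in range(d)
        ])
    return out

# Path graph 0 - 1 - 2 - 3 as an adjacency list, with toy 2-d node features.
adj = [[1], [0, 2], [1, 3], [2]]
x = [[1.0, float(i)] for i in range(4)]
out = compound_attention(x, adj, k=1)
```

In this sketch the focal length `k` controls how much of the graph the local branch sees; once `k` reaches the graph diameter the focal branch coincides with the full-range branch.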