Mingbin Feng

Towards Effective Open-set Graph Class-incremental Learning

Jul 23, 2025Abstract:Graph class-incremental learning (GCIL) allows graph neural networks (GNNs) to adapt to evolving graph analytical tasks by incrementally learning new class knowledge while retaining knowledge of old classes. Existing GCIL methods primarily focus on a closed-set assumption, where all test samples are presumed to belong to previously known classes. Such an assumption restricts their applicability in real-world scenarios, where unknown classes naturally emerge during inference, and are absent during training. In this paper, we explore a more challenging open-set graph class-incremental learning scenario with two intertwined challenges: catastrophic forgetting of old classes, which impairs the detection of unknown classes, and inadequate open-set recognition, which destabilizes the retention of learned knowledge. To address the above problems, a novel OGCIL framework is proposed, which utilizes pseudo-sample embedding generation to effectively mitigate catastrophic forgetting and enable robust detection of unknown classes. To be specific, a prototypical conditional variational autoencoder is designed to synthesize node embeddings for old classes, enabling knowledge replay without storing raw graph data. To handle unknown classes, we employ a mixing-based strategy to generate out-of-distribution (OOD) samples from pseudo in-distribution and current node embeddings. A novel prototypical hypersphere classification loss is further proposed, which anchors in-distribution embeddings to their respective class prototypes, while repelling OOD embeddings away. Instead of assigning all unknown samples into one cluster, our proposed objective function explicitly models them as outliers through prototype-aware rejection regions, ensuring a robust open-set recognition. Extensive experiments on five benchmarks demonstrate the effectiveness of OGCIL over existing GCIL and open-set GNN methods.

Semi-supervised Anomaly Detection with Extremely Limited Labels in Dynamic Graphs

Jan 25, 2025Abstract:Semi-supervised graph anomaly detection (GAD) has recently received increasing attention, which aims to distinguish anomalous patterns from graphs under the guidance of a moderate amount of labeled data and a large volume of unlabeled data. Although these proposed semi-supervised GAD methods have achieved great success, their superior performance will be seriously degraded when the provided labels are extremely limited due to some unpredictable factors. Besides, the existing methods primarily focus on anomaly detection in static graphs, and little effort was paid to consider the continuous evolution characteristic of graphs over time (dynamic graphs). To address these challenges, we propose a novel GAD framework (EL$^{2}$-DGAD) to tackle anomaly detection problem in dynamic graphs with extremely limited labels. Specifically, a transformer-based graph encoder model is designed to more effectively preserve evolving graph structures beyond the local neighborhood. Then, we incorporate an ego-context hypersphere classification loss to classify temporal interactions according to their structure and temporal neighborhoods while ensuring the normal samples are mapped compactly against anomalous data. Finally, the above loss is further augmented with an ego-context contrasting module which utilizes unlabeled data to enhance model generalization. Extensive experiments on four datasets and three label rates demonstrate the effectiveness of the proposed method in comparison to the existing GAD methods.

Towards Cross-domain Few-shot Graph Anomaly Detection

Oct 11, 2024

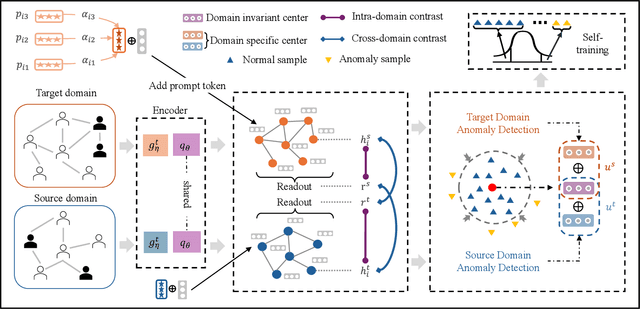

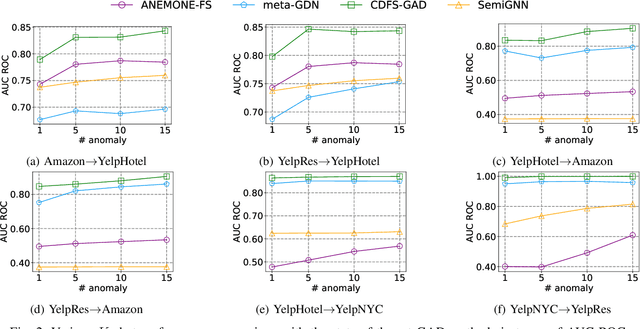

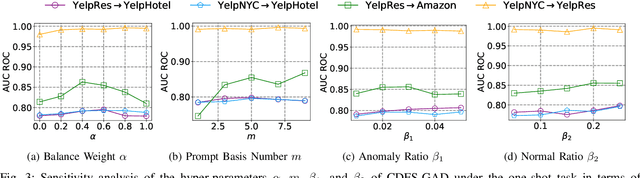

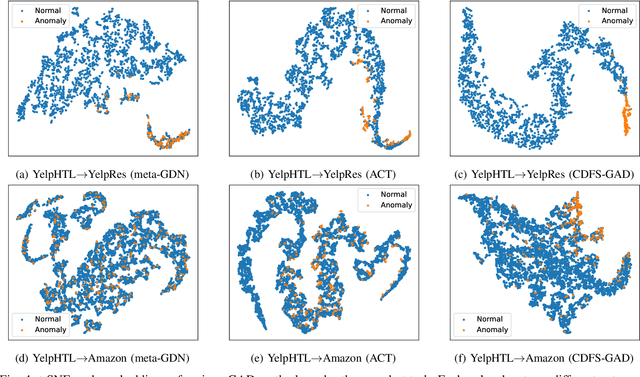

Abstract:Few-shot graph anomaly detection (GAD) has recently garnered increasing attention, which aims to discern anomalous patterns among abundant unlabeled test nodes under the guidance of a limited number of labeled training nodes. Existing few-shot GAD approaches typically adopt meta-training methods trained on richly labeled auxiliary networks to facilitate rapid adaptation to target networks that possess sparse labels. However, these proposed methods often assume that the auxiliary and target networks exist in the same data distributions-an assumption rarely holds in practical settings. This paper explores a more prevalent and complex scenario of cross-domain few-shot GAD, where the goal is to identify anomalies within sparsely labeled target graphs using auxiliary graphs from a related, yet distinct domain. The challenge here is nontrivial owing to inherent data distribution discrepancies between the source and target domains, compounded by the uncertainties of sparse labeling in the target domain. In this paper, we propose a simple and effective framework, termed CDFS-GAD, specifically designed to tackle the aforementioned challenges. CDFS-GAD first introduces a domain-adaptive graph contrastive learning module, which is aimed at enhancing cross-domain feature alignment. Then, a prompt tuning module is further designed to extract domain-specific features tailored to each domain. Moreover, a domain-adaptive hypersphere classification loss is proposed to enhance the discrimination between normal and anomalous instances under minimal supervision, utilizing domain-sensitive norms. Lastly, a self-training strategy is introduced to further refine the predicted scores, enhancing its reliability in few-shot settings. Extensive experiments on twelve real-world cross-domain data pairs demonstrate the effectiveness of the proposed CDFS-GAD framework in comparison to various existing GAD methods.

Time-series Anomaly Detection via Contextual Discriminative Contrastive Learning

Apr 16, 2023Abstract:Detecting anomalies in temporal data is challenging due to anomalies being dependent on temporal dynamics. One-class classification methods are commonly used for anomaly detection tasks, but they have limitations when applied to temporal data. In particular, mapping all normal instances into a single hypersphere to capture their global characteristics can lead to poor performance in detecting context-based anomalies where the abnormality is defined with respect to local information. To address this limitation, we propose a novel approach inspired by the loss function of DeepSVDD. Instead of mapping all normal instances into a single hypersphere center, each normal instance is pulled toward a recent context window. However, this approach is prone to a representation collapse issue where the neural network that encodes a given instance and its context is optimized towards a constant encoder solution. To overcome this problem, we combine our approach with a deterministic contrastive loss from Neutral AD, a promising self-supervised learning anomaly detection approach. We provide a theoretical analysis to demonstrate that the incorporation of the deterministic contrastive loss can effectively prevent the occurrence of a constant encoder solution. Experimental results show superior performance of our model over various baselines and model variants on real-world industrial datasets.

Multivariate Time Series Anomaly Detection via Dynamic Graph Forecasting

Feb 04, 2023Abstract:Anomalies in univariate time series often refer to abnormal values and deviations from the temporal patterns from majority of historical observations. In multivariate time series, anomalies also refer to abnormal changes in the inter-series relationship, such as correlation, over time. Existing studies have been able to model such inter-series relationships through graph neural networks. However, most works settle on learning a static graph globally or within a context window to assist a time series forecasting task or a reconstruction task, whose objective is not tailored to explicitly detect the abnormal relationship. Some other works detect anomalies based on reconstructing or forecasting a list of inter-series graphs, which inadvertently weakens their power to capture temporal patterns within the data due to the discrete nature of graphs. In this study, we propose DyGraphAD, a multivariate time series anomaly detection framework based upon a list of dynamic inter-series graphs. The core idea is to detect anomalies based on the deviation of inter-series relationships and intra-series temporal patterns from normal to anomalous states, by leveraging the evolving nature of the graphs in order to assist a graph forecasting task and a time series forecasting task simultaneously. Our numerical experiments on real-world datasets demonstrate that DyGraphAD has superior performance than baseline anomaly detection approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge