Milan Markovic

Enhancing Strawberry Yield Forecasting with Backcasted IoT Sensor Data and Machine Learning

Apr 25, 2025

Abstract:Due to rapid population growth globally, digitally-enabled agricultural sectors are crucial for sustainable food production and making informed decisions about resource management for farmers and various stakeholders. The deployment of Internet of Things (IoT) technologies that collect real-time observations of various environmental (e.g., temperature, humidity, etc.) and operational factors (e.g., irrigation) influencing production is often seen as a critical step to enable additional novel downstream tasks, such as AI-based yield forecasting. However, since AI models require large amounts of data, this creates practical challenges in a real-world dynamic farm setting where IoT observations would need to be collected over a number of seasons. In this study, we deployed IoT sensors in strawberry production polytunnels for two growing seasons to collect environmental data, including water usage, external and internal temperature, external and internal humidity, soil moisture, soil temperature, and photosynthetically active radiation. The sensor observations were combined with manually provided yield records spanning a period of four seasons. To bridge the gap of missing IoT observations for two additional seasons, we propose an AI-based backcasting approach to generate synthetic sensor observations using historical weather data from a nearby weather station and the existing polytunnel observations. We built an AI-based yield forecasting model to evaluate our approach using the combination of real and synthetic observations. Our results demonstrated that incorporating synthetic data improved yield forecasting accuracy, with models incorporating synthetic data outperforming those trained only on historical yield, weather records, and real sensor data.
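The backcasting idea in this abstract can be illustrated with a minimal sketch. It assumes a plain least-squares line as a stand-in for the paper's AI-based model, which the abstract does not specify. The function names (`fit_linear`, `backcast`) and all temperature values are hypothetical and chosen only for illustration: a mapping from weather-station readings to polytunnel readings is fitted on the seasons where both exist, then applied to earlier station records to produce synthetic sensor values.

```python
from statistics import mean


def fit_linear(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def backcast(model, station_history):
    """Apply the fitted mapping to earlier station readings."""
    slope, intercept = model
    return [slope * t + intercept for t in station_history]


# Hypothetical overlapping period: station and polytunnel both observed.
station_temp = [10.0, 12.0, 15.0, 18.0, 20.0]
tunnel_temp = [14.0, 16.5, 19.0, 23.0, 25.0]
model = fit_linear(station_temp, tunnel_temp)

# Hypothetical earlier season: only station data exists.
earlier_station_temp = [8.0, 11.0, 16.0]
synthetic_tunnel_temp = backcast(model, earlier_station_temp)
print(synthetic_tunnel_temp)
```

In this sketch the synthetic values would then be joined with the real sensor observations and the four seasons of yield records before training a forecasting model.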

Pushing the Limits of Sparsity: A Bag of Tricks for Extreme Pruning

Nov 21, 2024

Abstract:Pruning of deep neural networks has been an effective technique for reducing model size while preserving most of the performance of dense networks, crucial for deploying models on memory and power-constrained devices. While recent sparse learning methods have shown promising performance up to moderate sparsity levels such as 95% and 98%, accuracy quickly deteriorates when pushing sparsities to extreme levels. Obtaining sparse networks at such extreme sparsity levels presents unique challenges, such as fragile gradient flow and heightened risk of layer collapse. In this work, we explore network performance beyond the commonly studied sparsities, and propose a collection of techniques that enable the continuous learning of networks without accuracy collapse even at extreme sparsities, including 99.90%, 99.95% and 99.99% on ResNet architectures. Our approach combines 1) Dynamic ReLU phasing, where DyReLU initially allows for richer parameter exploration before being gradually replaced by standard ReLU, 2) weight sharing which reuses parameters within a residual layer while maintaining the same number of learnable parameters, and 3) cyclic sparsity, where both sparsity levels and sparsity patterns evolve dynamically throughout training to better encourage parameter exploration. We evaluate our method, which we term Extreme Adaptive Sparse Training (EAST) at extreme sparsities using ResNet-34 and ResNet-50 on CIFAR-10, CIFAR-100, and ImageNet, achieving significant performance improvements over state-of-the-art methods we compared with.
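As a rough illustration of the cyclic sparsity component only, the sketch below pairs an oscillating sparsity level with magnitude-based masking. The cosine schedule, the function names, and the toy weights are assumptions made for this example. The abstract does not give EAST's actual schedule or pruning criterion, and DyReLU phasing and weight sharing are not shown.

```python
import math


def cyclic_sparsity(step, total_steps, low, high, cycles):
    """Sparsity level oscillating between low and high (assumed cosine schedule)."""
    phase = 2 * math.pi * cycles * step / total_steps
    return low + (high - low) * 0.5 * (1 - math.cos(phase))


def magnitude_mask(weights, sparsity):
    """Return a 0/1 mask that keeps the largest-magnitude weights."""
    n_keep = max(1, round(len(weights) * (1 - sparsity)))
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(ranked[:n_keep])
    return [1 if i in keep else 0 for i in range(len(weights))]


# Toy layer with hypothetical weights; the mask is recomputed as sparsity cycles.
weights = [0.1, -2.0, 0.5, 0.01]
for step in (0, 25, 50):
    s = cyclic_sparsity(step, total_steps=100, low=0.25, high=0.75, cycles=2)
    print(step, round(s, 2), magnitude_mask(weights, s))
```

Because the mask is recomputed whenever the sparsity level changes, both how many weights are active and which ones are active vary over training, which is the behaviour the abstract attributes to cyclic sparsity.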

Model Pruning Enables Localized and Efficient Federated Learning for Yield Forecasting and Data Sharing

Apr 19, 2023

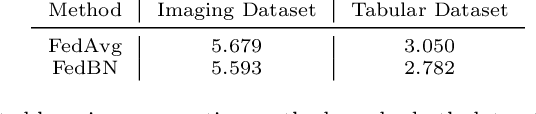

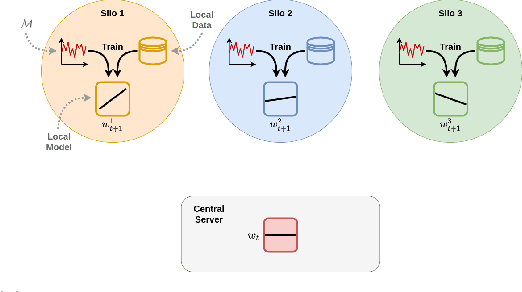

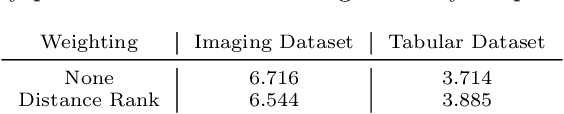

Abstract:Federated Learning (FL) presents a decentralized approach to model training in the agri-food sector and offers the potential for improved machine learning performance, while ensuring the safety and privacy of individual farms or data silos. However, the conventional FL approach has two major limitations. First, the heterogeneous data on individual silos can cause the global model to perform well for some clients but not all, as the update direction on some clients may hinder others after they are aggregated. Second, it is inefficient in terms of communication costs during FL and model size. This paper proposes a new technical solution that utilizes network pruning on client models and aggregates the pruned models. This method enables local models to be tailored to their respective data distribution and mitigates the data heterogeneity present in agri-food data. Moreover, it allows for more compact models that consume less data during transmission. We experiment with a soybean yield forecasting dataset and find that this approach can improve inference performance by 15.5% to 20% compared to FedAvg, while reducing local model sizes by up to 84% and the data volume communicated between the clients and the server by 57.1% to 64.7%.
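One way to picture aggregating pruned client models is the sketch below. It assumes each client sends a flat weight list plus a 0/1 mask, and that the server averages each parameter only over the clients that kept it. This aggregation rule, the function names, and the numbers are assumptions for illustration. The abstract does not state the paper's exact rule.

```python
def aggregate_pruned(client_weights, client_masks):
    """Average each parameter over the clients whose mask retains it."""
    n_params = len(client_weights[0])
    aggregated = []
    for j in range(n_params):
        kept = [w[j] for w, m in zip(client_weights, client_masks) if m[j]]
        # A parameter pruned by every client stays at zero.
        aggregated.append(sum(kept) / len(kept) if kept else 0.0)
    return aggregated


def upload_fraction(mask):
    """Share of parameters a client transmits if only unpruned ones are sent."""
    return sum(mask) / len(mask)


# Two hypothetical clients with different pruning masks.
weights = [[1.0, 2.0, 0.0], [3.0, 0.0, 0.0]]
masks = [[1, 1, 0], [1, 0, 0]]
print(aggregate_pruned(weights, masks))
print([upload_fraction(m) for m in masks])
```

Each client keeps its own mask, so local models can differ in structure, and only unpruned parameters need to be transmitted in this sketch.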

The Role of Cross-Silo Federated Learning in Facilitating Data Sharing in the Agri-Food Sector

Apr 14, 2021

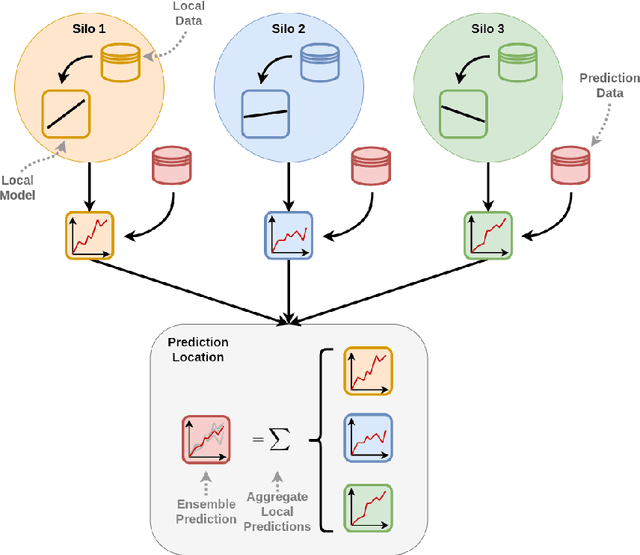

Abstract:Data sharing remains a major hindering factor when it comes to adopting emerging AI technologies in general, but particularly in the agri-food sector. Protectiveness of data is natural in this setting; data is a precious commodity for data owners, which if used properly can provide them with useful insights on operations and processes leading to a competitive advantage. Unfortunately, novel AI technologies often require large amounts of training data in order to perform well, something that in many scenarios is unrealistic. However, recent machine learning advances, e.g. federated learning and privacy-preserving technologies, can offer a solution to this issue by providing the infrastructure and underpinning technologies needed to use data from various sources to train models without ever sharing the raw data themselves. In this paper, we propose a technical solution based on federated learning that uses decentralized data (i.e., data that are not exchanged or shared but remain with the owners) to develop a cross-silo machine learning model that facilitates data sharing across supply chains. We focus our data sharing proposition on improving production optimization through soybean yield prediction, and provide potential use-cases that such methods can assist in other problem settings. Our results demonstrate that our approach not only performs better than each of the models trained on an individual data source, but also that data sharing in the agri-food sector can be enabled via alternatives to data exchange, whilst also helping to adopt emerging machine learning technologies to boost productivity.
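The core of cross-silo federated learning, combining models without moving raw data, can be sketched as a sample-weighted average of client parameters in the style of FedAvg. The abstract does not name its aggregation method, so this choice is an assumption. The function name, weight vectors, and silo sizes are hypothetical.

```python
def fed_avg(client_weights, client_sizes):
    """Sample-weighted average of client parameter vectors (FedAvg-style)."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(n_params)
    ]


# Two hypothetical silos: only trained weights and sample counts reach the
# server; the underlying records never leave their owners.
silo_weights = [[1.0, 0.0], [3.0, 4.0]]
silo_sizes = [1, 3]
global_weights = fed_avg(silo_weights, silo_sizes)
print(global_weights)
```

In a full training loop the server would send `global_weights` back to each silo for another round of local training, repeating until the model converges.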
