Miguel Jaques

Vision-based system identification and 3D keypoint discovery using dynamics constraints

Sep 13, 2021

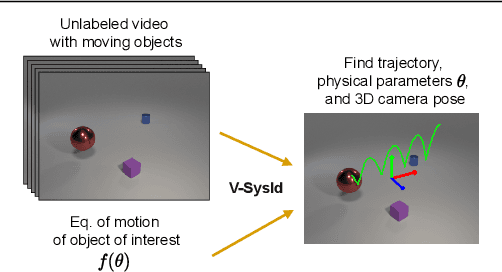

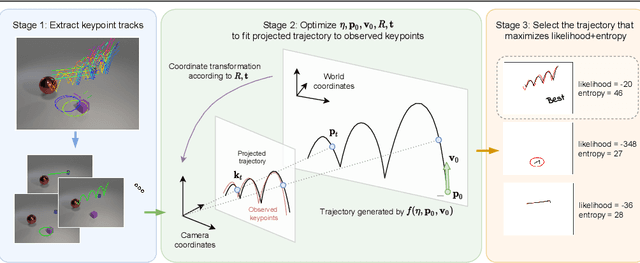

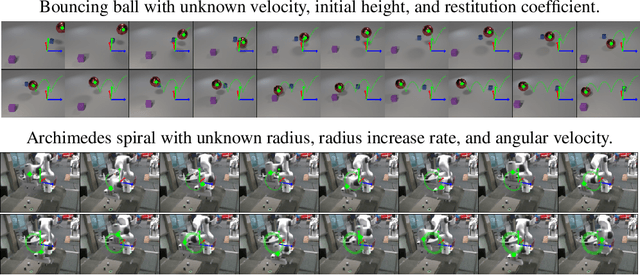

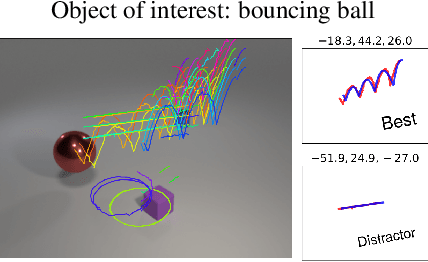

Abstract: This paper introduces V-SysId, a novel method that enables simultaneous keypoint discovery, 3D system identification, and extrinsic camera calibration from an unlabeled video taken from a static camera, using only the family of equations of motion of the object of interest as weak supervision. V-SysId takes keypoint trajectory proposals and alternates between maximum likelihood parameter estimation and extrinsic camera calibration, before applying a suitable selection criterion to identify the track of interest. This is then used to train a keypoint tracking model using supervised learning. Results on a range of settings (robotics, physics, physiology) highlight the utility of this approach.

NewtonianVAE: Proportional Control and Goal Identification from Pixels via Physical Latent Spaces

Jun 02, 2020

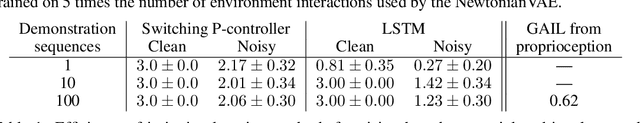

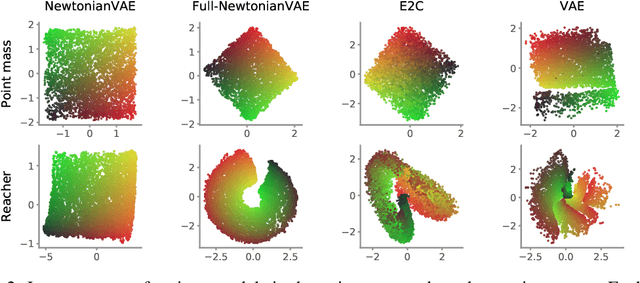

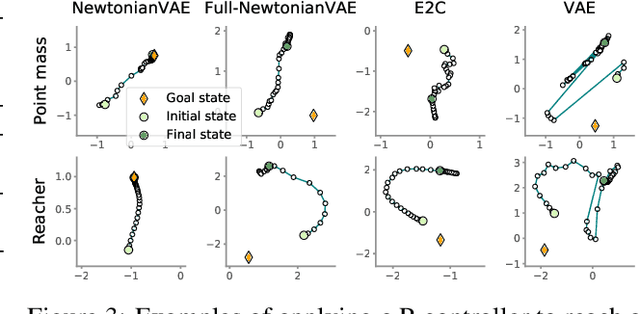

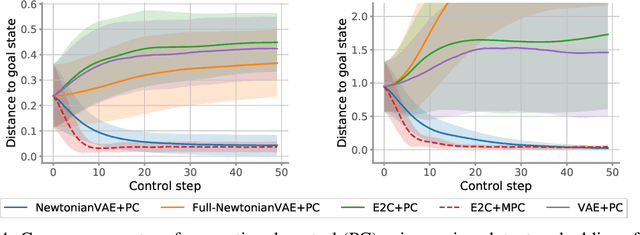

Abstract: Learning low-dimensional latent state space dynamics models has been a powerful paradigm for enabling vision-based planning and learning for control. We introduce a latent dynamics learning framework that is uniquely designed to induce proportional controllability in the latent space, thus enabling the use of much simpler controllers than prior work. We show that our learned dynamics model enables proportional control from pixels, dramatically simplifies and accelerates behavioural cloning of vision-based controllers, and provides interpretable goal discovery when applied to imitation learning of switching controllers from demonstration.
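To make the term "proportional control" concrete, here is a minimal sketch of a proportional controller acting on a low-dimensional state. It is not the NewtonianVAE model: the function names, the gain value, and the toy first-order dynamics `x_next = x + dt * u` are all assumptions chosen for illustration, standing in for a learned latent space in which such a controller would be applied.

```python
import numpy as np


def proportional_control(x, x_goal, gain=0.5):
    """Return the action u = gain * (x_goal - x)."""
    x = np.asarray(x, dtype=float)
    x_goal = np.asarray(x_goal, dtype=float)
    return gain * (x_goal - x)


def simulate(x0, x_goal, gain=0.5, dt=1.0, steps=50):
    """Roll out the controller on assumed toy dynamics x_next = x + dt * u."""
    x = np.asarray(x0, dtype=float)
    for _ in range(steps):
        u = proportional_control(x, x_goal, gain)
        x = x + dt * u  # hypothetical single-integrator dynamics
    return x


if __name__ == "__main__":
    final_state = simulate(x0=[0.0, 0.0], x_goal=[1.0, -2.0])
    print(final_state)
```

With `gain * dt` between 0 and 1, the distance to the goal shrinks by a constant factor at every step, which is why a controller this simple suffices when the state space behaves like the toy dynamics assumed here.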

Physics-as-Inverse-Graphics: Joint Unsupervised Learning of Objects and Physics from Video

May 27, 2019

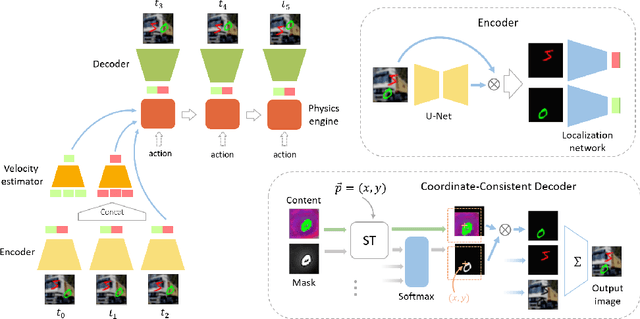

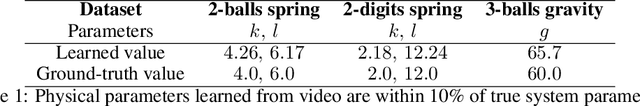

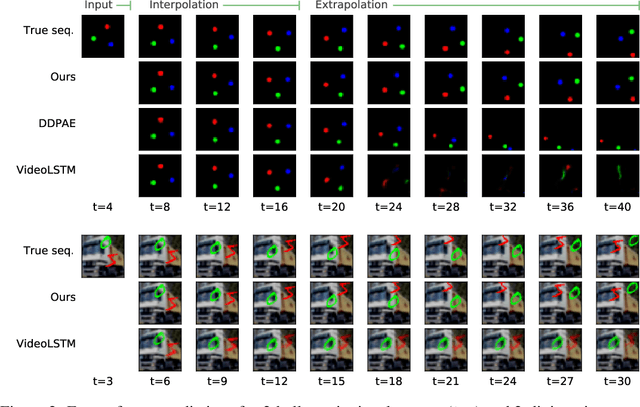

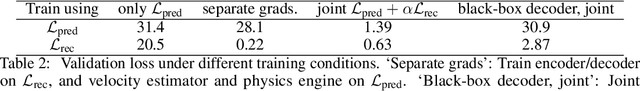

Abstract: We aim to perform unsupervised discovery of objects and their states, such as location and velocity, as well as physical system parameters, such as mass and gravity, from video, given only the differential equations governing the scene dynamics. Existing physical scene understanding methods either require object state supervision or do not integrate with differentiable physics to learn interpretable system parameters and states. We address this problem through a *physics-as-inverse-graphics* approach that brings together vision-as-inverse-graphics and differentiable physics engines. This framework allows us to perform long-term extrapolative video prediction, as well as vision-based model-predictive control. Our approach significantly outperforms related unsupervised methods in long-term future frame prediction of systems with interacting objects (such as ball-spring or 3-body gravitational systems). We further show the value of this tight vision-physics integration by demonstrating data-efficient learning of vision-actuated model-based control for a pendulum system. The controller's interpretability also provides unique capabilities in goal-driven control and physical reasoning for zero-data adaptation.

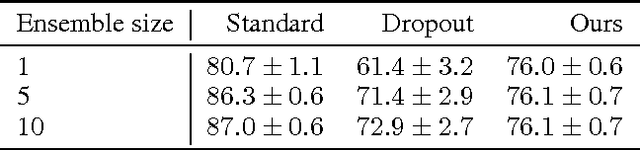

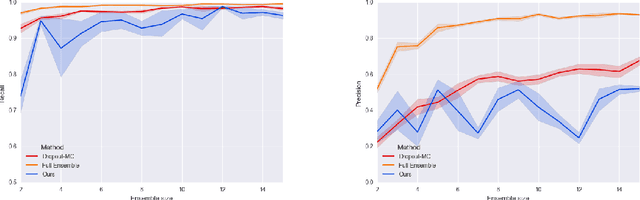

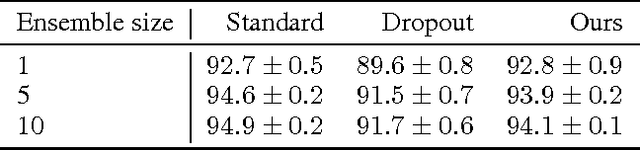

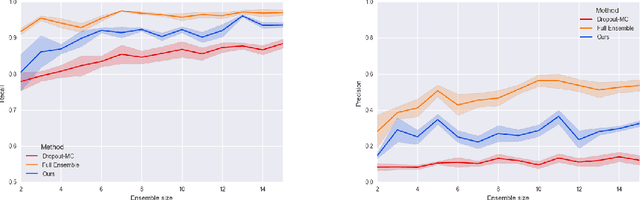

Efficient variational Bayesian neural network ensembles for outlier detection

Apr 22, 2017

Abstract: In this work we perform outlier detection using ensembles of neural networks obtained by variational approximation of the posterior in a Bayesian neural network setting. The variational parameters are obtained by sampling from the true posterior by gradient descent. We show our outlier detection results are comparable to those obtained using other efficient ensembling methods.
