Physics-as-Inverse-Graphics: Joint Unsupervised Learning of Objects and Physics from Video

May 27, 2019

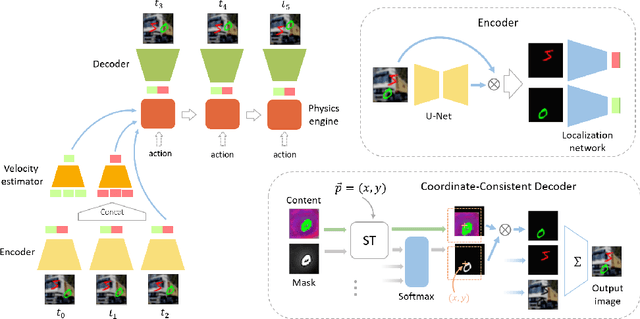

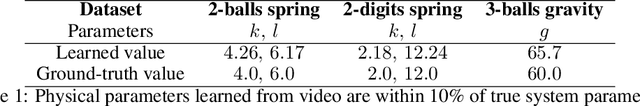

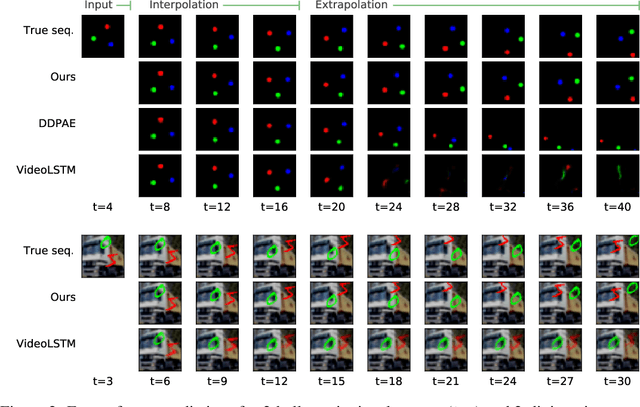

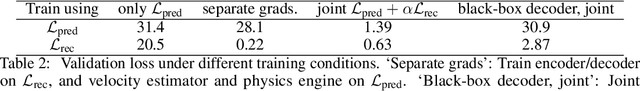

We aim to perform unsupervised discovery of objects and their states, such as location and velocity, as well as physical system parameters, such as mass and gravity, from video, given only the differential equations governing the scene dynamics. Existing physical scene understanding methods either require object state supervision or do not integrate with differentiable physics to learn interpretable system parameters and states. We address this problem through a *physics-as-inverse-graphics* approach that brings together vision-as-inverse-graphics and differentiable physics engines. This framework allows us to perform long-term extrapolative video prediction, as well as vision-based model-predictive control. Our approach significantly outperforms related unsupervised methods in long-term future frame prediction of systems with interacting objects (such as ball-spring or 3-body gravitational systems). We further show the value of this tight vision-physics integration by demonstrating data-efficient learning of vision-actuated model-based control for a pendulum system. The controller's interpretability also provides unique capabilities in goal-driven control and physical reasoning for zero-data adaptation.
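To make the idea of learning an interpretable physical parameter through a differentiable simulator concrete, here is a minimal sketch. It is not the paper's model: there is no vision component, the system is a hypothetical falling point mass, and the function names `simulate` and `fit_gravity` are illustrative assumptions. It only shows how a parameter such as gravity can be recovered by differentiating through an integrator of known dynamics and descending the trajectory error.

```python
# Illustrative sketch only: a toy 1-D system, not the architecture from the paper.
# The dynamics (dv/dt = -g, dy/dt = v) are assumed known; only g is learned.

def simulate(g, y0=0.0, v0=0.0, dt=0.05, steps=40):
    """Roll out a falling point mass with semi-implicit Euler steps.

    Returns the positions and the sensitivity d(position)/dg at each step,
    obtained by differentiating every update with respect to g.
    """
    y, v = y0, v0
    dy_dg, dv_dg = 0.0, 0.0
    positions, sensitivities = [], []
    for _ in range(steps):
        v = v - g * dt                 # velocity update
        dv_dg = dv_dg - dt             # derivative of the velocity update w.r.t. g
        y = y + v * dt                 # position update
        dy_dg = dy_dg + dv_dg * dt     # derivative of the position update w.r.t. g
        positions.append(y)
        sensitivities.append(dy_dg)
    return positions, sensitivities


def fit_gravity(observed, g_init=1.0, lr=0.5, iters=100):
    """Estimate g by gradient descent on the mean squared trajectory error."""
    g = g_init
    n = len(observed)
    for _ in range(iters):
        positions, sensitivities = simulate(g, steps=n)
        # Chain rule: dL/dg = mean(2 * (y_t - obs_t) * dy_t/dg)
        grad = sum(
            2.0 * (y - o) * s
            for y, o, s in zip(positions, observed, sensitivities)
        ) / n
        g -= lr * grad
    return g


TRUE_G = 9.81
observed, _ = simulate(TRUE_G)   # stand-in for object positions extracted from video
g_est = fit_gravity(observed)
print(f"estimated g = {g_est:.4f}")
```

In this toy setting the observed positions are given directly. In the approach described above, they would instead have to be inferred from video frames, which is the part this sketch leaves out.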
