Michele Salvi

Fractal and Regular Geometry of Deep Neural Networks

Apr 08, 2025

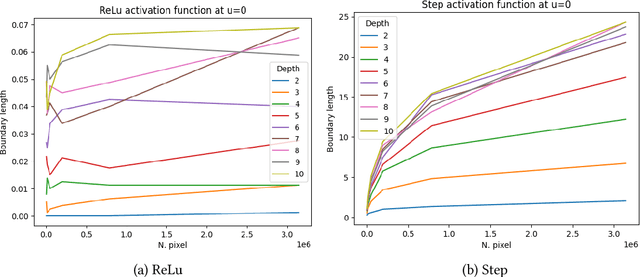

Abstract:We study the geometric properties of random neural networks by investigating the boundary volumes of their excursion sets for different activation functions, as the depth increases. More specifically, we show that, for activations which are not very regular (e.g., the Heaviside step function), the boundary volumes exhibit fractal behavior, with their Hausdorff dimension monotonically increasing with the depth. On the other hand, for activations which are more regular (e.g., ReLU, logistic and $\tanh$), as the depth increases, the expected boundary volumes can either converge to zero, remain constant or diverge exponentially, depending on a single spectral parameter which can be easily computed. Our theoretical results are confirmed in some numerical experiments based on Monte Carlo simulations.

A Systematization of the Wagner Framework: Graph Theory Conjectures and Reinforcement Learning

Jun 18, 2024Abstract:In 2021, Adam Zsolt Wagner proposed an approach to disprove conjectures in graph theory using Reinforcement Learning (RL). Wagner's idea can be framed as follows: consider a conjecture, such as a certain quantity f(G) < 0 for every graph G; one can then play a single-player graph-building game, where at each turn the player decides whether to add an edge or not. The game ends when all edges have been considered, resulting in a certain graph G_T, and f(G_T) is the final score of the game; RL is then used to maximize this score. This brilliant idea is as simple as innovative, and it lends itself to systematic generalization. Several different single-player graph-building games can be employed, along with various RL algorithms. Moreover, RL maximizes the cumulative reward, allowing for step-by-step rewards instead of a single final score, provided the final cumulative reward represents the quantity of interest f(G_T). In this paper, we discuss these and various other choices that can be significant in Wagner's framework. As a contribution to this systematization, we present four distinct single-player graph-building games. Each game employs both a step-by-step reward system and a single final score. We also propose a principled approach to select the most suitable neural network architecture for any given conjecture, and introduce a new dataset of graphs labeled with their Laplacian spectra. Furthermore, we provide a counterexample for a conjecture regarding the sum of the matching number and the spectral radius, which is simpler than the example provided in Wagner's original paper. The games have been implemented as environments in the Gymnasium framework, and along with the dataset, are available as open-source supplementary materials.

Spectral complexity of deep neural networks

May 15, 2024

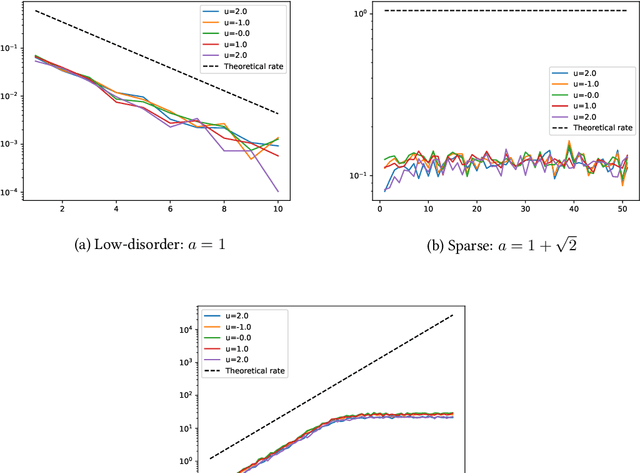

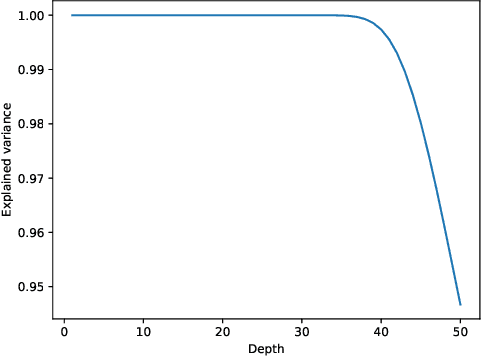

Abstract:It is well-known that randomly initialized, push-forward, fully-connected neural networks weakly converge to isotropic Gaussian processes, in the limit where the width of all layers goes to infinity. In this paper, we propose to use the angular power spectrum of the limiting field to characterize the complexity of the network architecture. In particular, we define sequences of random variables associated with the angular power spectrum, and provide a full characterization of the network complexity in terms of the asymptotic distribution of these sequences as the depth diverges. On this basis, we classify neural networks as low-disorder, sparse, or high-disorder; we show how this classification highlights a number of distinct features for standard activation functions, and in particular, sparsity properties of ReLU networks. Our theoretical results are also validated by numerical simulations.

A Quantitative Functional Central Limit Theorem for Shallow Neural Networks

Jul 05, 2023

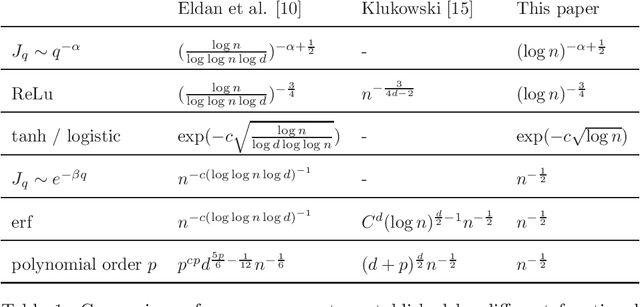

Abstract:We prove a Quantitative Functional Central Limit Theorem for one-hidden-layer neural networks with generic activation function. The rates of convergence that we establish depend heavily on the smoothness of the activation function, and they range from logarithmic in non-differentiable cases such as the Relu to $\sqrt{n}$ for very regular activations. Our main tools are functional versions of the Stein-Malliavin approach; in particular, we exploit heavily a quantitative functional central limit theorem which has been recently established by Bourguin and Campese (2020).

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge