Silvia Giulia Galfrè

A Machine Learning Pipeline for Multiple Sclerosis Biomarker Discovery: Comparing explainable AI and Traditional Statistical Approaches

Sep 26, 2025

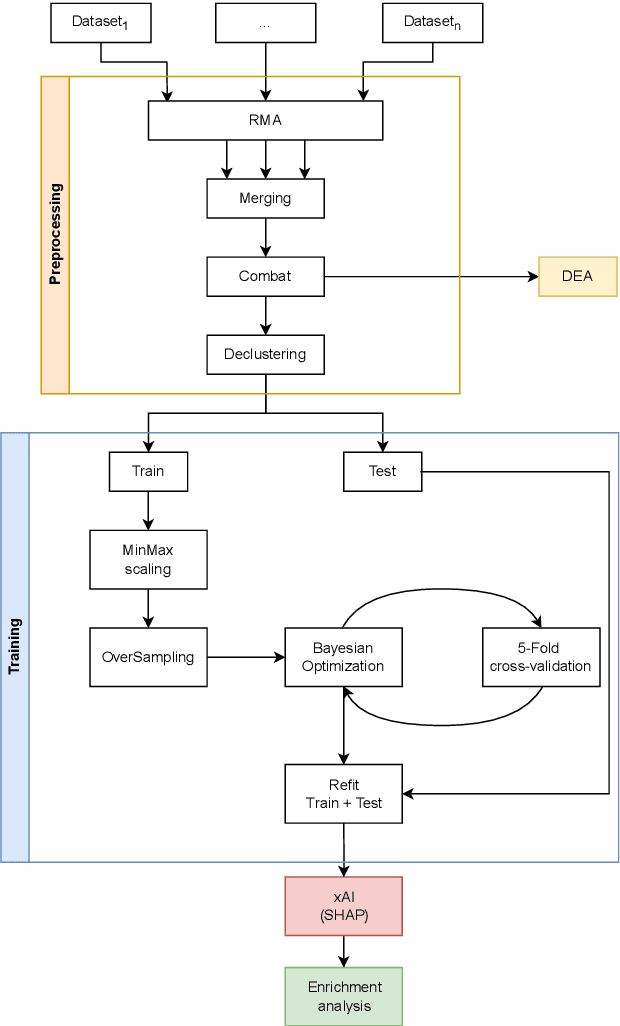

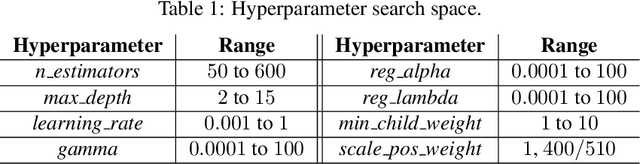

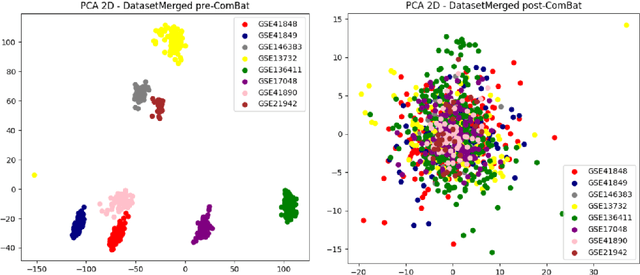

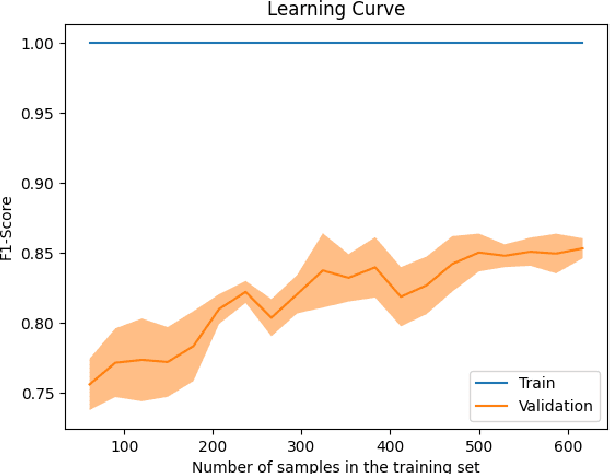

Abstract: We present a machine learning pipeline for biomarker discovery in Multiple Sclerosis (MS), integrating eight publicly available microarray datasets from Peripheral Blood Mononuclear Cells (PBMC). After robust preprocessing, we trained an XGBoost classifier optimized via Bayesian search. SHapley Additive exPlanations (SHAP) were used to identify the features that contribute most to the model's predictions, thereby pointing to candidate biomarkers. These were compared with genes identified through classical Differential Expression Analysis (DEA). The comparison revealed both overlapping and unique biomarkers between SHAP and DEA, suggesting that the two approaches have complementary strengths. Enrichment analysis confirmed the biological relevance of SHAP-selected genes, linking them to pathways such as sphingolipid signaling, Th1/Th2/Th17 cell differentiation, and Epstein-Barr virus infection, all known to be associated with MS. This study highlights the value of combining explainable AI (xAI) with traditional statistical methods to gain deeper insights into disease mechanisms.
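To make the attribution step concrete, here is a minimal sketch of what a Shapley value measures, computed by brute force over all feature orderings for a tiny hand-written model. Everything in it is an assumption for illustration: the model `toy_model`, the feature names, and the two gene sets are hypothetical, and the abstract does not say which SHAP estimator the pipeline used. Practical SHAP implementations rely on much faster estimators than this enumeration.

```python
from itertools import permutations


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to model(baseline).

    Averages each feature's marginal contribution over every ordering in
    which features are switched from their baseline value to their actual
    value. Cost grows factorially, so this is only usable for toy inputs.
    """
    n = len(x)
    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = model(current)
        for i in order:
            current[i] = x[i]
            value = model(current)
            phi[i] += value - previous
            previous = value
    return [p / len(orderings) for p in phi]


def toy_model(z):
    # Hypothetical stand-in for a trained classifier's raw score.
    return 2.0 * z[0] - 1.0 * z[1] + z[0] * z[2]


features = ["gene_a", "gene_b", "gene_c"]  # hypothetical feature names
sample = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]

phi = shapley_values(toy_model, sample, baseline)

# Rank features by absolute attribution, as one would to shortlist candidates.
ranking = sorted(zip(features, phi), key=lambda item: abs(item[1]), reverse=True)

# Comparing two candidate lists reduces to set operations (hypothetical sets).
shap_genes = {"gene_a", "gene_b"}
dea_genes = {"gene_b", "gene_c"}
shared = shap_genes & dea_genes
shap_only = shap_genes - dea_genes
dea_only = dea_genes - shap_genes
```

The attributions sum to `toy_model(sample) - toy_model(baseline)`, the additivity property that makes Shapley values usable as a per-feature decomposition of a prediction.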

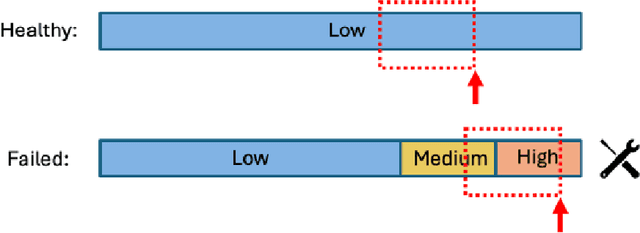

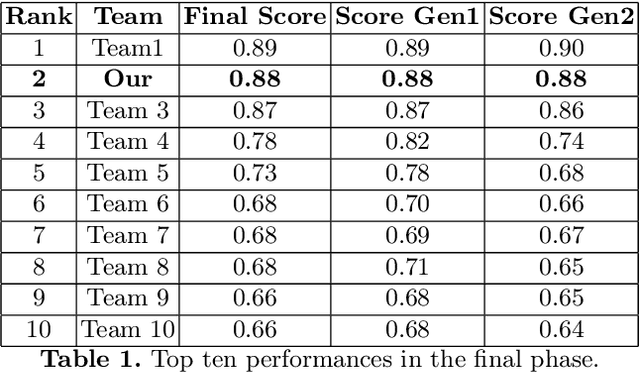

Achieving Predictive Precision: Leveraging LSTM and Pseudo Labeling for Volvo's Discovery Challenge at ECML-PKDD 2024

Sep 20, 2024

Abstract: This paper presents the second-place methodology in the Volvo Discovery Challenge at ECML-PKDD 2024, where we used Long Short-Term Memory networks and pseudo-labeling to predict maintenance needs for a component of Volvo trucks. We processed the training data to mirror the test set structure and applied a base LSTM model to label the test data iteratively. This approach refined our model's predictive capabilities and culminated in a macro-average F1-score of 0.879, demonstrating robust performance in predictive maintenance. This work provides valuable insights for applying machine learning techniques effectively in industrial settings.
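The iterative labeling loop and the reported metric can be sketched as follows. This is not the authors' implementation: a one-dimensional nearest-centroid classifier stands in for the LSTM, and the confidence rule (`min_margin`), the number of rounds, and all data values are assumptions chosen only to keep the example small.

```python
def fit_centroids(xs, ys):
    """Fit a nearest-centroid model: one mean value per class label."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids, x):
    """Return (label, margin); margin is the distance gap to the runner-up."""
    ranked = sorted(centroids, key=lambda y: abs(x - centroids[y]))
    best = ranked[0]
    if len(ranked) == 1:
        return best, float("inf")
    margin = abs(x - centroids[ranked[1]]) - abs(x - centroids[best])
    return best, margin


def pseudo_label(train_x, train_y, test_x, rounds=3, min_margin=1.0):
    """Iteratively add confidently predicted test points to the training set."""
    xs, ys = list(train_x), list(train_y)
    remaining = list(test_x)
    for _ in range(rounds):
        centroids = fit_centroids(xs, ys)
        undecided = []
        for x in remaining:
            label, margin = predict(centroids, x)
            if margin >= min_margin:
                xs.append(x)       # accept the prediction as a pseudo-label
                ys.append(label)
            else:
                undecided.append(x)
        if len(undecided) == len(remaining):
            break                  # nothing new was labeled; stop early
        remaining = undecided
    return fit_centroids(xs, ys)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy data: two labeled clusters plus unlabeled "test" points.
model = pseudo_label([0.0, 1.0, 9.0, 10.0], [0, 0, 1, 1],
                     [2.0, 3.0, 7.0, 8.0, 5.2])
example_f1 = macro_f1([0, 0, 1, 1], [0, 1, 1, 1])
```

The point near the class boundary (5.2) never clears the margin and is left unlabeled, which is the usual safeguard against reinforcing the model's own uncertain guesses.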

A Systematization of the Wagner Framework: Graph Theory Conjectures and Reinforcement Learning

Jun 18, 2024

Abstract: In 2021, Adam Zsolt Wagner proposed an approach to disprove conjectures in graph theory using Reinforcement Learning (RL). Wagner's idea can be framed as follows: consider a conjecture, such as a certain quantity f(G) < 0 for every graph G; one can then play a single-player graph-building game, where at each turn the player decides whether to add an edge or not. The game ends when all edges have been considered, resulting in a certain graph G_T, and f(G_T) is the final score of the game; RL is then used to maximize this score. This brilliant idea is as simple as it is innovative, and it lends itself to systematic generalization. Several different single-player graph-building games can be employed, along with various RL algorithms. Moreover, RL maximizes the cumulative reward, allowing for step-by-step rewards instead of a single final score, provided the final cumulative reward represents the quantity of interest f(G_T). In this paper, we discuss these and various other choices that can be significant in Wagner's framework. As a contribution to this systematization, we present four distinct single-player graph-building games. Each game can be played with either a step-by-step reward system or a single final score. We also propose a principled approach to select the most suitable neural network architecture for any given conjecture, and introduce a new dataset of graphs labeled with their Laplacian spectra. Furthermore, we provide a counterexample for a conjecture regarding the sum of the matching number and the spectral radius, which is simpler than the example provided in Wagner's original paper. The games have been implemented as environments in the Gymnasium framework and, along with the dataset, are available as open-source supplementary materials.
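The game described in the abstract can be sketched in a few lines. This is a simplified illustration, not the paper's Gymnasium environments: the score `toy_score` is a hypothetical f(G), and the two hand-written policies stand in for the RL agent. The step-by-step reward used here is the change in f after each turn, which is one way to make the cumulative reward equal f(G_T) when f of the empty graph is 0.

```python
from itertools import combinations


def count_triangles(n, edges):
    """Count triangles in a graph on vertices 0..n-1 with sorted edge tuples."""
    return sum(
        1
        for a, b, c in combinations(range(n), 3)
        if (a, b) in edges and (a, c) in edges and (b, c) in edges
    )


def toy_score(n, edges):
    # Hypothetical f(G): reward edges, penalize triangles.
    return len(edges) - 2 * count_triangles(n, edges)


def play_game(n, policy, score):
    """Visit every possible edge once; the policy decides whether to add it.

    Returns the final edge set and the per-turn rewards, where each reward
    is the change in score caused by that turn's decision.
    """
    edges = set()
    rewards = []
    previous = score(n, edges)
    for edge in combinations(range(n), 2):
        if policy(edges, edge):
            edges.add(edge)
        current = score(n, edges)
        rewards.append(current - previous)
        previous = current
    return edges, rewards


def add_everything(edges, edge):
    """Policy that accepts every edge, producing the complete graph."""
    return True


def bipartite_by_parity(edges, edge):
    """Policy that only joins vertices of different parity (triangle-free)."""
    u, v = edge
    return (u + v) % 2 == 1


complete_edges, complete_rewards = play_game(4, add_everything, toy_score)
bipartite_edges, bipartite_rewards = play_game(4, bipartite_by_parity, toy_score)
```

In both runs the rewards telescope, so their sum equals the final score: the condition the abstract places on step-by-step reward schemes.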

Improving Performance in Neural Networks by Dendrites-Activated Connections

Jan 03, 2023

Abstract: Computational units in artificial neural networks follow a simplified model of biological neurons. In the biological model, the output signal of a neuron runs down the axon, splits following the many branches at its end, and passes identically to all the downward neurons of the network. Each of the downward neurons uses its copy of this signal as one of the many inputs arriving through its dendrites, integrates them all, and fires an output if the result is above some threshold. In the artificial neural network, this translates to the fact that the nonlinear filtering of the signal is performed in the upward neuron, meaning that in practice the same activation is shared between all the downward neurons that use that signal as their input. Dendrites thus play a passive role. We propose a slightly more complex model for the biological neuron, where dendrites play an active role: the activation in the output of the upward neuron becomes optional, and instead the signals going through each dendrite undergo independent nonlinear filterings before the linear combination. We implement this new model in a ReLU computational unit and discuss its biological plausibility. We compare this new computational unit with the standard one and describe it from a geometrical point of view. We provide a Keras implementation of this unit in fully connected and convolutional layers and estimate the resulting change in FLOPs and number of weights. We then use these layers in ResNet architectures on CIFAR-10, CIFAR-100, Imagenette, and Imagewoof, obtaining performance improvements over standard ResNets of up to 1.73%. Finally, we prove a universal representation theorem for continuous functions on compact sets and show that this new unit has more representational power than its standard counterpart.
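The contrast between a shared upstream activation and per-dendrite filtering can be sketched without any framework. The exact form of the paper's unit is not given in the abstract; the version below assumes each connection applies its own ReLU with a per-connection threshold before the weighted sum, and the names `standard_layer`, `dendrite_layer`, and `thresholds` are hypothetical.

```python
def relu(v):
    return max(0.0, v)


def standard_layer(x, weights, biases):
    """Standard unit: one ReLU per upstream signal, shared by all outputs."""
    activated = [relu(v) for v in x]
    return [
        sum(w * a for w, a in zip(row, activated)) + b
        for row, b in zip(weights, biases)
    ]


def dendrite_layer(x, weights, thresholds):
    """Assumed dendrite-activated unit: each connection (j, i) filters the
    raw upstream signal with its own threshold before the weighted sum."""
    return [
        sum(w * relu(xi - t) for w, xi, t in zip(w_row, x, t_row))
        for w_row, t_row in zip(weights, thresholds)
    ]


x = [1.0, -2.0, 0.5]                       # upstream pre-activation signals
weights = [[1.0, 1.0, 1.0], [0.5, -1.0, 2.0]]

# With all thresholds at zero, the two layers coincide (zero bias).
zero_thresholds = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
shared = standard_layer(x, weights, [0.0, 0.0])
same_as_shared = dendrite_layer(x, weights, zero_thresholds)

# With per-connection thresholds, the first output can still "see" the
# negative signal x[1], while the second output keeps filtering it out.
mixed_thresholds = [[0.5, -3.0, 0.0], [0.0, 0.0, 0.0]]
independent = dendrite_layer(x, weights, mixed_thresholds)
```

Under this assumption, the standard layer is the special case in which every connection shares the same filter, which is consistent with the abstract's claim that the new unit has at least the representational power of the standard one.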
