Michael Pust

Essential-Web v1.0: 24T tokens of organized web data

Jun 17, 2025

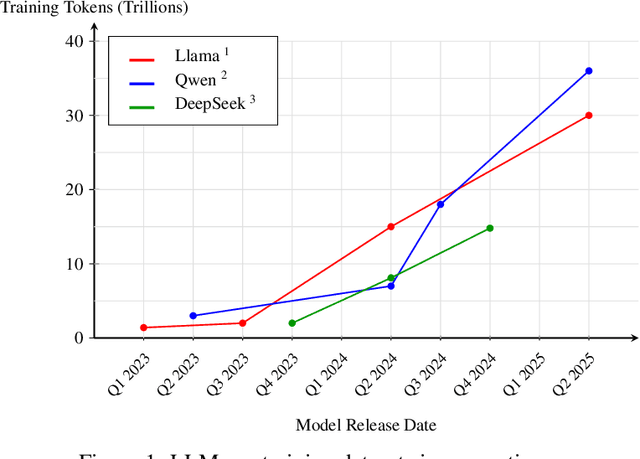

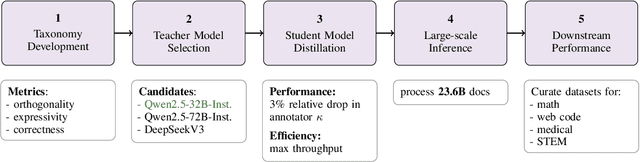

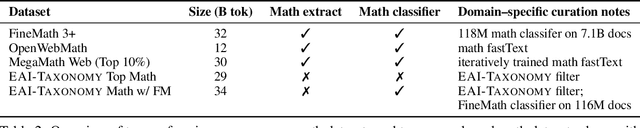

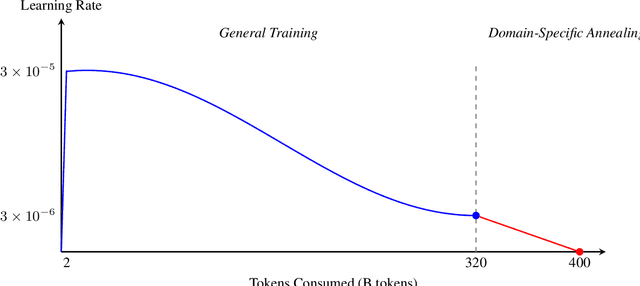

Abstract: Data plays the most prominent role in how language models acquire skills and knowledge. The lack of massive, well-organized pre-training datasets results in costly and inaccessible data pipelines. We present Essential-Web v1.0, a 24-trillion-token dataset in which every document is annotated with a twelve-category taxonomy covering topic, format, content complexity, and quality. Taxonomy labels are produced by EAI-Distill-0.5b, a fine-tuned 0.5b-parameter model that achieves an annotator agreement within 3% of Qwen2.5-32B-Instruct. With nothing more than SQL-style filters, we obtain competitive web-curated datasets in math (-8.0% relative to SOTA), web code (+14.3%), STEM (+24.5%) and medical (+8.6%). Essential-Web v1.0 is available on HuggingFace: https://huggingface.co/datasets/EssentialAI/essential-web-v1.0
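To make "SQL-style filters" concrete, here is a minimal sketch of selecting documents by taxonomy labels. The column names (`topic`, `quality`), the label values, and the helper `select_docs` are hypothetical and chosen only for illustration; they are not the dataset's actual schema, and the sample rows are invented.

```python
import sqlite3

# Hypothetical rows: (doc_id, topic, quality). Column names and label values
# are illustrative assumptions, not the dataset's actual schema.
ROWS = [
    ("doc-1", "mathematics", 4),
    ("doc-2", "cooking", 5),
    ("doc-3", "mathematics", 1),
    ("doc-4", "medicine", 3),
]


def select_docs(rows, topic, min_quality):
    """Return ids of documents with the given topic and at least min_quality."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE docs (doc_id TEXT, topic TEXT, quality INTEGER)")
    conn.executemany("INSERT INTO docs VALUES (?, ?, ?)", rows)
    cur = conn.execute(
        "SELECT doc_id FROM docs WHERE topic = ? AND quality >= ? ORDER BY doc_id",
        (topic, min_quality),
    )
    result = [row[0] for row in cur.fetchall()]
    conn.close()
    return result


math_docs = select_docs(ROWS, "mathematics", 3)
print(math_docs)
```

The point of the sketch is that curation reduces to a `WHERE` clause over precomputed labels, so a new domain subset needs only a different predicate rather than a new classifier pass.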

Practical Efficiency of Muon for Pretraining

May 04, 2025

Abstract: We demonstrate that Muon, the simplest instantiation of a second-order optimizer, explicitly expands the Pareto frontier over AdamW on the compute-time tradeoff. We find that Muon is more effective than AdamW in retaining data efficiency at large batch sizes, far beyond the so-called critical batch size, while remaining computationally efficient, thus enabling more economical training. We study the combination of Muon and the maximal update parameterization (muP) for efficient hyperparameter transfer and present a simple telescoping algorithm that accounts for all sources of error in muP while introducing only a modest overhead in resources. We validate our findings through extensive experiments with model sizes up to four billion parameters and ablations on the data distribution and architecture.

Rethinking Reflection in Pre-Training

Apr 05, 2025

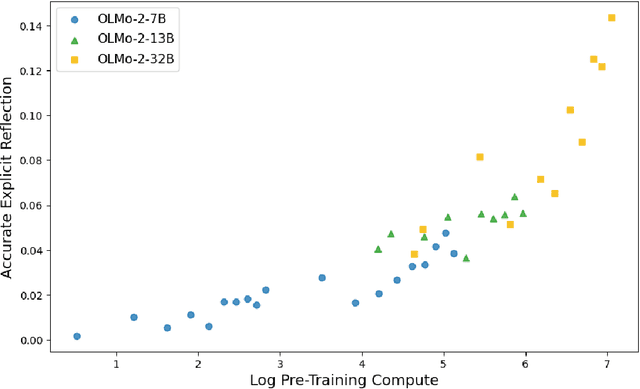

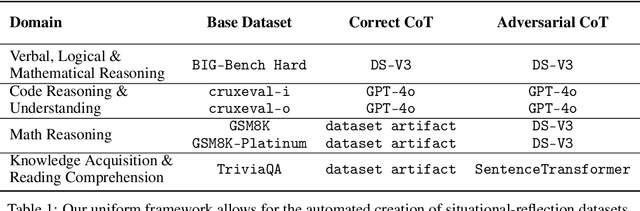

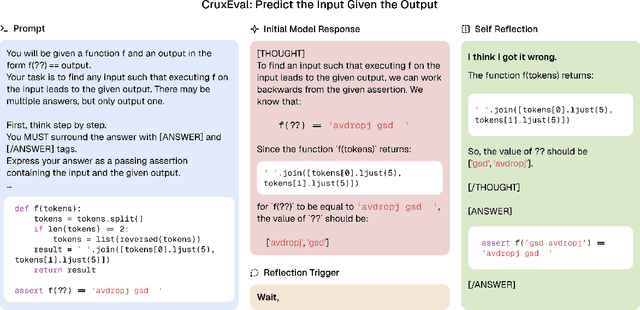

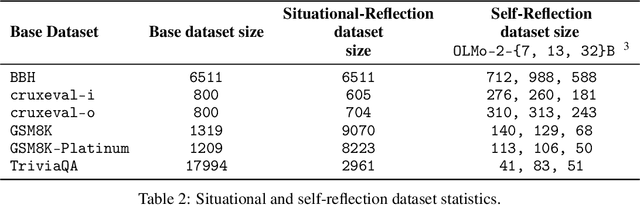

Abstract: A language model's ability to reflect on its own reasoning provides a key advantage for solving complex problems. While most recent research has focused on how this ability develops during reinforcement learning, we show that it actually begins to emerge much earlier - during the model's pre-training. To study this, we introduce deliberate errors into chains-of-thought and test whether the model can still arrive at the correct answer by recognizing and correcting these mistakes. By tracking performance across different stages of pre-training, we observe that this self-correcting ability appears early and improves steadily over time. For instance, an OLMo2-7B model pre-trained on 4 trillion tokens displays self-correction on our six self-reflection tasks.

Augmenting Statistical Machine Translation with Subword Translation of Out-of-Vocabulary Words

Aug 16, 2018

Abstract: Most statistical machine translation systems cannot translate words that are unseen in the training data. However, humans can translate many classes of out-of-vocabulary (OOV) words (e.g., novel morphological variants, misspellings, and compounds) without context by using orthographic clues. Following this observation, we describe and evaluate several general methods for OOV translation that use only subword information. We pose the OOV translation problem as a standalone task and intrinsically evaluate our approaches on fourteen typologically diverse languages across varying resource levels. Adding OOV translators to a statistical machine translation system yields consistent BLEU gains (0.5 points on average, and up to 2.0) for all fourteen languages, especially in low-resource scenarios.

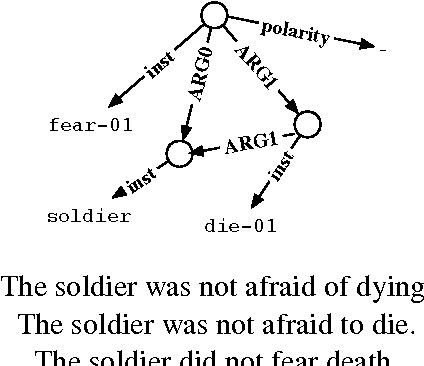

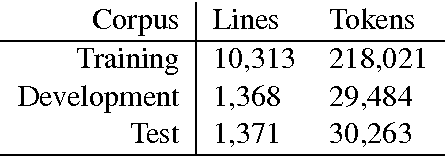

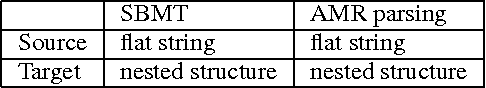

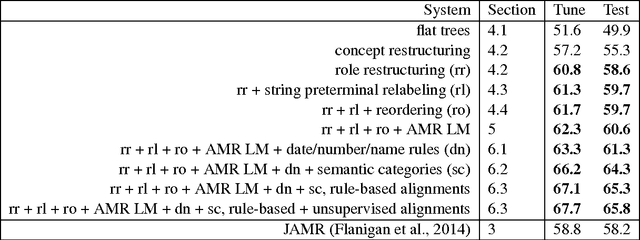

Using Syntax-Based Machine Translation to Parse English into Abstract Meaning Representation

Apr 28, 2015

Abstract: We present a parser for Abstract Meaning Representation (AMR). We treat English-to-AMR conversion within the framework of string-to-tree, syntax-based machine translation (SBMT). To make this work, we transform the AMR structure into a form suitable for the mechanics of SBMT and useful for modeling. We introduce an AMR-specific language model and add data and features drawn from semantic resources. Our resulting AMR parser improves upon state-of-the-art results by 7 Smatch points.
