Michael Lew

Preprint: Norm Loss: An efficient yet effective regularization method for deep neural networks

Mar 11, 2021

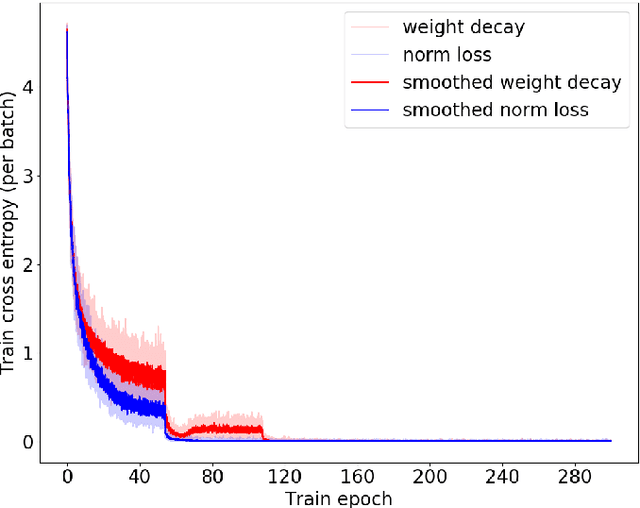

Abstract: Convolutional neural network training can suffer from diverse issues such as exploding or vanishing gradients, scaling-based weight space symmetry and covariate shift. To address these issues, researchers have developed weight regularization methods and activation normalization methods. In this work we propose a weight soft-regularization method based on the Oblique manifold. The proposed method uses a loss function which pushes each weight vector to have a norm close to one, i.e. the weight matrix is smoothly steered toward the so-called Oblique manifold. We evaluate our method on the popular CIFAR-10, CIFAR-100 and ImageNet 2012 datasets using two state-of-the-art architectures, namely ResNet and wide-ResNet. Our method introduces negligible computational overhead and the results show that it is competitive with the state of the art and in some cases superior to it. Additionally, the results are less sensitive to hyperparameter settings such as batch size and regularization factor.
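The idea of a loss that pushes each weight vector toward unit norm can be illustrated with a minimal sketch. The abstract does not give the exact formula, so the squared penalty `(1 - ||w||)^2` summed over weight vectors is an assumption made here for illustration, and the names `norm_loss` and `norm_loss_grad` are hypothetical. In training, such a term would be scaled by the regularization factor and added to the task loss.

```python
import math


def norm_loss(weight_rows):
    """Assumed penalty: sum over weight vectors of (1 - ||w||_2)^2."""
    total = 0.0
    for w in weight_rows:
        norm = math.sqrt(sum(x * x for x in w))
        total += (1.0 - norm) ** 2
    return total


def norm_loss_grad(weight_rows):
    """Gradient of the assumed penalty with respect to each weight vector.

    d/dw (1 - ||w||)^2 = -2 * (1 - ||w||) * w / ||w||
    """
    grads = []
    for w in weight_rows:
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            # The gradient is undefined at the zero vector; this sketch returns zeros.
            grads.append([0.0] * len(w))
            continue
        scale = -2.0 * (1.0 - norm) / norm
        grads.append([scale * x for x in w])
    return grads


# One weight vector per output unit (hypothetical values).
weights = [[3.0, 4.0], [0.6, 0.8]]
step = 0.1
grads = norm_loss_grad(weights)
updated = [[x - step * g for x, g in zip(w, gw)] for w, gw in zip(weights, grads)]
print(norm_loss(weights), norm_loss(updated))
```

A gradient step on this penalty shrinks vectors whose norm exceeds one and grows vectors whose norm is below one, which is the "soft" steering toward unit norm that the abstract describes.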

Preprint: Comparison of deep learning and hand crafted features for mining simulation data

Mar 11, 2021

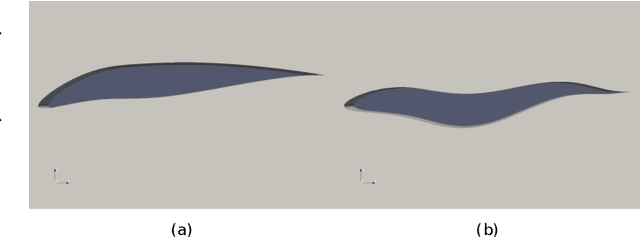

Abstract: Computational Fluid Dynamics (CFD) simulations are a very important tool for many industrial applications, such as aerodynamic optimization of engineering designs like car shapes, airplane parts, etc. The output of such simulations, in particular the calculated flow fields, is usually very complex and hard to interpret for realistic three-dimensional real-world applications, especially if time-dependent simulations are investigated. Automated data analysis methods are warranted, but a non-trivial obstacle is the very large dimensionality of the data. A flow field typically consists of six measurement values for each point of the computational grid in 3D space and time (velocity vector values, turbulent kinetic energy, pressure and viscosity). In this paper we address the task of extracting meaningful results in an automated manner from such high-dimensional data sets. We propose deep learning methods which are capable of processing such data and which can be trained to solve relevant tasks on simulation data, i.e. predicting drag and lift forces applied on an airfoil. We also propose an adaptation of the classical hand crafted features known from computer vision to address the same problem and compare a large variety of descriptors and detectors. Finally, we compile a large dataset of 2D simulations of the flow field around airfoils, containing 16000 flow fields, on which we tested and compared the approaches. Our results show that the deep learning-based methods, as well as the hand crafted feature-based approaches, are capable of accurately describing the content of the CFD simulation output on the proposed dataset.

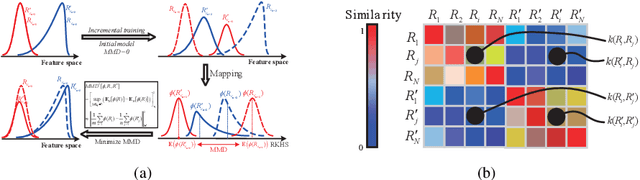

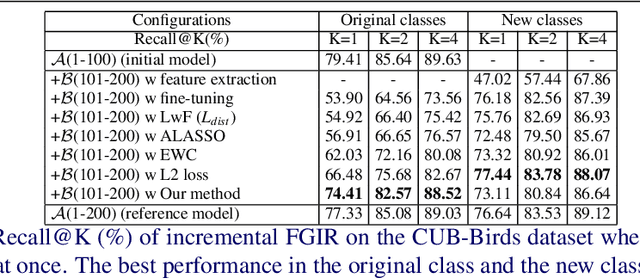

On the Exploration of Incremental Learning for Fine-grained Image Retrieval

Oct 15, 2020

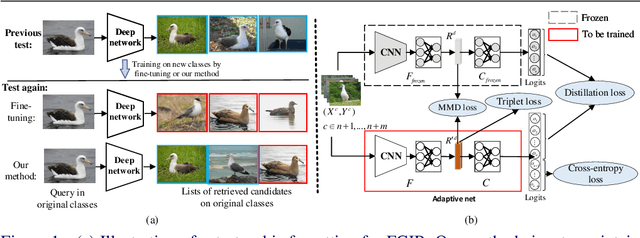

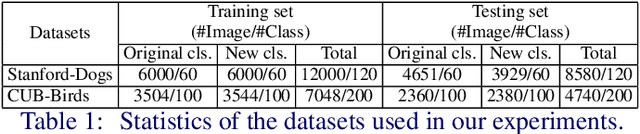

Abstract: In this paper, we consider the problem of fine-grained image retrieval in an incremental setting, where new categories are added over time. On the one hand, repeatedly training the representation on the extended dataset is time-consuming. On the other hand, fine-tuning the learned representation only with the new classes leads to catastrophic forgetting. To this end, we propose an incremental learning method to mitigate the retrieval performance degradation caused by forgetting. Without accessing any samples of the original classes, the classifier of the original network provides soft "labels" to transfer knowledge when training the adaptive network, so as to preserve the previous capability for classification. More importantly, a regularization function based on Maximum Mean Discrepancy is devised to minimize the discrepancy between the features of the new classes produced by the original network and those produced by the adaptive network. Extensive experiments on two datasets show that our method effectively mitigates catastrophic forgetting on the original classes while achieving high performance on the new classes.
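As a rough illustration of a Maximum Mean Discrepancy term between two sets of features, the sketch below computes the standard biased estimate of squared MMD. The Gaussian kernel, the bandwidth `sigma`, and the function names are assumptions chosen for illustration; the abstract does not state which kernel or estimator the paper uses.

```python
import math


def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian (RBF) kernel between two feature vectors (assumed kernel choice)."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def mmd_squared(xs, ys, sigma=1.0):
    """Biased estimate of squared MMD between feature sets xs and ys.

    MMD^2 = mean k(x, x') + mean k(y, y') - 2 * mean k(x, y)
    """
    def mean_kernel(p, q):
        total = sum(gaussian_kernel(a, b, sigma) for a in p for b in q)
        return total / (len(p) * len(q))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


# Hypothetical features of the same new-class images from two networks.
original_feats = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
adaptive_feats = [[0.1, 0.0], [1.2, 0.1], [0.0, 0.8]]
print(mmd_squared(original_feats, adaptive_feats))
```

The value is zero when the two feature sets coincide and grows as their distributions drift apart, so minimizing it as a regularizer keeps the adaptive network's features close to those of the original network.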

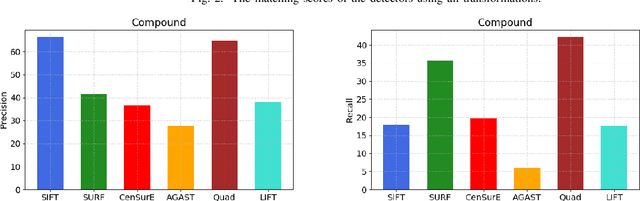

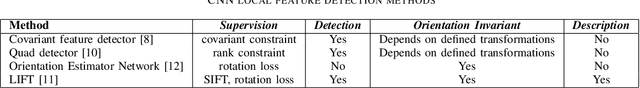

A Comparison of CNN and Classic Features for Image Retrieval

Aug 25, 2019

Abstract: Feature detectors and descriptors have been successfully used for various computer vision tasks, such as video object tracking and content-based image retrieval. Many methods use image gradients in different stages of the detection-description pipeline to describe local image structures. Recently, some or all of these stages have been replaced by convolutional neural networks (CNNs) in order to increase their performance. A detector is defined as a selection problem, which makes it more challenging to implement as a CNN. Detectors are therefore generally defined as regressors that convert input images to score maps, from which keypoints can be selected with non-maximum suppression. This paper discusses and compares several recent methods that use CNNs for keypoint detection. Experiments are performed on both the CNN-based approaches and a selection of conventional methods. In addition to qualitative measures defined on keypoints and descriptors, the bag-of-words (BoW) model is used to implement an image retrieval application, in order to determine how the methods perform in practice. The results show that each type of feature performs best in a different context.
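The step of selecting keypoints from a score map with non-maximum suppression can be sketched as follows. This is a generic illustration and not the procedure of any specific method compared in the paper; the function name `nms_keypoints`, the score threshold, and the square window radius are all assumptions.

```python
def nms_keypoints(score_map, threshold=0.5, radius=1):
    """Return (row, col, score) for pixels that pass the threshold and are
    strictly greater than every other score in their (2*radius+1)^2 window.

    Note: exact ties inside a window suppress each other in this sketch.
    """
    height = len(score_map)
    width = len(score_map[0]) if height else 0
    keypoints = []
    for r in range(height):
        for c in range(width):
            score = score_map[r][c]
            if score < threshold:
                continue
            is_local_max = True
            # Compare against every neighbour inside the window, clipped at the borders.
            for rr in range(max(0, r - radius), min(height, r + radius + 1)):
                for cc in range(max(0, c - radius), min(width, c + radius + 1)):
                    if (rr, cc) != (r, c) and score_map[rr][cc] >= score:
                        is_local_max = False
            if is_local_max:
                keypoints.append((r, c, score))
    return keypoints


# Hypothetical 3x3 score map, as a detector network might output.
scores = [
    [0.1, 0.2, 0.1],
    [0.2, 0.9, 0.3],
    [0.1, 0.3, 0.8],
]
print(nms_keypoints(scores))  # [(1, 1, 0.9)]
```

In the example, the 0.8 response is above the threshold but is suppressed because the stronger 0.9 response lies inside its window, so only one keypoint survives.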
