Michael Baiocchi

Avoiding Biased Clinical Machine Learning Model Performance Estimates in the Presence of Label Selection

Sep 15, 2022

Abstract: When evaluating the performance of clinical machine learning models, one must consider the deployment population. When the population of patients with observed labels is only a subset of the deployment population (label selection), standard model performance estimates on the observed population may be misleading. In this study we describe three classes of label selection and simulate five causally distinct scenarios to assess how particular selection mechanisms bias a suite of commonly reported binary machine learning model performance metrics. Simulations reveal that when selection is affected by observed features, naive estimates of model discrimination may be misleading. When selection is affected by labels, naive estimates of calibration fail to reflect reality. We borrow traditional weighting estimators from the causal inference literature and find that when selection probabilities are properly specified, they recover full population estimates. We then tackle the real-world task of monitoring the performance of deployed machine learning models whose interactions with clinicians feed back and affect the selection mechanism of the labels. We train three machine learning models to flag low-yield laboratory diagnostics, and simulate their intended consequence of reducing wasteful laboratory utilization. We find that naive estimates of AUROC on the observed population undershoot actual performance by up to 20%. Such a disparity could be large enough to lead to the wrongful termination of a successful clinical decision support tool. We propose an altered deployment procedure, one that combines injected randomization with traditional weighted estimates, and find it recovers true model performance.
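The weighting idea in the abstract can be illustrated with a toy simulation. The sketch below is not the paper's experimental setup: the data-generating process, the selection rule `p_sel`, and the helper `weighted_auroc` are all assumptions made for illustration. Labels are observed mostly for ambiguous patients (feature values near zero), selection depends only on the observed feature, and the true selection probabilities are assumed known so that inverse probability weights can be formed.

```python
import numpy as np

def weighted_auroc(y, score, w):
    """Weighted AUROC: weighted share of (positive, negative) pairs that the
    score ranks correctly, with tied pairs counted as half."""
    y = np.asarray(y).astype(bool)
    score = np.asarray(score, dtype=float)
    w = np.asarray(w, dtype=float)
    order = np.argsort(score[~y])
    neg_s = score[~y][order]
    # neg_cw[k] = total weight of the k lowest-scoring negatives
    neg_cw = np.concatenate([[0.0], np.cumsum(w[~y][order])])
    below = neg_cw[np.searchsorted(neg_s, score[y], side="left")]
    below_or_tied = neg_cw[np.searchsorted(neg_s, score[y], side="right")]
    wins = w[y] * (below + 0.5 * (below_or_tied - below))
    return float(wins.sum() / (w[y].sum() * neg_cw[-1]))

rng = np.random.default_rng(0)
n = 20_000
x = rng.normal(size=n)                                # observed feature
y = rng.random(n) < 1.0 / (1.0 + np.exp(-1.5 * x))    # true binary label
score = x                                             # hypothetical model score

# Assumed selection mechanism: labels are observed mostly when x is near 0.
p_sel = 0.1 + 0.8 * np.exp(-x ** 2)
selected = rng.random(n) < p_sel

full = weighted_auroc(y, score, np.ones(n))           # unobservable in practice
naive = weighted_auroc(y[selected], score[selected], np.ones(selected.sum()))
ipw = weighted_auroc(y[selected], score[selected], 1.0 / p_sel[selected])

print(f"full={full:.3f}  naive={naive:.3f}  ipw={ipw:.3f}")
```

In this toy setting the naive estimate on the selected patients falls below the full-population AUROC, while the weighted estimate lands much closer to it. The recovery depends on the selection probabilities being correctly specified, which mirrors the condition stated in the abstract.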

A comparison of methods for model selection when estimating individual treatment effects

Jun 13, 2018

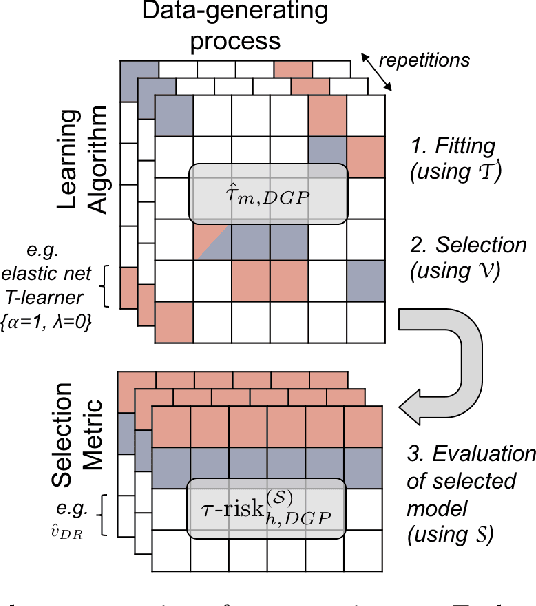

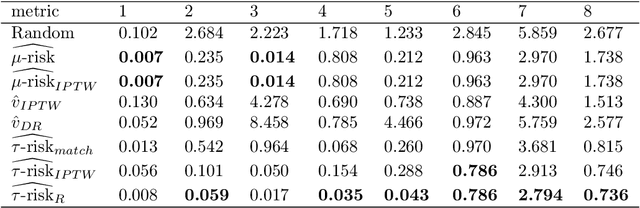

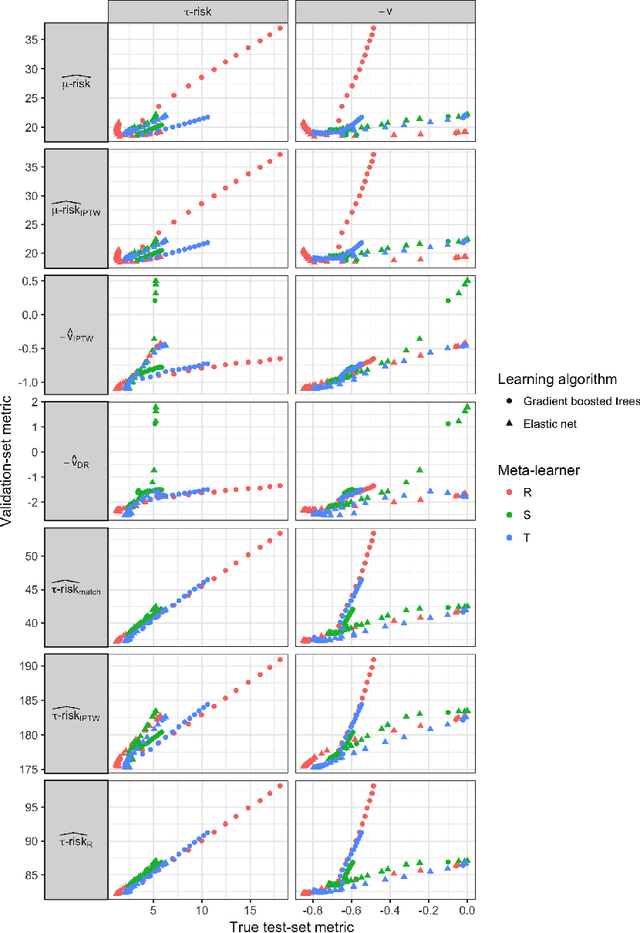

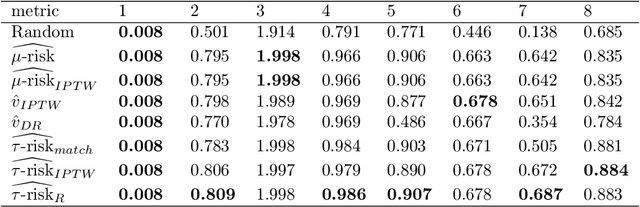

Abstract: Practitioners in medicine, business, political science, and other fields are increasingly aware that decisions should be personalized to each patient, customer, or voter. A given treatment (e.g. a drug or advertisement) should be administered only to those who will respond most positively, and certainly not to those who will be harmed by it. Individual-level treatment effects can be estimated with tools adapted from machine learning, but different models can yield contradictory estimates. Unlike risk prediction models, however, treatment effect models cannot be easily evaluated against each other using a held-out test set because the true treatment effect itself is never directly observed. Besides outcome prediction accuracy, several metrics that can leverage held-out data to evaluate treatment effect models have been proposed, but they are not widely used. We provide a didactic framework that elucidates the relationships between the different approaches and compare them all using a variety of simulations of both randomized and observational data. Our results show that researchers estimating heterogeneous treatment effects need not limit themselves to a single model-fitting algorithm. Instead of relying on a single method, multiple models fit by a diverse set of algorithms should be evaluated against each other using an objective function learned from the validation set. The model minimizing that objective should be used for estimating the individual treatment effect for future individuals.
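As a rough illustration of how held-out data can rank treatment effect models without observing the true effect, the sketch below uses a transformed-outcome loss on simulated randomized data. This is one well-known metric of this kind and is not necessarily the objective the paper recommends. The simulation, the known treatment probability `e`, and the three candidate predictions are assumptions made for illustration. The key fact is that, under randomization with known `e`, the transformed outcome `y * (w - e) / (e * (1 - e))` has conditional expectation equal to the treatment effect.

```python
import numpy as np

def transformed_outcome(y, w, e):
    """Transformed outcome whose conditional mean equals the treatment effect
    when treatment w is randomized with known probability e."""
    return y * (w - e) / (e * (1.0 - e))

def transformed_outcome_loss(tau_hat, y, w, e):
    """Mean squared error between predicted effects and the transformed outcome."""
    return float(np.mean((transformed_outcome(y, w, e) - tau_hat) ** 2))

# Simulated held-out validation set from a randomized experiment (assumed).
rng = np.random.default_rng(1)
n = 20_000
e = 0.5
x = rng.uniform(-1.0, 1.0, size=n)
w = rng.binomial(1, e, size=n)
tau = 1.0 + 2.0 * x                        # true effect, never observed directly
y = x + w * tau + rng.normal(size=n)

# Hypothetical predictions from three already-fitted candidate models.
candidates = {
    "linear": 1.0 + 2.0 * x,               # matches the true effect
    "constant": np.full(n, 1.0),           # average effect only
    "reversed": 1.0 - 2.0 * x,             # wrong sign on the heterogeneity
}

losses = {name: transformed_outcome_loss(pred, y, w, e)
          for name, pred in candidates.items()}
best = min(losses, key=losses.get)
print(losses, best)
```

The loss values themselves are large because the transformed outcome is noisy, so only their ordering across models is informative. For observational data the constant `e` would have to be replaced by an estimated propensity score, which this sketch does not attempt.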
