Mengyun Shi

Fashionpedia-Ads: Do Your Favorite Advertisements Reveal Your Fashion Taste?

May 03, 2023

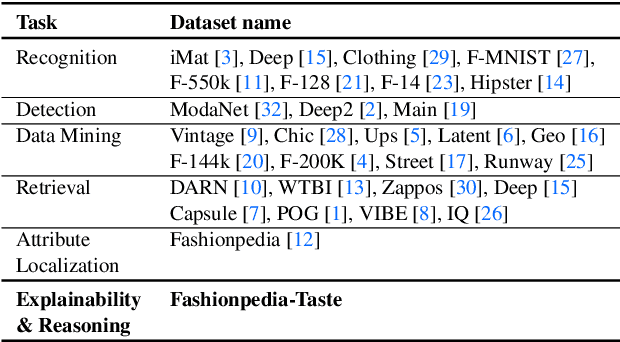

Abstract: Consumers are exposed to advertisements across many different domains on the internet, such as fashion, beauty, cars, food, and others. Meanwhile, fashion is the second-highest e-commerce shopping category. Does consumers' recorded digital behavior on various fashion ad images reveal their fashion taste? Do ads from other domains reveal their fashion taste as well? In this paper, we study the correlation between advertisements and fashion taste. Toward this goal, we introduce a new dataset, Fashionpedia-Ads, which asks subjects to provide their preferences on both ad (fashion, beauty, car, and dessert) and fashion product (social network and e-commerce style) images. Furthermore, we exhaustively collect and annotate the emotional, visual, and textual information on the ad images from multiple perspectives (abstractive level, physical level, captions, and brands). We open-source Fashionpedia-Ads to enable future studies and encourage more approaches to interpretability research between advertisements and fashion taste.

Fashionpedia-Taste: A Dataset towards Explaining Human Fashion Taste

May 03, 2023

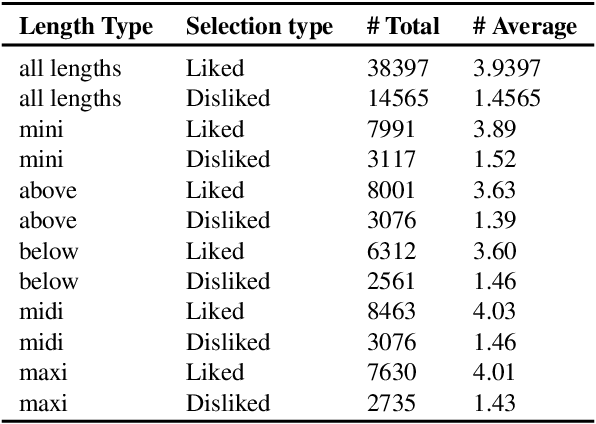

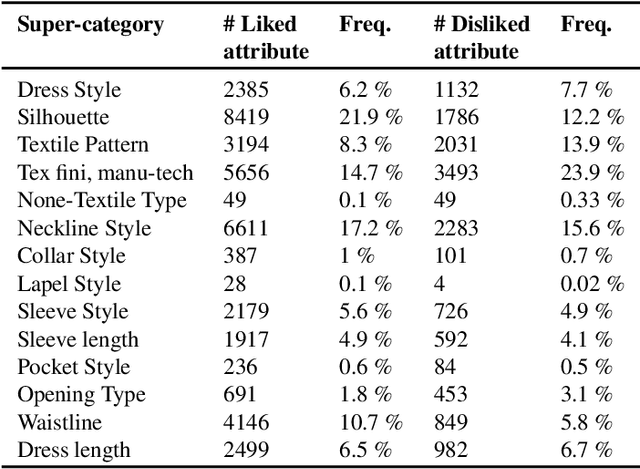

Abstract: Existing fashion datasets do not consider the multiple factors that cause a consumer to like or dislike a fashion image. Even when two consumers like the same fashion image, they could like it for totally different reasons. In this paper, we study the reasons why a consumer likes a certain fashion image. Toward this goal, we introduce an interpretability dataset, Fashionpedia-Taste, consisting of rich annotations that explain why a subject likes or dislikes a fashion image from the following 3 perspectives: 1) localized attributes; 2) human attention; 3) caption. Furthermore, subjects are asked to provide their personal attributes and fashion preferences, such as personality and preferred fashion brands. Our dataset makes it possible for researchers to build computational models to fully understand and interpret human fashion taste from different humanistic perspectives and modalities.

Using Artificial Intelligence to Analyze Fashion Trends

May 03, 2020

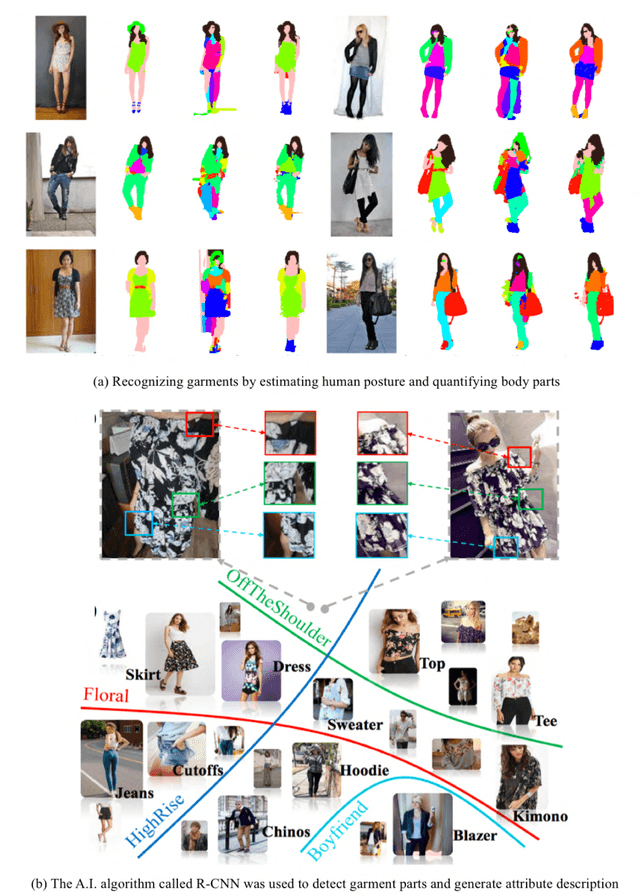

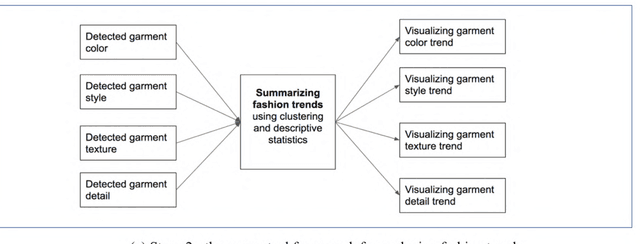

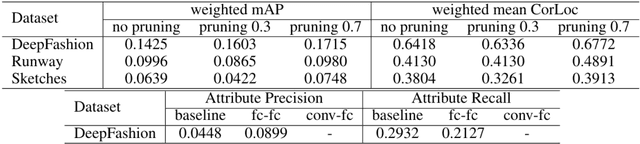

Abstract: Analyzing fashion trends is essential in the fashion industry. Current fashion forecasting firms, such as WGSN, utilize visual information from around the world to analyze and predict fashion trends. However, analyzing fashion trends is time-consuming and extremely labor-intensive, requiring individual employees' manual editing and classification. To improve the efficiency of data analysis of such image-based information and lower the cost of analyzing fashion images, this study proposes a data-driven quantitative abstracting approach using an artificial intelligence (A.I.) algorithm. Specifically, an A.I. model was trained on fashion images from a large-scale dataset under different scenarios, for example in online stores and street snapshots. This model was used to detect garments and classify clothing attributes such as textures, garment style, and details for runway photos and videos. It was found that the A.I. model can generate rich attribute descriptions of detected regions and accurately bound the garments in the images. Adoption of the A.I. algorithm demonstrated promising results and the potential to classify garment types and details automatically, which can make the process of trend forecasting faster and more cost-effective.

Fashionpedia: Ontology, Segmentation, and an Attribute Localization Dataset

Apr 26, 2020

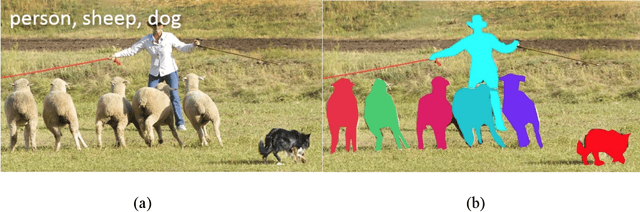

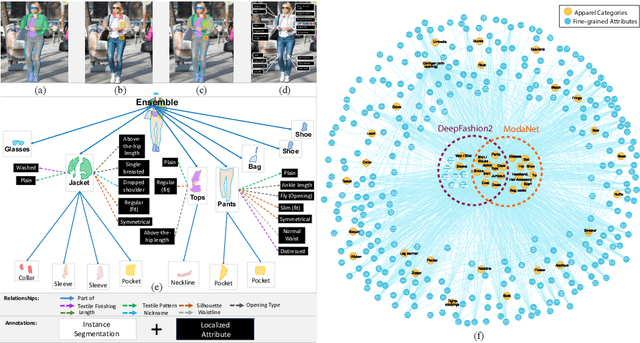

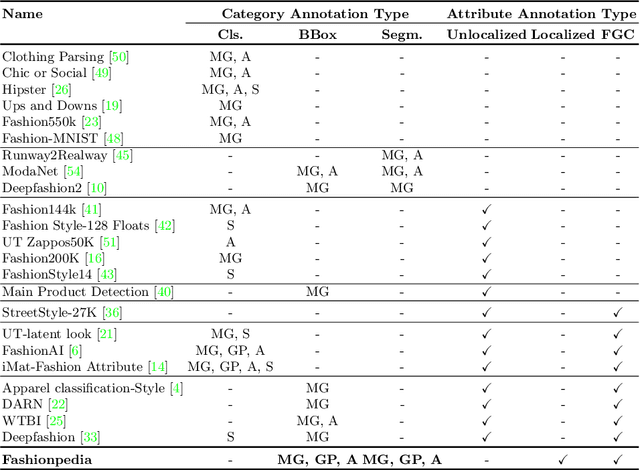

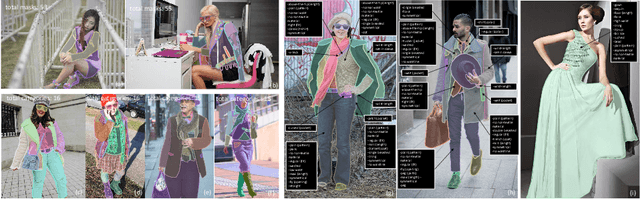

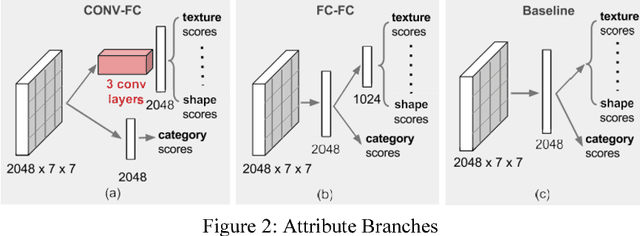

Abstract: In this work, we explore the task of instance segmentation with attribute localization, which unifies instance segmentation (detect and segment each object instance) and fine-grained visual attribute categorization (recognize one or multiple attributes). The proposed task requires both localizing an object and describing its properties. To illustrate the various aspects of this task, we focus on the domain of fashion and introduce Fashionpedia as a step toward mapping out the visual aspects of the fashion world. Fashionpedia consists of two parts: (1) an ontology built by fashion experts containing 27 main apparel categories, 19 apparel parts, 294 fine-grained attributes, and their relationships; (2) a dataset with everyday and celebrity event fashion images annotated with segmentation masks and their associated per-mask fine-grained attributes, built upon the Fashionpedia ontology. To solve this challenging task, we propose a novel Attribute-Mask RCNN model to jointly perform instance segmentation and localized attribute recognition, and provide a novel evaluation metric for the task. We also demonstrate that instance segmentation models pre-trained on Fashionpedia achieve better transfer learning performance on other fashion datasets than ImageNet pre-training. Fashionpedia is available at: https://fashionpedia.github.io/home/index.html.

A Deep-Learning-Based Fashion Attributes Detection Model

Oct 24, 2018

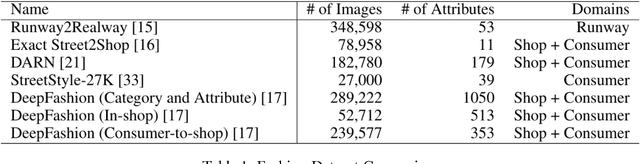

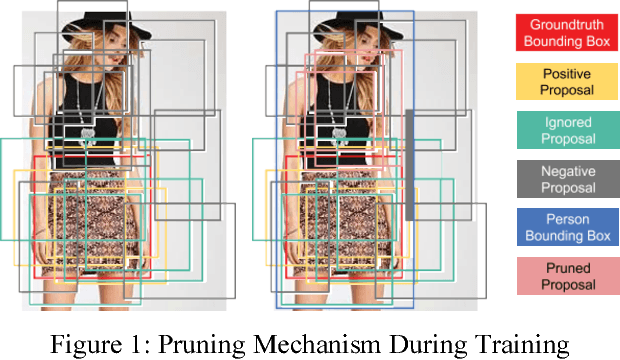

Abstract: Analyzing fashion attributes is essential in the fashion design process. Current fashion forecasting firms, such as WGSN, utilize information from all around the world (from fashion shows, visual merchandising, blogs, etc.). They gather information by experience, by observation, by media scan, by interviews, and by exposure to new things. This information-analysis process is called abstracting, which recognizes similarities or differences across garments and collections. In fact, this abstraction ability is useful in many fashion careers with different purposes. Fashion forecasters abstract across design collections and across time to identify fashion change and directions; designers, product developers, and buyers abstract across a group of garments and collections to develop cohesive and visually appealing lines; sales and marketing executives abstract across product lines each season to recognize selling points; fashion journalists and bloggers abstract across runway photos to recognize symbolic core concepts that can be translated into editorial features. Fashion attribute analysis for such fashion insiders requires much more detailed and in-depth attribute annotation than that for consumers, and requires inference on multiple domains. In this project, we propose a data-driven approach for recognizing fashion attributes. Specifically, a modified version of the Faster R-CNN model is trained on images from a large-scale localization dataset with 594 fine-grained attributes under different scenarios, for example in online stores and street snapshots. This model will then be used to detect garment items and classify clothing attributes for runway photos and fashion illustrations.
