A Deep-Learning-Based Fashion Attributes Detection Model

Paper and Code

Oct 24, 2018

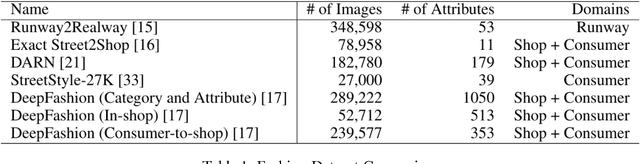

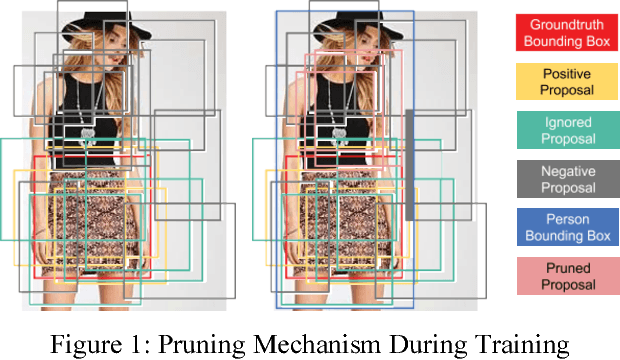

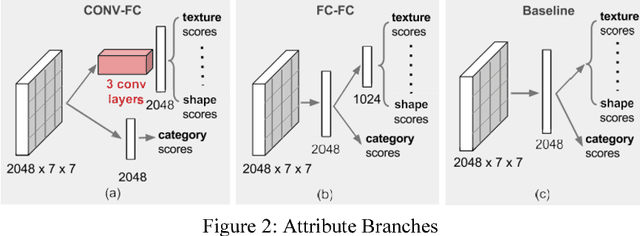

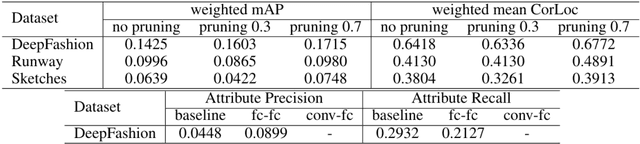

Analyzing fashion attributes is essential in the fashion design process. Current fashion forecasting firms, such as WGSN, utilize information from all around the world (from fashion shows, visual merchandising, blogs, etc.). They gather information by experience, by observation, by media scan, by interviews, and by exposure to new things. This process of analyzing information is called abstracting, which recognizes similarities and differences across garments and collections. In fact, this ability to abstract is useful in many fashion careers, for different purposes. Fashion forecasters abstract across design collections and across time to identify fashion change and directions; designers, product developers, and buyers abstract across a group of garments and collections to develop cohesive and visually appealing lines; sales and marketing executives abstract across the product line each season to recognize selling points; fashion journalists and bloggers abstract across runway photos to recognize symbolic core concepts that can be translated into editorial features. Fashion attribute analysis for such fashion insiders requires much more detailed and in-depth attribute annotation than that for consumers, and requires inference across multiple domains. In this project, we propose a data-driven approach for recognizing fashion attributes. Specifically, a modified version of the Faster R-CNN model is trained on images from a large-scale localization dataset with 594 fine-grained attributes under different scenarios, for example online stores and street snapshots. This model will then be used to detect garment items and classify clothing attributes in runway photos and fashion illustrations.
