Mengxi Wu

Gecko: An Efficient Neural Architecture Inherently Processing Sequences with Arbitrary Lengths

Jan 10, 2026

Abstract: Designing a unified neural network to efficiently and inherently process sequential data with arbitrary lengths is a central and challenging problem in sequence modeling. The design choices in the Transformer, including quadratic complexity and weak length extrapolation, have limited its ability to scale to long sequences. In this work, we propose Gecko, a neural architecture that inherits the design of Mega and Megalodon (exponential moving average with gated attention), and further introduces multiple technical components to improve its capability to capture long-range dependencies, including timestep decay normalization, a sliding chunk attention mechanism, and adaptive working memory. In a controlled pretraining comparison with Llama2 and Megalodon at the scale of 7 billion parameters and 2 trillion training tokens, Gecko achieves better efficiency and long-context scalability. Gecko reaches a training loss of 1.68, significantly outperforming Llama2-7B (1.75) and Megalodon-7B (1.70), and landing close to Llama2-13B (1.67). Notably, without relying on any context-extension techniques, Gecko exhibits inherent long-context processing and retrieval capabilities, stably handling sequences of up to 4 million tokens and retrieving information from contexts up to 4× longer than its attention window. Code: https://github.com/XuezheMax/gecko-llm
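The exponential moving average named in the abstract can be illustrated with a minimal sketch. This is the plain scalar-decay recurrence y_t = alpha * x_t + (1 - alpha) * y_{t-1}, not Gecko's actual layer, whose details the abstract does not give; the function name `ema` and the parameter `alpha` are assumptions made for illustration. The point it shows is that the recurrence carries a fixed-size state and does constant work per step, regardless of sequence length.

```python
import numpy as np

def ema(x, alpha):
    """Plain exponential moving average over the first axis.

    Illustrative only: y_t = alpha * x_t + (1 - alpha) * y_{t-1}, with y_{-1} = 0.
    `alpha` is a hypothetical scalar decay in (0, 1].
    """
    x = np.asarray(x, dtype=float)
    y = np.zeros_like(x)
    state = np.zeros(x.shape[1:], dtype=float)  # fixed-size running state
    for t in range(x.shape[0]):
        state = alpha * x[t] + (1.0 - alpha) * state
        y[t] = state
    return y

smoothed = ema([1.0, 1.0, 1.0], alpha=0.5)
```

Because only `state` is carried between steps, memory use does not grow with the number of timesteps.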

Curvature Diversity-Driven Deformation and Domain Alignment for Point Cloud

Oct 03, 2024

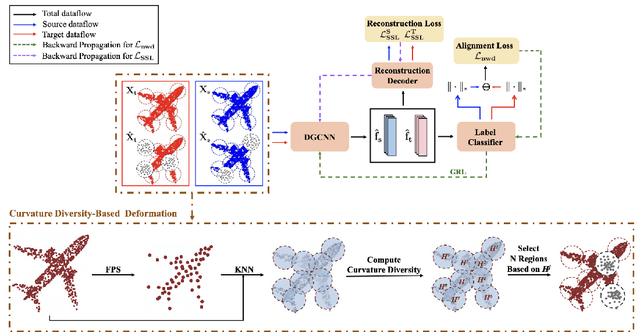

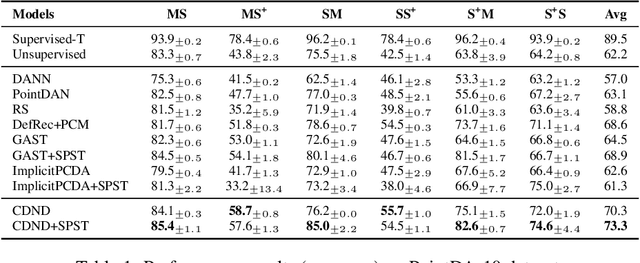

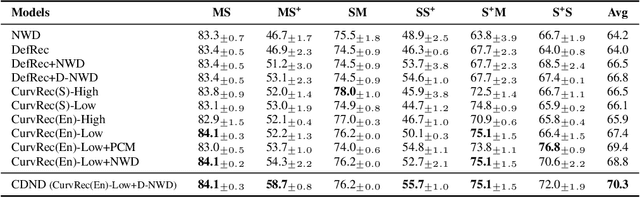

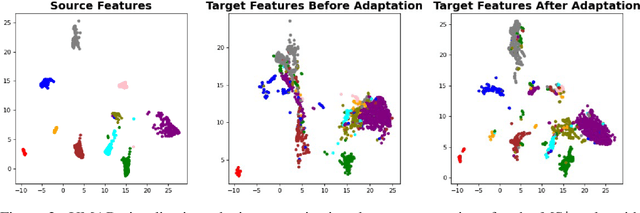

Abstract: Unsupervised Domain Adaptation (UDA) is crucial for reducing the need for extensive manual data annotation when training deep networks on point cloud data. A significant challenge of UDA lies in effectively bridging the domain gap. To tackle this challenge, we propose Curvature Diversity-Driven Nuclear-Norm Wasserstein Domain Alignment (CDND). Our approach first introduces a Curvature Diversity-driven Deformation Reconstruction (CurvRec) task, which effectively mitigates the gap between the source and target domains by enabling the model to extract salient features from semantically rich regions of a given point cloud. We then propose Deformation-based Nuclear-norm Wasserstein Discrepancy (D-NWD), which applies the Nuclear-norm Wasserstein Discrepancy to both deformed and original data samples to align the source and target domains. Furthermore, we contribute a theoretical justification for the effectiveness of D-NWD in distribution alignment and demonstrate that it is generic enough to be applied to any deformation. To validate our method, we conduct extensive experiments on two public domain adaptation datasets for point cloud classification and segmentation tasks. Empirical results show that CDND achieves state-of-the-art performance by a noticeable margin over existing approaches.

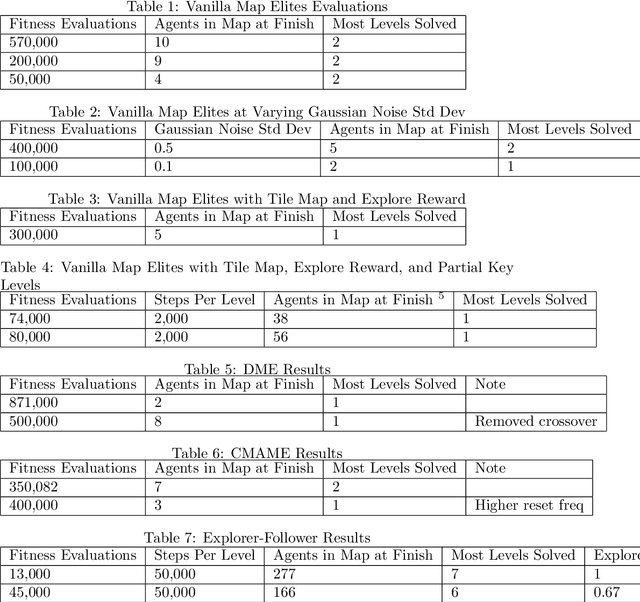

Exploring Novel Quality Diversity Methods For Generalization in Reinforcement Learning

Mar 26, 2023

Abstract: The Reinforcement Learning field is strong on achievements and weak on reapplication; a computer playing Go at a super-human level is still terrible at Tic-Tac-Toe. This paper asks whether the method of training networks improves their generalization. Specifically, we explore core quality diversity algorithms, compare against two recent algorithms, and propose a new algorithm to deal with shortcomings in existing methods. Although the results of these methods are well below the performance hoped for, our work raises important points about the choice of behavior criterion in quality diversity, the interaction of differential and evolutionary training methods, and the role of offline reinforcement learning and randomized learning in evolutionary search.

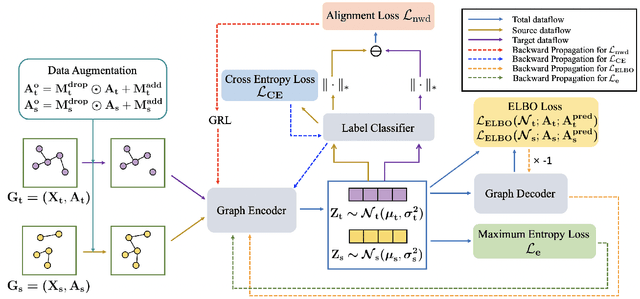

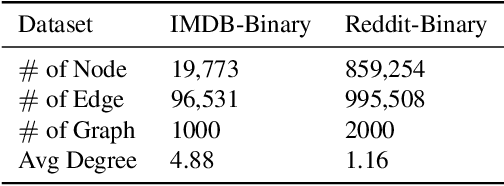

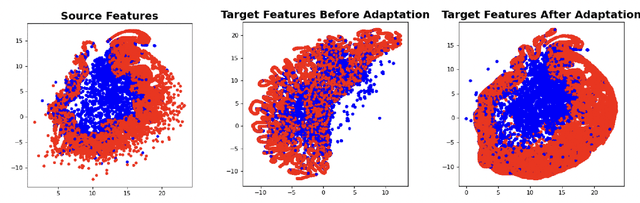

Unsupervised Domain Adaptation for Graph-Structured Data Using Class-Conditional Distribution Alignment

Jan 29, 2023

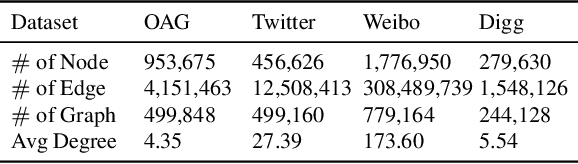

Abstract: Adopting deep learning models for graph-structured data is challenging due to the high cost of collecting and annotating large training data. Unsupervised domain adaptation (UDA) has been used successfully to address the challenge of data annotation for array-structured data. However, UDA methods for graph-structured data are quite limited. We develop a novel UDA algorithm for graph-structured data based on aligning, in a shared embedding space, the distribution of a target domain with unannotated data and the distribution of a source domain with annotated data. Specifically, we minimize both the sliced Wasserstein distance (SWD) and the maximum mean discrepancy (MMD) between the distributions of the source and the target domains at the output of graph encoding layers. Moreover, we develop a novel pseudo-label generation technique to align the distributions class-conditionally to address the challenge of class mismatch. Our empirical results on the Ego-network and the IMDB&Reddit datasets demonstrate that our method is effective and leads to state-of-the-art performance.
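The two discrepancy measures named in the abstract can be sketched generically. The code below is not the paper's implementation: it assumes two equally sized batches of embeddings, a Monte Carlo estimate of the squared sliced Wasserstein-2 distance, and a biased squared-MMD estimate with a Gaussian kernel. The function names and the parameters `n_proj`, `seed`, and `sigma` are hypothetical.

```python
import numpy as np

def sliced_wasserstein(xs, xt, n_proj=64, seed=0):
    """Monte Carlo estimate of the squared sliced Wasserstein-2 distance.

    Assumes xs and xt are (n, d) arrays with the same n, so sorted 1-D
    projections can be matched element by element.
    """
    rng = np.random.default_rng(seed)
    theta = rng.normal(size=(n_proj, xs.shape[1]))
    theta /= np.linalg.norm(theta, axis=1, keepdims=True)  # unit directions
    ps = np.sort(xs @ theta.T, axis=0)  # sort each 1-D projection
    pt = np.sort(xt @ theta.T, axis=0)
    return float(np.mean((ps - pt) ** 2))

def rbf_mmd2(xs, xt, sigma=1.0):
    """Biased estimate of squared MMD with a Gaussian (RBF) kernel."""
    def kernel(a, b):
        sq = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2.0 * a @ b.T
        return np.exp(-sq / (2.0 * sigma**2))
    return float(kernel(xs, xs).mean() + kernel(xt, xt).mean() - 2.0 * kernel(xs, xt).mean())

rng = np.random.default_rng(1)
source = rng.normal(size=(128, 8))
target = source + 3.0  # shifted copy standing in for a target domain
alignment_loss = sliced_wasserstein(source, target) + rbf_mmd2(source, target)
```

Both terms are zero when the two batches coincide and grow as the distributions move apart, which is what makes their sum usable as an alignment objective.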

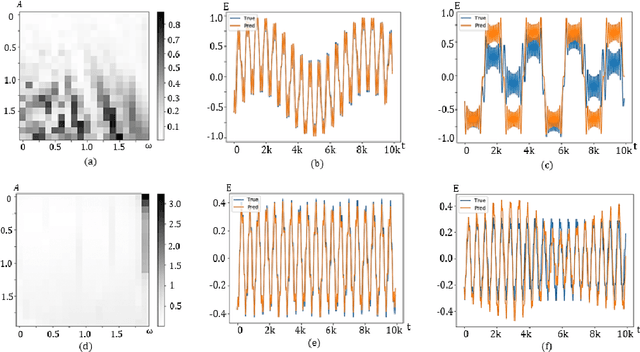

Conditional Seq2Seq model for the time-dependent two-level system

Jun 06, 2022

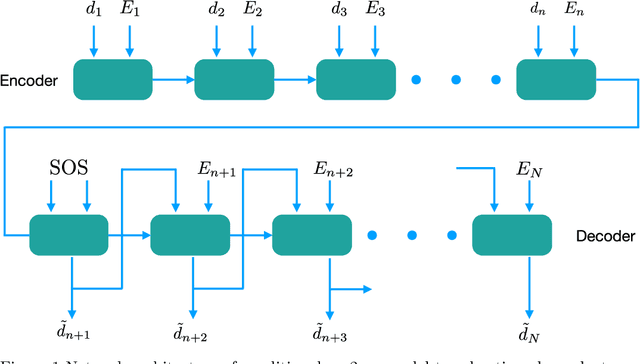

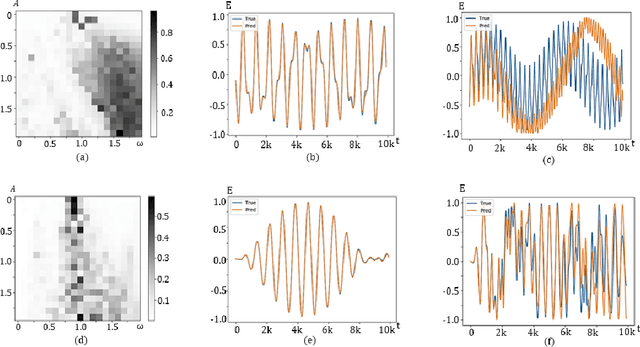

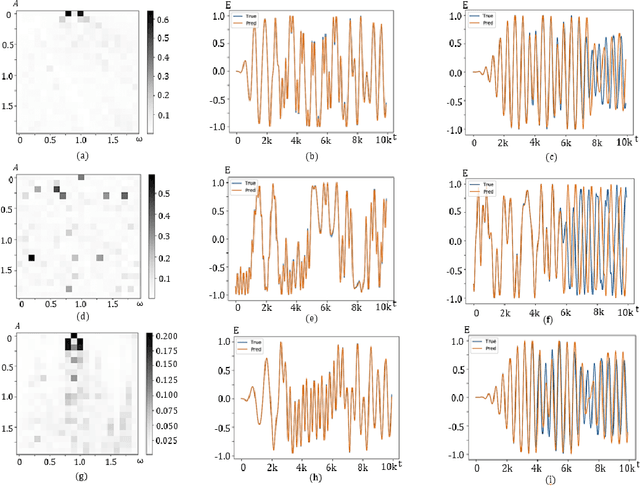

Abstract: We apply a deep learning neural network architecture to the two-level system in quantum optics to solve the time-dependent Schrödinger equation. By carefully designing the network structure and tuning parameters, above 90 percent accuracy in super long-term predictions can be achieved in the case of random electric fields, which indicates a promising new method to solve the time-dependent equation for two-level systems. We think that, with slight modifications to the network, this method can solve the two- or three-dimensional time-dependent Schrödinger equation more efficiently than traditional approaches.

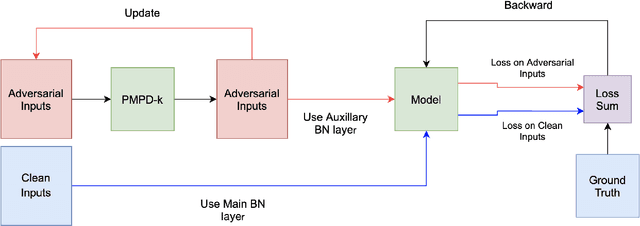

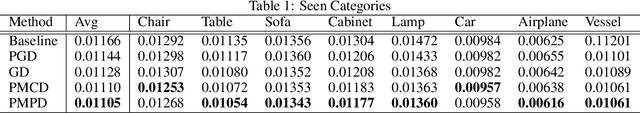

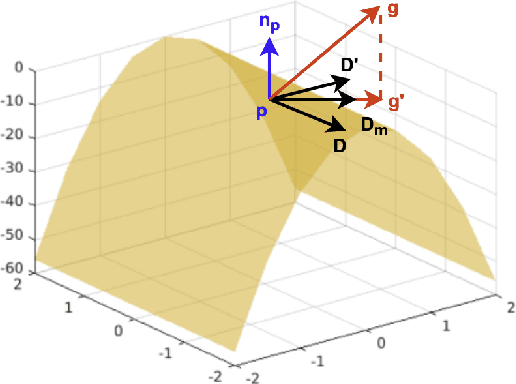

3D Point Cloud Completion with Geometric-Aware Adversarial Augmentation

Sep 21, 2021

Abstract: With the popularity of 3D sensors in self-driving and other robotics applications, extensive research has focused on designing novel neural network architectures for accurate 3D point cloud completion. However, unlike in point cloud classification and reconstruction, the role of adversarial samples in 3D point cloud completion has seldom been explored. In this work, we show that training with adversarial samples can improve the performance of neural networks on 3D point cloud completion tasks. We propose a novel approach to generate adversarial samples that benefit performance on both clean and adversarial samples. In contrast to the PGD-k attack, our method generates adversarial samples that keep the geometric features in clean samples and contain few outliers. In particular, we use principal directions to constrain the adversarial perturbations for each input point. The gradient components in the mean direction of principal directions are taken as adversarial perturbations. In addition, we investigate the effect of using the minimum curvature direction. We also adopt attack strength accumulation and auxiliary Batch Normalization layers to speed up the training process and alleviate the distribution mismatch between clean and adversarial samples. Experimental results show that training with the adversarial samples crafted by our method effectively enhances the performance of PCN on the ShapeNet dataset.
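One possible reading of the perturbation constraint described in the abstract is sketched below. It assumes the per-point loss gradient and the two unit principal directions are already available, since the abstract does not say how they are estimated, and it keeps only the gradient component along the normalized mean of those two directions. The function name `constrain_to_mean_direction` and the step size `eps` are hypothetical, not taken from the paper.

```python
import numpy as np

def constrain_to_mean_direction(grad, dir1, dir2, eps=0.01):
    """Project per-point gradients onto the mean of two principal directions.

    grad, dir1, dir2: (N, 3) arrays; dir1 and dir2 are assumed precomputed.
    Returns an (N, 3) perturbation scaled by the hypothetical step size eps.
    """
    mean_dir = dir1 + dir2
    norm = np.linalg.norm(mean_dir, axis=1, keepdims=True)
    mean_dir = mean_dir / np.maximum(norm, 1e-12)  # guard against zero-length sums
    coeff = np.sum(grad * mean_dir, axis=1, keepdims=True)  # signed projection length
    return eps * coeff * mean_dir

grad = np.array([[1.0, 2.0, 3.0]])
d1 = np.array([[1.0, 0.0, 0.0]])
d2 = np.array([[0.0, 1.0, 0.0]])
delta = constrain_to_mean_direction(grad, d1, d2, eps=1.0)
```

In this example the perturbation has no component along the z-axis, so the point moves only within the plane spanned by the two principal directions. This is the sense in which such a constraint can keep perturbed points near the surface and limit outliers.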
