Mengqing Jiang

Real-Time Ground-Plane Refined LiDAR SLAM

Oct 21, 2021

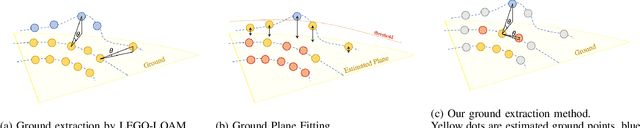

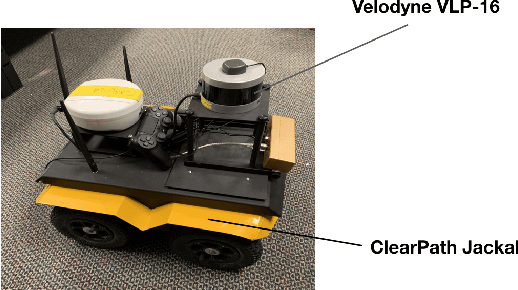

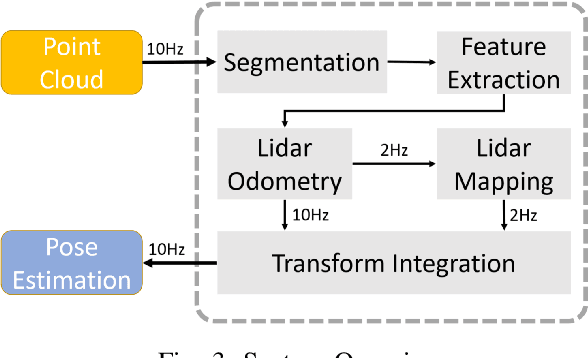

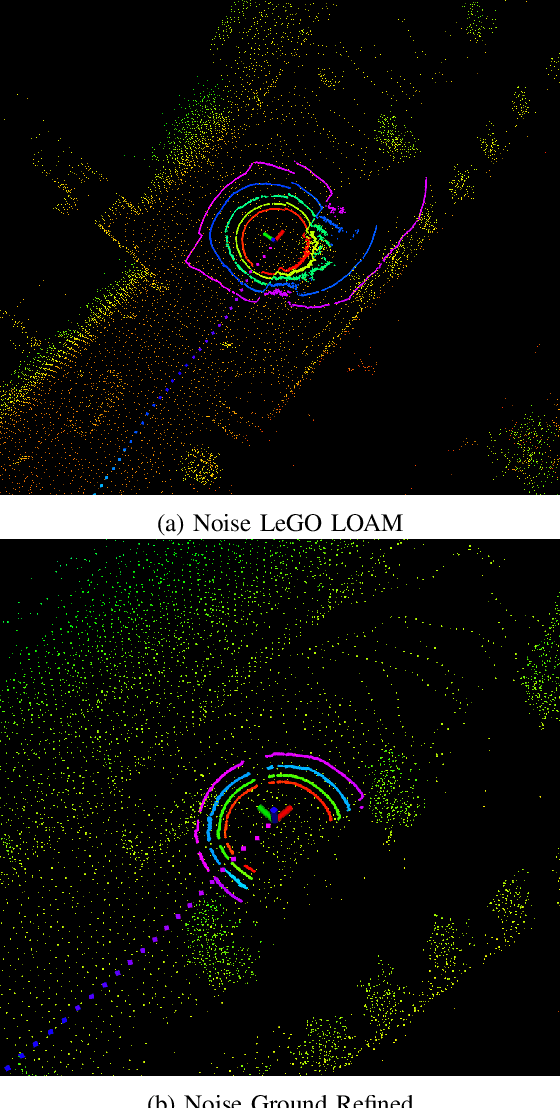

Abstract: SLAM systems that use only point clouds have proven successful in recent years. Most of these systems extract features for tracking after ground removal, which causes large variance along the z-axis. The ground actually provides robust information for estimating $[t_z, \theta_{roll}, \theta_{pitch}]$. In this project, we follow LeGO-LOAM, a lightweight real-time SLAM system that extracts and registers the ground as an addition to the original LOAM, and we propose a new clustering-based method to refine the planar extraction algorithm for the ground so that the system can handle much noisier or more dynamic environments. We implemented this method and compared it with LeGO-LOAM on data we collected on the CMU campus, as well as on a dataset collected for an ATV (All-Terrain Vehicle) for off-road self-driving. Both visualization and evaluation results show a clear improvement from our algorithm.
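To illustrate why a ground plane constrains exactly $[t_z, \theta_{roll}, \theta_{pitch}]$, here is a minimal sketch. It is not the clustering-based refinement described in the abstract: it is a plain least-squares plane fit on points already labeled as ground, and the function names `fit_ground_plane` and `ground_constraints` are hypothetical. It assumes a sensor frame with z pointing roughly up and a ZYX (yaw-pitch-roll) Euler convention; yaw and the x/y translation remain unobservable from the plane alone.

```python
import math

def fit_ground_plane(points):
    """Least-squares fit of z = a*x + b*y + c to a list of (x, y, z) ground points."""
    n = float(len(points))
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)

    # Normal equations A @ [a, b, c] = rhs, solved with Cramer's rule.
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]

    def det3(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

    d = det3(A)
    if abs(d) < 1e-12:
        raise ValueError("degenerate ground points: cannot fit a plane")

    coeffs = []
    for col in range(3):
        m = [row[:] for row in A]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(det3(m) / d)
    return tuple(coeffs)

def ground_constraints(a, b, c):
    """Return (height, roll, pitch) implied by the plane z = a*x + b*y + c.

    Assumed convention: the unit normal (pointing up) expressed in the sensor
    frame equals R^T e_z with R = Rz(yaw) Ry(pitch) Rx(roll).
    """
    norm = math.sqrt(a * a + b * b + 1.0)
    nx, ny, nz = -a / norm, -b / norm, 1.0 / norm
    height = -c / norm                      # sensor height above the plane
    roll = math.atan2(ny, nz)
    pitch = math.atan2(-nx, math.hypot(ny, nz))
    return height, roll, pitch
```

A plain fit like this is sensitive to non-ground points and moving objects, which is the kind of weakness the abstract's refinement targets.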

Residual Attention Network for Image Classification

Apr 23, 2017

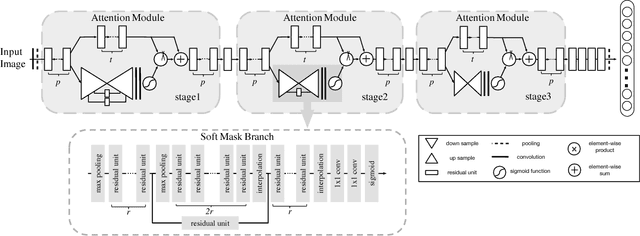

Abstract: In this work, we propose the "Residual Attention Network", a convolutional neural network using an attention mechanism that can be incorporated with state-of-the-art feedforward network architectures in an end-to-end training fashion. Our Residual Attention Network is built by stacking Attention Modules which generate attention-aware features. The attention-aware features from different modules change adaptively as layers go deeper. Inside each Attention Module, a bottom-up top-down feedforward structure is used to unfold the feedforward and feedback attention process into a single feedforward process. Importantly, we propose attention residual learning to train very deep Residual Attention Networks which can be easily scaled up to hundreds of layers. Extensive analyses are conducted on the CIFAR-10 and CIFAR-100 datasets to verify the effectiveness of every module mentioned above. Our Residual Attention Network achieves state-of-the-art object recognition performance on three benchmark datasets including CIFAR-10 (3.90% error), CIFAR-100 (20.45% error) and ImageNet (4.8% single model and single crop, top-5 error). Note that our method achieves a 0.6% top-1 accuracy improvement with 46% of the trunk depth and 69% of the forward FLOPs compared to ResNet-200. The experiment also demonstrates that our network is robust against noisy labels.
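The abstract does not spell out attention residual learning, so the following is only a sketch of the idea as formulated in the published paper: the module output is $H(x) = (1 + M(x)) \cdot F(x)$, where $F(x)$ is the trunk-branch feature and $M(x) \in [0, 1]$ is the soft mask. The function names are hypothetical, and the feature map is flattened to a plain list for illustration; a real network would apply this element-wise to tensors.

```python
import math

def sigmoid(v):
    """Squash a mask logit into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))

def attention_residual(trunk_features, mask_logits):
    """Combine trunk features F with a soft mask M as H = (1 + M) * F.

    Because M lies in (0, 1), the mask can only amplify features by a factor
    between 1 and 2; a mask near zero leaves the trunk features almost
    unchanged, like an identity mapping.
    """
    if len(trunk_features) != len(mask_logits):
        raise ValueError("trunk features and mask logits must have the same length")
    return [(1.0 + sigmoid(m)) * f for f, m in zip(trunk_features, mask_logits)]
```

Compared with plain masking, $H(x) = M(x) \cdot F(x)$, the added identity term keeps stacked modules from repeatedly shrinking the features, which is one reason such networks can be made deep.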
