Md. Sabbir Ahmed

ForCM: Forest Cover Mapping from Multispectral Sentinel-2 Image by Integrating Deep Learning with Object-Based Image Analysis

Dec 29, 2025

Abstract: This research proposes "ForCM", a novel approach to forest cover mapping that combines Object-Based Image Analysis (OBIA) with Deep Learning (DL) using multispectral Sentinel-2 imagery. The study explores several DL models, including UNet, UNet++, ResUNet, AttentionUNet, and ResNet50-Segnet, applied to high-resolution Sentinel-2 Level 2A satellite images of the Amazon Rainforest. The datasets comprise three collections: two sets of three-band imagery and one set of four-band imagery. After evaluation, the most effective DL models are individually integrated with the OBIA technique to enhance mapping accuracy. The originality of this work lies in evaluating different deep learning models combined with OBIA and comparing them with traditional OBIA methods. The results show that the proposed ForCM method improves forest cover mapping, achieving overall accuracies of 94.54 percent with ResUNet-OBIA and 95.64 percent with AttentionUNet-OBIA, compared to 92.91 percent using traditional OBIA. This research also demonstrates the potential of free and user-friendly tools such as QGIS for accurate mapping within their limitations, supporting global environmental monitoring and conservation efforts.
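The overall accuracy figures quoted in the abstract above are, by the usual definition, the share of correctly classified samples among all evaluated samples. A minimal sketch of that calculation follows; the function name `overall_accuracy` and the confusion-matrix counts are hypothetical and chosen only for illustration, not taken from the paper.

```python
def overall_accuracy(confusion):
    """Return the fraction of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


# Hypothetical counts (NOT from the paper):
# rows = reference class, columns = predicted class, order = (forest, non-forest).
confusion = [
    [480, 20],
    [30, 470],
]

print(f"Overall accuracy: {overall_accuracy(confusion):.2%}")
```

Overall accuracy alone can hide weak performance on a minority class, so it is often reported alongside per-class measures.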

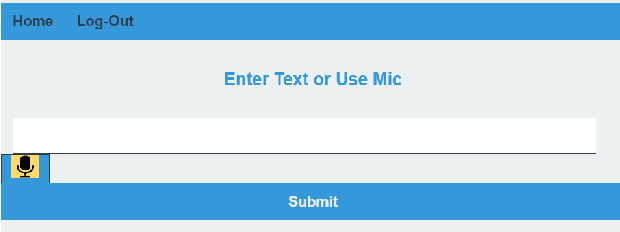

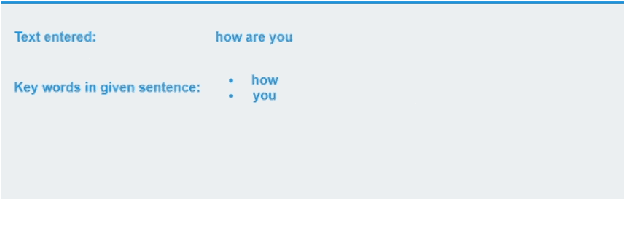

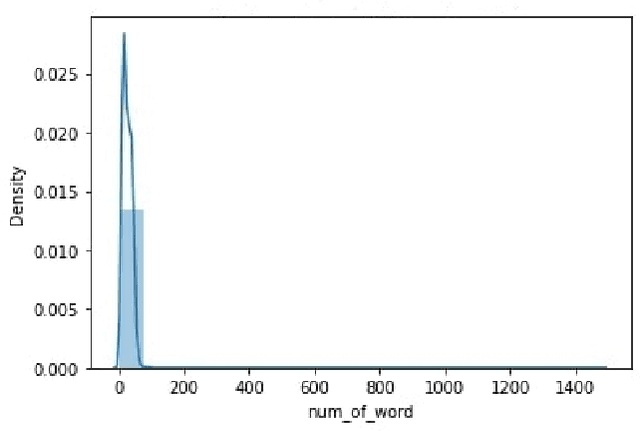

Enhancing Bidirectional Sign Language Communication: Integrating YOLOv8 and NLP for Real-Time Gesture Recognition & Translation

Nov 18, 2024

Abstract: This research aims to capture American Sign Language (ASL) from real-time camera footage and convert it into text. We also focus on building a framework that converts text into sign language in real time, which can help break the language barrier for people who need it. To recognise ASL, we use the You Only Look Once (YOLO) model and a Convolutional Neural Network (CNN) model. The YOLO model runs in real time and automatically extracts discriminative spatial-temporal characteristics from the raw video stream without the need for any prior knowledge, eliminating design flaws. The CNN model also runs in real time for sign language detection. We introduce a novel method for converting text-based input to sign language: the framework takes a sentence as input, identifies keywords in that sentence, and then shows, in real time, a video in which the corresponding signs are performed. To the best of our knowledge, this is one of the few studies to demonstrate bidirectional sign language communication in real time in ASL.
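The text-to-sign direction described above (sentence in, keywords identified, sign videos shown) could be sketched roughly as follows. This is an illustrative sketch, not the authors' implementation: the stopword list, the `SIGN_CLIPS` mapping, the clip paths, and the fingerspelling fallback for unknown words are all assumptions introduced here.

```python
import re

# Hypothetical stopword list; the paper does not specify how keywords are chosen.
STOPWORDS = {"a", "an", "the", "is", "are", "am", "to", "of", "and", "i"}

# Hypothetical mapping from keyword to a pre-recorded sign video clip.
SIGN_CLIPS = {
    "hello": "clips/hello.mp4",
    "need": "clips/need.mp4",
    "help": "clips/help.mp4",
}


def extract_keywords(sentence):
    """Lowercase the sentence, split it into words, and drop stopwords."""
    words = re.findall(r"[a-z]+", sentence.lower())
    return [w for w in words if w not in STOPWORDS]


def sentence_to_clips(sentence):
    """Return the ordered list of clip paths to play for a sentence."""
    clips = []
    for word in extract_keywords(sentence):
        if word in SIGN_CLIPS:
            clips.append(SIGN_CLIPS[word])
        else:
            # Assumed fallback: fingerspell unknown words letter by letter.
            clips.extend(f"clips/letters/{ch}.mp4" for ch in word)
    return clips


print(sentence_to_clips("Hello, I need help"))
```

A real system would also need to handle ASL grammar, which differs from English word order; the sketch only covers the keyword lookup step.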

A Comparative Study on COVID-19 Fake News Detection Using Different Transformer Based Models

Aug 02, 2022

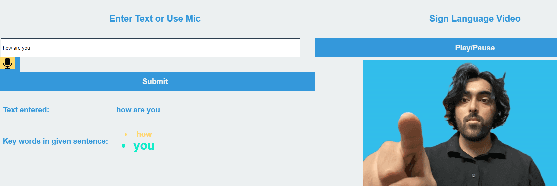

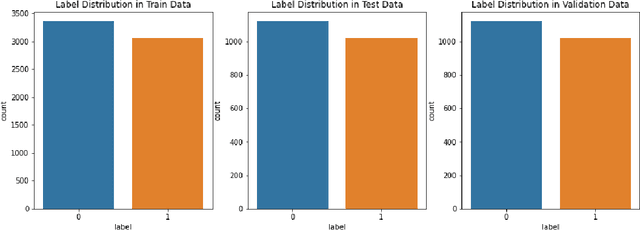

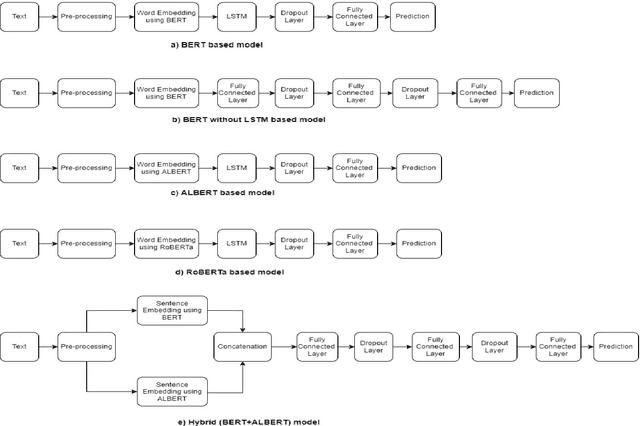

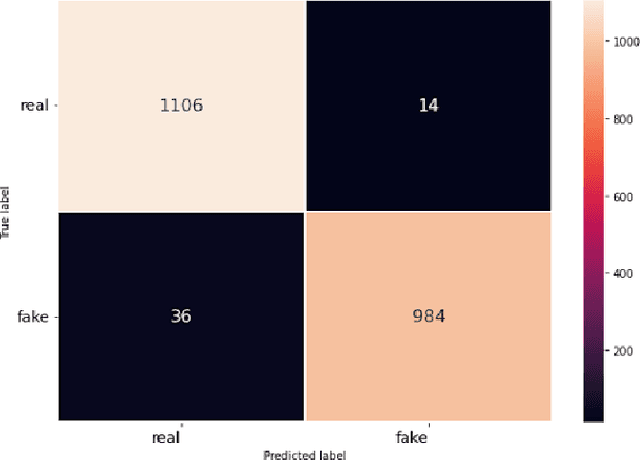

Abstract: The rapid advancement of social networks and the convenience of internet access have accelerated the spread of false news and rumors on social media sites. Amid the COVID-19 epidemic, this misleading information has aggravated the situation by endangering people's mental and physical health. To limit the spread of such inaccuracies, identifying fake news on online platforms is a first and foremost step. In this research, the authors conduct a comparative analysis of five transformer-based models (BERT, BERT without LSTM, ALBERT, RoBERTa, and a hybrid of BERT and ALBERT) for detecting COVID-19 fake news on the internet. The COVID-19 Fake News Dataset is used for training and testing the models. Among these models, RoBERTa performs best, obtaining an F1 score of 0.98 on both the real and fake classes.
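The per-class F1 score reported above is the harmonic mean of precision and recall, computed separately for each class. The sketch below shows that calculation in plain Python; the function name `f1_per_class` and the toy labels are hypothetical and are not drawn from the paper's dataset or results.

```python
def f1_per_class(y_true, y_pred, labels):
    """Compute the F1 score for each label, treating it as the positive class."""
    scores = {}
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0.0 when the class never appears.
        denom = 2 * tp + fp + fn
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores


# Toy labels for illustration only (NOT the paper's data).
y_true = ["real", "real", "fake", "fake"]
y_pred = ["real", "fake", "fake", "fake"]

for label, score in f1_per_class(y_true, y_pred, ["real", "fake"]).items():
    print(f"{label}: F1 = {score:.3f}")
```

Reporting F1 for both classes, as the abstract does, shows whether a model is equally reliable at flagging fake items and at passing real ones.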
