Rafeed Rahman

PlateSegFL: A Privacy-Preserving License Plate Detection Using Federated Segmentation Learning

Apr 07, 2024

Abstract: Automatic License Plate Recognition (ALPR) is an integral component of intelligent transport systems, with extensive applications in secure transportation, vehicle-to-vehicle communication, stolen vehicle detection, traffic violations, and traffic flow management. Existing license plate detection systems focus on one-shot learners or pre-trained models that operate with a geometric bounding box, which limits model performance. Furthermore, uploading continuous video data streams to a central server creates network and complexity issues. To combat this, PlateSegFL was introduced, which implements U-Net-based segmentation along with Federated Learning (FL). U-Net is well suited to multi-class image segmentation tasks because it can analyze a large number of classes and generate a pixel-level segmentation map for each class. Federated Learning is used to reduce the quantity of data required while safeguarding the user's privacy. Different computing platforms, such as mobile phones, can collaborate on the development of a standard prediction model. This approach makes efficient use of time, incorporates more diverse data, delivers predictions in real time, and requires no physical effort from the user, resulting in an F1 score of around 95%.
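The abstract does not say which aggregation rule PlateSegFL uses. As a rough illustration of how devices can collaborate on one model without uploading raw video, the sketch below assumes weighted federated averaging (FedAvg), with each client's model flattened to a plain list of floats. The function name `federated_average` and the toy numbers are hypothetical and not taken from the paper.

```python
def federated_average(client_weights, client_sizes):
    """Average client model weights, weighted by local dataset size.

    client_weights: list of per-client weight vectors (lists of floats),
                    all of the same length (assumed flattened model weights).
    client_sizes:   number of local training samples held by each client.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one size per client and at least one client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")

    averaged = [0.0] * len(client_weights[0])
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != len(averaged):
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            # Each client contributes in proportion to its share of the data.
            averaged[i] += w * size / total
    return averaged


# Toy round: two hypothetical clients with 1 and 3 local samples.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

Only the weight vectors leave each device in this scheme; the images used for local training stay where they were captured.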

A Comparative Study on COVID-19 Fake News Detection Using Different Transformer Based Models

Aug 02, 2022

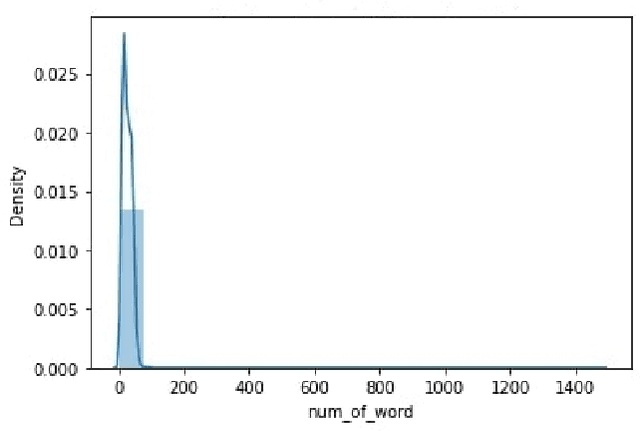

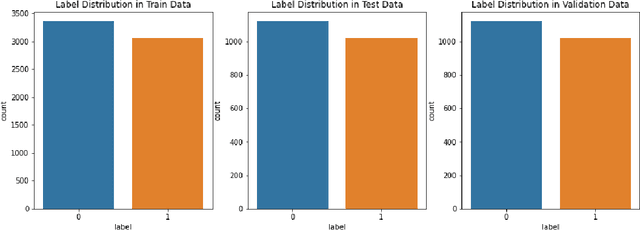

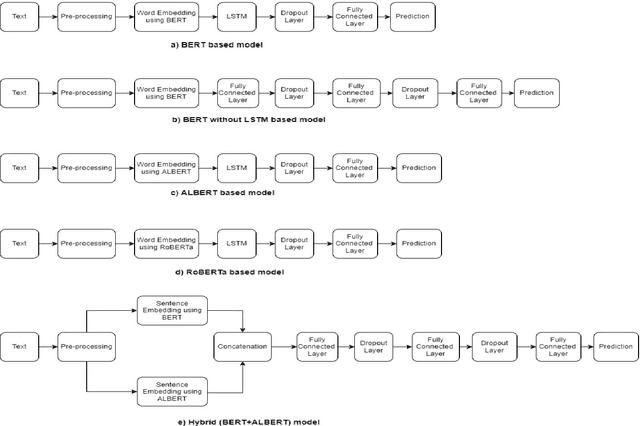

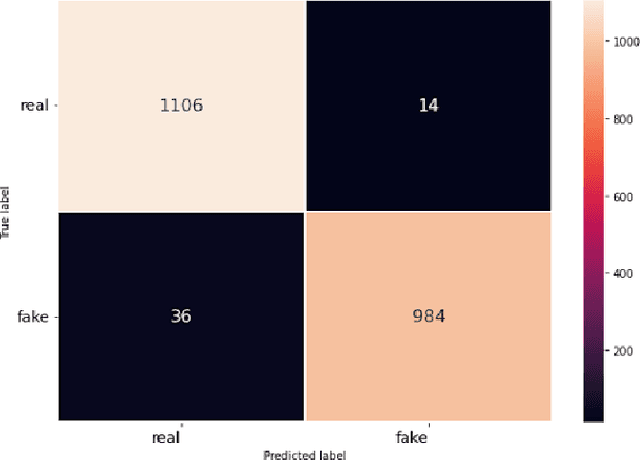

Abstract: The rapid advancement of social networks and the convenience of internet availability have accelerated the rampant spread of false news and rumors on social media sites. Amid the COVID-19 epidemic, this misleading information has aggravated the situation by putting people's mental and physical health in danger. To limit the spread of such inaccuracies, identifying fake news on online platforms could be the first and foremost step. In this research, the authors conduct a comparative analysis by implementing five transformer-based models (BERT, BERT without LSTM, ALBERT, RoBERTa, and a hybrid of BERT and ALBERT) to detect fake COVID-19 news on the internet. The COVID-19 Fake News Dataset is used for training and testing the models. Among these models, RoBERTa performs best, obtaining an F1 score of 0.98 in both the real and fake classes.
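For readers unfamiliar with the metric, a per-class F1 score is the harmonic mean of precision and recall for that class. The sketch below computes it from raw counts; the function name and the counts in the usage line are hypothetical and are not results from the paper.

```python
def f1_score(true_pos, false_pos, false_neg):
    """Return the F1 score for one class from its confusion counts."""
    predicted = true_pos + false_pos   # items the model labeled as this class
    actual = true_pos + false_neg      # items that truly belong to this class
    precision = true_pos / predicted if predicted else 0.0
    recall = true_pos / actual if actual else 0.0
    if precision + recall == 0:
        return 0.0
    # Harmonic mean of precision and recall.
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts for the "fake" class, chosen only for illustration.
fake_f1 = f1_score(true_pos=98, false_pos=2, false_neg=2)
```

Reporting the score separately for the real and fake classes, as the abstract does, shows whether a model favors one class over the other.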
