Mohammad Zavid Parvez

ForCM: Forest Cover Mapping from Multispectral Sentinel-2 Image by Integrating Deep Learning with Object-Based Image Analysis

Dec 29, 2025

Abstract: This research proposes "ForCM", a novel approach to forest cover mapping that combines Object-Based Image Analysis (OBIA) with Deep Learning (DL) using multispectral Sentinel-2 imagery. The study explores several DL models, including UNet, UNet++, ResUNet, AttentionUNet, and ResNet50-Segnet, applied to high-resolution Sentinel-2 Level 2A satellite images of the Amazon Rainforest. The datasets comprise three collections: two sets of three-band imagery and one set of four-band imagery. After evaluation, the most effective DL models are individually integrated with the OBIA technique to enhance mapping accuracy. The originality of this work lies in evaluating different deep learning models combined with OBIA and comparing them with traditional OBIA methods. The results show that the proposed ForCM method improves forest cover mapping, achieving overall accuracies of 94.54 percent with ResUNet-OBIA and 95.64 percent with AttentionUNet-OBIA, compared to 92.91 percent using traditional OBIA. This research also demonstrates the potential of free and user-friendly tools such as QGIS for accurate mapping within their limitations, supporting global environmental monitoring and conservation efforts.
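The overall accuracy figures quoted in the abstract are, in the usual remote-sensing sense, the fraction of evaluated pixels whose predicted class matches the reference class. The following is a minimal sketch of that metric only, not the authors' evaluation code; the function name `overall_accuracy` and the toy 2x3 masks are assumptions made for illustration.

```python
import numpy as np

def overall_accuracy(reference, predicted):
    """Fraction of pixels where the predicted label equals the reference label."""
    reference = np.asarray(reference)
    predicted = np.asarray(predicted)
    if reference.shape != predicted.shape:
        raise ValueError("reference and predicted masks must have the same shape")
    return float(np.mean(reference == predicted))

# Hypothetical toy masks: 1 = forest, 0 = non-forest.
reference = np.array([[1, 1, 0],
                      [1, 0, 0]])
predicted = np.array([[1, 1, 0],
                      [0, 0, 0]])

# Five of the six pixels agree between the two masks.
oa = overall_accuracy(reference, predicted)
print(f"Overall accuracy: {oa:.2%}")
```

Overall accuracy treats every pixel equally, so on scenes dominated by one class it is often reported alongside per-class metrics.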

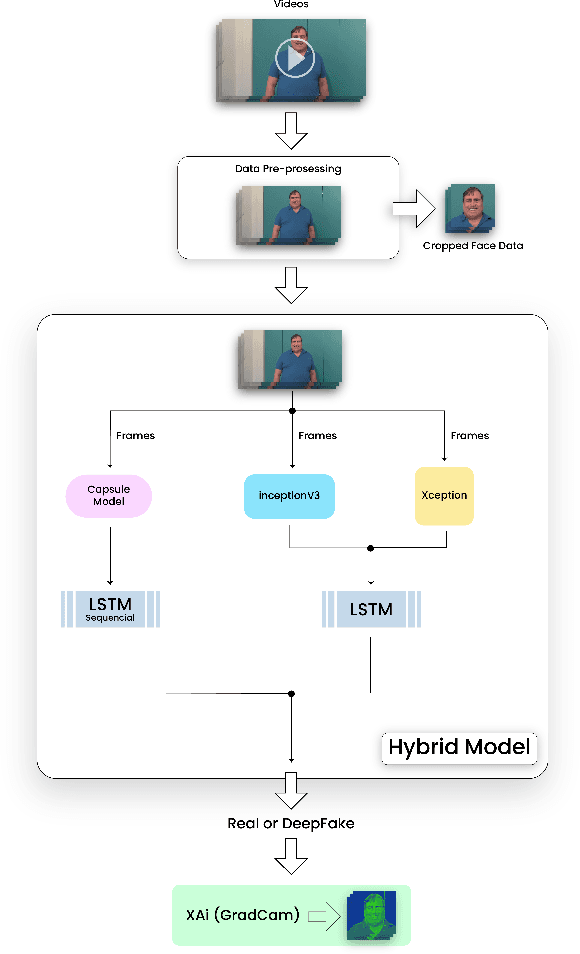

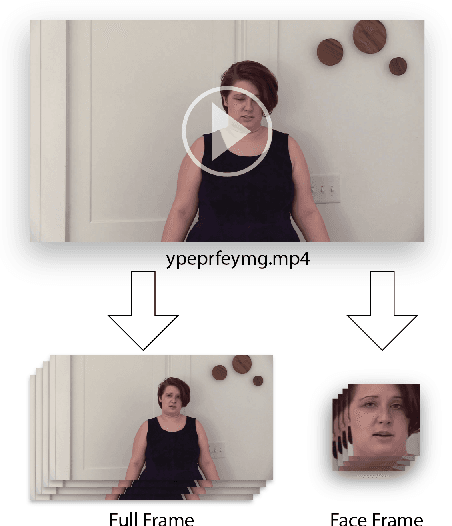

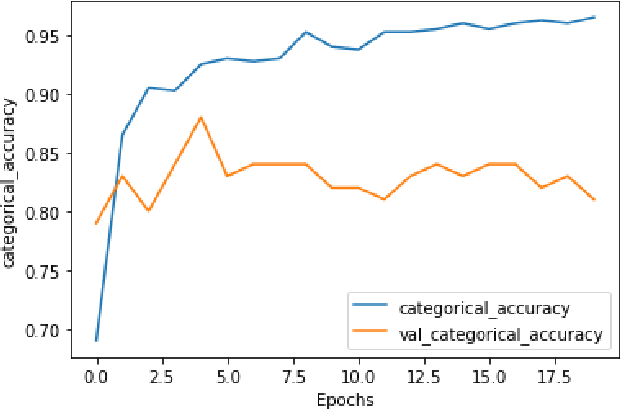

Explainable Deepfake Video Detection using Convolutional Neural Network and CapsuleNet

Apr 19, 2024

Abstract: Deepfake technology, derived from deep learning, seamlessly inserts individuals into digital media, irrespective of their actual participation. Its foundation lies in machine learning and Artificial Intelligence (AI). Initially, deepfakes served research, industry, and entertainment. While the concept has existed for decades, recent advancements render deepfakes nearly indistinguishable from reality. Accessibility has soared, empowering even novices to create convincing deepfakes. However, this accessibility raises security concerns. The primary deepfake creation algorithm, GAN (Generative Adversarial Network), employs machine learning to craft realistic images or videos. Our objective is to utilize CNN (Convolutional Neural Network) and CapsuleNet with LSTM to differentiate between deepfake-generated frames and originals. Furthermore, we aim to elucidate our model's decision-making process through Explainable AI, fostering transparent human-AI relationships and offering practical examples for real-life scenarios.
