Md Mostafa Kamal Sarker

Segmentation Framework for Heat Loss Identification in Thermal Images: Empowering Scottish Retrofitting and Thermographic Survey Companies

Aug 07, 2023

Abstract: Retrofitting and thermographic survey (TS) companies in Scotland collaborate with social housing providers to tackle fuel poverty. They employ ground-level infrared (IR) camera-based TSs (GIRTSs) to collect thermal images and identify the heat loss sources resulting from poor insulation. However, this identification process is labor-intensive and time-consuming, necessitating extensive data processing. To automate this, an AI-driven approach is necessary. Therefore, this study proposes a deep learning (DL)-based segmentation framework using the Mask Region Proposal Convolutional Neural Network (Mask RCNN) to validate its applicability to these thermal images. The objective of the framework is to automatically identify and crop heat loss sources caused by weak insulation, while also eliminating obstructive objects present in those images. By doing so, it minimizes labor-intensive tasks and provides an automated, consistent, and reliable solution. To validate the proposed framework, approximately 2500 thermal images were collected in collaboration with an industrial TS partner. Then, 1800 representative images were carefully selected with the assistance of experts and annotated to highlight the target objects (TO) to form the final dataset. Subsequently, a transfer learning strategy was employed to train on the dataset, progressively augmenting the training data volume and fine-tuning the pre-trained baseline Mask RCNN. As a result, the final fine-tuned model achieved a mean average precision (mAP) score of 77.2% for segmenting the TO, demonstrating the significant potential of the proposed framework in accurately quantifying energy loss in Scottish homes.
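The "identify and crop" step described in the abstract can be illustrated with a minimal sketch. It assumes a segmentation model has already produced a binary mask for one target object; the function names `mask_bounding_box` and `crop_with_mask`, the nested-list image representation, and the zero fill value are all hypothetical choices for illustration, not details taken from the paper.

```python
def mask_bounding_box(mask):
    """Return (top, left, bottom, right) of the nonzero region, or None if empty.

    `mask` is a list of rows of 0/1 values (hypothetical representation).
    `bottom` and `right` are exclusive bounds.
    """
    if not mask or not mask[0]:
        return None
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows or not cols:
        return None
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


def crop_with_mask(image, mask, fill=0):
    """Crop `image` to the mask's bounding box and blank out pixels outside the mask.

    Pixels inside the box but outside the mask are replaced by `fill`,
    which is one simple way to suppress obstructing objects.
    """
    box = mask_bounding_box(mask)
    if box is None:
        return []
    top, left, bottom, right = box
    return [
        [image[r][c] if mask[r][c] else fill for c in range(left, right)]
        for r in range(top, bottom)
    ]


# Toy 3x4 "thermal image" and a mask covering three pixels.
image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]
mask = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 0, 1, 0]]
cropped = crop_with_mask(image, mask)
```

In practice the mask would come from the fine-tuned segmentation model and the image would be an array rather than nested lists; the sketch only shows how a predicted mask can drive the cropping.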

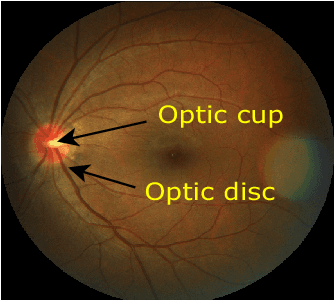

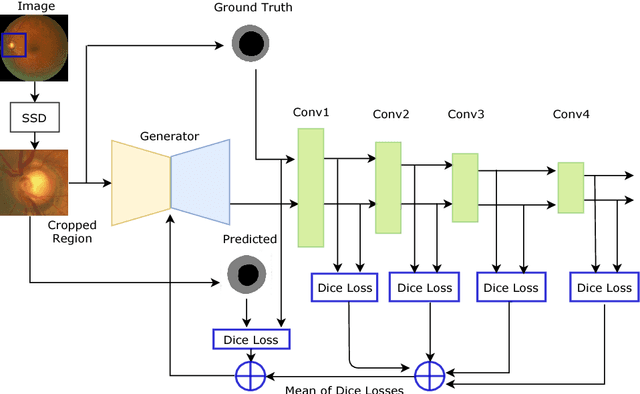

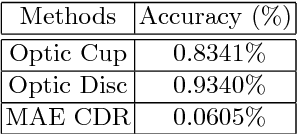

REFUGE CHALLENGE 2018-Task 2: Deep Optic Disc and Cup Segmentation in Fundus Images Using U-Net and Multi-scale Feature Matching Networks

Jul 30, 2018

Abstract: In this paper, an optic disc and cup segmentation method is proposed using U-Net followed by a multi-scale feature matching network. The proposed method targets task 2 of the REFUGE challenge 2018. To solve the segmentation problem of task 2, we first crop the input image using a single shot multibox detector (SSD). The cropped image is then passed to an encoder-decoder network with skip connections, also known as the generator. Afterwards, both the ground truth and generated images are fed to a convolutional neural network (CNN) to extract their multi-level features. A dice loss function is then used to match the features of the two images by minimizing the error at each layer. The aggregated error from all layers is back-propagated through the generator network to force it to generate a segmented image closer to the ground truth. The CNN improves the performance of the generator network without increasing the complexity of the model.
