Maximilian Diehl

Guided Demonstrations Using Automated Excuse Generation

Nov 30, 2023

Abstract:Teaching task-level directives to robots via demonstration is a popular tool to expand the robot's capabilities to interact with its environment. While current learning from demonstration systems primarily focus on abstracting the task-level knowledge to the robot, these systems lack the ability to understand which parts of the task can already be solved given the robot's prior knowledge. Instead of only requiring demonstrations of the missing pieces, these systems therefore require a demonstration of the complete task, which is cumbersome, repetitive, and can discourage people from helping the robot by performing the demonstrations. We propose to use the notion of "excuses" to identify the smallest change in the robot state that makes a task, currently not solvable by the robot, solvable, as a means to solicit more targeted demonstrations from a human. These excuses are generated automatically using combinatorial search over possible changes that can be made to the robot's state and choosing the minimum changes that make the task solvable. The excuses then serve as guidance for the demonstrator, who can use them to decide what to demonstrate to the robot in order to make the requested change possible, thereby making the original task solvable for the robot without having to demonstrate it in its entirety. By working with symbolic state descriptions, the excuses can be directly communicated and intuitively understood by a human demonstrator. We show empirically and in a user study that the use of excuses reduces the demonstration time by 54% and leads to a 74% reduction in demonstration size.
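The combinatorial search over state changes described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the operators, the fact names, and the delete-free `solvable` check are hypothetical simplifications chosen only to show how candidate changes can be tried in order of increasing size until the task becomes solvable.

```python
from itertools import combinations

# Hypothetical toy operators: (name, preconditions, add effects).
OPERATORS = [
    ("pick", frozenset({"hand_empty", "cube_reachable"}), frozenset({"holding_cube"})),
    ("place", frozenset({"holding_cube", "knows_place"}), frozenset({"cube_on_table"})),
]


def solvable(state, goal, operators=OPERATORS):
    """Simplified, delete-free reachability check (an assumption of this sketch).

    Operators are applied until no new fact appears; the goal counts as
    solvable if all of its facts have been reached.
    """
    facts = set(state)
    changed = True
    while changed:
        changed = False
        for _, pre, add in operators:
            if pre <= facts and not add <= facts:
                facts |= add
                changed = True
    return set(goal) <= facts


def minimal_excuse(state, goal, candidate_facts):
    """Return a smallest set of candidate facts whose addition makes the goal solvable.

    Candidate sets are enumerated by increasing size, so the first hit is
    minimal in the number of changes. Returns None if no subset helps.
    """
    candidates = sorted(set(candidate_facts) - set(state))
    for size in range(len(candidates) + 1):
        for change in combinations(candidates, size):
            if solvable(set(state) | set(change), goal):
                return set(change)
    return None


if __name__ == "__main__":
    excuse = minimal_excuse(
        state={"hand_empty", "cube_reachable"},
        goal={"cube_on_table"},
        candidate_facts={"knows_place", "gripper_calibrated"},
    )
    print(excuse)  # the single missing fact in this toy domain
```

In this toy domain the returned excuse names the one fact the robot lacks, which is what a demonstrator would be asked to supply. Exhaustive enumeration grows exponentially with the number of candidate changes, so a practical system would likely need pruning or heuristics.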

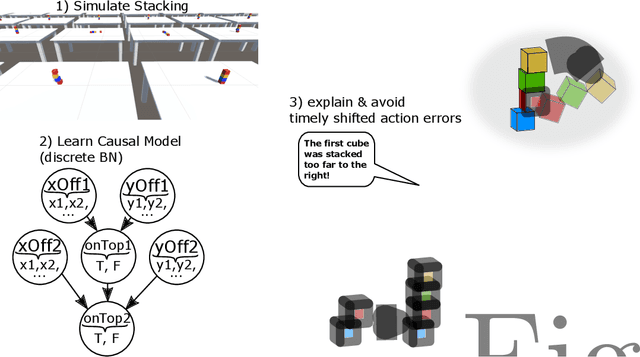

A Causal-based Approach to Explain, Predict and Prevent Failures in Robotic Tasks

Sep 12, 2022

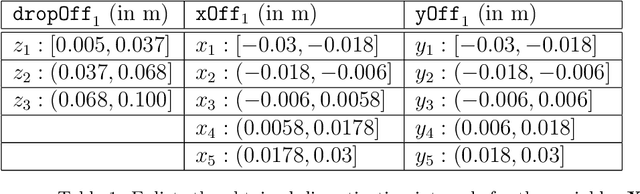

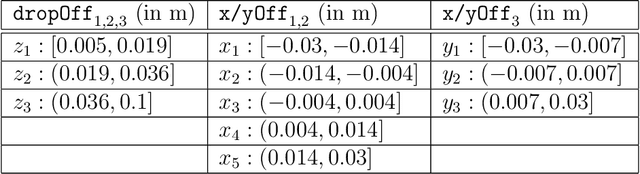

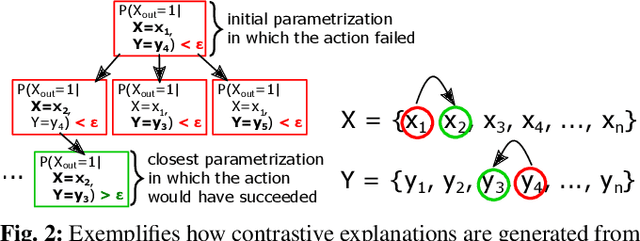

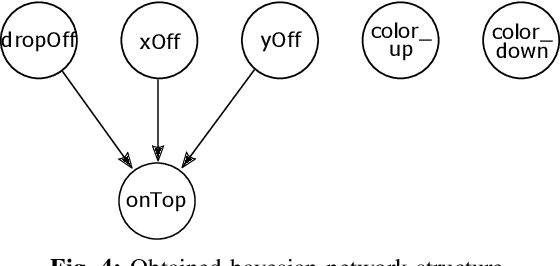

Abstract:Robots working in real environments need to adapt to unexpected changes to avoid failures. This is an open and complex challenge that requires robots to predict failures and identify their causes in time to prevent them. In this paper, we present a causal method that enables robots to predict when errors are likely to occur and to prevent them by executing a corrective action. First, we propose a causal-based method to detect the cause-effect relationships between task executions and their consequences by learning a causal Bayesian network (BN). The obtained model is transferred from simulated data to real scenarios to demonstrate its robustness and generalization. Based on the causal BN, the robot can predict if and why the executed action will succeed or not in its current state. Then, we introduce a novel method that finds the closest state alternatives through a contrastive Breadth-First-Search if the current action was predicted to fail. We evaluate our approach on the problem of stacking cubes in two cases: a) single stacks (stacking one cube) and b) multiple stacks (stacking three cubes). In the single-stack case, our method was able to reduce the error rate by 97%. We also show that our approach can scale to capture multiple actions in one model, allowing us to measure time-shifted action effects, such as the impact of an imprecise stack of the first cube on the stacking success of the third cube. For these complex situations, our model was able to prevent around 75% of the stacking errors, even for the challenging multiple-stack scenario. This demonstrates that our method is able to explain, predict, and prevent execution failures, and that it scales to complex scenarios that require an understanding of how the action history impacts future actions.

Why did I fail? A Causal-based Method to Find Explanations for Robot Failures

Apr 09, 2022

Abstract:Robot failures in human-centered environments are inevitable. Therefore, the ability of robots to explain such failures is paramount for interacting with humans to increase trust and transparency. To achieve this skill, the main challenges addressed in this paper are I) acquiring enough data to learn a cause-effect model of the environment and II) generating causal explanations based on that model. We address I) by learning a causal Bayesian network from simulation data. Concerning II), we propose a novel method that enables robots to generate contrastive explanations upon task failures. The explanation is based on setting the failure state in contrast with the closest state that would have allowed for successful execution, which is found through breadth-first search and is based on success predictions from the learned causal model. We assess the sim2real transferability of the causal model on a cube stacking scenario. Based on real-world experiments with two differently embodied robots, we achieve a sim2real accuracy of 70% without any adaptation or retraining. Our method thus allowed real robots to give failure explanations like, 'the upper cube was dropped too high and too far to the right of the lower cube.'
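The contrastive search described in this abstract can be sketched as a breadth-first search over discretised states. Everything below is an illustrative assumption: the variable names, their value ranges, and the hand-written `predict_success` rule, which stands in for the learned causal Bayesian network and is not the authors' model.

```python
from collections import deque

# Hypothetical discretised variables with ordered value ranges.
VALUES = {
    "x_offset": ["left", "centered", "right"],
    "drop_height": ["low", "medium", "high"],
}


def predict_success(state):
    """Stand-in for the learned model: a hand-written rule, not a Bayesian network."""
    return state["x_offset"] == "centered" and state["drop_height"] == "low"


def neighbours(state):
    """Yield states that differ from `state` by one step in exactly one variable."""
    for var, ordered in VALUES.items():
        i = ordered.index(state[var])
        for j in (i - 1, i + 1):
            if 0 <= j < len(ordered):
                yield {**state, var: ordered[j]}


def closest_success(failure_state):
    """Breadth-first search for the nearest state predicted to succeed."""
    queue = deque([failure_state])
    seen = {tuple(sorted(failure_state.items()))}
    while queue:
        state = queue.popleft()
        if predict_success(state):
            return state
        for nxt in neighbours(state):
            key = tuple(sorted(nxt.items()))
            if key not in seen:
                seen.add(key)
                queue.append(nxt)
    return None


def contrast(failure_state, success_state):
    """Map each differing variable to its (failed value, successful value) pair."""
    return {
        var: (failure_state[var], success_state[var])
        for var in failure_state
        if failure_state[var] != success_state[var]
    }


if __name__ == "__main__":
    failed = {"x_offset": "right", "drop_height": "high"}
    print(contrast(failed, closest_success(failed)))
```

The differing variables returned by `contrast` are the raw material for an explanation of the kind quoted above, for example that the cube was dropped too high and too far to the right.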

Optimizing robot planning domains to reduce search time for long-horizon planning

Nov 09, 2021

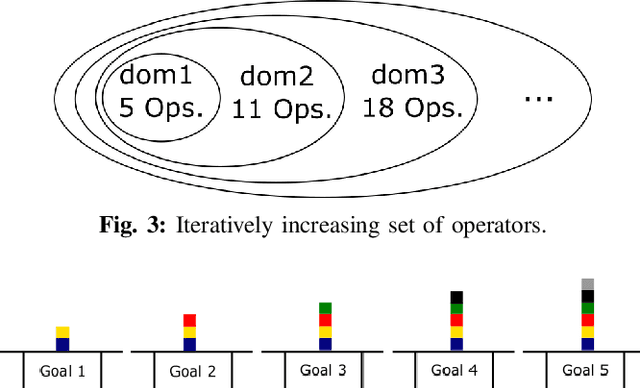

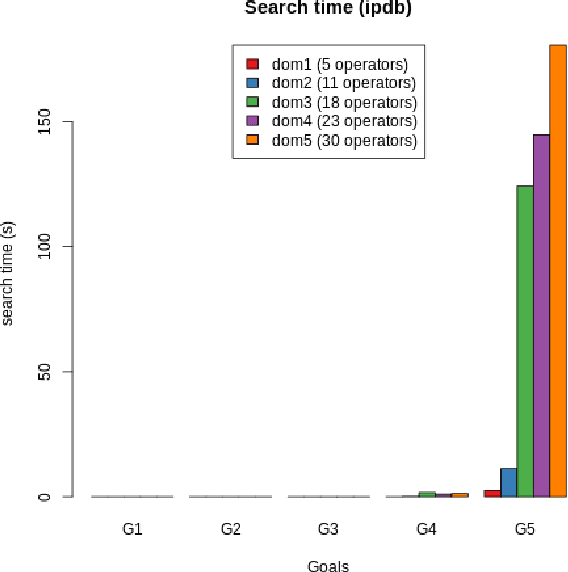

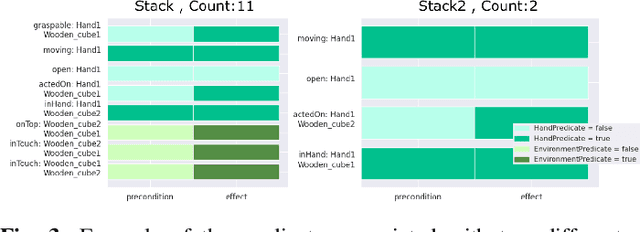

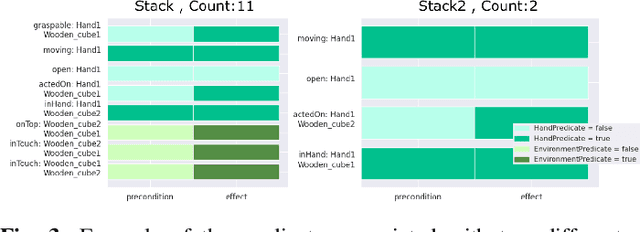

Abstract:We have recently introduced a system that automatically generates robotic planning operators from human demonstrations. One feature of our system is the operator count, which keeps track of the application frequency of every operator within the demonstrations. In this extended abstract, we show that we can use the count to slim down domains with the goal of decreasing the search time for long-horizon planning goals. The conceptual idea behind our approach is that we would like to prioritize operators that have occurred more often in the demonstrations over those that were not observed so frequently. We, therefore, propose to limit the domain only to the most popular operators. If this subset of operators is not sufficient to find a plan, we iteratively expand this subset of operators. We show that this significantly reduces the search time for long-horizon planning goals.
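The iterative expansion of the operator subset can be sketched as follows. This is a minimal illustration under stated assumptions: `plan_with_growing_domain`, `toy_planner`, the operator names, and the counts are all hypothetical, and the toy planner merely stands in for a real symbolic planner.

```python
def plan_with_growing_domain(operators, counts, try_plan, start_size=1, step=1):
    """Try planning with the most frequently observed operators first.

    `counts` maps each operator to how often it appeared in demonstrations.
    `try_plan(subset)` returns a plan or None. The subset grows by `step`
    (assumed >= 1) operators whenever planning fails.
    Returns (plan, subset_used); plan is None if even the full domain fails.
    """
    ranked = sorted(operators, key=lambda op: counts.get(op, 0), reverse=True)
    size = min(start_size, len(ranked))
    while True:
        subset = ranked[:size]
        plan = try_plan(subset)
        if plan is not None:
            return plan, subset
        if size >= len(ranked):
            return None, ranked
        size = min(size + step, len(ranked))


def toy_planner(subset):
    """Hypothetical planner stub: succeeds only if both needed operators are present."""
    needed = {"pick", "stack"}
    return ["pick", "stack"] if needed <= set(subset) else None


if __name__ == "__main__":
    ops = ["pick", "place", "push", "stack"]
    counts = {"pick": 9, "place": 8, "stack": 3, "push": 1}
    plan, used = plan_with_growing_domain(ops, counts, toy_planner)
    print(plan, used)
```

In this toy run the rarely observed `push` operator is never added to the domain, which is the intended source of the search-time saving: the planner only ever branches over operators that were needed or seen often.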

Work in Progress -- Automated Generation of Robotic Planning Domains from Observations

Jul 09, 2021

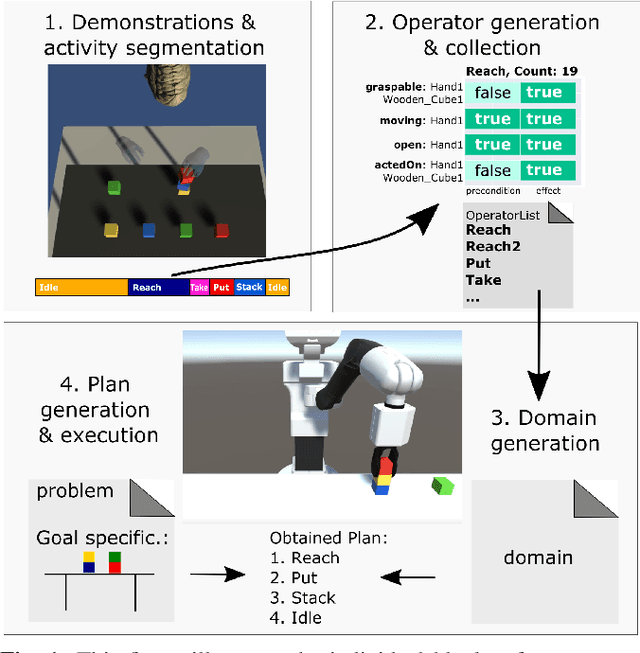

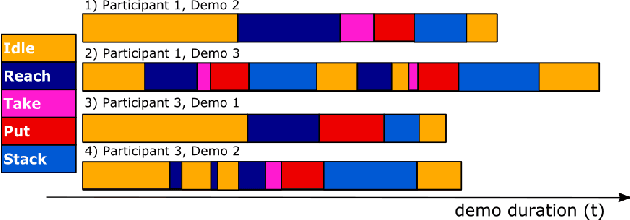

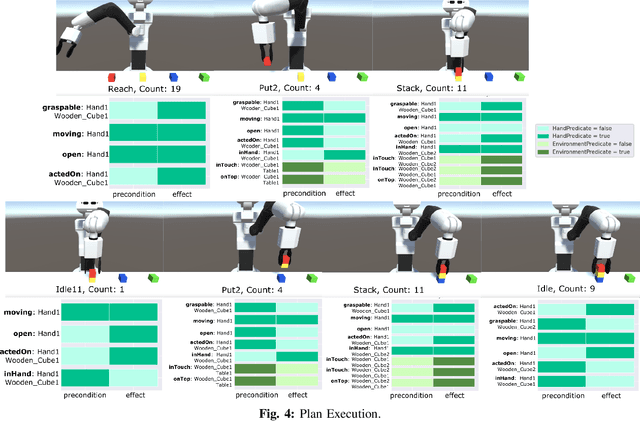

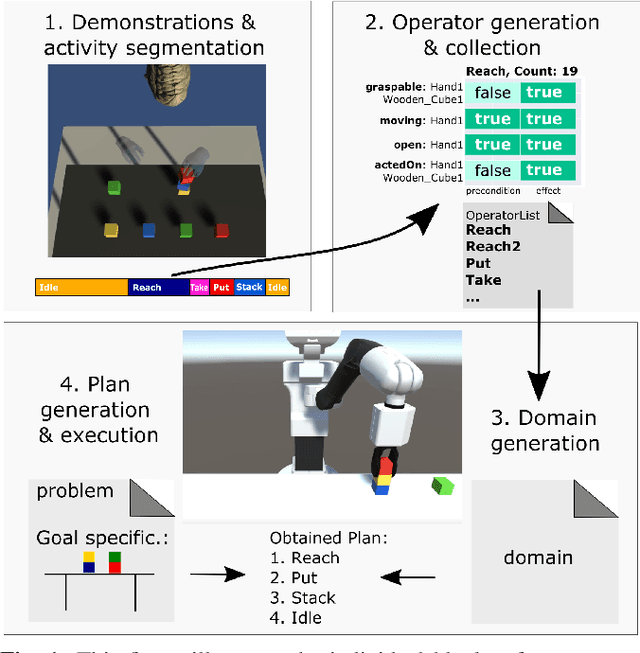

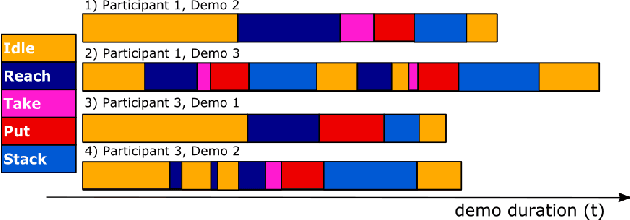

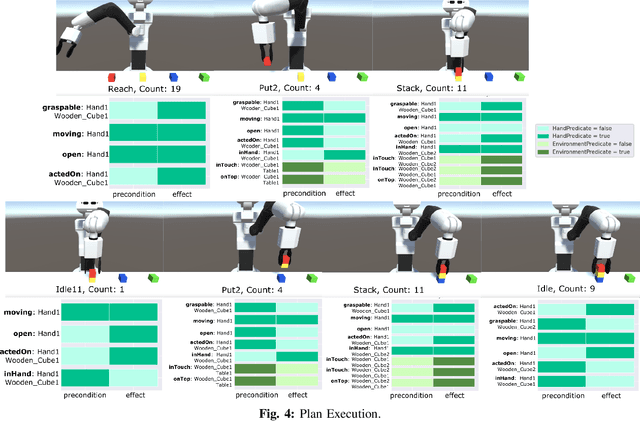

Abstract:In this paper, we report the results of our latest work on the automated generation of planning operators from human demonstrations, and we present some of our future research ideas. To automatically generate planning operators, our system segments and recognizes different observed actions from human demonstrations. We then propose an automatic extraction method to detect the relevant preconditions and effects from these demonstrations. Finally, our system generates the associated planning operators and finds a sequence of actions that satisfies a user-defined goal using a symbolic planner. The plan is deployed on a simulated TIAGo robot. Our future research directions include learning from and explaining execution failures and detecting cause-effect relationships between demonstrated hand activities and their consequences on the robot's environment. The former is crucial for trust-based and efficient human-robot collaboration and the latter for learning in realistic and dynamic environments.

Automated Generation of Robotic Planning Domains from Observations

May 28, 2021

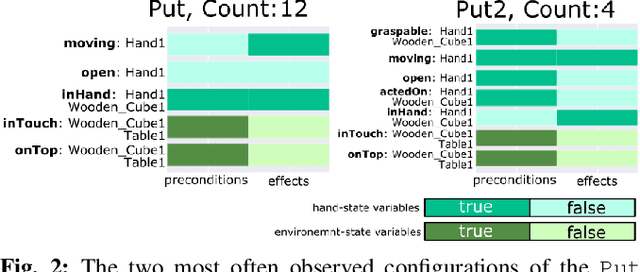

Abstract:Automated planning enables robots to find plans to achieve complex, long-horizon tasks, given a planning domain. This planning domain consists of a list of actions, with their associated preconditions and effects, and is usually manually defined by a human expert, which is very time-consuming or even infeasible. In this paper, we introduce a novel method for generating this domain automatically from human demonstrations. First, we automatically segment and recognize the different observed actions from human demonstrations. From these demonstrations, the relevant preconditions and effects are obtained, and the associated planning operators are generated. Finally, a sequence of actions that satisfies a user-defined goal can be planned using a symbolic planner. The generated plan is executed in a simulated environment by the TIAGo robot. We tested our method on a dataset of 12 demonstrations collected from three different participants. The results show that our method is able to generate executable plans from a single demonstration with a 92% success rate, and with a 100% success rate when the information from all demonstrations is included, even for previously unknown stacking goals.
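One simple way to obtain preconditions and effects from observed state transitions is sketched below. The abstract does not specify the authors' extraction method, so this is a generic set-based heuristic chosen for illustration only; `learn_operator` and the fact names are hypothetical.

```python
def learn_operator(transitions):
    """Derive a planning operator from (before, after) fact sets of one action.

    Heuristic assumed for this sketch:
      - preconditions: facts present before every observed execution
      - add effects: facts that newly appear in every execution
      - delete effects: facts that disappear in every execution
    """
    if not transitions:
        raise ValueError("need at least one observed transition")
    befores = [set(before) for before, _ in transitions]
    adds = [set(after) - set(before) for before, after in transitions]
    dels = [set(before) - set(after) for before, after in transitions]
    return {
        "preconditions": set.intersection(*befores),
        "add": set.intersection(*adds),
        "delete": set.intersection(*dels),
    }


if __name__ == "__main__":
    # Two hypothetical observations of a "pick" action.
    pick_observations = [
        ({"hand_empty", "on_table(cube)", "light_on"}, {"holding(cube)", "light_on"}),
        ({"hand_empty", "on_table(cube)"}, {"holding(cube)"}),
    ]
    print(learn_operator(pick_observations))
```

Intersecting over several observations filters out incidental facts such as `light_on`, which hints at why including the information from all demonstrations can give a more reliable domain than a single demonstration.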
