Why did I fail? A Causal-based Method to Find Explanations for Robot Failures


Apr 09, 2022

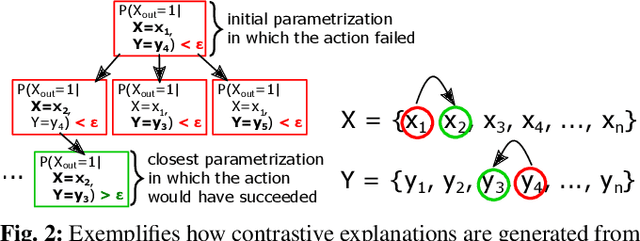

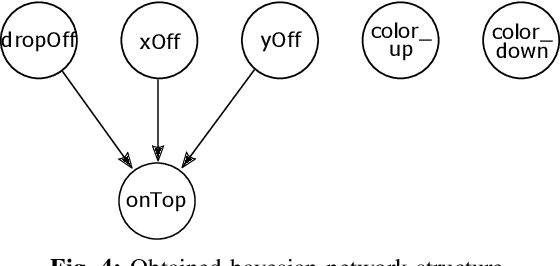

Robot failures in human-centered environments are inevitable. Therefore, the ability of robots to explain such failures is paramount for interacting with humans, as it increases trust and transparency. To achieve this, the paper addresses two main challenges: I) acquiring enough data to learn a cause-effect model of the environment and II) generating causal explanations based on that model. We address I) by learning a causal Bayesian network from simulation data. Concerning II), we propose a novel method that enables robots to generate contrastive explanations upon task failures. The explanation contrasts the failure state with the closest state that would have allowed successful execution; this state is found through breadth-first search using success predictions from the learned causal model. We assess the sim2real transferability of the causal model in a cube-stacking scenario. In real-world experiments with two differently embodied robots, we achieve a sim2real accuracy of 70% without any adaptation or retraining. Our method thus allowed real robots to give failure explanations such as "the upper cube was dropped too high and too far to the right of the lower cube."
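The contrastive search described in the abstract can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The learned causal Bayesian network is replaced by a hand-written stand-in, `p_success`. The variable names (`height`, `x_offset`), their discretization into five bins, the 0.8 success threshold and the phrase table are all hypothetical. Each search step moves one variable by one bin, so the first state taken from the queue whose predicted success clears the threshold is a closest successful state. The explanation then names every variable that differs between the two states.

```python
from collections import deque
from typing import Callable, Dict, Optional, Tuple

# Hypothetical discretized variables: each maps to a range of bin indices.
DOMAINS: Dict[str, range] = {
    "height": range(0, 5),    # 0 = lowest drop height
    "x_offset": range(0, 5),  # 2 = centered over the lower cube, higher = further right
}

# Hypothetical phrases for a (negative, positive) difference "failure minus success".
PHRASES: Dict[str, Tuple[str, str]] = {
    "height": ("too low", "too high"),
    "x_offset": ("too far to the left", "too far to the right"),
}


def p_success(state: Dict[str, int]) -> float:
    """Hypothetical stand-in for the causal model's success prediction (NOT the paper's model)."""
    return 0.9 if state["height"] <= 1 and state["x_offset"] == 2 else 0.2


def closest_success_state(
    failure: Dict[str, int],
    domains: Dict[str, range],
    predict: Callable[[Dict[str, int]], float],
    threshold: float = 0.8,
) -> Optional[Dict[str, int]]:
    """Breadth-first search from the failure state; each step moves one variable by one bin."""
    start = tuple(sorted(failure.items()))
    queue = deque([start])
    visited = {start}
    while queue:
        current = dict(queue.popleft())
        if predict(current) >= threshold:
            return current  # BFS order: first hit needs the fewest single-bin changes
        for var, value in current.items():
            for step in (-1, 1):
                if value + step not in domains[var]:
                    continue  # stay inside the variable's domain
                neighbor = dict(current)
                neighbor[var] = value + step
                key = tuple(sorted(neighbor.items()))
                if key not in visited:
                    visited.add(key)
                    queue.append(key)
    return None  # no reachable state is predicted to succeed


def explain(failure: Dict[str, int], success: Dict[str, int]) -> str:
    """Contrast the failure state with the successful one, variable by variable."""
    parts = []
    for var in sorted(failure):
        diff = failure[var] - success[var]
        if diff != 0:
            parts.append(PHRASES[var][1 if diff > 0 else 0])
    return " and ".join(parts)


failure_state = {"height": 4, "x_offset": 4}
closest = closest_success_state(failure_state, DOMAINS, p_success)
assert closest is not None
reason = explain(failure_state, closest)
print(f"the upper cube was dropped {reason} of the lower cube.")
# prints: the upper cube was dropped too high and too far to the right of the lower cube.
```

With this toy failure state, the search stops at `{"height": 1, "x_offset": 2}`, five single-bin changes away. In a fuller version of this sketch, `p_success` would query the learned causal model for the probability of success given the candidate state.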
