Work in Progress -- Automated Generation of Robotic Planning Domains from Observations


Jul 09, 2021

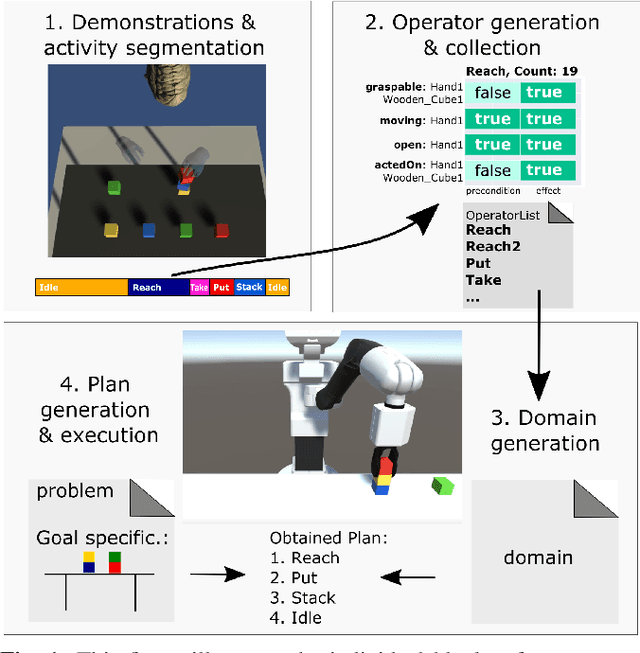

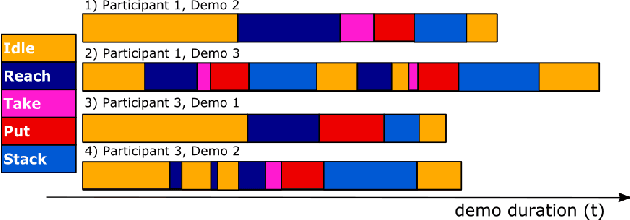

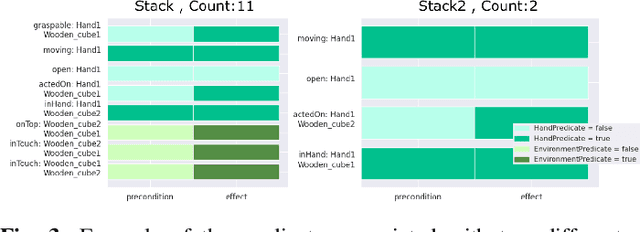

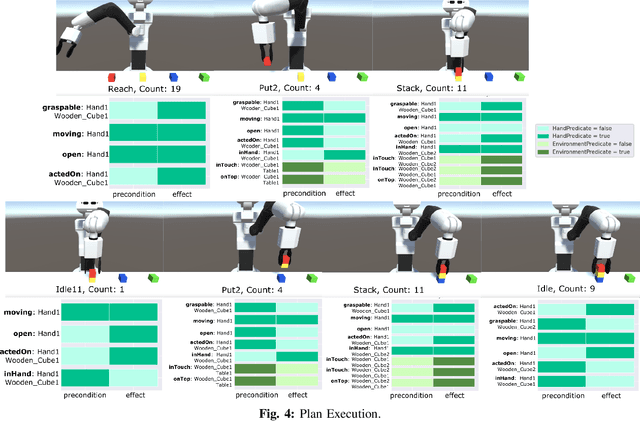

In this paper, we report the results of our latest work on the automated generation of planning operators from human demonstrations and present some of our future research ideas. To generate planning operators automatically, our system segments and recognizes the different actions observed in human demonstrations. We then propose an automatic extraction method that detects the relevant preconditions and effects from these demonstrations. Finally, our system generates the associated planning operators and uses a symbolic planner to find a sequence of actions that satisfies a user-defined goal. The plan is deployed on a simulated TIAGo robot. Our future research directions include learning from and explaining execution failures, and detecting cause-effect relationships between demonstrated hand activities and their consequences on the robot's environment. The former is crucial for trust-based and efficient human-robot collaboration, and the latter for learning in realistic and dynamic environments.
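To make the last two stages of the pipeline concrete, the following is a minimal sketch of how planning operators with preconditions and effects can be represented and searched for a plan that reaches a user-defined goal. It is an illustration under stated assumptions, not the authors' implementation: the `Operator` class, the `plan` function, the predicate names (`hand_empty`, `cup_on_table`, and so on), and the three example operators are all hypothetical, and a plain breadth-first forward search stands in for the symbolic planner used in the paper.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    """A STRIPS-style planning operator (hypothetical representation)."""
    name: str
    preconditions: frozenset   # facts that must hold before the action
    add_effects: frozenset     # facts that become true after the action
    delete_effects: frozenset  # facts that stop being true after the action

    def applicable(self, state):
        return self.preconditions <= state

    def apply(self, state):
        return (state - self.delete_effects) | self.add_effects


def plan(initial, goal, operators):
    """Breadth-first forward search over symbolic states.

    Returns a list of operator names reaching the goal, or None if no plan exists.
    """
    start = frozenset(initial)
    goal = frozenset(goal)
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for op in operators:
            if op.applicable(state):
                successor = op.apply(state)
                if successor not in visited:
                    visited.add(successor)
                    frontier.append((successor, steps + [op.name]))
    return None


# Hypothetical operators, of the kind that might be extracted from demonstrations.
OPERATORS = [
    Operator(
        "reach_cup",
        preconditions=frozenset({"hand_empty"}),
        add_effects=frozenset({"hand_near_cup"}),
        delete_effects=frozenset(),
    ),
    Operator(
        "grasp_cup",
        preconditions=frozenset({"hand_empty", "hand_near_cup", "cup_on_table"}),
        add_effects=frozenset({"holding_cup"}),
        delete_effects=frozenset({"hand_empty", "cup_on_table"}),
    ),
    Operator(
        "place_cup_on_shelf",
        preconditions=frozenset({"holding_cup"}),
        add_effects=frozenset({"cup_on_shelf", "hand_empty"}),
        delete_effects=frozenset({"holding_cup", "hand_near_cup"}),
    ),
]

result = plan({"hand_empty", "cup_on_table"}, {"cup_on_shelf"}, OPERATORS)
print(result)  # ['reach_cup', 'grasp_cup', 'place_cup_on_shelf']
```

Breadth-first search is chosen here only because it is short and returns a shortest plan for small domains; a real deployment would more likely export the operators to a planning language and call an off-the-shelf symbolic planner.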
