Matthieu Simeoni

PolyCLEAN: When Högbom meets Bayes -- Fast Super-Resolution Imaging with Bayesian MAP Estimation

Jun 03, 2024

Abstract:Aims: We address two issues for the adoption of convex optimization in radio interferometric imaging. First, a method for a fine resolution setup is proposed which scales naturally in terms of memory usage and reconstruction speed. Second, a new tool to localize a region of uncertainty is developed, paving the way for quantitative imaging in radio interferometry. Methods: The classical $\ell_1$ penalty is used to turn the inverse problem into a sparsity-promoting optimization. For efficient implementation, the so-called Frank-Wolfe algorithm is used together with a polyatomic refinement. The algorithm naturally produces sparse images at each iteration, leveraged to reduce memory and computational requirements. In that regard, PolyCLEAN reproduces the numerical behavior of CLEAN while guaranteeing that it solves the minimization problem of interest. Additionally, we introduce the dual certificate image, which appears as a numerical byproduct of the Frank-Wolfe algorithm. This image is proposed as a tool for uncertainty quantification on the location of the recovered sources. Results: PolyCLEAN demonstrates good scalability performance, in particular for fine-resolution grids. On simulations, the Python-based implementation is competitive with the fast numerically-optimized CLEAN solver. This acceleration does not affect image reconstruction quality: PolyCLEAN images are consistent with CLEAN-obtained ones for both point sources and diffuse emission recovery. We also highlight PolyCLEAN reconstruction capabilities on observed radio measurements. Conclusions: PolyCLEAN can be considered as an alternative to CLEAN in the radio interferometric imaging pipeline, as it enables the use of Bayesian priors without impacting the scalability and numerical performance of the imaging method.
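For orientation, the $\ell_1$-penalised problem and the dual certificate mentioned in this abstract can be written in the standard LASSO form below. The notation is an assumption and does not come from the abstract: $\Phi$ stands for the measurement operator, $y$ for the measured visibilities, and $\lambda$ for the regularisation weight. The certificate is given in its textbook form for a real-valued image, which may differ in normalisation from the paper's definition.

```latex
\hat{x} \in \arg\min_{x \in \mathbb{R}^N} \;
  \frac{1}{2}\lVert y - \Phi x \rVert_2^2 + \lambda \lVert x \rVert_1,
\qquad
\eta = \frac{1}{\lambda}\, \Phi^{*}\bigl(y - \Phi \hat{x}\bigr),
\qquad
\lVert \eta \rVert_\infty \le 1,
\quad
\eta_i = \operatorname{sign}(\hat{x}_i) \ \text{whenever } \hat{x}_i \neq 0 .
```

Under this reading, pixels where $\lvert \eta_i \rvert$ reaches 1 are the candidate source locations, which is what makes the certificate image a plausible tool for localising sources.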

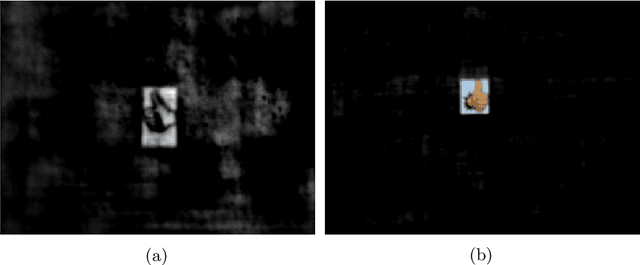

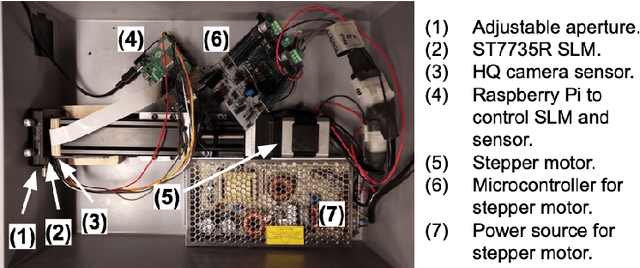

Privacy-Enhancing Optical Embeddings for Lensless Classification

Nov 23, 2022

Abstract:Lensless imaging can provide visual privacy due to the highly multiplexed characteristic of its measurements. However, this alone is a weak form of security, as various adversarial attacks can be designed to invert the one-to-many scene mapping of such cameras. In this work, we enhance the privacy provided by lensless imaging by (1) downsampling at the sensor and (2) using a programmable mask with variable patterns as our optical encoder. We build a prototype from a low-cost LCD and Raspberry Pi components, for a total cost of around 100 USD. This very low price point allows our system to be deployed and leveraged in a broad range of applications. In our experiments, we first demonstrate the viability and reconfigurability of our system by applying it to various classification tasks: MNIST, CelebA (face attributes), and CIFAR10. By jointly optimizing the mask pattern and a digital classifier in an end-to-end fashion, low-dimensional, privacy-enhancing embeddings are learned directly at the sensor. Secondly, we show how the proposed system, through variable mask patterns, can thwart adversaries that attempt to invert the system (1) via plaintext attacks or (2) in the event of camera parameter leaks. We demonstrate the defense of our system against both risks, with 55% and 26% drops in image quality metrics for attacks based on model-based convex optimization and generative neural networks respectively. We open-source a wave propagation and camera simulator needed for end-to-end optimization, the training software, and a library for interfacing with the camera.

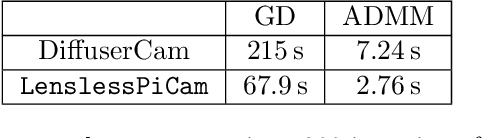

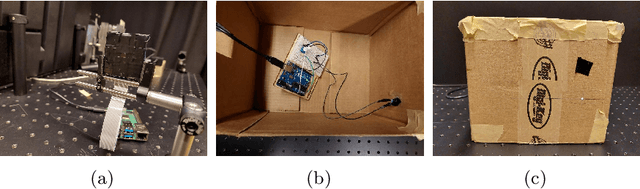

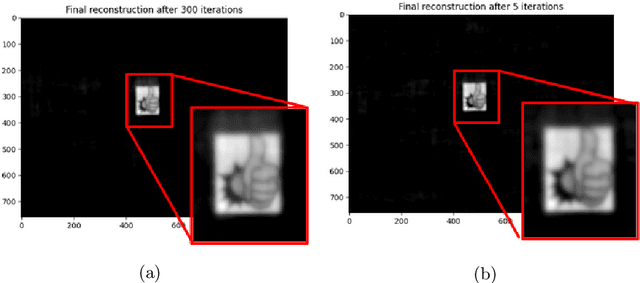

LenslessPiCam: A Hardware and Software Platform for Lensless Computational Imaging with a Raspberry Pi

Jun 03, 2022

Abstract:Lensless imaging seeks to replace/remove the lens in a conventional imaging system. The earliest cameras were in fact lensless, relying on long exposure times to form images on the other end of a small aperture in a darkened room/container (camera obscura). The introduction of a lens allowed for more light throughput and therefore shorter exposure times, while retaining sharp focus. The incorporation of digital sensors readily enabled the use of computational imaging techniques to post-process and enhance raw images (e.g. via deblurring, inpainting, denoising, sharpening). Recently, imaging scientists have started leveraging computational imaging as an integral part of lensless imaging systems, allowing them to form viewable images from the highly multiplexed raw measurements of lensless cameras (see [5] and references therein for a comprehensive treatment of lensless imaging). This represents a real paradigm shift in camera system design as there is more flexibility to cater the hardware to the application at hand (e.g. lightweight or flat designs). This increased flexibility comes however at the price of a more demanding post-processing of the raw digital recordings and a tighter integration of sensing and computation, often difficult to achieve in practice due to inefficient interactions between the various communities of scientists involved. With LenslessPiCam, we provide an easily accessible hardware and software framework to enable researchers, hobbyists, and students to implement and explore practical and computational aspects of lensless imaging. We also provide detailed guides and exercises so that LenslessPiCam can be used as an educational resource, and point to results from our graduate-level signal processing course.

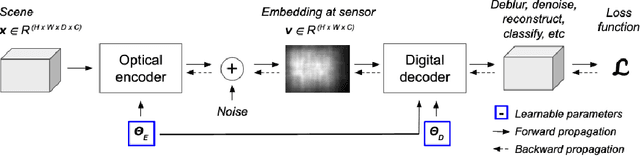

Learning rich optical embeddings for privacy-preserving lensless image classification

Jun 03, 2022

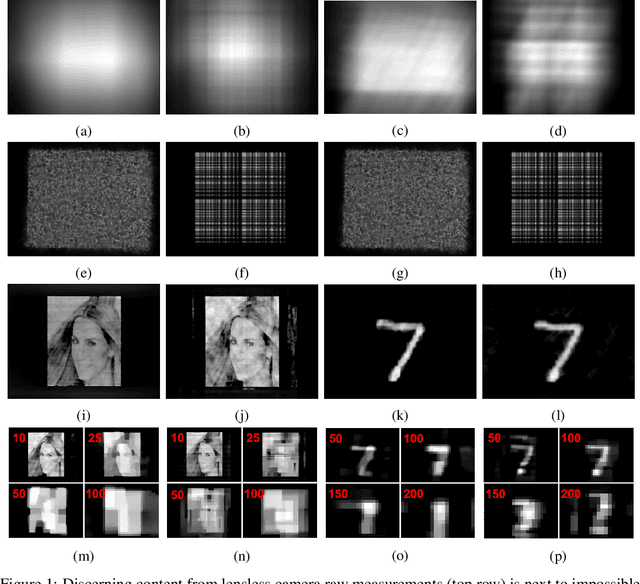

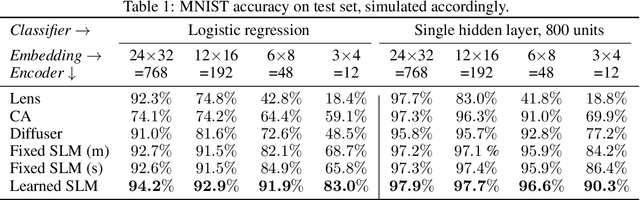

Abstract:By replacing the lens with a thin optical element, lensless imaging enables new applications and solutions beyond those supported by traditional camera design and post-processing, e.g. compact and lightweight form factors and visual privacy. The latter arises from the highly multiplexed measurements of lensless cameras, which require knowledge of the imaging system to recover a recognizable image. In this work, we exploit this unique multiplexing property: casting the optics as an encoder that produces learned embeddings directly at the camera sensor. We do so in the context of image classification, where we jointly optimize the encoder's parameters and those of an image classifier in an end-to-end fashion. Our experiments show that jointly learning the lensless optical encoder and the digital processing allows for lower resolution embeddings at the sensor, and hence better privacy as it is much harder to recover meaningful images from these measurements. Additional experiments show that such an optimization allows for lensless measurements that are more robust to typical real-world image transformations. While this work focuses on classification, the proposed programmable lensless camera and end-to-end optimization can be applied to other computational imaging tasks.

Une version polyatomique de l'algorithme Frank-Wolfe pour résoudre le problème LASSO en grandes dimensions

Apr 28, 2022

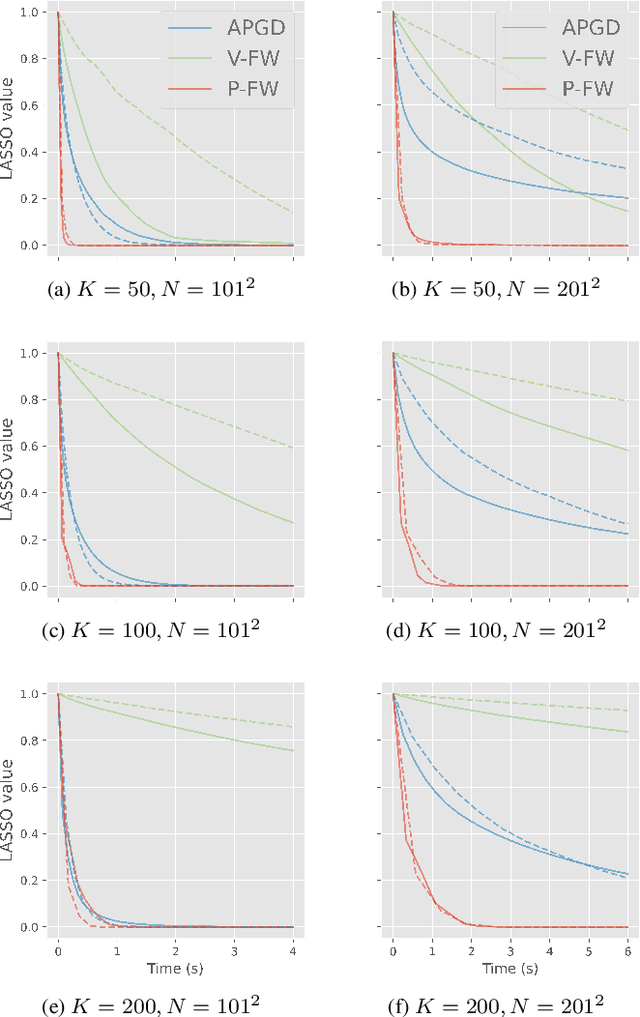

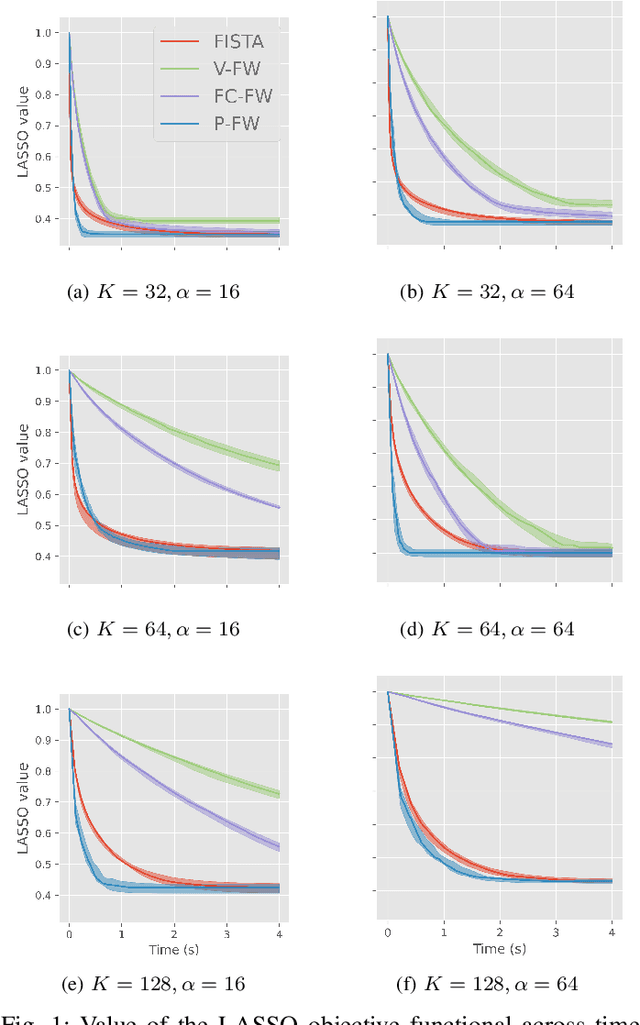

Abstract:We consider the problem of recovering sparse images by means of the LASSO penalised optimisation problem. In many practical applications, such as radio astronomy, the size of the objects to recover limits or even prevents the use of the proximal solvers commonly employed for that purpose. In this article we explain the mechanisms of the Polyatomic Frank-Wolfe algorithm, specifically designed to solve the LASSO problem in such challenging contexts. On simulated problems inspired by radio interferometry, we demonstrate the superiority of this algorithm over proximal methods for high-dimensional images with Fourier measurements.

A Fast and Scalable Polyatomic Frank-Wolfe Algorithm for the LASSO

Dec 06, 2021

Abstract:We propose a fast and scalable Polyatomic Frank-Wolfe (P-FW) algorithm for solving high-dimensional LASSO regression problems. It improves upon traditional Frank-Wolfe methods by considering generalized greedy steps with polyatomic (i.e. linear combinations of multiple atoms) update directions, hence allowing for a more efficient exploration of the search space. To preserve sparsity of the intermediate iterates, we moreover re-optimize the LASSO problem over the set of selected atoms at each iteration. For efficiency reasons, the accuracy of this re-optimization step is relatively low for early iterations and gradually increases with the iteration count. We provide convergence guarantees for our algorithm and validate it in simulated compressed sensing setups. Our experiments reveal that P-FW outperforms state-of-the-art methods in terms of runtime, both for FW methods and optimal first-order proximal gradient methods such as the Fast Iterative Soft-Thresholding Algorithm (FISTA).
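The two ingredients described in this abstract (selecting several atoms per iteration, then re-optimizing the LASSO over the selected atoms with growing accuracy) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The function names, the threshold `delta`, the inner-iteration schedule, and the use of plain ISTA for the re-optimization step are all assumptions chosen for readability.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def lasso_objective(A, y, x, lam):
    """0.5 * ||y - A x||^2 + lam * ||x||_1."""
    r = A @ x - y
    return 0.5 * (r @ r) + lam * np.sum(np.abs(x))


def polyatomic_fw_sketch(A, y, lam, n_iter=20, delta=0.8):
    """Simplified polyatomic greedy scheme (illustrative assumptions only)."""
    n = A.shape[1]
    x = np.zeros(n)
    active = np.zeros(n, dtype=bool)
    for k in range(n_iter):
        # Correlation of every atom with the current residual.
        corr = A.T @ (y - A @ x)
        peak = np.max(np.abs(corr))
        # LASSO optimality: ||A^T (y - A x)||_inf <= lam (small tolerance).
        if peak <= lam * (1.0 + 1e-6):
            break
        # Polyatomic step: add every atom close enough to the peak,
        # instead of only the single best one.
        active |= np.abs(corr) >= delta * peak
        # Re-optimize over the active atoms with ISTA; the number of
        # inner iterations grows with k (assumed schedule).
        A_s = A[:, active]
        step = 1.0 / np.linalg.norm(A_s, 2) ** 2
        x_s = x[active]
        for _ in range(5 + 5 * k):
            grad = A_s.T @ (A_s @ x_s - y)
            x_s = soft_threshold(x_s - step * grad, step * lam)
        x = np.zeros(n)
        x[active] = x_s
    return x


# Small compressed-sensing style demo with synthetic data.
rng = np.random.default_rng(0)
A = rng.standard_normal((40, 100))
x_true = np.zeros(100)
x_true[[5, 37, 80]] = [2.0, -1.5, 1.0]
y = A @ x_true
lam = 0.1 * np.max(np.abs(A.T @ y))
x_hat = polyatomic_fw_sketch(A, y, lam)
print("objective:", lasso_objective(A, y, x_hat, lam))
print("non-zeros:", np.count_nonzero(x_hat))
```

Because the iterate is only ever non-zero on the active set, the matrix products in the inner loop involve a small sub-matrix. That is the memory and runtime benefit the abstract attributes to preserving sparsity.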

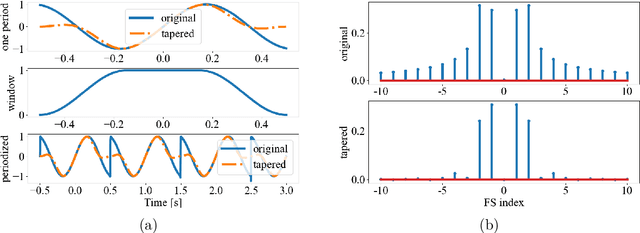

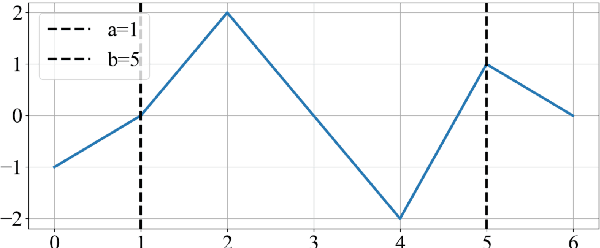

pyFFS: A Python Library for Fast Fourier Series Computation

Oct 01, 2021

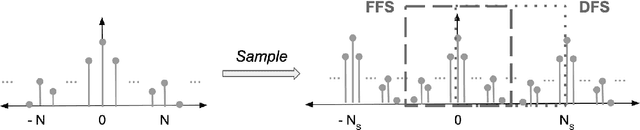

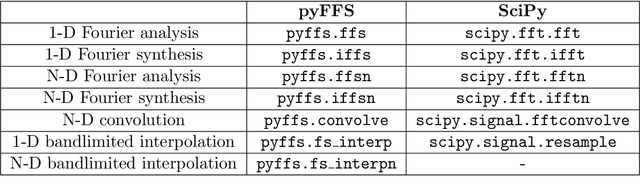

Abstract:Fourier transforms are a necessary component of many computational tasks, and can be computed efficiently through the fast Fourier transform (FFT) algorithm. However, many applications involve an underlying continuous signal, and a more natural choice would be to work with e.g. the Fourier series (FS) coefficients in order to avoid the additional overhead of translating between the analog and discrete domains. Unfortunately, there are very few publications and tools for the manipulation of FS coefficients from discrete samples. This paper introduces a Python library called pyFFS for efficient FS coefficient computation, convolution, and interpolation. While the libraries SciPy and NumPy provide efficient functionality for discrete Fourier transform coefficients via the FFT algorithm, pyFFS addresses the computation of FS coefficients through what we call the fast Fourier series (FFS). Moreover, pyFFS includes an FS interpolation method based on the chirp Z-transform that can make it more than an order of magnitude faster than the SciPy equivalent when one wishes to perform interpolation. GPU support through the CuPy library allows for further acceleration, e.g. an order of magnitude faster for computing the 2-D FS coefficients of 1000 x 1000 samples and nearly two orders of magnitude faster for 2-D interpolation. As an application, we discuss the use of pyFFS in Fourier optics. pyFFS is available as an open source package at https://github.com/imagingofthings/pyFFS, with documentation at https://pyffs.readthedocs.io.
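To make the idea of computing FS coefficients from discrete samples concrete, here is a minimal sketch that uses only NumPy and deliberately does not call pyFFS. It assumes a T-periodic, bandlimited signal sampled at `n_fs` uniformly spaced points starting at t = 0, with `n_fs` odd. The helper name `fs_coefficients` is hypothetical, and the library itself handles more general sampling grids.

```python
import numpy as np


def fs_coefficients(samples):
    """FS coefficients X_k, k = -N..N, from N_FS = 2N + 1 uniform samples.

    Assumes the samples are taken at t_n = n * T / N_FS over one period of
    a signal with no harmonics above N (hypothetical helper, not pyFFS).
    """
    n_fs = samples.shape[0]
    if n_fs % 2 == 0:
        raise ValueError("this sketch expects an odd number of samples")
    # The DFT divided by N_FS gives X_k in the order 0..N, -N..-1;
    # fftshift reorders it to -N..N.
    return np.fft.fftshift(np.fft.fft(samples)) / n_fs


T = 2.0          # period
n_fs = 5         # harmonics k = -2..2
t = np.arange(n_fs) * T / n_fs
x = 1.0 + 2.0 * np.cos(2 * np.pi * t / T) + np.sin(4 * np.pi * t / T)

coeffs = fs_coefficients(x)
for k, c in zip(range(-2, 3), coeffs):
    print(k, np.round(c, 6))
```

For this test signal the coefficients are known in closed form: X_0 = 1, X_{±1} = 1, X_2 = -0.5j and X_{-2} = 0.5j, which is a convenient check on the ordering convention.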
