Eric Bezzam

Open ASR Leaderboard: Towards Reproducible and Transparent Multilingual and Long-Form Speech Recognition Evaluation

Oct 08, 2025

Abstract:Despite rapid progress, ASR evaluation remains saturated with short-form English, and efficiency is rarely reported. We present the Open ASR Leaderboard, a fully reproducible benchmark and interactive leaderboard comparing 60+ open-source and proprietary systems across 11 datasets, including dedicated multilingual and long-form tracks. We standardize text normalization and report both word error rate (WER) and inverse real-time factor (RTFx), enabling fair accuracy-efficiency comparisons. For English transcription, Conformer encoders paired with LLM decoders achieve the best average WER but are slower, while CTC and TDT decoders deliver much better RTFx, making them attractive for long-form and offline use. Whisper-derived encoders fine-tuned for English improve accuracy but often trade off multilingual coverage. All code and dataset loaders are open-sourced to support transparent, extensible evaluation.
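The two metrics named in this abstract can be illustrated with a minimal sketch. It assumes the usual definitions: WER as the word-level edit distance divided by the number of reference words, and RTFx as audio duration divided by processing time. The function names are hypothetical, and the leaderboard's own text normalization and aggregation are not reproduced here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the processed reference
    # prefix and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing in hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def inverse_real_time_factor(audio_seconds: float, processing_seconds: float) -> float:
    """Seconds of audio transcribed per second of compute (higher is faster)."""
    if processing_seconds <= 0:
        raise ValueError("processing_seconds must be positive")
    return audio_seconds / processing_seconds


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words.
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
# One hour of audio transcribed in 36 seconds.
rtfx = inverse_real_time_factor(3600.0, 36.0)
```

Reporting the two numbers side by side is what allows the accuracy-efficiency comparison the abstract describes: a lower WER is better, while a higher RTFx is better.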

Towards Robust and Generalizable Lensless Imaging with Modular Learned Reconstruction

Feb 03, 2025

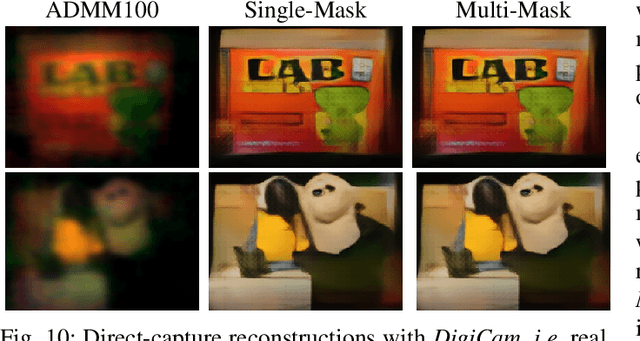

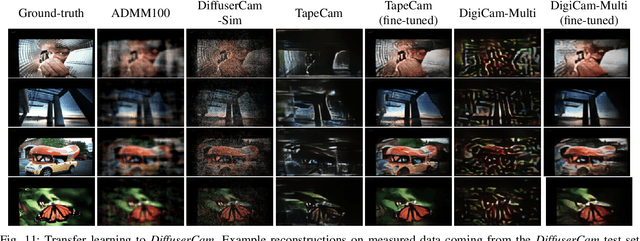

Abstract:Lensless cameras disregard the conventional design that imaging should mimic the human eye. This is done by replacing the lens with a thin mask, and moving image formation to the digital post-processing. State-of-the-art lensless imaging techniques use learned approaches that combine physical modeling and neural networks. However, these approaches make simplifying modeling assumptions for ease of calibration and computation. Moreover, the generalizability of learned approaches to lensless measurements of new masks has not been studied. To this end, we utilize a modular learned reconstruction in which a key component is a pre-processor prior to image recovery. We theoretically demonstrate the pre-processor's necessity for standard image recovery techniques (Wiener filtering and iterative algorithms), and through extensive experiments show its effectiveness for multiple lensless imaging approaches and across datasets of different mask types (amplitude and phase). We also perform the first generalization benchmark across mask types to evaluate how well reconstructions trained with one system generalize to others. Our modular reconstruction enables us to use pre-trained components and transfer learning on new systems to cut down weeks of tedious measurements and training. As part of our work, we open-source four datasets, and software for measuring datasets and for training our modular reconstruction.

Let There Be Light: Robust Lensless Imaging Under External Illumination With Deep Learning

Sep 25, 2024

Abstract:Lensless cameras relax the design constraints of traditional cameras by shifting image formation from analog optics to digital post-processing. While new camera designs and applications can be enabled, lensless imaging is very sensitive to unwanted interference (other sources, noise, etc.). In this work, we address a prevalent noise source that has not been studied for lensless imaging: external illumination e.g. from ambient and direct lighting. Being robust to a variety of lighting conditions would increase the practicality and adoption of lensless imaging. To this end, we propose multiple recovery approaches that account for external illumination by incorporating its estimate into the image recovery process. At the core is a physics-based reconstruction that combines learnable image recovery and denoisers, all of whose parameters are trained using experimentally gathered data. Compared to standard reconstruction methods, our approach yields significant qualitative and quantitative improvements. We open-source our implementations and a 25K dataset of measurements under multiple lighting conditions.

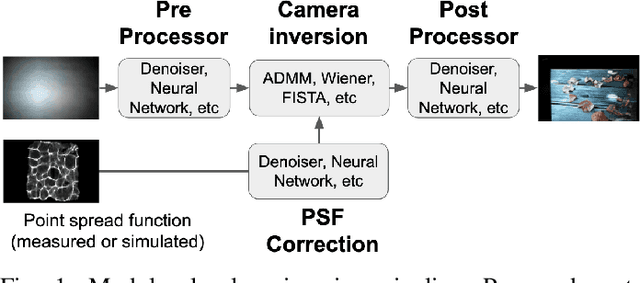

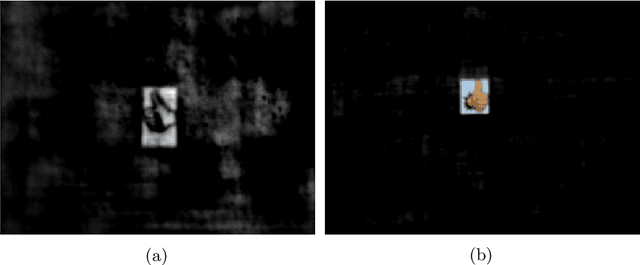

A Modular and Robust Physics-Based Approach for Lensless Image Reconstruction

Mar 01, 2024

Abstract:In this paper, we present a modular approach for reconstructing lensless measurements. It consists of three components: a newly-proposed pre-processor, a physics-based camera inverter to undo the multiplexing of lensless imaging, and a post-processor. The pre- and post-processors address noise and artifacts unique to lensless imaging before and after camera inversion respectively. By training the three components end-to-end, we obtain a 1.9 dB increase in PSNR and a 14% relative improvement in a perceptual image metric (LPIPS) with respect to previously proposed physics-based methods. We also demonstrate how the proposed pre-processor provides more robustness to input noise, and how an auxiliary loss can improve interpretability.
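To put the reported 1.9 dB PSNR gain in context, here is a minimal sketch based on the standard definition PSNR = 10 log10(MAX^2 / MSE). The helper names and the toy pixel values are hypothetical and are not taken from the paper.

```python
import math


def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB for two equal-length pixel sequences."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


def mse_ratio_for_gain(gain_db):
    """Factor by which MSE shrinks for a given PSNR gain at a fixed peak value."""
    return 10 ** (gain_db / 10)


# Toy example: a constant error of 0.1 on a [0, 1] image gives an MSE of 0.01.
toy_psnr = psnr([0.0, 0.0, 0.0, 0.0], [0.1, 0.1, 0.1, 0.1])
# MSE reduction factor implied by a 1.9 dB gain.
ratio = mse_ratio_for_gain(1.9)
```

Because PSNR is logarithmic in the mean squared error, a 1.9 dB gain corresponds to an MSE roughly 1.55 times smaller, i.e. about a 35% reduction, provided the peak value is unchanged.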

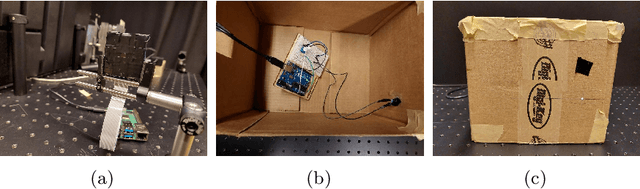

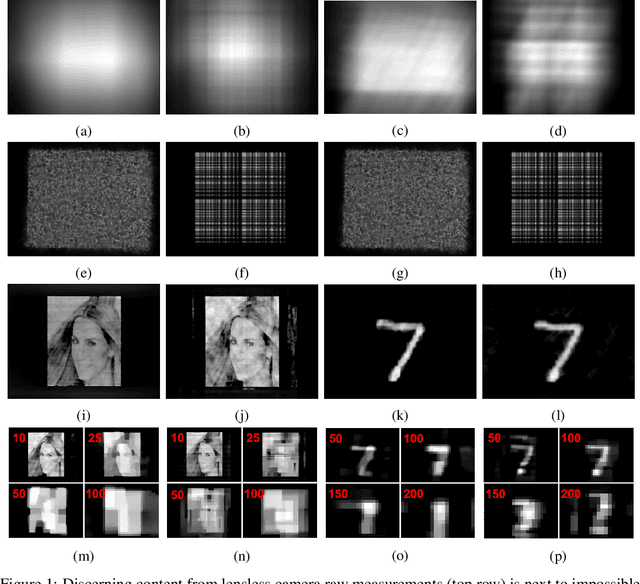

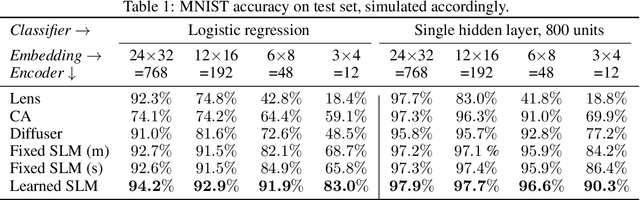

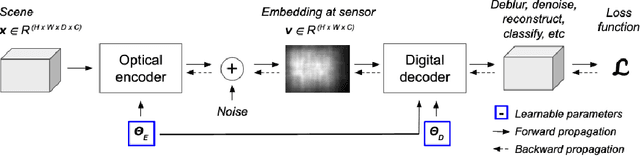

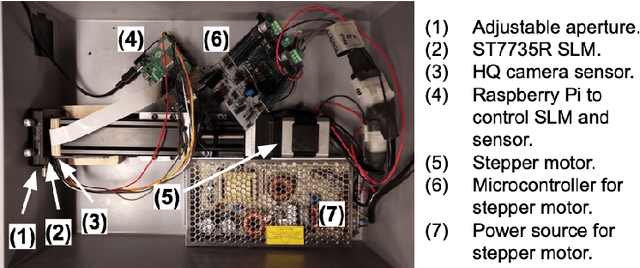

Privacy-Enhancing Optical Embeddings for Lensless Classification

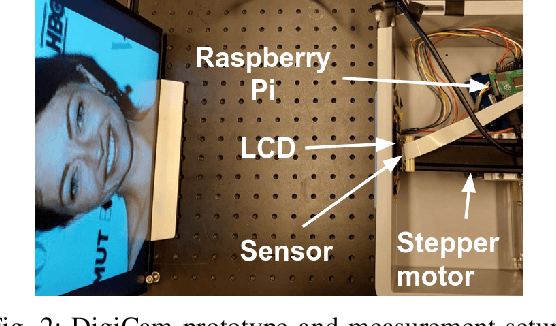

Nov 23, 2022

Abstract:Lensless imaging can provide visual privacy due to the highly multiplexed characteristic of its measurements. However, this alone is a weak form of security, as various adversarial attacks can be designed to invert the one-to-many scene mapping of such cameras. In this work, we enhance the privacy provided by lensless imaging by (1) downsampling at the sensor and (2) using a programmable mask with variable patterns as our optical encoder. We build a prototype from a low-cost LCD and Raspberry Pi components, for a total cost of around 100 USD. This very low price point allows our system to be deployed and leveraged in a broad range of applications. In our experiments, we first demonstrate the viability and reconfigurability of our system by applying it to various classification tasks: MNIST, CelebA (face attributes), and CIFAR10. By jointly optimizing the mask pattern and a digital classifier in an end-to-end fashion, low-dimensional, privacy-enhancing embeddings are learned directly at the sensor. Secondly, we show how the proposed system, through variable mask patterns, can thwart adversaries that attempt to invert the system (1) via plaintext attacks or (2) in the event of camera parameters leaks. We demonstrate the defense of our system to both risks, with 55% and 26% drops in image quality metrics for attacks based on model-based convex optimization and generative neural networks respectively. We open-source a wave propagation and camera simulator needed for end-to-end optimization, the training software, and a library for interfacing with the camera.

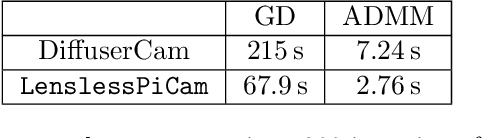

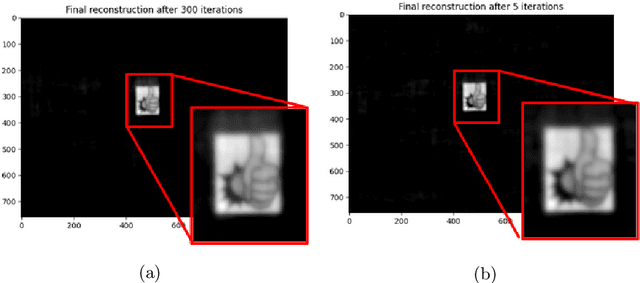

LenslessPiCam: A Hardware and Software Platform for Lensless Computational Imaging with a Raspberry Pi

Jun 03, 2022

Abstract:Lensless imaging seeks to replace/remove the lens in a conventional imaging system. The earliest cameras were in fact lensless, relying on long exposure times to form images on the other end of a small aperture in a darkened room/container (camera obscura). The introduction of a lens allowed for more light throughput and therefore shorter exposure times, while retaining sharp focus. The incorporation of digital sensors readily enabled the use of computational imaging techniques to post-process and enhance raw images (e.g. via deblurring, inpainting, denoising, sharpening). Recently, imaging scientists have started leveraging computational imaging as an integral part of lensless imaging systems, allowing them to form viewable images from the highly multiplexed raw measurements of lensless cameras (see [5] and references therein for a comprehensive treatment of lensless imaging). This represents a real paradigm shift in camera system design as there is more flexibility to cater the hardware to the application at hand (e.g. lightweight or flat designs). This increased flexibility comes however at the price of a more demanding post-processing of the raw digital recordings and a tighter integration of sensing and computation, often difficult to achieve in practice due to inefficient interactions between the various communities of scientists involved. With LenslessPiCam, we provide an easily accessible hardware and software framework to enable researchers, hobbyists, and students to implement and explore practical and computational aspects of lensless imaging. We also provide detailed guides and exercises so that LenslessPiCam can be used as an educational resource, and point to results from our graduate-level signal processing course.

Learning rich optical embeddings for privacy-preserving lensless image classification

Jun 03, 2022

Abstract:By replacing the lens with a thin optical element, lensless imaging enables new applications and solutions beyond those supported by traditional camera design and post-processing, e.g. compact and lightweight form factors and visual privacy. The latter arises from the highly multiplexed measurements of lensless cameras, which require knowledge of the imaging system to recover a recognizable image. In this work, we exploit this unique multiplexing property: casting the optics as an encoder that produces learned embeddings directly at the camera sensor. We do so in the context of image classification, where we jointly optimize the encoder's parameters and those of an image classifier in an end-to-end fashion. Our experiments show that jointly learning the lensless optical encoder and the digital processing allows for lower resolution embeddings at the sensor, and hence better privacy as it is much harder to recover meaningful images from these measurements. Additional experiments show that such an optimization allows for lensless measurements that are more robust to typical real-world image transformations. While this work focuses on classification, the proposed programmable lensless camera and end-to-end optimization can be applied to other computational imaging tasks.

pyFFS: A Python Library for Fast Fourier Series Computation

Oct 01, 2021

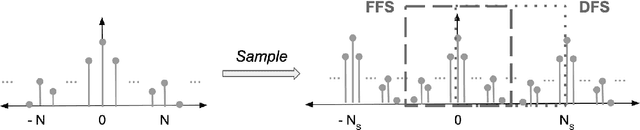

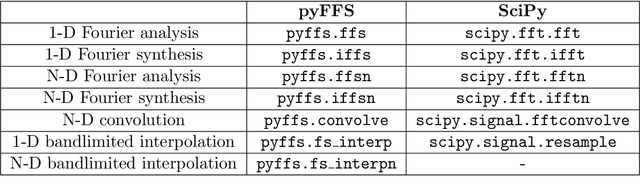

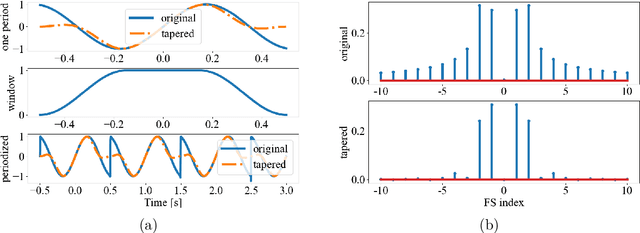

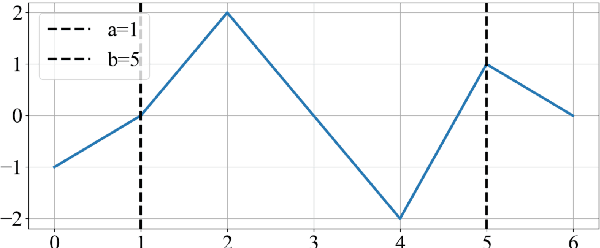

Abstract:Fourier transforms are an often necessary component in many computational tasks, and can be computed efficiently through the fast Fourier transform (FFT) algorithm. However, many applications involve an underlying continuous signal, and a more natural choice would be to work with e.g. the Fourier series (FS) coefficients in order to avoid the additional overhead of translating between the analog and discrete domains. Unfortunately, there exists very little literature and tools for the manipulation of FS coefficients from discrete samples. This paper introduces a Python library called pyFFS for efficient FS coefficient computation, convolution, and interpolation. While the libraries SciPy and NumPy provide efficient functionality for discrete Fourier transform coefficients via the FFT algorithm, pyFFS addresses the computation of FS coefficients through what we call the fast Fourier series (FFS). Moreover, pyFFS includes an FS interpolation method based on the chirp Z-transform that can make it more than an order of magnitude faster than the SciPy equivalent when one wishes to perform interpolation. GPU support through the CuPy library allows for further acceleration, e.g. an order of magnitude faster for computing the 2-D FS coefficients of 1000 x 1000 samples and nearly two orders of magnitude faster for 2-D interpolation. As an application, we discuss the use of pyFFS in Fourier optics. pyFFS is available as an open source package at https://github.com/imagingofthings/pyFFS, with documentation at https://pyffs.readthedocs.io.
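The idea of recovering Fourier series coefficients from discrete samples can be sketched with NumPy alone. This is not pyFFS's API: the function name `fs_coefficients` is hypothetical, and the sketch assumes a periodic signal bandlimited to harmonics |k| <= K, sampled at N >= 2K + 1 uniform points starting at t = 0 over one period. Under those assumptions, the FS coefficients equal the DFT values scaled by 1/N.

```python
import numpy as np


def fs_coefficients(samples, K):
    """Return X_k for k = -K..K from N >= 2K + 1 uniform samples of one period."""
    samples = np.asarray(samples)
    N = samples.size
    if N < 2 * K + 1:
        raise ValueError("need at least 2K + 1 samples to avoid aliasing")
    dft = np.fft.fft(samples) / N  # X_k sits at DFT bin k mod N
    k = np.arange(-K, K + 1)
    return dft[k % N]


# Test signal with known coefficients:
# X_0 = 1, X_{+1} = X_{-1} = 1, X_{+2} = -0.5j, X_{-2} = +0.5j.
T = 1.0  # period
N = 9    # number of samples, at least 2K + 1 = 5
t = np.arange(N) * T / N
x = 1.0 + 2.0 * np.cos(2 * np.pi * t / T) + np.sin(2 * np.pi * 2 * t / T)

coeffs = fs_coefficients(x, K=2)  # ordered k = -2, -1, 0, 1, 2
```

The sampling grid and normalization here are chosen for simplicity; a dedicated library may use a different sample layout, so the pyFFS documentation should be consulted for its actual conventions.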
