Matthias Beckmann

Generalizations of the Normalized Radon Cumulative Distribution Transform for Limited Data Recognition

Dec 08, 2025

Abstract: The Radon cumulative distribution transform (R-CDT) exploits one-dimensional Wasserstein transport and the Radon transform to represent prominent features in images. It is closely related to the sliced Wasserstein distance and facilitates classification tasks, especially in the small data regime, like the recognition of watermarks in filigranology. Here, a typical issue is that the given data may be subject to affine transformations caused by the measuring process. To make the R-CDT invariant under arbitrary affine transformations, a two-step normalization of the R-CDT has been proposed in our earlier work. The aim of this paper is twofold. First, we propose a family of generalized normalizations to enhance flexibility for applications. Second, we study multi-dimensional and non-Euclidean settings by making use of generalized Radon transforms. We prove that our novel feature representations are invariant under certain transformations and allow for linear separation in feature space. Our theoretical results are supported by numerical experiments based on 2d images, 3d shapes and 3d rotation matrices, showing near-perfect classification accuracies and clustering results.

Normalized Radon Cumulative Distribution Transforms for Invariance and Robustness in Optimal Transport Based Image Classification

Jun 10, 2025

Abstract: The Radon cumulative distribution transform (R-CDT) is an easy-to-compute feature extractor that facilitates image classification tasks, especially in the small data regime. It is closely related to the sliced Wasserstein distance and provably guarantees the linear separability of image classes that emerge from translations or scalings. In many real-world applications, like the recognition of watermarks in filigranology, however, the data is subject to general affine transformations originating from the measurement process. To overcome this issue, we recently introduced the so-called max-normalized R-CDT, which only requires elementary operations and guarantees the separability under arbitrary affine transformations. The aim of this paper is to continue our study of the max-normalized R-CDT, especially with respect to its robustness against non-affine image deformations. Our sensitivity analysis shows that its separability properties are stable provided the Wasserstein-infinity distance between the samples can be controlled. Since the Wasserstein-infinity distance only allows small local image deformations, we moreover introduce a mean-normalized version of the R-CDT. In this case, robustness relates to the Wasserstein-2 distance and also covers image deformations caused, for instance, by impulsive noise. Our theoretical results are supported by numerical experiments showing the effectiveness of our novel feature extractors as well as their robustness against local non-affine deformations and impulsive noise.
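The role of normalization can be illustrated in one dimension. The following is a minimal sketch, not the max-normalized or mean-normalized R-CDT defined in the paper: it assumes that the one-dimensional transform of an empirical measure can be represented by its quantile function (the sorted samples), and it uses the fact that a translation and a scaling by a positive factor act affinely on that quantile function. The helper names `empirical_cdt` and `standardize` are hypothetical and chosen for illustration only.

```python
import random
import statistics


def empirical_cdt(samples):
    """Quantile function of the empirical measure on an equispaced grid:
    for equally weighted samples this is simply the sorted sample list."""
    return sorted(samples)


def standardize(q):
    """Remove the mean and divide by the standard deviation (illustrative
    normalization, assumed here; not the paper's definition)."""
    mean = statistics.fmean(q)
    std = statistics.pstdev(q)
    return [(v - mean) / std for v in q]


rng = random.Random(0)
# Samples from a two-bump distribution (hypothetical test data).
base = [rng.gauss(0.0, 1.0) + (3.0 if rng.random() < 0.3 else 0.0)
        for _ in range(500)]
# The same distribution after scaling by a > 0 and translation.
moved = [2.5 * x - 4.0 for x in base]

q_base = empirical_cdt(base)
q_moved = empirical_cdt(moved)

# Without normalization the two representations differ substantially.
gap_raw = max(abs(a - b) for a, b in zip(q_base, q_moved))
# After standardization they agree up to floating-point error.
gap_norm = max(abs(a - b)
               for a, b in zip(standardize(q_base), standardize(q_moved)))
print(gap_raw, gap_norm)
```

In this toy setting the raw quantile functions differ, whereas the standardized ones coincide up to rounding. General affine transformations of images additionally mix the projection angles of the Radon transform, which this one-dimensional sketch does not capture.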

Orthogonal Matching Pursuit based Reconstruction for Modulo Hysteresis Operators

Apr 08, 2025

Abstract: Unlimited sampling provides an acquisition scheme for high dynamic range signals by folding the signal into the dynamic range of the analog-to-digital converter (ADC) using a modulo non-linearity prior to sampling to prevent saturation. Recently, a generalized scheme called modulo hysteresis was introduced to account for hardware non-idealities. The encoding operator, however, does not guarantee that the output signal is within the dynamic range of the ADC. To resolve this, we propose a modified modulo hysteresis operator and show identifiability of bandlimited signals from modulo hysteresis samples. We propose a recovery algorithm based on orthogonal matching pursuit and validate our theoretical results through numerical experiments.

Style-Extracting Diffusion Models for Semi-Supervised Histopathology Segmentation

Mar 21, 2024

Abstract: Deep learning-based image generation has seen significant advancements with diffusion models, notably improving the quality of generated images. Despite these developments, generating images with unseen characteristics beneficial for downstream tasks has received limited attention. To bridge this gap, we propose Style-Extracting Diffusion Models, featuring two conditioning mechanisms. Specifically, we utilize 1) a style conditioning mechanism that allows injecting style information from previously unseen images during image generation and 2) a content conditioning mechanism that can be targeted to a downstream task, e.g., layout for segmentation. We introduce a trainable style encoder to extract style information from images, and an aggregation block that merges style information from multiple style inputs. This architecture enables the generation of images with unseen styles in a zero-shot manner, by leveraging styles from unseen images, resulting in more diverse generations. In this work, we use the image layout as target condition and first show the capability of our method on a natural image dataset as a proof-of-concept. We further demonstrate its versatility in histopathology, where we combine prior knowledge about tissue composition and unannotated data to create diverse synthetic images with known layouts. This allows us to generate additional synthetic data to train a segmentation network in a semi-supervised fashion. We verify the added value of the generated images by showing improved segmentation results and lower performance variability between patients when synthetic images are included during segmentation training. Our code will be made publicly available at [LINK].

Analysing Diffusion Segmentation for Medical Images

Mar 21, 2024

Abstract: Denoising diffusion probabilistic models have become increasingly popular due to their ability to offer probabilistic modeling and generate diverse outputs. This versatility inspired their adaptation for image segmentation, where multiple predictions of the model can produce segmentation results that not only achieve high quality but also capture the uncertainty inherent in the model. Here, powerful architectures were proposed for improving diffusion segmentation performance. However, there is a notable lack of analysis and discussion of the differences between diffusion segmentation and image generation, and thorough evaluations are missing that distinguish the improvements these architectures provide for segmentation in general from their benefit for diffusion segmentation specifically. In this work, we critically analyse and discuss how diffusion segmentation for medical images differs from diffusion image generation, with a particular focus on the training behavior. Furthermore, we assess how proposed diffusion segmentation architectures perform when trained directly for segmentation. Lastly, we explore how different medical segmentation tasks influence the diffusion segmentation behavior and how the diffusion process could be adapted accordingly. With these analyses, we aim to provide in-depth insights into the behavior of diffusion segmentation that allow for a better design and evaluation of diffusion segmentation methods in the future.

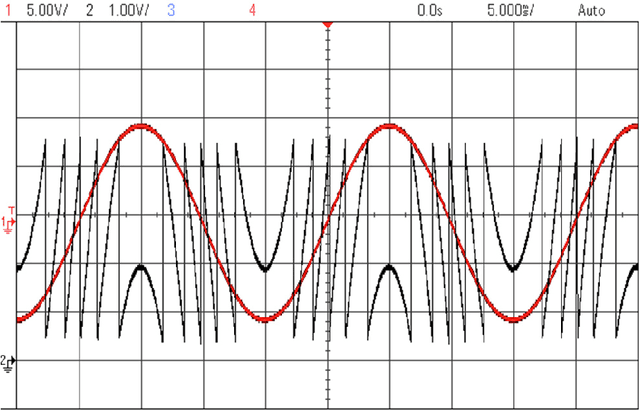

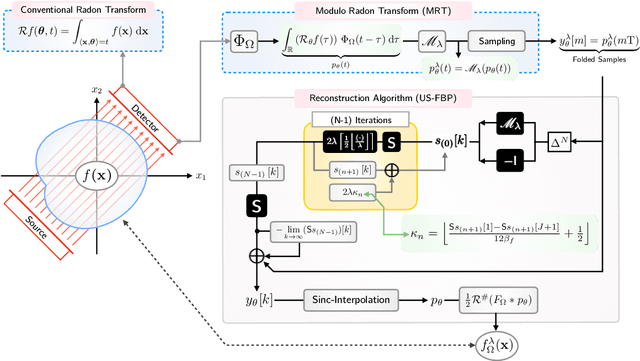

Fourier-Domain Inversion for the Modulo Radon Transform

Jul 24, 2023

Abstract: Inspired by the multiple-exposure fusion approach in computational photography, several practitioners have recently explored the idea of high dynamic range (HDR) X-ray imaging and tomography. While establishing promising results, these approaches inherit the limitations of the multiple-exposure fusion strategy. To overcome these disadvantages, the modulo Radon transform (MRT) has been proposed. The MRT is based on a co-design of hardware and algorithms. In the hardware step, Radon transform projections are folded using modulo non-linearities. Thereafter, recovery is performed by algorithmically inverting the folding, thus enabling a single-shot, HDR approach to tomography. The first steps in this topic established a rigorous mathematical treatment of the problem of reconstruction from folded projections. This paper takes a step forward by proposing a new Fourier-domain recovery algorithm that is backed by mathematical guarantees. The advantages include recovery at lower sampling rates while being agnostic to the modulo threshold, lower computational complexity and empirical robustness to system noise. Beyond numerical simulations, we use prototype modulo ADC based hardware experiments to validate our claims. In particular, we report image recovery based on hardware measurements up to 10 times larger than the sensor's dynamic range while benefiting from lower quantization noise ($\sim$12 dB).

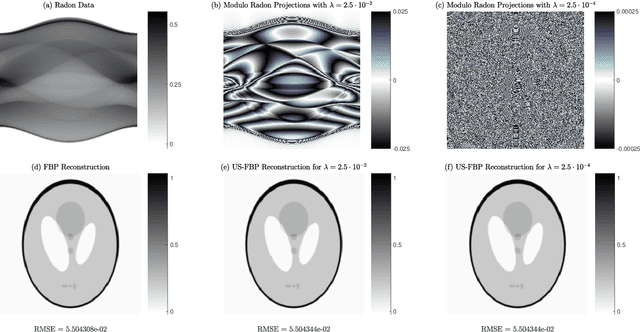

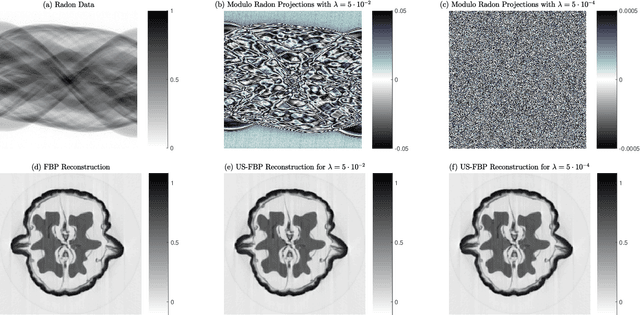

The Modulo Radon Transform: Theory, Algorithms and Applications

May 10, 2021

Abstract: Recently, experiments have been reported where researchers were able to perform high dynamic range (HDR) tomography in a heuristic fashion, by fusing multiple tomographic projections. This approach to HDR tomography has been inspired by HDR photography and inherits the same disadvantages. Taking a computational imaging approach to the HDR tomography problem, we here suggest a new model based on the Modulo Radon Transform (MRT), which we rigorously introduce and analyze. By harnessing a joint design between hardware and algorithms, we present a single-shot HDR tomography approach, which, to our knowledge, is the only approach that is backed by mathematical guarantees. On the hardware front, instead of recording the Radon Transform projections that may potentially saturate, we propose to measure modulo values of the same. This ensures that the HDR measurements are folded into a lower dynamic range. On the algorithmic front, our recovery algorithms reconstruct the HDR images from folded measurements. Beyond mathematical aspects such as injectivity and inversion of the MRT for different scenarios including band-limited and approximately compactly supported images, we also provide a first proof-of-concept demonstration. To do so, we implement MRT by experimentally folding tomographic measurements available as an open source data set using our custom designed modulo hardware. Our reconstruction clearly shows the advantages of our approach for experimental data. In this way, our MRT based solution paves a path for HDR acquisition in a number of related imaging problems.
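The folding step described above can be illustrated on a one-dimensional sequence of samples. The sketch below is not the recovery algorithm of the paper: it assumes a centered modulo with threshold `lam` (a hypothetical parameter name) and uses a simple first-difference unwrapping, which is only valid when the first sample lies inside the range and consecutive samples differ by less than `lam`.

```python
import math


def fold(x, lam):
    """Centered modulo: map x into the interval [-lam, lam)."""
    return (x + lam) % (2.0 * lam) - lam


def unfold(folded, lam):
    """Undo the folding by accumulating wrapped first differences.

    Assumption (illustrative only): |x[0]| < lam and
    |x[k+1] - x[k]| < lam for all k, so each true difference equals
    the folded difference after wrapping it back into [-lam, lam).
    """
    out = [folded[0]]
    for prev, cur in zip(folded, folded[1:]):
        out.append(out[-1] + fold(cur - prev, lam))
    return out


lam = 1.0
# A slowly varying signal whose amplitude is five times the threshold.
signal = [5.0 * math.sin(2.0 * math.pi * k / 200) for k in range(200)]

folded = [fold(v, lam) for v in signal]   # what a modulo sensor would record
recovered = unfold(folded, lam)           # difference-based unwrapping

max_error = max(abs(a - b) for a, b in zip(signal, recovered))
print(max(abs(v) for v in folded), max_error)
```

The folded samples stay within the threshold although the signal exceeds it, and the unwrapping recovers the signal up to rounding because the sampling is dense enough for the difference condition to hold.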
